This guy takes 17 minutes to get to the point, and his left audio channel is busted. His vids have been chasing me around YouTube for years, especially his famous paradox one. I doubt his original thumbnail mentioned ChatGPT, because this vid is 2.5 years old. The paradox one is almost like trolling: he keeps saying he'll explain what a set is, then moves on. He eventually does it 5 mins in. Talk about padding. I hope the algorithm leaves me alone now.

Ooh, this discussion is already devolving into an argument against 'Strong AI' and the difference between syntax and semantics...

Here's one I watched earlier:

GPT4 / CHATGPT Plugins for Speaker measurement analysis and comparison

- Thread starter Jeromeof

- Thread Starter

- #22

You do have to pay for GPT-4 at the moment. Not sure what OpenAI's ultimate revenue model is, but to get early access to stuff (and priority access to their API) you pay $20. I am currently subscribing, but when the hype dies down and it's integrated into lots of other apps I use, I will probably stop paying.

Just to clarify, GPT-4 uses the black logo, not the green one, and currently you have to pay for it. Although I don't know if the code-writing ability changed much from 3.5 to 4. It would probably explain itself more succinctly.

Stop me if you've heard this before, but I think the revenue model is to sell it below cost so that everyone uses it. Then, when it's embedded everywhere, including schools and governments, they jack up the price. $20 is already cheap, but the API tokens are so cheap you can buy 200 million prompts for $20. It'll practically be infrastructure, and then 20 years from now Congress will have debates over whether it's a monopoly that needs breaking up. Ultimately they'll do nothing and move on to the next moral panic. Just a hunch, though. I'll have to Google some historical examples on my Windows PC.

OpenAI just published some more analysis of their GPT model behaviours for anyone interested.

It's more a case of approximately mapping mechanisms than 'understanding' the model conceptually. With a very large parameter space (billions of token proximity relationships in GPT-3, trillions in GPT-4) analysis is computationally intensive. Some would say tedious. But it's interesting they quantify in terms of "human preferences between explanations".

From the link:

"Language models have become more capable and more widely deployed, but we do not understand how they work."

How can we not know how they work? (The programmers, not my somewhat inept neuron?)

That, or they can't write clearly.

I had the same reaction (among others), but it looks like they are talking about tracing the precise mechanistic steps that link what they (amusingly) call a neuron in their model to the selection of a specific token (roughly, a word) in the output text.

sam_adams

Major Contributor

Our nightmare has arrived! Ha ha!

Not until someone connects it to something it can control, since it doesn't understand the consequences of its actions when it makes a mistake. And yes, ChatGPT does make mistakes. It 'hallucinates' facts because it is not a true intelligence. It takes your input and iteratively applies probabilistic outcomes to it until it reaches a certain threshold of 'certainty', and then barfs out an answer.
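For anyone curious what 'probabilistic' can mean here, below is a toy sketch of sampling-based text generation: at each step the model scores every candidate next token, softmax turns the scores into probabilities, and one token is drawn at random. The vocabulary and the fixed scores in `toy_next_logits` are made up and stand in for a real neural network; note that nothing in this loop checks whether the output is true.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def generate(next_logits, prompt, steps, temperature=1.0, seed=0):
    """Append one randomly sampled token per step; each step sees all tokens so far."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(steps):
        vocab, logits = next_logits(tokens)
        probs = softmax(logits, temperature)
        tokens.append(rng.choices(vocab, weights=probs, k=1)[0])
    return tokens

VOCAB = ["the", "speaker", "measures", "well", "."]

def toy_next_logits(tokens):
    """Hypothetical stand-in for the neural network: fixed scores that ignore the context."""
    return VOCAB, [2.0, 1.5, 1.0, 0.5, 0.1]

print(" ".join(generate(toy_next_logits, ["the"], steps=5, seed=42)))
```

A real model recomputes the scores from the whole context at every step, but the final pick is still a weighted random draw (or, with greedy decoding, the single highest-scoring token).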

Applying what is essentially a 'novelty' to scientific research, mathematics or code writing, when you have little or no knowledge of the subject matter, will quickly lead to erroneous conclusions. ChatGPT has already been tasked with programming, and roughly 50% of the code it wrote was insecure. Trusting this 'novelty' to generate something when you have no idea how it is supposed to work would be a bad idea: if that code were used for something that needed to be threat-resistant or failsafe, it could turn out to be a bad day indeed.

badspeakerdesigner

Active Member

It would be cool if this stuck to the topic; I'm not really interested in everyone's broad opinion or philosophy on AI.

DualTriode

Addicted to Fun and Learning

Some day your car will be smart enough to drive you home, even if your drunk self nods off on the way there.

Is that AI or what?

Because it is not procedural but data-driven code. The actual code is rather small and has nothing interesting in it; it is the dataset that is massive (think billions of parameters). The data is filled in during training, as with other neural networks (with optimizations to reduce size). Imagine I give you a 1-million-by-1-million matrix full of numbers. How exactly do you figure out what those numbers mean? You would know how you filled them in (statistically), but you don't know what they do when the lookup occurs in a different manner. You would have to probe the model to learn what the dataset really represents.
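To make the 'probe the model' point concrete, here is a toy sketch in pure Python, vastly smaller than anything real: a tiny neural network whose weights are filled in by gradient descent from data generated by a rule we pick, followed by probes that nudge each input and watch the output. The network size, learning rate and the rule `0.8 * first input` are arbitrary illustrative choices. The code that runs the network is only a few lines; what it has learned lives in the numbers, and it is the probes, not the raw weights, that tell you what it does.

```python
import math
import random

def predict(params, x0, x1):
    """Forward pass of a 2-input network with one tanh hidden layer."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(w[0] * x0 + w[1] * x1 + b) for w, b in zip(w1, b1)]
    return sum(wo * h for wo, h in zip(w2, hidden)) + b2

def train_tiny_net(samples, n_hidden=4, epochs=2000, lr=0.05, seed=1):
    """Fill in the weights by plain stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    for _ in range(epochs):
        for (x0, x1), target in samples:
            hidden = [math.tanh(w[0] * x0 + w[1] * x1 + b) for w, b in zip(w1, b1)]
            err = sum(wo * h for wo, h in zip(w2, hidden)) + b2 - target
            for j in range(n_hidden):
                grad_pre = err * w2[j] * (1 - hidden[j] ** 2)  # chain rule through tanh
                w2[j] -= lr * err * hidden[j]
                w1[j][0] -= lr * grad_pre * x0
                w1[j][1] -= lr * grad_pre * x1
                b1[j] -= lr * grad_pre
            b2 -= lr * err
    return w1, b1, w2, b2

def probe_sensitivity(params, which, delta=1.0):
    """Move one input from -delta to +delta with the other held at 0; return the change in output."""
    low, high = [0.0, 0.0], [0.0, 0.0]
    low[which], high[which] = -delta, delta
    return predict(params, *high) - predict(params, *low)

# Rule used to generate the training data: output = 0.8 * first input; the second input is irrelevant.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
samples = [((a, b), 0.8 * a) for a in grid for b in grid]
params = train_tiny_net(samples)

print("raw hidden-layer weights:", params[0])  # just numbers; they don't explain themselves
print("effect of input 0:", probe_sensitivity(params, 0))
print("effect of input 1:", probe_sensitivity(params, 1))
```

With this setup the first probe should come out close to the rule's swing of 1.6 and the second close to zero, even though neither fact can be read directly off `params`. Interpretability work on real models is this idea at a vastly larger scale.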

- Thread Starter

- #32

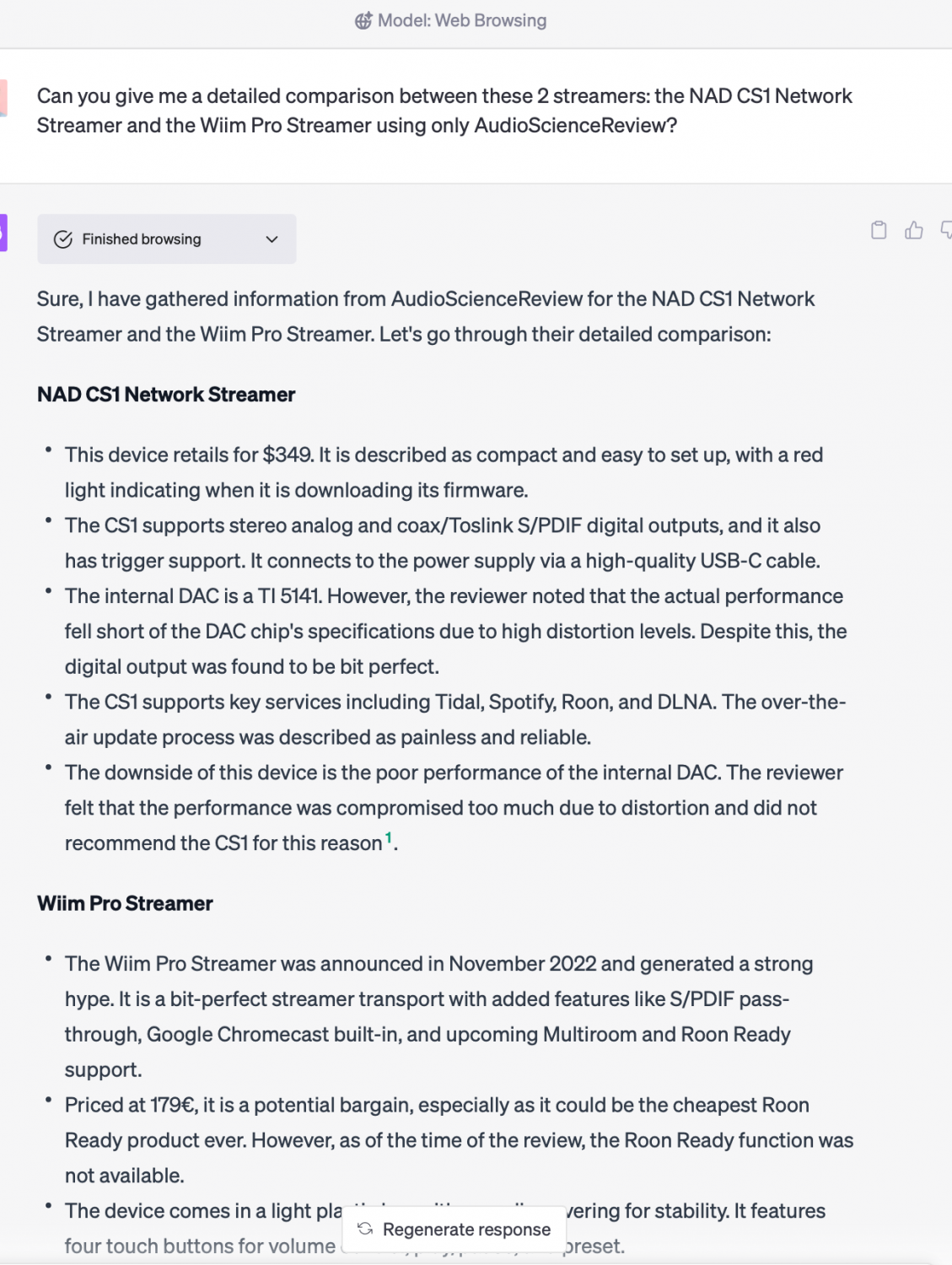

So, I thought I would give a small update on ChatGPT plugins and using them for some analysis.

They are still in a very early state, but I believe more people will get access soon. I still haven't gotten access to the Code Interpreter plugin (which is the one I would be most excited about from a pure data science and analytics perspective).

The browser plugin can do a reasonably nice job of using real data from this website:

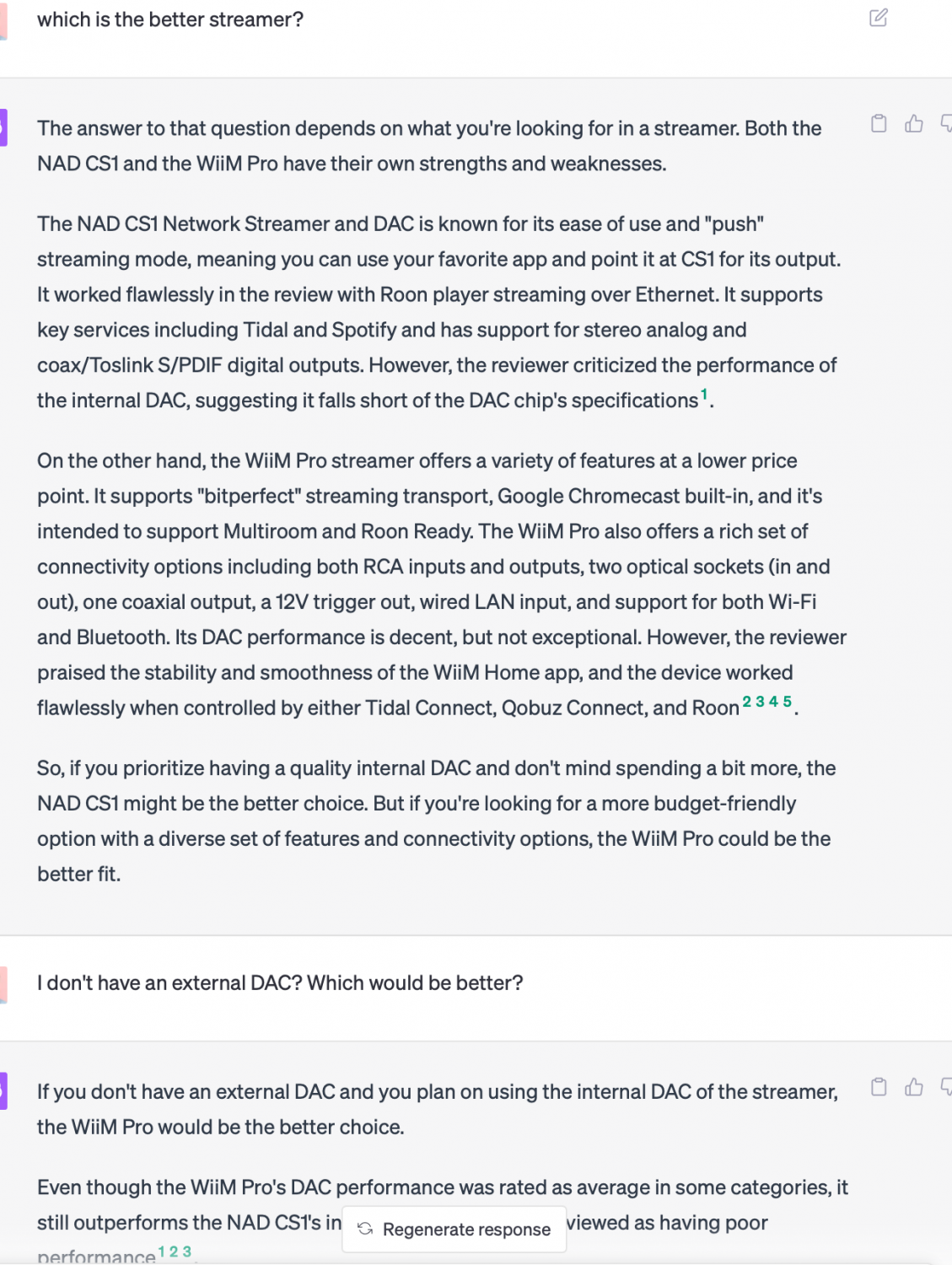

This is effectively just summarising two separate reviews, but once this is loaded into GPT-4's 'short-term' memory you can ask questions against the data:

I have used the browser plugin to do similar comparisons between speakers via www.spinorama.org, but the most interesting IMO is that @pierre has already done a nice job of enabling a ChatGPT plugin for the spinorama data. The browser plugin is very early and buggy at the moment, and I still don't have access to the data science "Code Interpreter" plugin.

Gruesome

Active Member

Maybe ChatGPT can not only write Amir's paper, but also sweet-talk the JAES editors into waiving the over-limit page fee?

- Thread Starter

- #34

And hopefully, to be clear: IMO there are roughly three different approaches to using these tools, in increasingly useful ways:

1. Generate text: least useful IMO, as it can (and definitely will) make stuff up. For example, I created a post with a fake review here: Using Audio science to find the perfect IEM "for you" (audiosciencereview.com)

2. Use them for summarisation and 'fine tuning': this is what the browser plugin I used above does. It is not making stuff up; it has temporarily merged the content it extracted from the web pages / website and generates new text using that content as the main subject. Useful IMO, as it really is using up-to-date data.

3. Use them to generate "code" (effectively small ad hoc applications), and these small applications actually do data science work, as if you had access to an enthusiastic junior data scientist whose "work" needs to be checked for errors / issues, but once checked / fixed can be used to generate good, reliable results and save a considerable amount of time.
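As an illustration of approach 3, here is a minimal sketch of the kind of small ad hoc analysis code such a tool might produce, together with the sanity checks worth running before trusting it. The `(frequency_hz, spl_db)` data format, the function names and the 'band deviation' metric are hypothetical choices for illustration; this is not the spinorama or ASR scoring method.

```python
from statistics import mean

def band_deviation_db(response, f_lo=300.0, f_hi=3000.0):
    """Mean absolute deviation (dB) of a response from its own average level within [f_lo, f_hi].

    `response` is a list of (frequency_hz, spl_db) pairs (a hypothetical format).
    """
    in_band = [spl for freq, spl in response if f_lo <= freq <= f_hi]
    if not in_band:
        raise ValueError("no measurement points inside the requested band")
    level = mean(in_band)
    return mean(abs(spl - level) for spl in in_band)

def compare(responses, **band):
    """Rank named responses from flattest to least flat within the band."""
    scores = {name: band_deviation_db(r, **band) for name, r in responses.items()}
    return sorted(scores.items(), key=lambda item: item[1])

# "Checking the junior's work": tiny hand-made inputs whose correct answers are known in advance.
flat = [(f, 85.0) for f in (200, 500, 1000, 2000, 5000)]
assert band_deviation_db(flat) == 0.0  # a perfectly flat response has zero deviation
tilted = [(500, 84.0), (1000, 85.0), (2000, 86.0)]
assert abs(band_deviation_db(tilted) - 2 / 3) < 1e-9  # deviations 1, 0, 1 dB averaged over 3 points
```

Only once checks like these pass would it make sense to feed `compare` real measurement data.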

Amazing job doing the summarization. Better than most human writers...

Gruesome

Active Member

From my one voluntary interaction with ChatGPT, I was impressed by how its programmers/data feeders/caretakers had integrated hard data into its repertoire (I asked it about yield strains for different grades of steel bolts), but also by how easily it confused itself over the meaning it had assigned to numbers/variables. For example, in one moment it would pick an arbitrary number as an example yield stress to illustrate the concept, and two sentences later it was mistaking that number for the actual yield stress in a computation. Impressive, again, was that it correctly parsed my comment pointing out this mistake, corrected its calculations, and spat out a corrected table of yield strains.

But unless a particular instance of this program has been configured to put a very high emphasis on not making stuff up, and a much lower emphasis on spitting out results to keep the 'conversation' going, I don't see how you can use a tool like this without cross-checking every bit of output. From my interaction with a particular instance of one of these programs, I would fully expect it to make up correlations between, say, speaker sensitivity and predicted ranking if it 'got the impression' that was something you might like to hear.
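Cross-checking a claimed correlation like that is cheap if you have the underlying numbers. A minimal sketch in plain Python: Pearson correlation for a linear relationship, and Spearman (Pearson applied to ranks, with ties averaged) for a ranking. The variable names in the usage comment are hypothetical and no real measurement data is included.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 points")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant column has no defined correlation")
    return cov / (sx * sy)

def ranks(values):
    """1-based ranks; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result

def spearman(xs, ys):
    """Rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))

# Hypothetical usage, with lists taken from the real review data:
# r = spearman(sensitivity_db, preference_scores)
```

Computing this yourself gives a number to hold the chatbot's claim against; a coefficient near zero means there is little monotonic relationship to talk about.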

- Thread Starter

- #40

That is exactly what a plugin does: for a given user's 'session' it loads real data into short-term memory (what I have been calling 'fine tuning'). The ChatGPT session is then much more focused on using that real data, rather than its 'pre-trained' data, when generating text, so it will give proper results.
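Roughly speaking, that 'short-term memory' is text placed into the model's context for the session. A minimal sketch of the idea, assuming the plugin has already extracted the review text: the text is packed into the prompt together with an instruction to answer only from it. `build_grounded_prompt` and its character budget are hypothetical (real systems budget in tokens), and no particular API is called here.

```python
def build_grounded_prompt(question, documents, max_chars=6000):
    """Pack extracted source text into a prompt so the model answers from it, not from memory.

    `documents` maps a source label to its extracted text. Truncating by characters is a
    crude, hypothetical stand-in for real token budgeting.
    """
    parts = []
    used = 0
    for label, text in documents.items():
        remaining = max_chars - used
        if remaining <= 0:
            break  # budget exhausted; later sources are dropped
        snippet = text[:remaining]
        parts.append(f"[Source: {label}]\n{snippet}")
        used += len(snippet)
    context = "\n\n".join(parts)
    return (
        "Answer using only the sources below. "
        "If the sources do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

The design choice worth noticing is the budget: whatever does not fit in the context simply is not 'remembered', which is one reason answers about long or many documents can still go wrong.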

But for real data science, OpenAI have a special plugin that is not about ChatGPT understanding the 'raw' data; it's about ChatGPT understanding how to use standard analytical data science tools, letting the user interact with those tools in English, and the tools then manipulate the data based on what you ask. It is probably hard to visualise, and hopefully OpenAI will make these plugins fully available so people can play with them.
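To help visualise that, here is a minimal sketch of the 'tool use' pattern: the language model only proposes which standard tool to run and on which column (here as a small JSON object), and ordinary deterministic code computes the answer. The JSON shape, the `TOOLS` registry and `run_tool_call` are hypothetical and are not OpenAI's actual plugin or Code Interpreter interface.

```python
import json
import statistics

# Standard, deterministic tools the model is allowed to call (a hypothetical registry).
TOOLS = {
    "mean": statistics.mean,
    "median": statistics.median,
    "max": max,
    "min": min,
}

def run_tool_call(call_json, table):
    """Execute a model-proposed call such as {"tool": "mean", "column": "sensitivity_db"}.

    `table` is a list of row dicts holding the real measurement data.
    """
    call = json.loads(call_json)
    tool = TOOLS.get(call["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['tool']!r}")
    column = [row[call["column"]] for row in table]
    return tool(column)

# Hypothetical usage: the model turns "what's the average sensitivity?" into this string,
# and the number itself comes from statistics.mean, not from the model's memory.
# run_tool_call('{"tool": "mean", "column": "sensitivity_db"}', table)
```

Restricting the model to a fixed set of tools also means an unexpected request fails loudly instead of producing an invented number.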
