ctrl

Yesterday I stumbled across a YouTube video about break-in of guitar speaker drivers (the test conditions and reproducibility of the measurements can certainly be debated, but on the whole I think the test is valid).

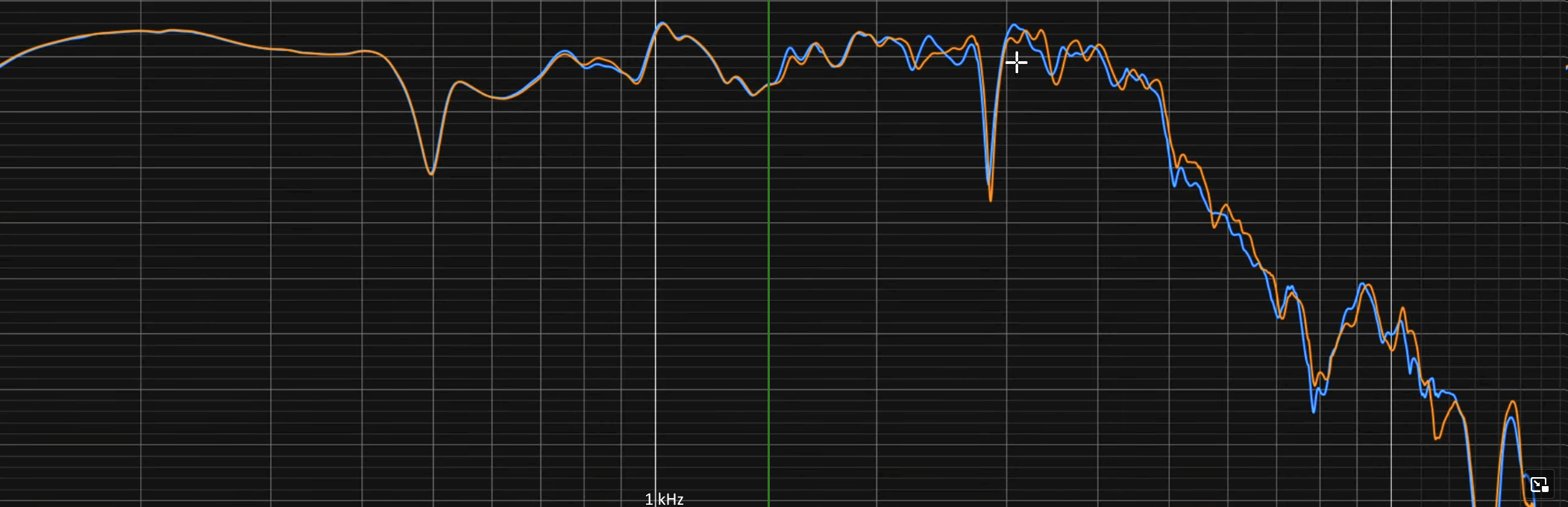

IMO this video shows the difference between break-in (minimal change in driver FR) and wearing out a driver. The driver is a 12" Celestion Vintage 30, which in a hypothetical hifi loudspeaker design would be used as a midrange driver up to 1kHz.

After 30 hours of really extreme break-in of the driver - distorted guitar sound at around 130dBA SPL @1m - there is hardly any change in the frequency response up to 1.4kHz (blue is before break-in, the green line marks about 1.4kHz):

Source: YouTube video linked above

In a "normal speaker" such a driver would be used up to 1kHz at most (likely XO around 700-800Hz). With a fourth-order filter, anything above 1.4kHz is then already roughly 20-25dB down or more, so there is nearly no change in FR at all in the usable range of the driver.
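To put rough numbers on the crossover argument, here is a minimal sketch of the electrical attenuation of an ideal fourth-order low-pass at 1.4kHz. It assumes textbook Butterworth and Linkwitz-Riley alignments and ignores the driver's own acoustic roll-off; the function names are my own and the crossover frequencies are just the 700-800Hz range mentioned above.

```python
import math

def butterworth_lowpass_db(f_hz, fc_hz, order):
    """Magnitude (dB) of an ideal Butterworth low-pass of the given order."""
    return -10.0 * math.log10(1.0 + (f_hz / fc_hz) ** (2 * order))

def lr4_lowpass_db(f_hz, fc_hz):
    """Linkwitz-Riley 4th order: two cascaded 2nd-order Butterworth sections."""
    return 2.0 * butterworth_lowpass_db(f_hz, fc_hz, 2)

if __name__ == "__main__":
    # Assumed crossover points, taken from the 700-800Hz range in the post.
    for fc in (700.0, 800.0):
        bw4 = butterworth_lowpass_db(1400.0, fc, 4)
        lr4 = lr4_lowpass_db(1400.0, fc)
        print(f"XO {fc:.0f}Hz: Butterworth-4 {bw4:.1f}dB, LR4 {lr4:.1f}dB at 1.4kHz")
```

With a 700Hz crossover, 1.4kHz is exactly one octave up and both alignments land at about 24dB down; with 800Hz it is closer to 20dB. The attenuation keeps growing at 24dB/octave above that.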

Most changes occur above 2kHz, where the driver is no longer guaranteed to move in a piston-like manner (first resonances occur much earlier) and will show corresponding break-up resonances - such as concentric and circumferential modes, rim contour and surround modes/resonances:

Source: High Performance Loudspeakers - Martin Colloms and Paul Darlington

By using distorted guitar tones as a test tone, practically the entire mid and high frequency range is stimulated.
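As a rough illustration of why a distorted tone covers so much of the spectrum, the sketch below hard-clips one period of a sine and lists the harmonic levels relative to the fundamental. Symmetric hard clipping is only a crude stand-in for a real guitar amp, and the clip level and function name are assumptions of mine, not anything taken from the video.

```python
import math

def clipped_sine_harmonics_db(clip_level, n_harmonics=15, n_samples=4096):
    """Harmonic levels (dB re fundamental) of a symmetrically hard-clipped unit sine."""
    # One exact period, so DFT bin k corresponds to harmonic k.
    samples = [
        max(-clip_level, min(clip_level, math.sin(2 * math.pi * i / n_samples)))
        for i in range(n_samples)
    ]
    mags = []
    for k in range(1, n_harmonics + 1):
        re = sum(s * math.cos(2 * math.pi * k * i / n_samples) for i, s in enumerate(samples))
        im = sum(s * math.sin(2 * math.pi * k * i / n_samples) for i, s in enumerate(samples))
        mags.append(math.hypot(re, im))
    # Floor tiny values so the log stays finite for the (vanishing) even harmonics.
    return [20 * math.log10(max(m, 1e-12) / mags[0]) for m in mags]

if __name__ == "__main__":
    levels = clipped_sine_harmonics_db(clip_level=0.3)  # heavy clipping (assumed)
    for n, level in enumerate(levels, start=1):
        if n % 2 == 1:  # symmetric clipping produces odd harmonics only
            print(f"harmonic {n:2d}: {level:6.1f}dB")
```

For a note with a fundamental of a few hundred Hz, the odd harmonics printed here already reach well into the kHz range, which is where the break-up resonances sit.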

Due to the extreme test conditions and the excitation of the break-up resonances at full SPL (or nearly full SPL), the first material changes occur in the frequency range of the resonances.

This would not occur with a hifi loudspeaker (apart from a single-driver full-range loudspeaker), as the crossover prevents the break-up resonances from being excited at high SPL.

After the driver had been "tortured" for 300 hours with the extreme test tones, there were clear changes in the frequency range above around 1400Hz (again, blue is before break-in):

Source: YouTube video linked above

In some cases, new resonances have appeared or existing ones have increased significantly. I would assume that these are signs of wear of the driver material due to the excessive strain caused by the 300 hours of extreme sound reproduction.

Even under these extreme conditions, the change up to 1.4kHz is rather small, and the SPL deviation should be around or below the manufacturer's production tolerance (a deviation of ±1dB SPL from a reference FR during production is not unusual).

(The approximately 1dB SPL difference in the frequency range of 20-1400Hz might be caused by a measurement/calibration error)
