After finishing @Floyd Toole's The Acoustics and Psychoacoustics of Loudspeakers and Rooms, I became interested in conducting a blind listening test. With the help of a similarly minded friend, I put together a test and we ran 12 people through it. I’ll describe our procedures and the results. I’m aware of numerous limitations; we did our best with the space and technology we had.

All of the speakers in the test have spinorama data and the electronics have been measured on ASR.

Speakers (preference score in parentheses):

KEF Q100 (5.1)

Revel W553L (5.1)

JBL Control X (2.1)

OSD AP650 (1.1)

Test Tracks:

Fast Car – Tracy Chapman

Just a Little Lovin – Shelby Lynne

Tin Pan Alley – Stevie Ray Vaughan

Morph the Cat – Donald Fagen

Hunter – Björk

Amplifier:

2x 3e Audio SY-DAP1002 (with upgraded opamps)

DAC:

2x Motu M2

Soundboard / Playback Software:

Rogue Amoeba Farrago

The test tracks were all selected from Harman’s list of recommended tracks except for Hunter. All tracks were downmixed to mono because the test was conducted with single speakers. The speakers were set up on a table in a small cluster. We used pink noise, Room EQ Wizard, and a miniDSP UMIK-1 to level-match the speakers to within 0.5 dB. The speakers were hidden behind black speaker cloth before anyone arrived. We connected both M2 interfaces to a MacBook Pro and used virtual interfaces to route output to the four channels. Each track was configured in Farrago to point to a randomly assigned speaker. This allowed us to click a single button on any track and hear it out of one of the speakers. We could easily jump around and let participants compare any speakers back to back on any track.
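As a rough illustration of the randomization and level-matching steps, here is a minimal Python sketch. It is not what we actually ran (the assignment was done by hand in Farrago and the levels were read off the measurement software); the function names, the button labels, and the SPL readings are made up for the example.

```python
import random

SPEAKERS = ["KEF Q100", "Revel W553L", "JBL Control X", "OSD AP650"]
TRACKS = ["Fast Car", "Just a Little Lovin", "Tin Pan Alley",
          "Morph the Cat", "Hunter"]


def blind_plan(tracks, speakers, seed=None):
    """Give every track its own random button-to-speaker mapping."""
    rng = random.Random(seed)
    plan = {}
    for track in tracks:
        order = list(speakers)
        rng.shuffle(order)  # independent shuffle per track
        plan[track] = {f"Button {i + 1}": spk for i, spk in enumerate(order)}
    return plan


def level_trims(measured_db):
    """Gain trim (dB) per speaker so all match the quietest one."""
    target = min(measured_db.values())
    return {name: target - level for name, level in measured_db.items()}


def is_matched(levels_db, tolerance_db=0.5):
    """True if the spread between loudest and quietest is under tolerance."""
    return max(levels_db.values()) - min(levels_db.values()) < tolerance_db


plan = blind_plan(TRACKS, SPEAKERS, seed=1)

# Hypothetical pink-noise readings in dB SPL, not our measurements.
example_levels = {"Speaker A": 80.0, "Speaker B": 82.5}
trims = level_trims(example_levels)
```

Attenuating down to the quietest speaker avoids adding gain anywhere. After applying the trims you would re-measure and confirm that `is_matched` holds for the new readings.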

Our listeners were untrained, though we had a few musicians in the room and two folks who have read Toole’s book and spend a lot of time doing critical listening.

Participants were asked to rate each track and speaker combination on a scale from 0-10 where 10 represented the highest audio fidelity.

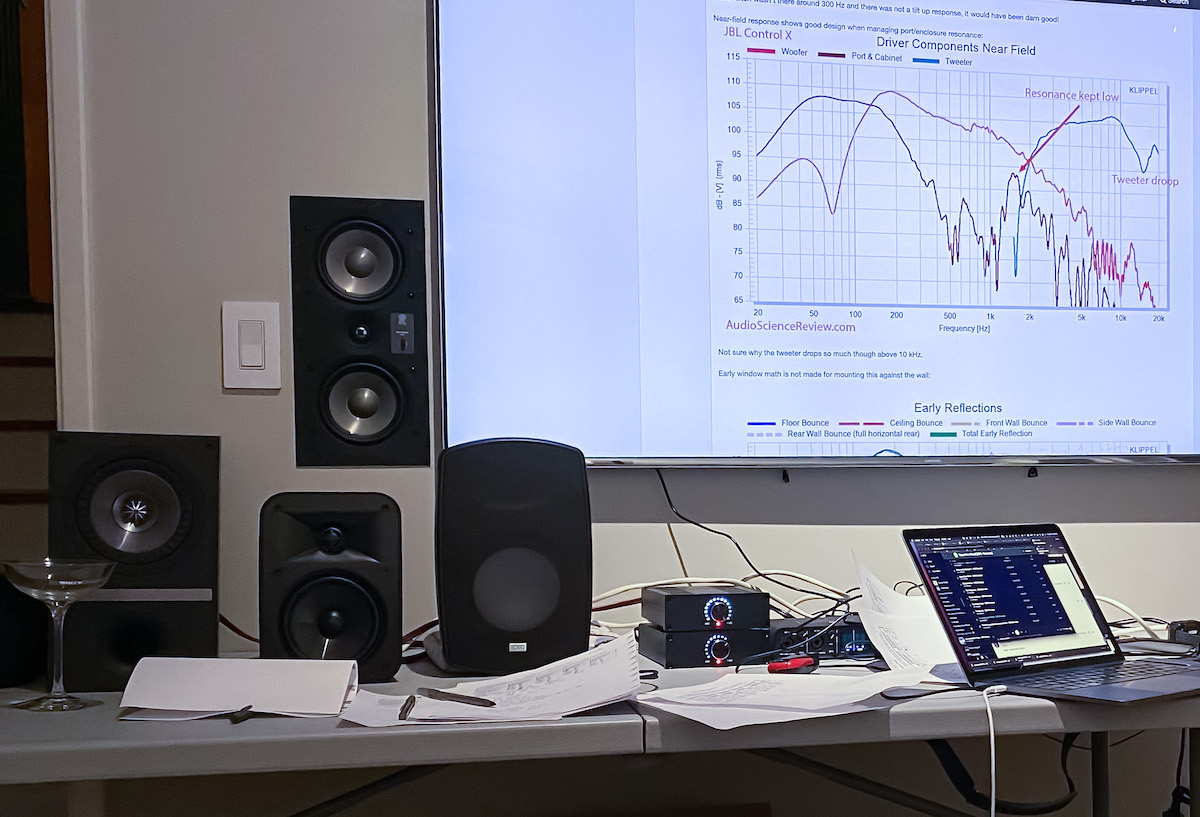

Here is a photo of what the setup looked like after we unblinded and presented results back to the participants:

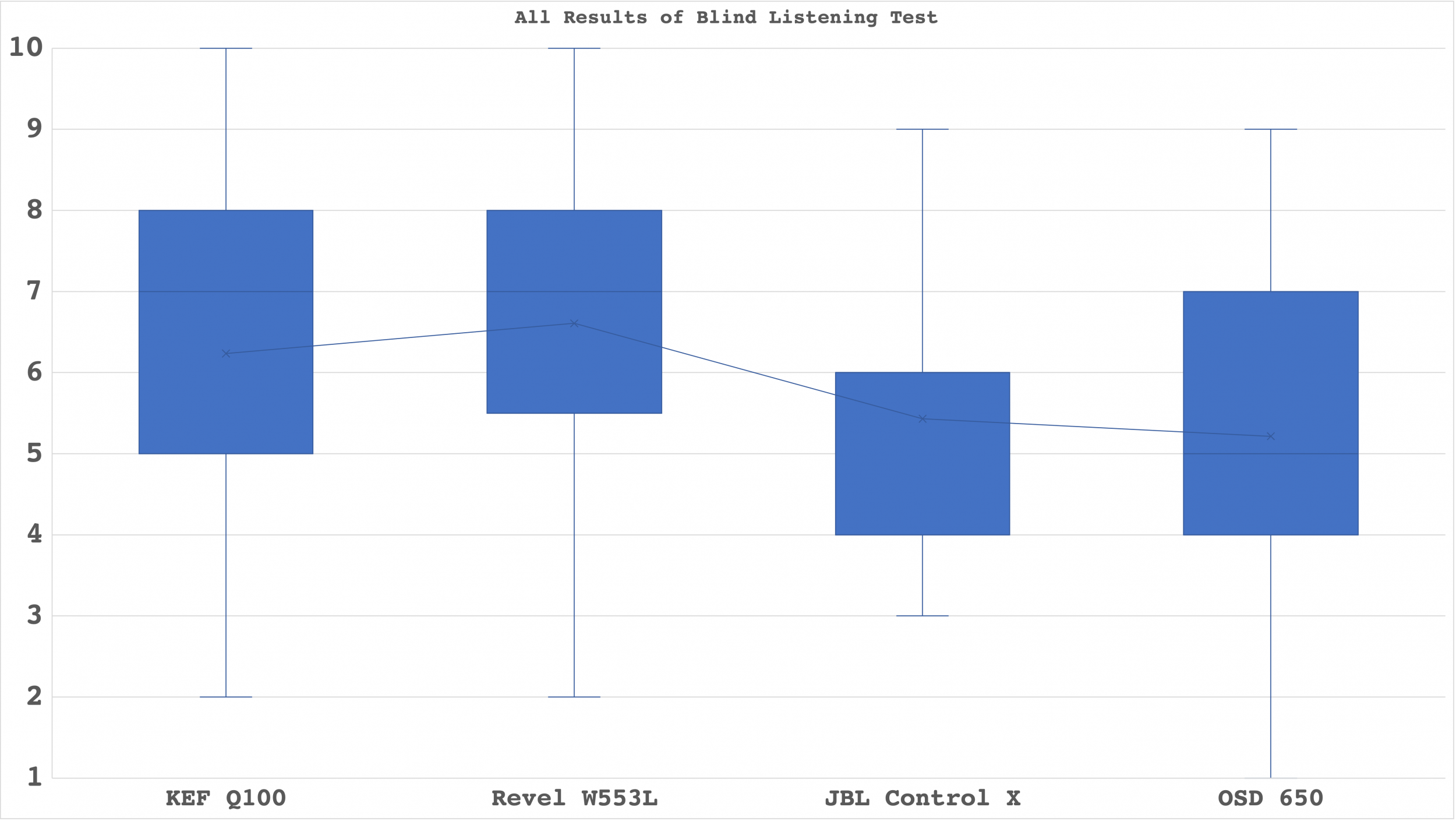

Here are the results of everyone that participated.

Average rating across all songs and participants:

Revel W553L: 6.6

KEF Q100: 6.2

JBL Control X: 5.4

OSD AP650: 5.2
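For anyone who wants to reproduce this kind of summary from a ratings sheet, here is a minimal sketch of the averaging. The rows below are placeholders to show the shape of the calculation, not our raw data, and the tuple layout is just an assumption about how you might store it.

```python
from collections import defaultdict
from statistics import mean

# (listener, track, speaker, rating): placeholder rows, NOT the real test data
ratings = [
    ("listener_1", "Track 1", "Speaker A", 7),
    ("listener_1", "Track 1", "Speaker B", 5),
    ("listener_2", "Track 1", "Speaker A", 6),
    ("listener_2", "Track 1", "Speaker B", 4),
    ("listener_1", "Track 2", "Speaker A", 8),
    ("listener_1", "Track 2", "Speaker B", 6),
]


def average_by_speaker(rows):
    """Mean rating per speaker, pooled across all listeners and tracks."""
    by_speaker = defaultdict(list)
    for _listener, _track, speaker, rating in rows:
        by_speaker[speaker].append(rating)
    return {spk: round(mean(vals), 1) for spk, vals in by_speaker.items()}


averages = average_by_speaker(ratings)
```

Pooling everything into one mean per speaker is the simplest summary. It ignores the fact that some listeners rate everything higher or lower than others.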

Plotted:

You can see that the KEF and Revel were preferred and that the JBL and OSD scored worse. The JBL really lacked bass, and this is likely why it scored so low. The OSD has a number of problems that can be seen in the spin data. That said, at least two participants generally preferred it.

Some interesting observations:

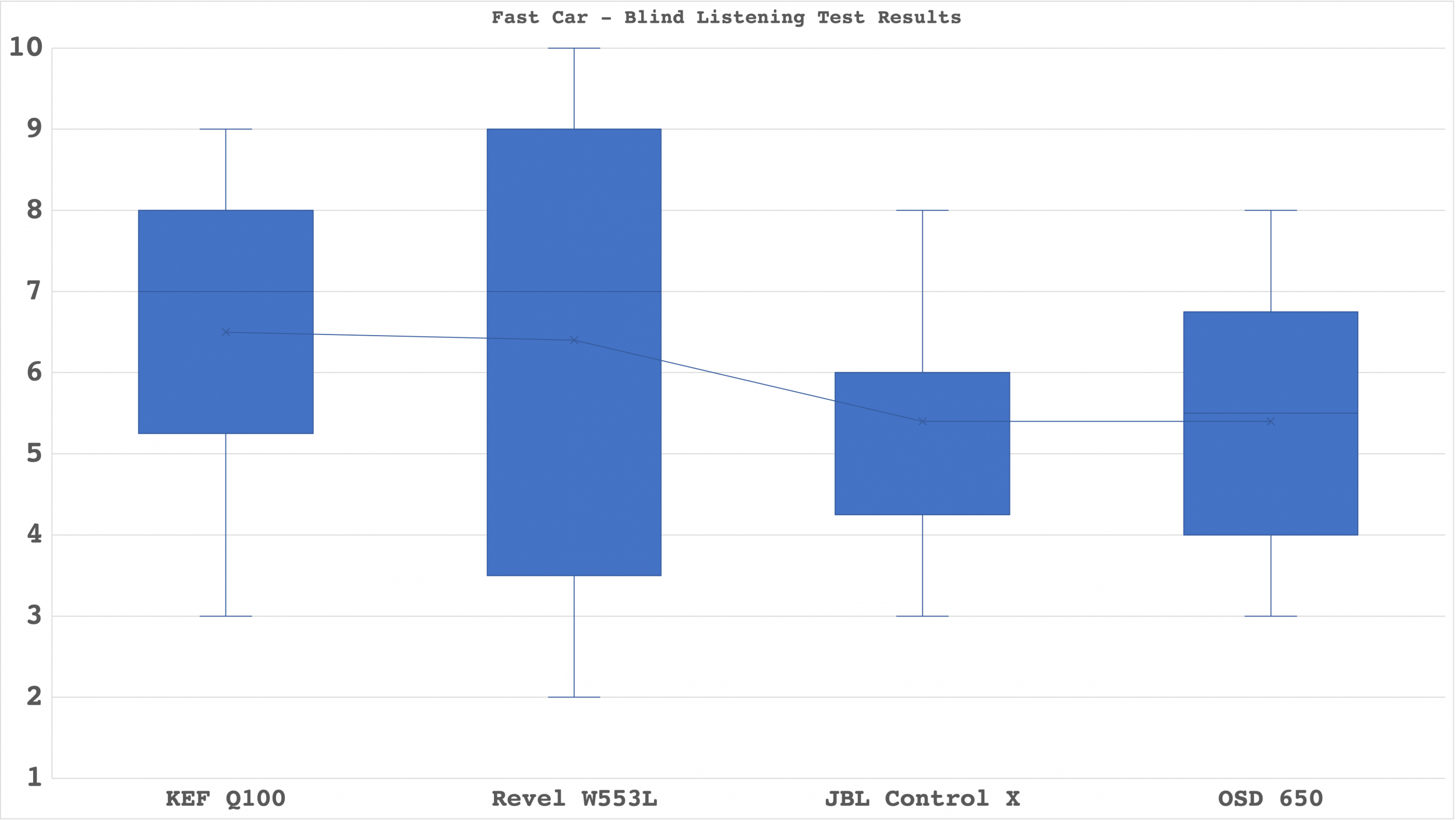

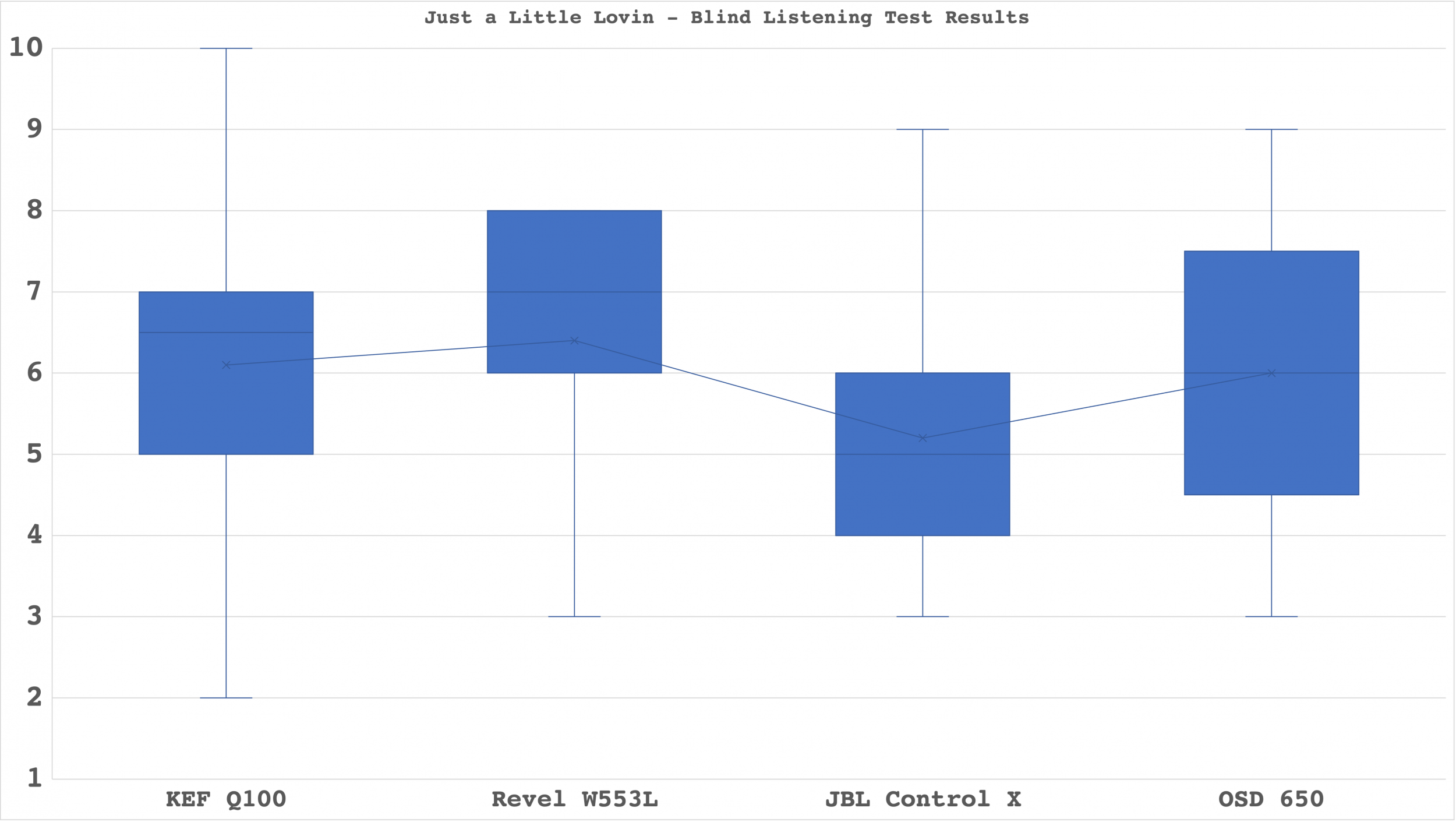

Hunter and Morph the Cat were the most revealing songs for these speakers and Fast Car was close behind. Tin Pan Alley was more challenging, and Just a Little Lovin was the most challenging. The research that indicates bass accounts for 30% of our preference was on display here. Speakers with poor bass had low scores.

Here is the distribution of scores by song:

Fast Car:

Just a Little Lovin:

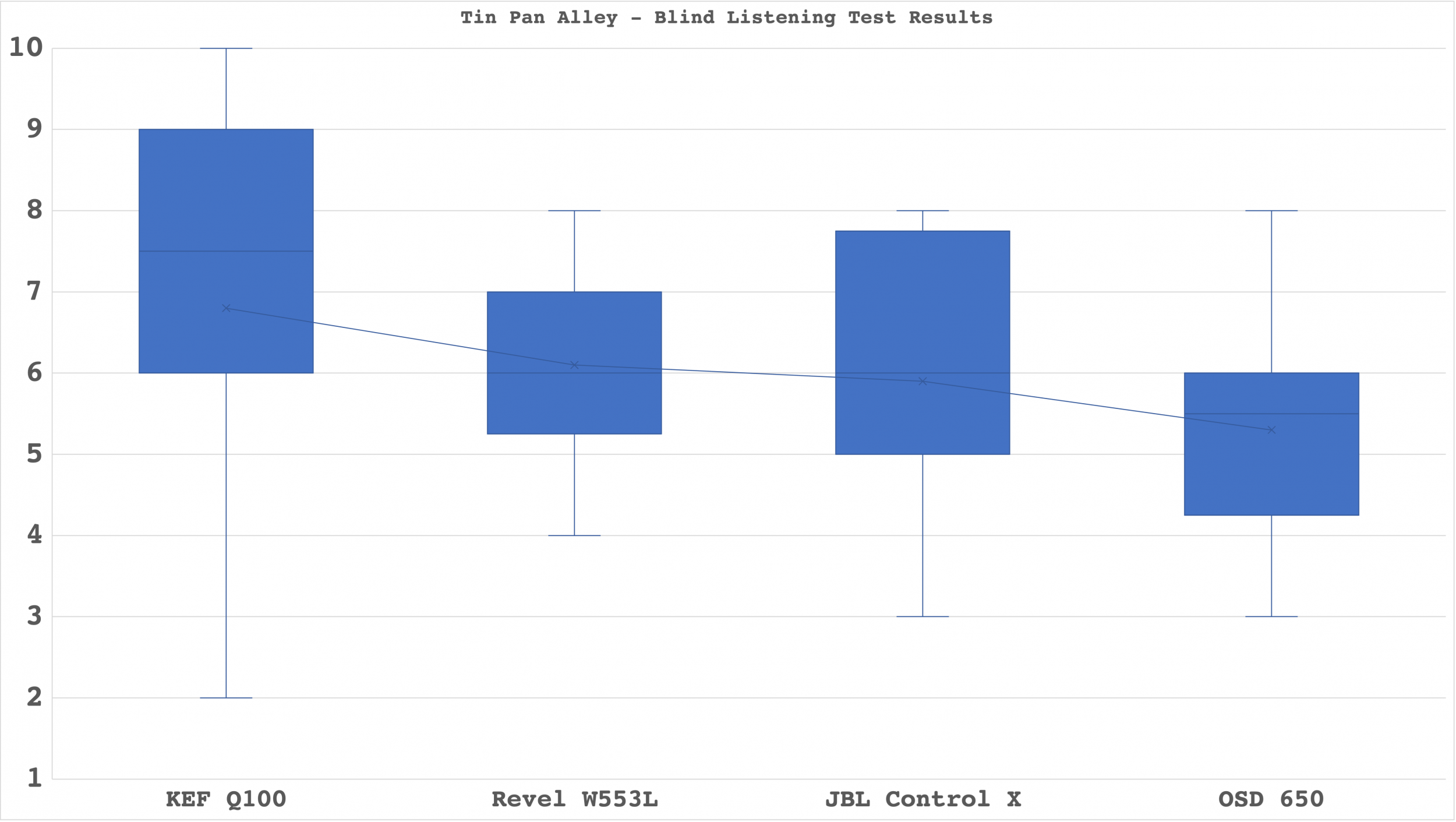

Tin Pan Alley:

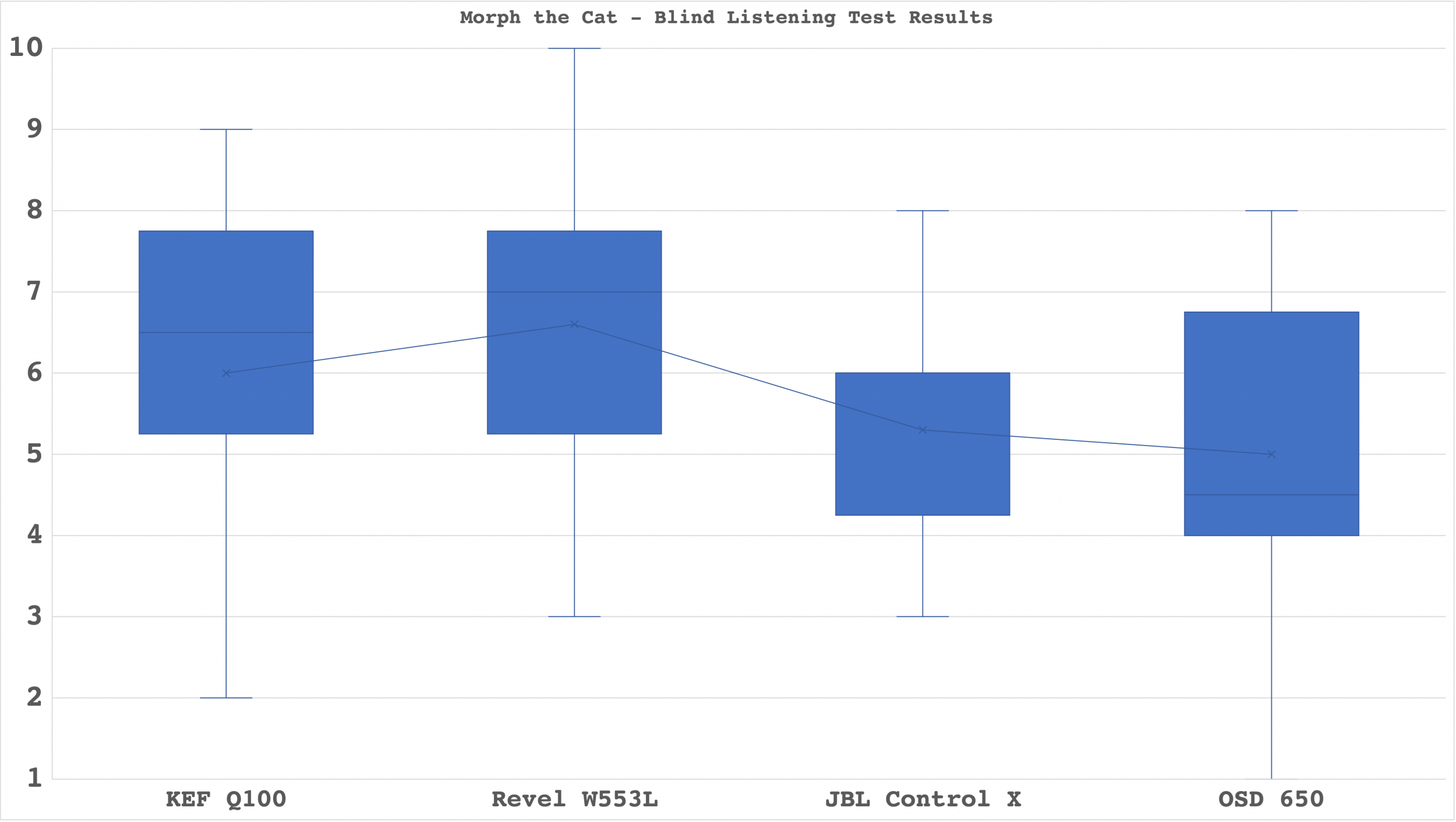

Morph the Cat:

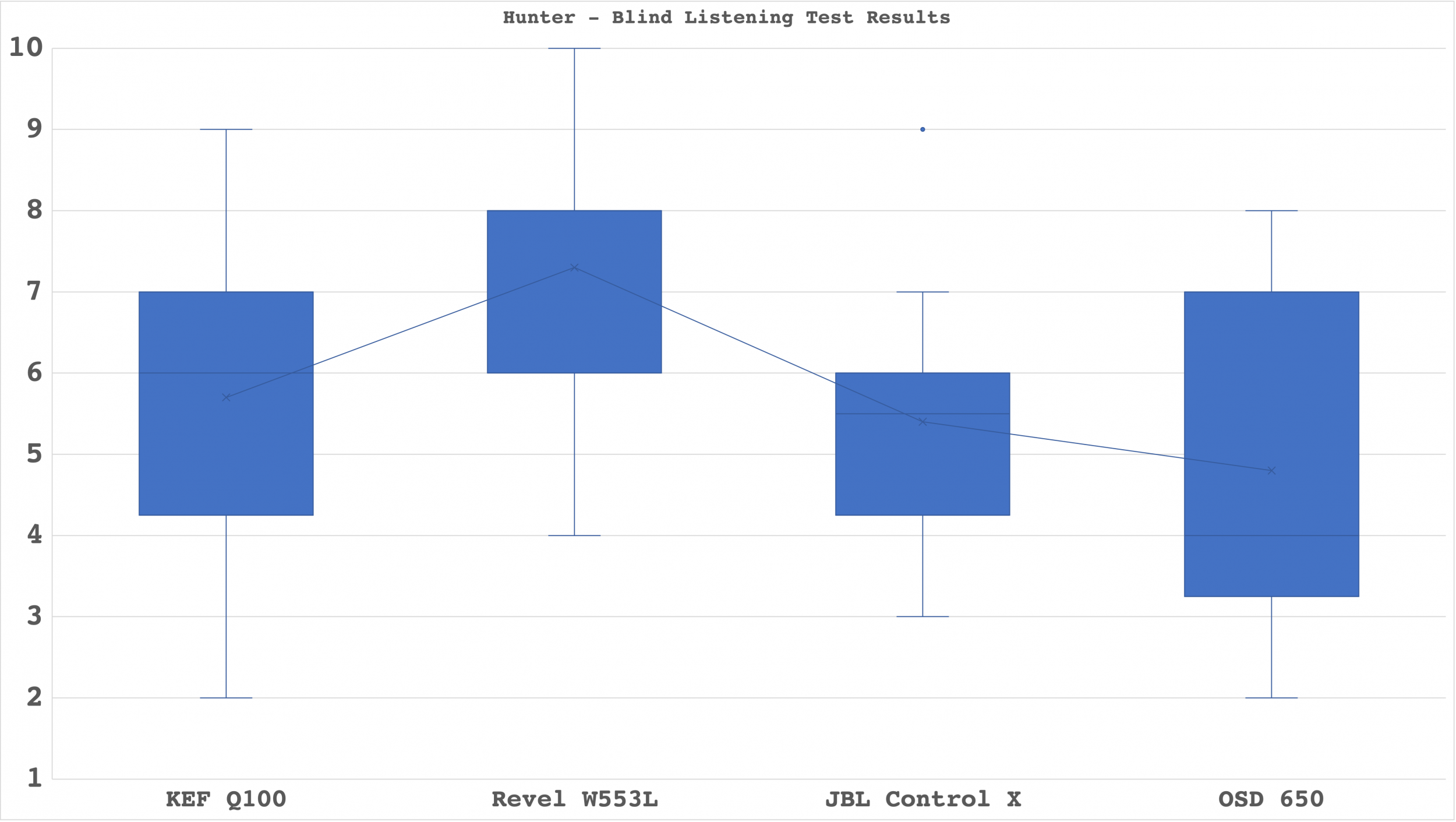

Hunter:

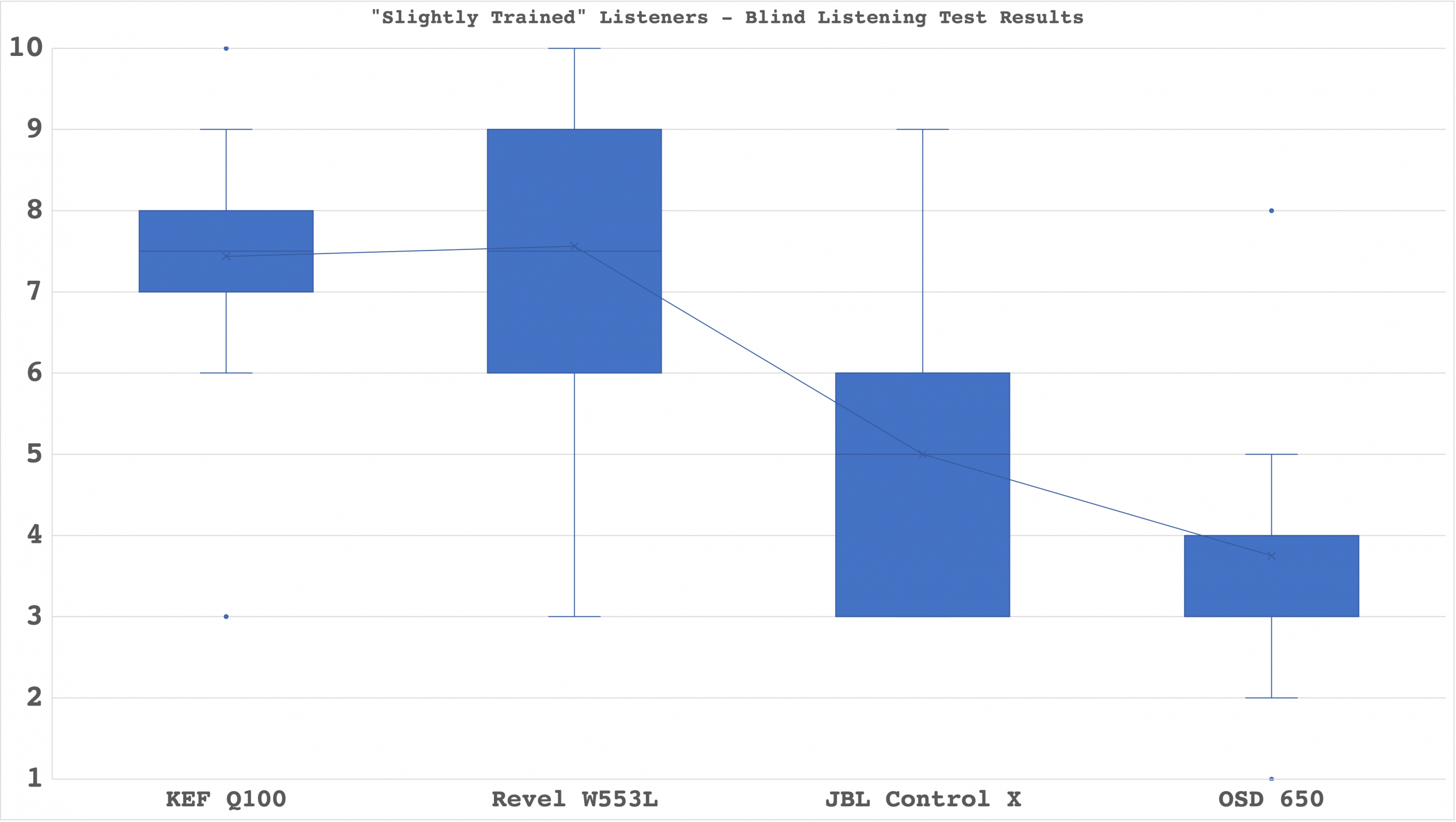

If we limit the data to the three most “trained” listeners (musicians, owners of studio monitors, etc.), this is the result:

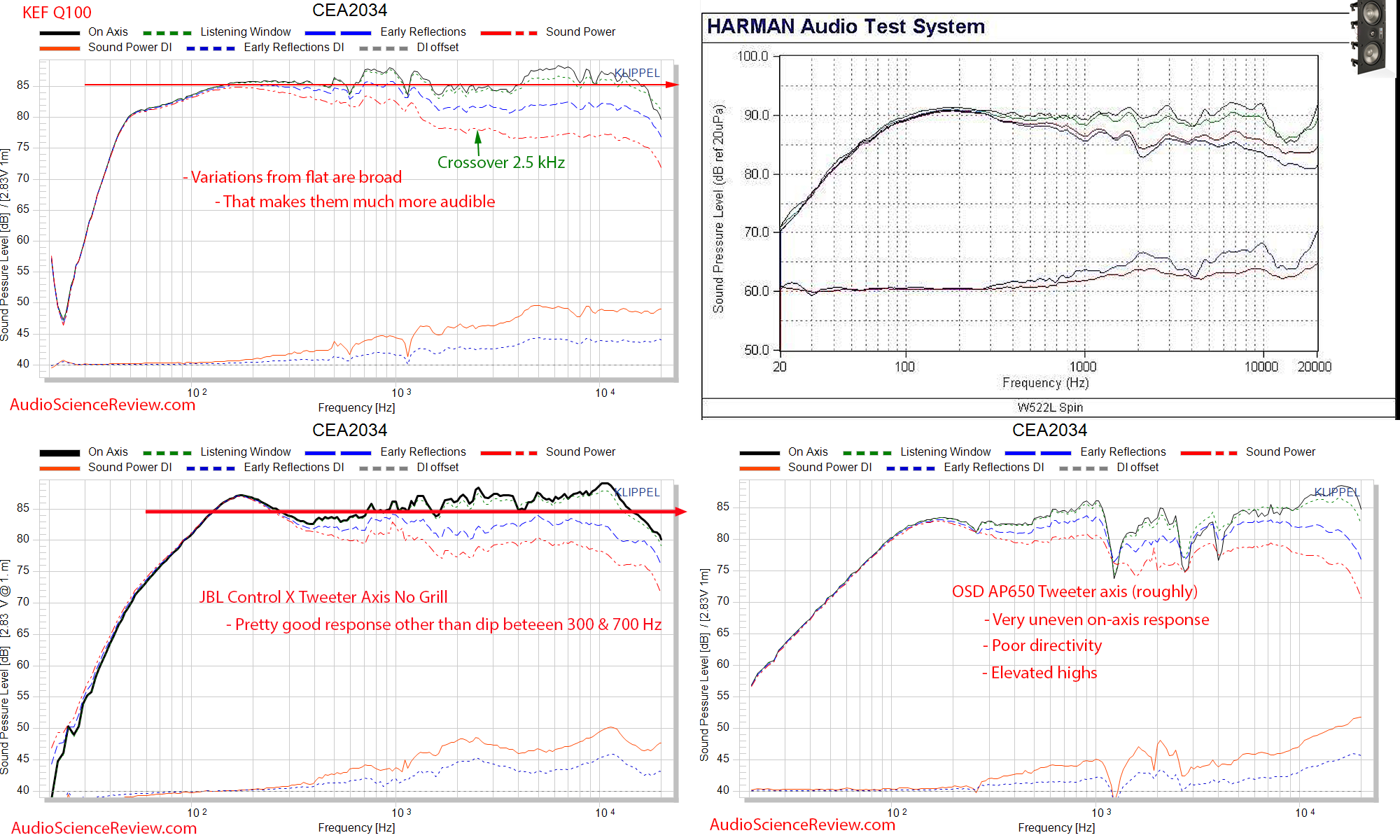

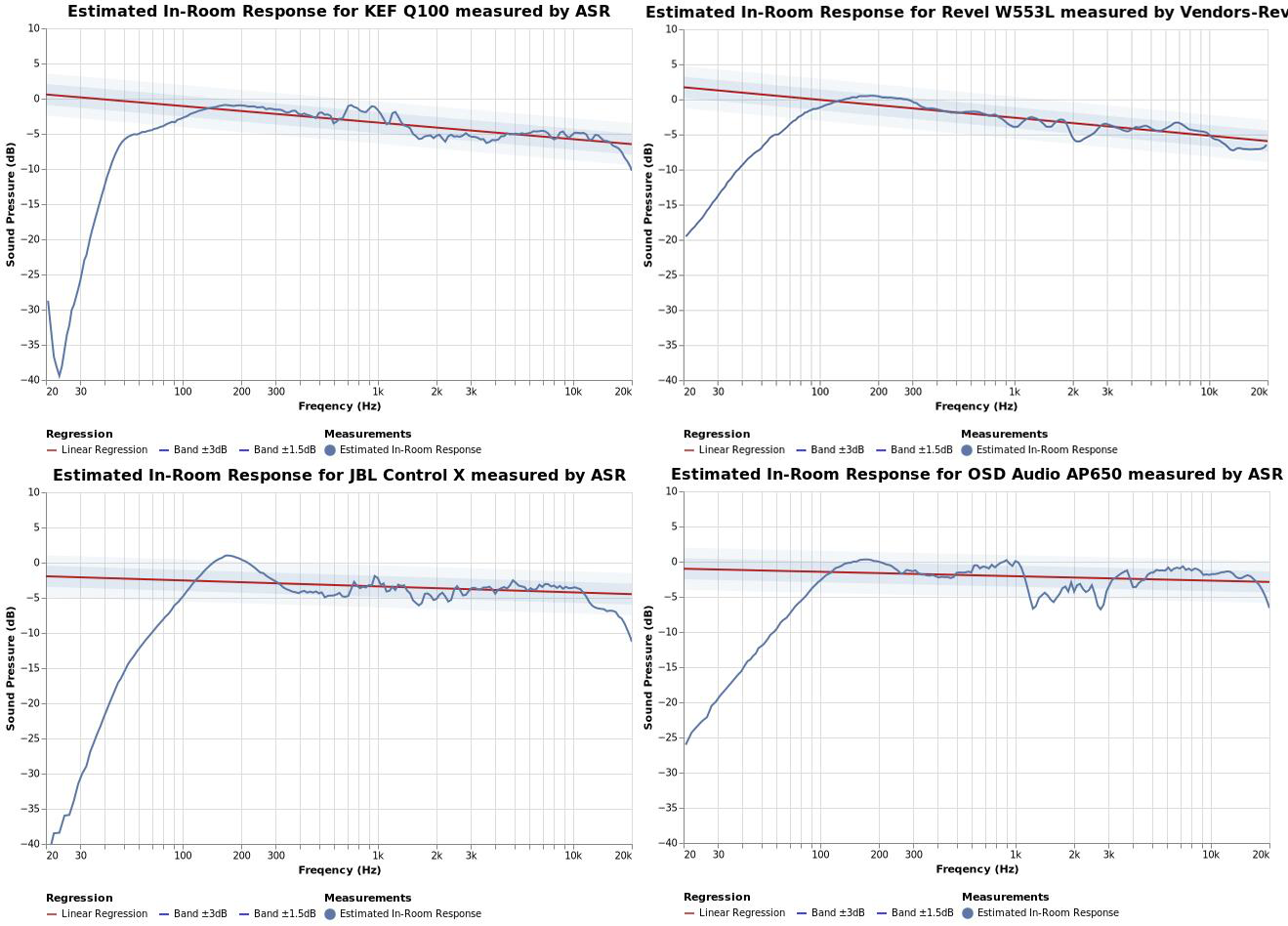

I’ve included the spin images here for everyone’s reference. ASR is the source for all but the Revel, which comes directly from Harman. It says W552L, but the only change to the W553L was the mounting system.

My biggest takeaways were:

What the research to date indicates is correct; spinorama data correlates well with listener preferences even with untrained listeners.

Small deviations in frequency response, and even some big ones, are not easy to pick out in a blind comparison of multiple speakers, depending on the material being played. I have no doubt a trained listener can pick stuff out better, but the differences are subtle, and in the case of the KEF and Revel it really takes a lot of data before a pattern emerges.

The more trained listeners very readily picked out the OSD as sounding terrible on most tracks, but picking a winner between the KEF and Revel was much harder. It is interesting to note that the KEF and Revel both have a preference score of 5.1. The JBL has a 2.1 and the OSD has a 1.1.

One final note: all but one of the listeners were between 35 and 47 years old, and it is unlikely any of us could hear much past 15 or 16 kHz. One participant was in their mid 20s.

EDIT:

Raw data is here.

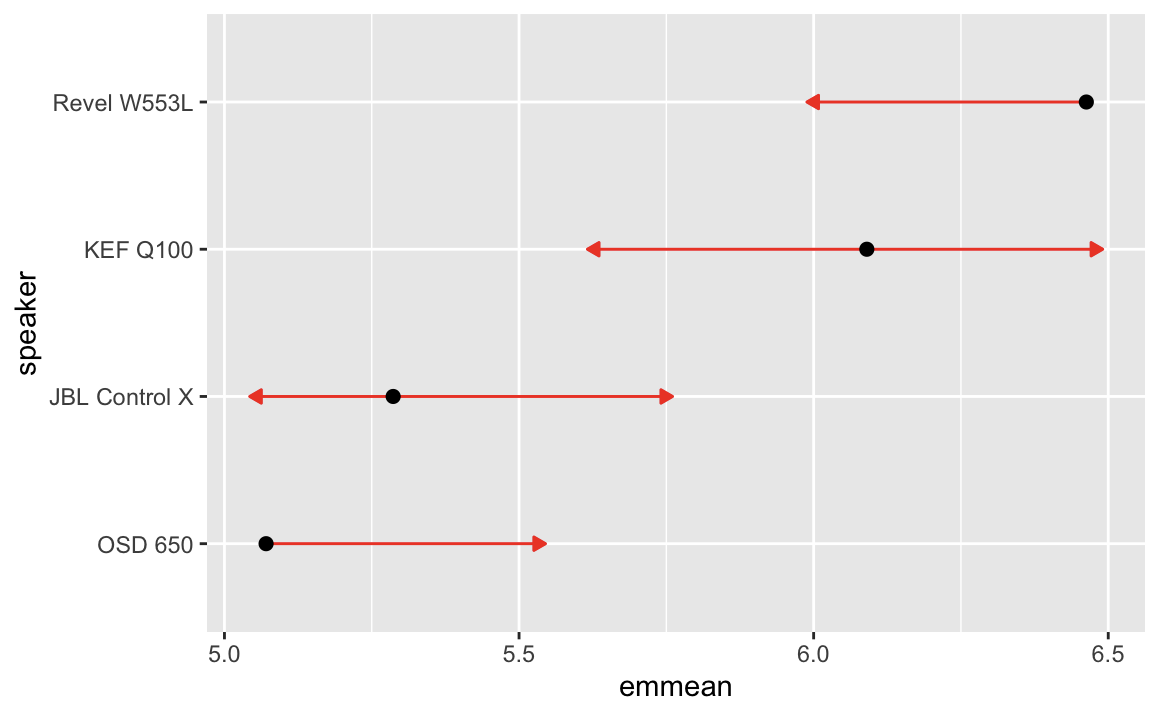

@Semla conducted some statistical analysis here, and here.

@Semla gave permission to reproduce this chart from one of those posts. Please read the above posts for detail and background on this chart.

"You can compare two speakers by checking for overlap between the red arrows.

- If the arrows overlap: no significant difference between the rating given by the listeners.

- If they don't overlap: statistically significant difference between the rating given by the listeners."
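The reading rule in the quote boils down to an interval-overlap check. Here is a minimal sketch of it; the arrow endpoints are hypothetical numbers chosen for illustration and would need to be read from the chart or taken from @Semla's analysis.

```python
def arrows_overlap(a, b):
    """True if closed intervals a=(low, high) and b=(low, high) share a point."""
    return a[0] <= b[1] and b[0] <= a[1]


def describe(name_a, arrow_a, name_b, arrow_b):
    """Apply the quoted rule to one pair of speakers."""
    if arrows_overlap(arrow_a, arrow_b):
        return f"{name_a} vs {name_b}: no significant difference"
    return f"{name_a} vs {name_b}: statistically significant difference"


# Hypothetical endpoints, NOT values from the chart.
example = {
    "Speaker A": (6.0, 7.0),
    "Speaker B": (5.8, 6.6),
    "Speaker C": (4.9, 5.7),
}

ab = describe("Speaker A", example["Speaker A"], "Speaker B", example["Speaker B"])
ac = describe("Speaker A", example["Speaker A"], "Speaker C", example["Speaker C"])
```

Note that this only encodes how to read the arrows. How the arrows themselves are computed is covered in the linked posts.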
