It would not be a simpler version of this test; it would be a different test.

I'd suggest some amount of tricking the listener in the testing.

Sometimes play the same speaker more than once; sometimes adjust the same speaker's location and replay it.

I'd wager something really interesting would happen.

There is no reason every listener needs to hear each speaker.

Also, the aforementioned ABX testing could be really fun: can the listener determine which speaker is "X" (where X is a second playing of either A or B)?

That would actually allow you to create a much simpler version of this test, and perhaps a more accurate one in terms of listeners' ability to hear variation.
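One way to score the ABX idea above is a one-sided binomial test: if a listener is only guessing, each trial is a coin flip, so the chance of getting k or more right out of n is a binomial tail sum. A minimal Python sketch, assuming independent trials and a 50% guess rate; the function name `abx_p_value` and the 12-of-16 session are hypothetical, not data from this thread:

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """Chance of scoring `correct` or more out of `trials` ABX trials by
    pure guessing (each trial is right with probability 0.5)."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    # Count outcomes with at least `correct` hits among 2**trials equally likely ones.
    ways = sum(comb(trials, k) for k in range(correct, trials + 1))
    return ways / 2 ** trials


# Hypothetical session: the listener identifies X correctly in 12 of 16 trials.
print(round(abx_p_value(12, 16), 4))  # 0.0384
```

Trial count matters: with only 5 trials, even a perfect 5/5 gives 1/32 ≈ 0.031, so short sessions leave very little room for a single miss.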

In terms of level matching, when I do testing I level-match the midrange using pink noise generated by REW and band-limited to 500 Hz to 2,500 Hz. I record the unweighted SPL and double-check by eye that the responses overlay on an MMM RTA measurement.

I find this works well.
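The arithmetic behind level matching is simple: once each speaker's unweighted SPL has been measured with the same band-limited noise, the gain change for each one is just its difference in dB from a chosen reference. A Python sketch, assuming the quietest speaker is used as the reference so every trim is a cut rather than a boost (a choice made here, not something stated above); the function names and SPL readings are hypothetical:

```python
def level_trims_db(spl_readings: dict[str, float]) -> dict[str, float]:
    """Gain change (dB) for each speaker that brings it down to the level of
    the quietest one, so only cuts are applied and nothing is boosted."""
    quietest = min(spl_readings.values())
    return {name: round(quietest - spl, 2) for name, spl in spl_readings.items()}


def db_to_gain(db: float) -> float:
    """Convert a dB change into a linear amplitude (voltage) multiplier."""
    return 10 ** (db / 20)


# Hypothetical unweighted SPL readings taken with the 500-2,500 Hz pink noise.
readings = {"Speaker A": 78.4, "Speaker B": 77.9, "Speaker C": 79.1}
trims = level_trims_db(readings)
print(trims)  # {'Speaker A': -0.5, 'Speaker B': 0.0, 'Speaker C': -1.2}
print({name: round(db_to_gain(db), 3) for name, db in trims.items()})
```

Cutting toward the quietest speaker avoids pushing any amplifier or DAC channel closer to clipping.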

Obviously, in the end, level matching is nearly impossible with all the variables in play, and how it is done is a choice, not an exact science. I doubt anyone has a method that doesn't have some issues (as far as speaker testing goes).


Four Speaker Blind Listening Test Results (Kef, JBL, Revel, OSD)

- Thread starter MatthewS


Yeah. I say this regretfully, but... even if it's statistically valid (I'll let the statisticians argue over that), I'd unfortunately consider this test useless because of the extremely compromised acoustics: the large, close, reflective horizontal surface will muddle up the speakers' FR and imaging.

Sorry, it didn't feel good to type that.

I really appreciate the effort that went into this and there's much that was good about this setup. I think you'll get some really solid results if you can iterate on this a bit. I would eagerly read your next set of results if/when you give it another go.

I think it's unfortunate that you used the word "useless." Experiments don't need to be conclusive in order to be interesting, let alone useful. The fact is, the OP was brave enough to do this test and report the findings here. I'm very appreciative.


Semla

Active Member

- Joined

- Feb 8, 2021

- Messages

- 170

- Likes

- 328

Here are some more results from the modeling I did. Thanks for posting the data!

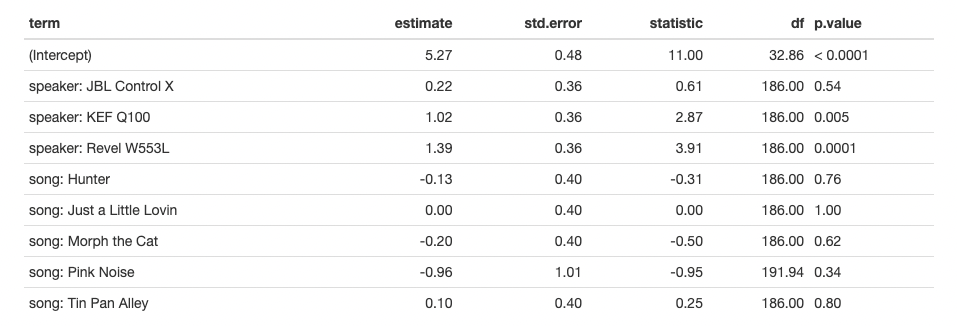

First off, here is the model table for a mixed-effects model with a random effect for listener, song and speaker as fixed effects, and Kenward-Roger (KR) degrees of freedom (table 1):

An interaction effect between song and speaker is not needed (likelihood ratio test, p = 0.35, df = 15). In other words, there is no evidence that any particular speaker did especially well on a particular song. Listeners disliked pink noise!

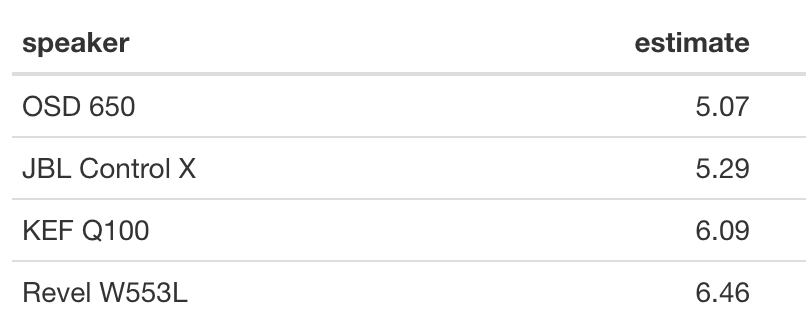

This model corrects for listener preference (assumed to be from a normal distribution with mean 0). We can use this to predict the score an "average" listener would give (table 2).

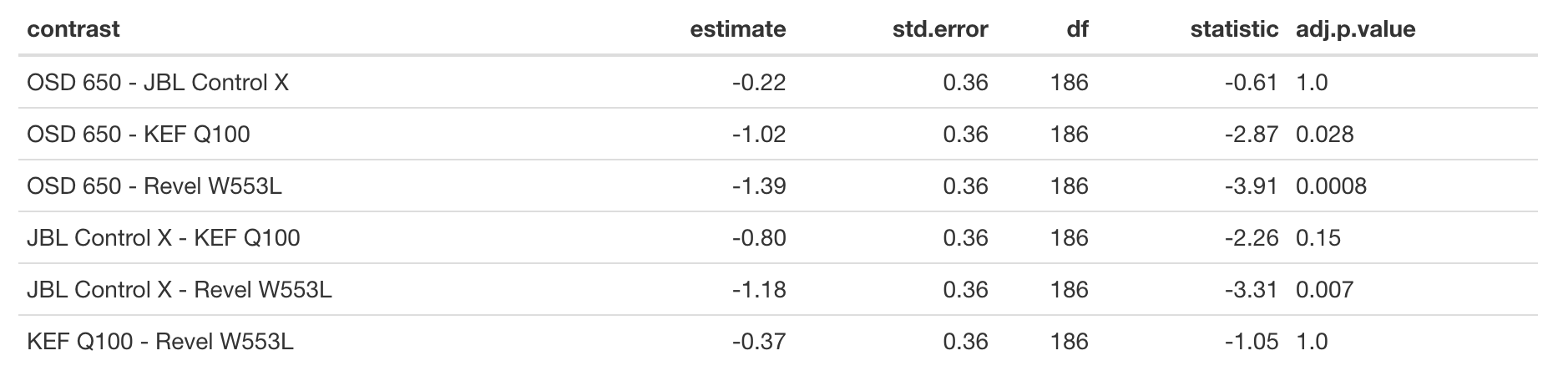

Finally, we can predict whether an "average" listener would be able to differentiate their scoring between the speakers (table 3). p-values were Bonferroni-adjusted for multiple testing.

As you can see, there would be a statistically significant difference in score between the OSD and both the KEF and Revel, and between the JBL and the Revel.

Edit: "average" listener means a listener selected at random from a population similar to this listener panel. The population Harman used to build their scoring model might well be quite different.
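With four speakers there are six pairwise comparisons, and the Bonferroni adjustment mentioned above multiplies each raw p-value by the number of comparisons, capping the result at 1. A small Python sketch of that adjustment; the raw p-values from the mixed model are not given in the thread, so none are filled in here, and the function name is hypothetical:

```python
from itertools import combinations


def bonferroni(raw_p: dict) -> dict:
    """Bonferroni-adjust a dict of raw p-values: multiply each by the number
    of comparisons in the family and cap at 1."""
    m = len(raw_p)
    return {key: min(1.0, p * m) for key, p in raw_p.items()}


speakers = ["KEF", "Revel", "JBL", "OSD"]
pairs = list(combinations(speakers, 2))
print(len(pairs))  # 6
# Equivalent view: a raw p-value must be below 0.05 / 6 to stay significant at 0.05.
print(round(0.05 / len(pairs), 4))  # 0.0083
```

Bonferroni is conservative: it protects against a false positive anywhere in the family of six comparisons at the cost of some statistical power.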


I'm not sure that will work like this in the case of MF-HF dips, bass peaks or excessive distortion, especially 2nd harmonic. If there's some MF-HF coloration, you'll notice it immediately, and sometimes it turns out to be just unacceptable, while you can still enjoy less heavy genres on those speakers. The closer we come to pink noise in terms of spectral saturation, the harder it gets.

Even bass power compression might be less audible on already heavily compressed tracks.

The closer we come to pink noise, the farther we get from anything resembling "music". It's almost as hard for an untrained listener as troubleshooting an engine by ear.

lmao, yes, point 1 is very important.

For proper evaluation of any metal you'll need 1) good recordings and 2) listeners familiar with the genre.

Both issues are really troublesome.

To separate recording effects from speaker distortion, some symphonic metal with a live orchestra and choir and decent dynamic range (DR) might be preferable.

Something like The Ocean - Phanerozoic, Devin Townsend - Terria, Katatonia - Dead End Kings, Meshuggah - The Violent Sleep of Reason, Gojira - L'Enfant Sauvage or The Way of All Flesh, Karnivool - Sound Awake, Ne Obliviscaris - Portal of I or Urn, golden-age Opeth... there are hundreds of albums I would suggest before ever getting to stoner metal. That particular subgenre is not known for high-quality recordings.

ROOSKIE

Major Contributor

I suppose what I meant was that ABX is a simpler test to pull off and generate meaningful data with.

It would not be a simpler version of this test; it would be a different test.

This current test, while fun and appreciated for its entertainment value, might have been too complex to pull off at home in a casual setting, and in fact the understandable attempts to simplify it may have made it useless in terms of data.

There needed to be at least one "trick" to generate a reference for accuracy. For example, if playing the same speaker twice fooled everyone and they perceived different audio, then you would know something.

Some sort of independent variable: a placebo or similar.


Maybe. I think it's just a fun experiment, not scientific research, but to me it's more interesting that way: we know that in this flawed room and under these conditions, a significant number of participants (significant only under these conditions) had preferences in perceived fidelity that correlate with the preference score. Even if we can't reach wider conclusions than that, we know it was the case that time, in those conditions, with that choice of speakers. To me that's more interesting than trying to prove that some listeners can be fooled even though there are variations of roughly 3 dB across the frequency band. That would also only apply to these conditions, so I'm not sure it is more meaningful. And the frequency responses already tell us these speakers sound different. As I said, it's not a totally uninteresting test, just less interesting to me. In both cases this doesn't lead to a scientifically valid conclusion applicable to a larger scope.


ROOSKIE


The fact that the speakers correlate with the Harman score may mean nothing without an independent variable/control and some proof that the listeners can actually pass a placebo test. Interestingly, depending on how you look at it, in some ways the speakers do not correlate with the Harman score (such as the OSD speaker).

Anyway, I'm not trying to poo-poo fun making. I do fun testing all the time; however, all my comparison testing is rooted in subjective decisions due to my testing limitations, and I am suggesting here that this test is more subjective than objective. They are two ends of the same stick, and even the best tests must have subjective aspects to exist at all, so having subjectivity influence a test is normal. I do think this test carries quite a bit more subjective weight than is ideal, and it would be possible to address much of that. "Tricking" the listener is one of the most common methods used; placebos are standard in pharmaceutical trials. Maybe the word "trick" triggers you, but that trick is really important. You could instead, or additionally, use a reference such as a fulcrum speaker (which is why ABX is a good model; it gets harder with more than two speakers, though: think three-body problem).

Yes, I completely understand the fun of this test. Should it make the front page? If you reread your comment and really understand what "bias" is, you will see why, without a control, there is a lot of opportunity to create an exercise in expectation and confirmation bias. Entire multimillion-dollar studies are often called into question because of this sort of leaning toward a result.


After finishing Floyd Toole’s The Acoustics and Psychoacoustics of Loudspeakers and Rooms, I became interested in conducting a blind listening test. With the help of a similarly minded friend, we put together a test and ran 12 people through it. I’ll describe our procedures and the results. I’m aware of numerous limitations; we did our best with the space and technology we had.

All of the speakers in the test have spinorama data and the electronics have been measured on ASR.

Speakers (preference score in parentheses):

Kef Q100 (5.1)

Revel W553L (5.1)

JBL Control X (2.1)

OSD AP650 (1.1)

Test Tracks:

Fast Car – Tracy Chapman

Just a Little Lovin – Shelby Lynne

Tin Pan Alley – Stevie Ray Vaughan

Morph the Cat – Donald Fagen

Hunter – Björk

Amplifier:

2x 3e Audio SY-DAP1002 (with upgraded opamps)

DAC:

2x Motu M2

Soundboard / Playback Software:

Rogue Amoeba Farrago

The test tracks were all selected from Harman’s list of recommended tracks except for Hunter. All tracks were downmixed to mono, as the test was conducted with single speakers. The speakers were set up on a table in a small cluster. We used pink noise, Room EQ Wizard and a miniDSP UMIK-1 to volume-match the speakers to within 0.5 dB. The speakers were hidden behind black speaker cloth before anyone arrived. We connected both M2 interfaces to a MacBook Pro and used virtual interfaces to route output to the four channels. Each track was configured in Farrago to point to a randomly assigned speaker. This allowed us to click a single button on any track and hear it out of one of the speakers, so we could easily jump around and let participants compare any two speakers back to back on any track.
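The blinding and level-matching steps described above can be sketched in Python as a shuffled channel key plus a check that the measured levels fall within 0.5 dB of each other. This is only an illustration under assumptions: the function names, the channel numbering and the optional seed are hypothetical, and the original setup was configured by hand in Farrago.

```python
import random
from typing import Dict, List, Optional

SPEAKERS = ["Kef Q100", "Revel W553L", "JBL Control X", "OSD AP650"]


def blind_key(speakers: List[str], seed: Optional[int] = None) -> Dict[int, str]:
    """Randomly map output channels 1..N to speakers. The key stays hidden
    from the listeners until unblinding."""
    rng = random.Random(seed)
    order = list(speakers)
    rng.shuffle(order)
    return {channel: speaker for channel, speaker in enumerate(order, start=1)}


def levels_matched(spl_readings: Dict[str, float], tolerance_db: float = 0.5) -> bool:
    """True if the loudest and quietest speakers differ by less than tolerance_db."""
    values = list(spl_readings.values())
    return max(values) - min(values) < tolerance_db


key = blind_key(SPEAKERS)
print(sorted(key))  # [1, 2, 3, 4]
```

Passing a fixed seed makes the key reproducible, which helps when the assignment needs to be recorded and checked after unblinding.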

Our listeners were untrained, though we had a few musicians in the room and two folks that have read Toole’s book and spend a lot of time doing critical listening.

Participants were asked to rate each track and speaker combination on a scale from 0 to 10, where 10 represented the highest audio fidelity.

Here is a photo of what the setup looked like after we unblinded and presented results back to the participants:

View attachment 147701

Here are the results for everyone who participated.

Average rating across all songs and participants:

Revel W553L: 6.6

KEF Q100: 6.2

JBL Control X: 5.4

OSD AP650: 5.2

Plotted:

View attachment 147692

You can see that the Kef and Revel were preferred and that the JBL and OSD scored worse. The JBL really lacked bass and this is likely why it had such low scores. The OSD has a number of problems that can be seen on the spin data. That said, at least two participants generally preferred it.

Some interesting observations:

Hunter and Morph the Cat were the most revealing songs for these speakers, with Fast Car close behind. Tin Pan Alley was more challenging, and Just a Little Lovin was the most challenging. The research indicating that bass accounts for 30% of our preference was on display here: speakers with poor bass had low scores.

Here is the distribution of scores by song:

Fast Car:

View attachment 147689

Just a Little Lovin:

View attachment 147694

Tin Pan Alley:

View attachment 147695

Morph the Cat:

View attachment 147696

Hunter:

View attachment 147697

If we limit the data to the three most “trained” listeners (musicians, owners of studio monitors, etc) this is the result:

View attachment 147698

I’ve included the spin images here for everyone’s reference. ASR is the source for all but the Revel which comes directly from Harman. It says W552L, but the only change to the W553L was the mounting system.

View attachment 147699

View attachment 147700

My biggest takeaways were:

What the research to date indicates is correct; spinorama data correlates well with listener preferences even with untrained listeners.

Small deviations, and even some big deviations, in frequency response are not easy to pick out in a blind comparison of multiple speakers, depending on the material being played. I have no doubt a trained listener can pick things out better, but the differences are subtle, and in the case of the Kef and Revel it really takes a lot of data before a pattern emerges.

The more trained listeners very readily picked out the OSD as sounding terrible on most tracks, but picking a winner between the Kef and Revel was much harder. It is interesting to note that the Kef and Revel both have a preference score of 5.1, while the JBL has 2.1 and the OSD has 1.1.
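To put a number on the first takeaway, the average ratings and preference scores quoted in this post can be correlated directly. A Python sketch computing the Pearson correlation across the four speakers; with only four points (two of them tied at 5.1) this is a descriptive figure, not a significance test:

```python
from math import sqrt

# (preference score, average blind rating) as reported in this post
results = {
    "Revel W553L": (5.1, 6.6),
    "Kef Q100": (5.1, 6.2),
    "JBL Control X": (2.1, 5.4),
    "OSD AP650": (1.1, 5.2),
}


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


scores, ratings = zip(*results.values())
print(round(pearson_r(scores, ratings), 2))  # 0.97
```

A high r here mostly reflects the split between the two 5.1-rated speakers and the two low-rated ones; the mixed-model analysis elsewhere in the thread is a better guide to which individual differences are statistically reliable.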

One final note: all but one listener were between 35 and 47 years old, and it is unlikely any of us could hear much past 15 or 16 kHz. One participant was in their mid-20s.

It would have been very interesting to have the listeners rate the songs as well. Every song has people who love it and people who hate it. Surely that would bias the results. It would be interesting to see whether it did in this small sample.

Very nice, except that, based on the graphs posted, it is likely that no significant difference was achieved. You need a bigger sample…

Technically, you cannot state that the ratings WERE different, except that they appear to trend to some difference.


I find this hard to believe given the huge ranges and very small sample sizes.

I ran a quick F-test between a couple of samples. The first was JBL Control X against KEF Q100. That difference is clearly statistically significant, with a p value of 0.001.

The same test on KEF vs Revel gives a p value of 0.1, so it fails the typical p = 0.05 (95% confidence) threshold.
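The raw per-listener ratings are not posted, so the F-test above can't be reproduced here. As an alternative that makes no normality assumption, a permutation test compares the observed difference in mean rating with the differences obtained by randomly reshuffling the speaker labels. A Python sketch, assuming two independent groups of scores; it ignores the fact that every listener rated every speaker, which is why a paired or mixed-effects model is the better fit for this data. The function name and defaults are hypothetical:

```python
import random


def permutation_p_value(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation test for a difference in mean rating between two
    independent groups of scores."""
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        perm_a, perm_b = pooled[:len(a)], pooled[len(a):]
        diff = abs(sum(perm_a) / len(perm_a) - sum(perm_b) / len(perm_b))
        # Small tolerance so float rounding doesn't hide exact ties.
        if diff >= observed - 1e-12:
            extreme += 1
    # Adding 1 to both counts treats the observed labelling as one of the permutations.
    return (extreme + 1) / (n_perm + 1)
```

Like the F-test's, this p-value is the probability of a difference at least this large if the speaker labels made no difference; a p of 0.1 is not a 90% chance that the difference is real.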

Semla


That is not true. The ratings from this panel were significantly different for OSD vs KEF, OSD vs Revel and JBL vs Revel.

Pretty awesome experiment.

Unfortunately, it demonstrates that untrained/inexperienced listeners were unable to reliably differentiate their loudspeaker preferences. In other words, the predicted preference scores didn't really predict their blinded preferences; they were only predictive for a subset of your listener sample.

My takeaway was that predicted preference scores may not be terribly helpful for predicting the preferences of the average consumer. Ouch.

Yes, I really liked the test, and I also see this: untrained ears might easily tend to like a worse-measuring speaker with certain songs, not knowing that the song perhaps should sound less exciting on a better speaker, so a little preference bias is happening there...

Still, I like what was done here a lot. Great!!!

The second: one-speaker listening tests. No offence, but IMO it's just not right. You're totally missing real spatial image differences (provided by directivity, reflections, etc.; whatever the cause, they exist and you can't ignore them). At the same time, tonality is affected too (one speaker vs two speakers in a real-world room).

Please keep in mind that the spatial effect of stereo speaker listening is, ironically, its biggest weakness. IMO the glaring issue with spatial stereo listening is that it masks many speaker flaws with this distraction called soundstage/imaging; yes, these spatial effects are appealing, but they also represent a completely different sort of review. On the one hand, reviewing properly placed speakers in stereo better reflects real-world usage, but on the other, it becomes a review of the room and of the reviewer's competency in placement and room treatment for that specific speaker. Proper room treatment and speaker placement are so speaker-specific that I can only imagine the impossible effort of double-blind stereo speaker reviews where every speaker must be optimally placed, with room treatment optimized for each one! Given that each speaker pair will have different dispersion characteristics, and given that some reviewers prefer pin-point imaging over wide soundstaging while others prefer a diffusely HUGE soundstage with more ambiguous imaging, how do you normalize this fairly for all speakers in the same room? This is why subjective reviews of soundstage/imaging are, for all practical purposes, useless (albeit greatly entertaining): the reader can never reproduce the reviewer's room acoustics, and only by pure coincidence will a prospective buyer experience the soundstage/imaging the reviewer describes.

You are absolutely correct about the limitations of mono speaker reviews, but their biggest benefit is eliminating as many variables as possible, to avoid conflating appealing spatial effects with speaker design competency. These two objectives are distinct, and excelling in one does not necessarily raise performance in the other. So one must understand the single-minded objective of mono speaker reviews as described by F. Toole.

You forget the overriding "subjectivity test": at the end of the day, after weeks of research and listening tests, we end up with a speaker shortlist we are so proud of, only to hear our significant other simply say "they're all ugly, can you find something prettier?" Best to start with a list of pretty speakers, let the real decision-maker select the top 3, and then apply our research and listening skills among those.

LightninBoy

Addicted to Fun and Learning

Car washing (detailing?) is such an apt metaphor because many find it incredibly therapeutic as they pull out their car washing kit with clay bar, spritzer, sponges and exotic waxes, etc.

Semla


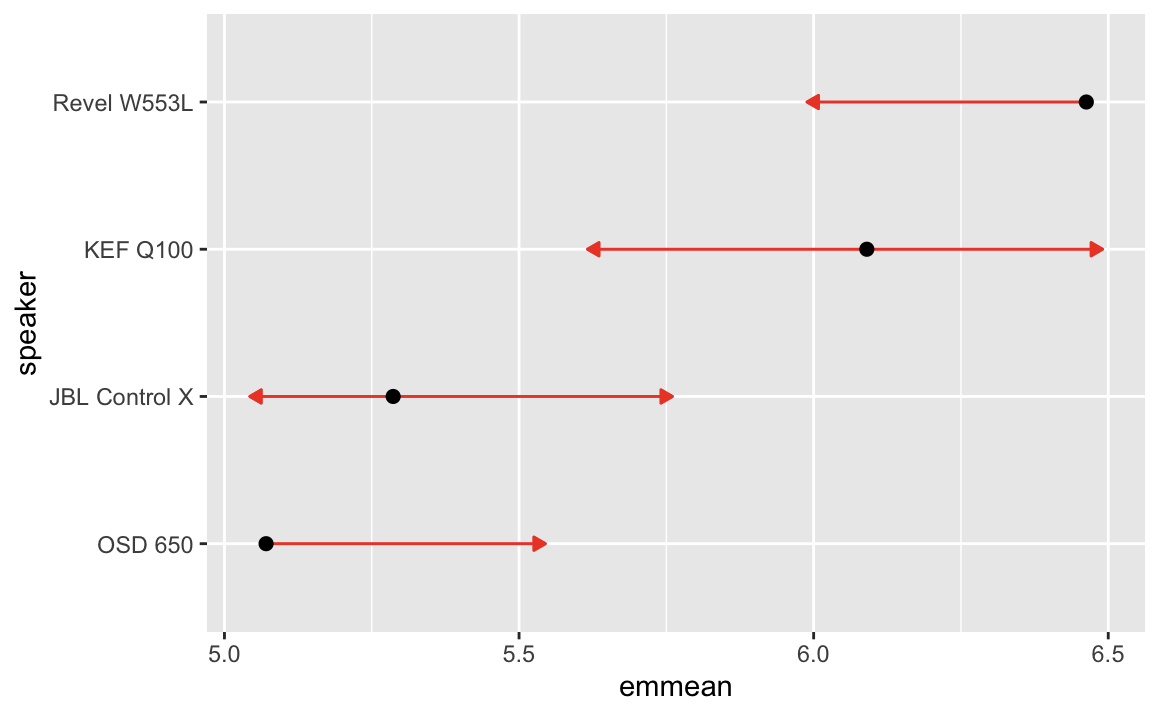

Statistical analysis of this experiment in one easily digestible graph:

You can compare two speakers by checking for overlap between the red arrows.


- If the arrows overlap: no significant difference between the rating given by the listeners.

- If they don't overlap: statistically significant difference between the rating given by the listeners.
