Hi folks, I'm sorry if this is the wrong place to ask, but I would like to understand the theory behind loudspeaker measurements.

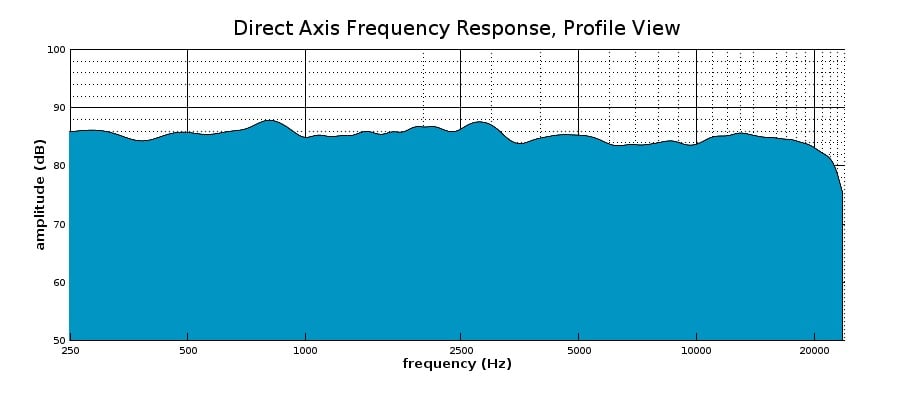

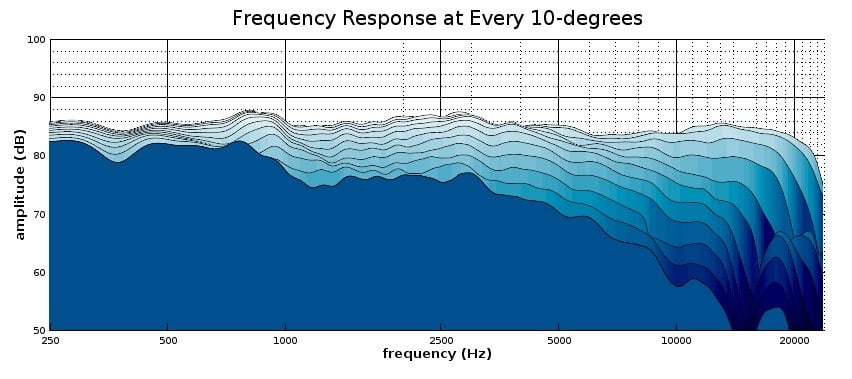

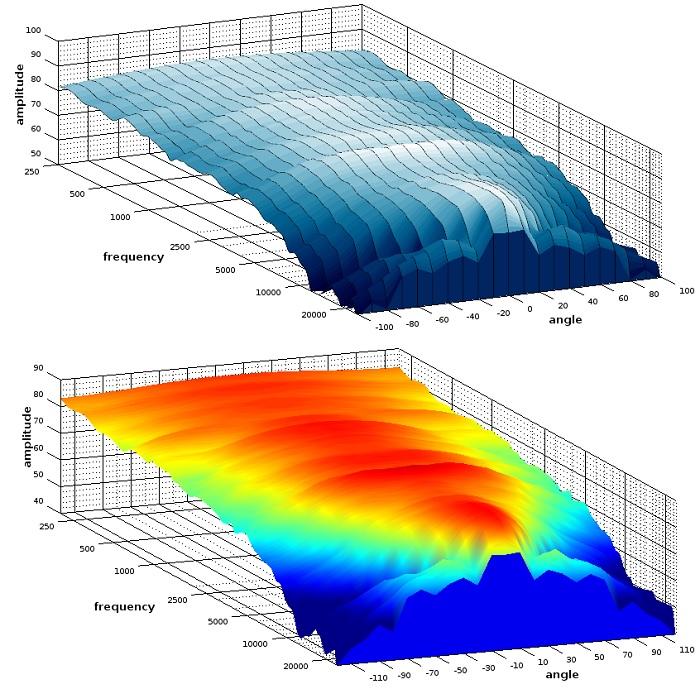

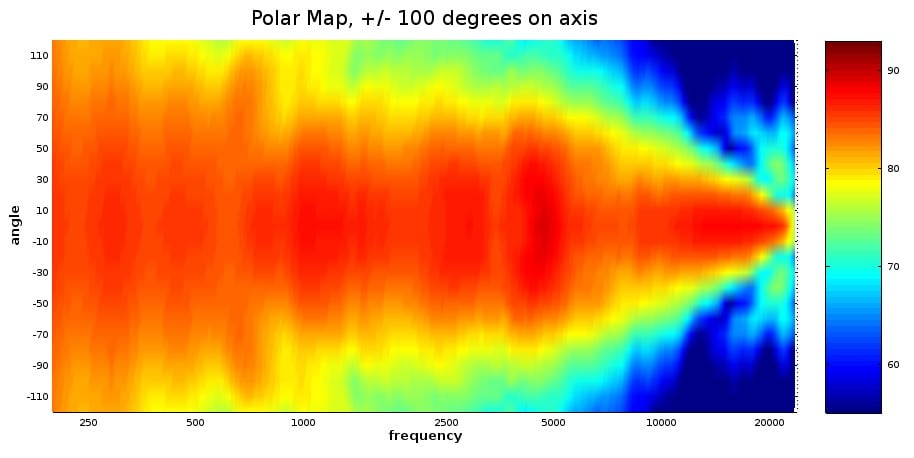

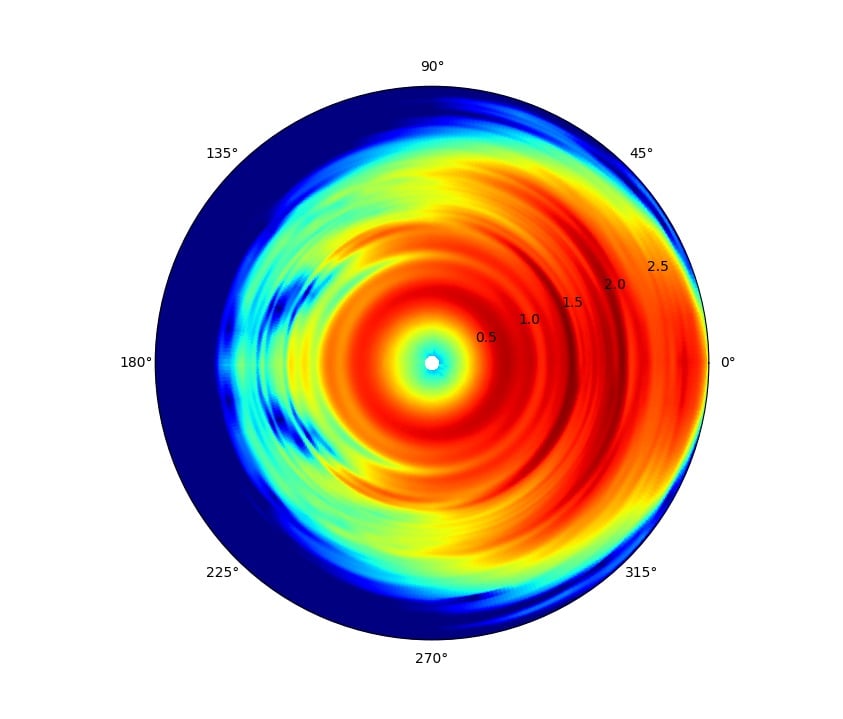

I understand DAC and amplifier measurements quite well, but those "3D direction plots" seem like magic to me. Could you please point me to some good articles or references? The language doesn't matter; Google Translate works. :)