Rationalism *is* philosophy, and is the opposite of empiricism. They are usually rational thought processes which are not based on philosophy - unless you are stuck in the middle ages.


NORMS AND STANDARDS FOR DISCOURSE ON ASR

- Thread starter svart-hvitt


No it is not. You may be confusing rationalism with logic.

Agreement (repeatability) is sought in scientific pursuit even if it is never totally resolved.

Disagreement is fundamental to philosophy and resolution is ephemeral, as displayed by your stance.

Philosophers can argue, ad nauseam, over which religion is correct or preferable, or, or, or...

Science just gets on with new discoveries.


You need to read this:

https://www.philosophybasics.com/movements_rationalism.html

If science just gets on with new discoveries, how does it make sense of what it finds? It's a multidimensional world, and a scientific experiment in itself cannot prove that it is dealing with all relevant dimensions of the 'space'. The philosophy/intellectual/rational part is crucial in understanding its limits. Experiments that examine existing speakers, for example, cannot on their own make the leap of understanding that says that perfectly uniform dispersion at all frequencies would be even better than a smooth trailing off. The person who makes that purely intellectual/philosophical/rational leap of understanding can then build a brand new speaker to test.

Too many people here think that 'data' alone is science, but data is dumb (in the UK sense of the word) in that it has no power of speech of its own. It is only useful when accompanied by a bit of philosophical reasoning.


Philosophy and science are related but not the same. Philosophy as a discipline does not contain the necessary checks and balances to make repeatable, testable conclusions. The process of formulating ideas is not science. Science is the process of eliminating ideas that don’t have a good explanatory value.

Humans are, by nature, explanation machines. We try to explain and predict the world around us. Explanations vary in quality, for example: magic, religion, geocentric view, audio cable break-in, etc. are all explanations, although all have a somewhat low predictive value.

Science is the process for selecting explanations with better predictive value. Philosophy is the mechanism for generating explanations, both those that can and those that cannot be tested. Any scientifically tested philosophy becomes science. Any scientifically falsified philosophy becomes trash, and any idea that cannot be tested remains philosophy. At least that’s how I see it.

svart-hvitt

Major Contributor

- Joined

- Aug 31, 2017

- Messages

- 2,375

- Likes

- 1,253

- Thread Starter

- #65


@tmtomh , thanks for your long reply. I read it a couple of times to add a few comments.

I will try and explain where I think we differ.

And sorry to all who like short posts. I am not a big fan of Twitter. Nor am I a fan of posts that are short just to shout repeatedly “four legs good, two legs bad”, as if science were a choir session. It takes longer and more space to develop a minority, diverging argument.

You wrote:

«So yes, this forum - Audio SCIENCE Review - is drawn to measurements. And for reasons probably as much to do with cost and convenience (size and ease of shipping, for one), it has tended to measure mostly source components, DACs, and amps. And as it has become known for that, it's drawn more members who are interested in those things».

The main point of my article is to open one’s eyes to good and bad science.

Akerlof (2019) wrote:

«Before describing the implications of our analysis, it is important to emphasize what has—and what has not been—said. The theoretical and empirical accomplishments of modern economics, obtained with Hard standards for the conduct of research, should be rightly celebrated. But such standards should not be uniformly applied to all economic problems; especially, they should not be applied to those problems for which those standards are too restrictive: for lack of evidence or because motivation significantly differs from standard economic assumptions. Different terrains call for different vehicles. A sailboat is useless in crossing a (riverless) desert; a camel is useless in crossing a sea».

So Akerlof is not criticizing Hard per se. Hard is good science. It’s the narrowness in methods he points the finger at, the lack of alternative methods that may create sins of omission; too much of a good thing. When you seem to equate science with measurements, you narrow - dumb down, actually - science to an exercise for Measurement Man.

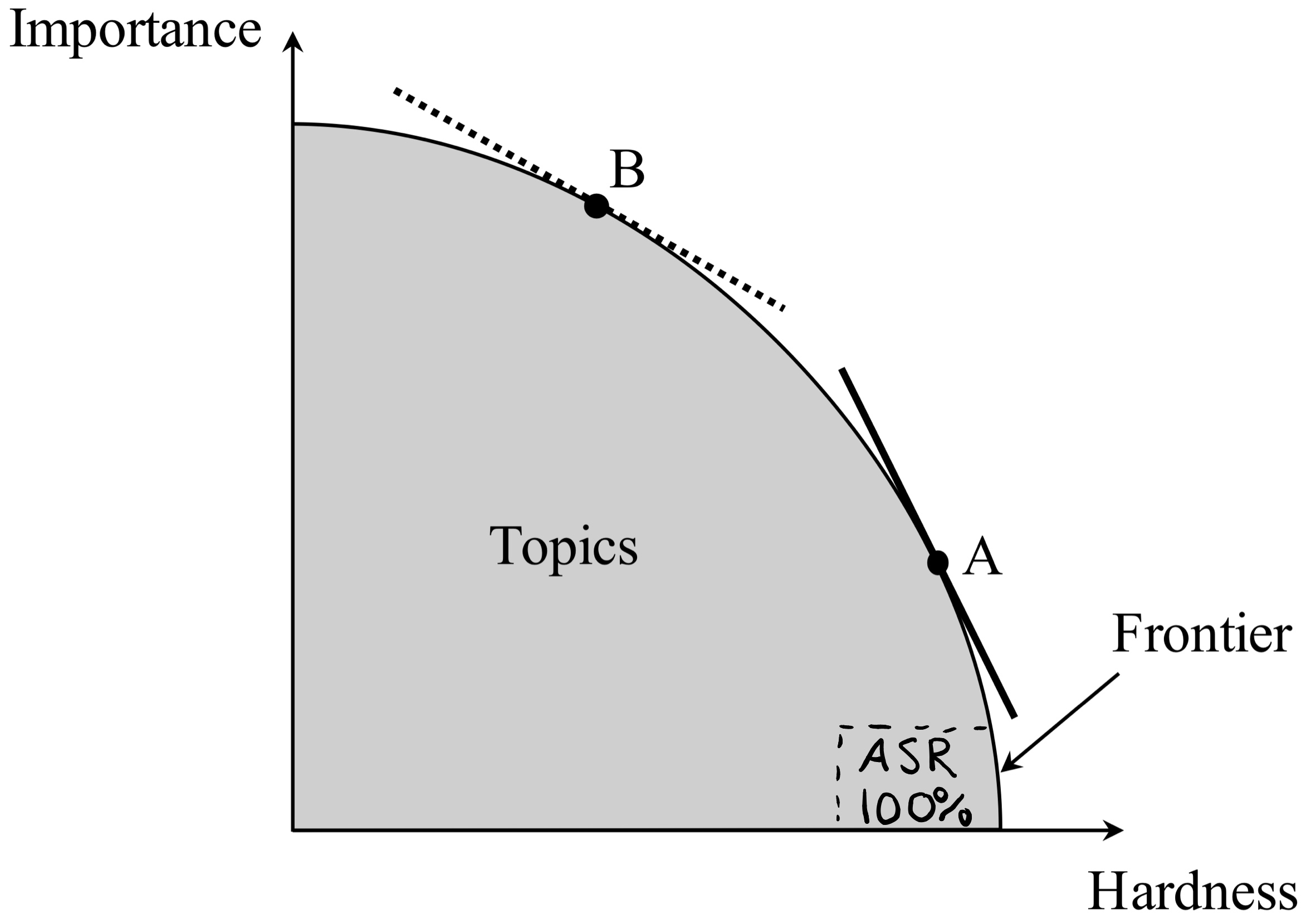

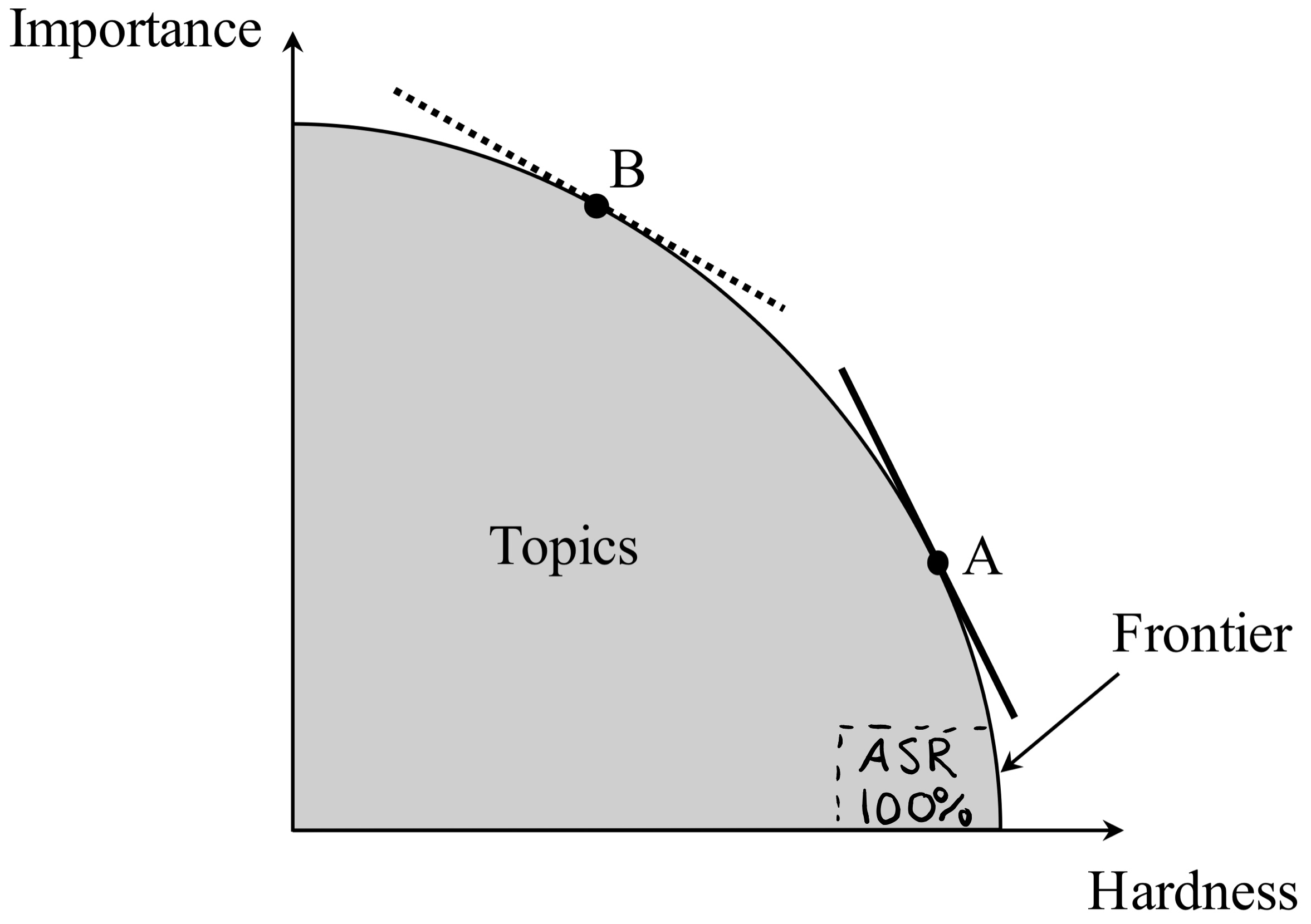

In the same spirit I am not criticizing measurements of DACs and other transparent electronics as such; I use these observations of an overweight of Low Importance to make the point that Akerlof’s figure 1 is relevant for ASR as well. See my application of observations from ASR to Akerlof’s figure 1 below.

Please note that Ellison (2002) does not predict only one outcome (like over-emphasis on Hard/Low Importance) as the outcome can go either way depending on social processes. I am making the case that all discussions on ASR gravitate towards the southeast in the figure above. Any attempt to lift the discussion northward is arrested as if by a thought police. I think this narrow focus on Hard measurements of low Importance on ASR carries over into other fields as well, like speaker discussions and other discussions of general interest. Let me provide an example.

Previously, I quoted a Danish JAES article from 2017, co-written by well-known professor Søren Bech, that opined:

«This disconnect between specifications and perception have made it challenging for acousticians and engineers (and consumers) to predict how a loudspeaker will sound on the basis of these specifications».

https://www.researchgate.net/public..._sound_quality_-_a_review_of_existing_studies

Now, let me quote AES Fellow John Watkinson:

«Traditional loudspeaker measurements are disreputable. It is widely known that several speakers having the same measurements can sound quite different. To a scientist this can only mean that the measurements are either insufficiently accurate or incomplete. Little wonder that extensive subjective assessment is traditionally thought necessary in the development of a loudspeaker».

Source: http://cyrille.pinton.free.fr/elect...nson-Putting_science_back_in_loudspeakers.pdf

So there seems to be a gap here between people like Watkinson and Bech - who speak about differences that were not apparent in readily available measurements - and people on ASR who act as if measurements yield a complete picture of reproduced sound. Is the ASR community interested in discussing inputs like those of Watkinson and Bech? If not, why is that? Please note that @Floyd Toole possibly gives an answer to the limits or obstacles of measurements when he says that a measurement device is not a brain and a set of ears. In other words, if audio measurements yielded a complete picture of sound, audio scientists would need to know more about the brain and ears than our best scientists from medical schools.

In your post, you wrote:

“Finally, with respect, I think there's a serious logical fallacy in proposing a "middle way" between science and listening based on all that you have written here. Apologies if I'm misunderstanding, but a "middle way" appears to presume that measurements are definitionally insufficient and so we must combine measurements and listening in some 50-50 (middle) way”.

A gorilla in the room is the fact that, for example, Harman listens too! A lot. Possibly more than anyone else. They have listening panels that give rise to measurement procedures based on statistical analyses. What came first: listening or science, listening or Idea? What do you think gave rise to Harman choosing listening to decide product development in the first place?

Having said that, I don’t know of any speaker producer who uses measurements only, having discarded all listening sessions. If you or somebody else knows of a speaker producer who has skipped all listening in the R&D phase, please inform me.

And let me add that the gold standard in modern audio research, controlled listening, has a potentially conservative effect. Let me use an example to illustrate my point, in the spirit of Akerlof’s note on the sailboat and the camel. If we go back to the 1800s, and develop a measurement regime for testing taxis, that testing regime would ask very different questions than a measurement regime for taxis 100 years later. The vox populi based measurement regime for horse-drawn taxis of the 1800s would probably conclude that the new motorcar taxis were of inferior quality (lack of space, repairs needed, few gas stations etc.). It’s just the more philosophical observer who would conclude that the testing regime for taxis of the 1800s was a limiting factor for New, not a factor that drove innovations and New. In other words, a certain testing regime - like vox populi - may be a conserving force for Old and an impediment for New. Is it coincidence that many people remark that modern speakers look very much the same as they used to?

Say a new speaker arrived in 2020, a true point source speaker. If one tested this version 1 of the new point source, one could conclude that the point source speaker is a bad design because it scores low on certain parameters where the Old got a better score. However, there may be ways to measure New, measurements that are not readily available, that would reveal that New is a better design than Old.

My point is, the controlled listening test method - vox populi - is a middle way and has always been so. Vox populi has many strong arguments in favor of it, and I am a big fan of it. But I also realize that vox populi has its limits. I am uncertain if vox populi is a practical driver of New in speaker design.

The most famous remark on the limits of vox populi a.k.a. democratic elections is associated with Churchill:

“Many forms of Government have been tried, and will be tried in this world of sin and woe. No one pretends that democracy is perfect or all-wise. Indeed it has been said that democracy is the worst form of Government except for all those other forms that have been tried from time to time.…”

I appreciate the thought you put into this, along with the references you cite (although not too many references - don't want to be part of the reference-creep documented in that study!).

There is one main takeaway I get from your post, which I wholeheartedly agree with: Many hobbyist forums tend to focus on certain components or aspects not because those aspects are decisive, but rather because those aspects are part of the forum's founding interest area. Put crudely, the forum is about hammers so all they want to talk about is nails. It's why the Computer Audiophile (now AudiophileStyle) forum has a contingent that wants to talk about things like how soundstage depth is impacted by SSDs vs spinning hard drives or USB vs optical vs coax. It's why high-end vinyl forums include a lot of talk about tonearm wiring and why vintage audio forums feature lots of passionate opinions about the relative sound quality of different receivers in the same manufacturer's model line from the same year. Those forums all draw people who are mainly interested in that stuff.

Even a place like Gearslutz, which IMHO has a very scientifically "healthy" focus on room treatments since the room is arguably the only thing more influential than the speakers, has that focus because it's a forum for DIYers; and acoustic treatments and studio-type room considerations are a major focus of the folks who are drawn to that forum.

So yes, this forum - Audio SCIENCE Review - is drawn to measurements. And for reasons probably as much to do with cost and convenience (size and ease of shipping, for one), it has tended to measure mostly source components, DACs, and amps. And as it has become known for that, it's drawn more members who are interested in those things.

However, where I part ways with your analysis is in your hypothesis that ASR focuses on "easy" measurements of DACs, amps, and so on because of larger cultural shifts in science and/or because a lot of members here are comforted by the certainty of "easy" (aka positivistic) measurements.

I think that hypothesis is weak. I think it's weak because for every comment here about how a DAC that has SINAD of 118 is better than one with SINAD of 112, you see a comment about how it's important to find amps with better SINAD because DAC measurements become pointless if there are no affordable amps with SINAD above the low 90s - in other words, folks here are very conscious of the importance of system balancing.
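To put rough numbers on the system-balancing point, here is a minimal sketch. It assumes the noise and distortion of each stage are uncorrelated and that both SINAD figures are referenced to the same signal level, which is a simplification of a real chain. The function name `combined_sinad_db` and the 92 dB amp figure (standing in for "low 90s") are illustrative assumptions, not measurements from this thread.

```python
import math


def combined_sinad_db(*stage_sinad_db):
    """Estimate the SINAD (dB) of a chain from per-stage SINAD figures.

    Assumption: each stage's noise+distortion is uncorrelated with the
    others and referenced to the same signal level, so the unwanted
    powers simply add.
    """
    # Convert each SINAD to a noise+distortion-to-signal power ratio and sum.
    total_ratio = sum(10 ** (-s / 10) for s in stage_sinad_db)
    return -10 * math.log10(total_ratio)


# Hypothetical amp at 92 dB SINAD paired with two different DACs.
for dac in (112, 118):
    chain = combined_sinad_db(dac, 92)
    print(f"DAC {dac} dB + amp 92 dB -> chain {chain:.2f} dB")
```

Under these assumptions both chains land just below 92 dB, and the 6 dB gap between the two DACs shrinks to a small fraction of a dB once the amp is in the path.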

And that awareness has in recent months led to increasing requests and interest for Amir to test speaker amps, including larger (and more expensive, and more of a hassle to ship back and forth) AB amps, multichannel receivers, and so on. And more recently, Amir himself has talked about speaker measurements. And again, even in that conversation the founding issue is the complexity and expense of doing proper measurements on speakers, precisely because of what you cite as the difficulty of properly measuring speakers.

So can we all as a group and community here benefit from being aware that not all established measurements fully describe human perception when it comes to the least linear and most distorted part of a sound system (the speakers)? Yes - transducers are, by their nature, the "lowest-fi" and hardest to measure in meaningful ways (that would include mics and headphones too).

But I think this community clearly has demonstrated a willingness and desire to learn, and so I would disagree with your claim that we are fiddling away with DAC SINAD and ignoring the sonic elephant in the room.

Finally, with respect, I think there's a serious logical fallacy in proposing a "middle way" between science and listening based on all that you have written here. Apologies if I'm misunderstanding, but a "middle way" appears to presume that measurements are definitionally insufficient and so we must combine measurements and listening in some 50-50 (middle) way.

I would argue against that - not because I don't value listening (it's the whole point!), but rather because IMHO measurements and listening are qualitatively different and so one cannot simply split the difference between them. If Speaker A measures better than Speaker B but I like Speaker B better, I don't say, "Can you show me a Speaker C that measures better than Speaker B but not as good as Speaker A," and hope that Speaker C will conversely sound better than Speaker A but not as good as Speaker B. That would be a middle way between measurement and listening, but it would end up with me buying a speaker that I didn't like as much as Speaker B.

Instead, I would either buy Speaker B, or I would double-check my source material and the listening space where I heard the speakers. After making a reasonable effort to rule out factors that might be making Speaker B falsely seem better, if I still liked Speaker B, I'd buy it. There is no middle way there.


svart-hvitt

Major Contributor

- Joined

- Aug 31, 2017

- Messages

- 2,375

- Likes

- 1,253

- Thread Starter

- #66

DOUBLE STANDARDS OR ONE GOLD STANDARD?

In a previous post, @amirm used the term “gold standard”:

“Sound evaluation by ear is the gold standard in audio science as long as it is controlled. You know, a proper blind AB comparison with levels matched”.

Source:

https://www.audiosciencereview.com/...-av8805-av-processor.6926/page-17#post-174818

The term “gold standard” is borrowed from economists and is a monetary concept, a regime under which you could exchange a country’s currency for physical gold. This monetary regime made it possible to compare the price of goods and services across country borders. In other words, the term could be seen as an attempt to make cross-border comparisons to facilitate economic activity, say trade. The interpretation of the term could also mean that a standard is state-of-the-art, so good it doesn’t make sense to settle for different standards.

As we know, the real gold standard was finally buried about 50 years ago (Nixon shock), a reminder that the gold standard was not necessarily so good it would exist forever.

I wonder if the use of the term “gold standard” on ASR is more of a rhetorical move, to settle a debate on methods so that nobody questions the choice of standard. Who in his right mind would question a gold standard?

In my last post I used the following adaptation of Akerlof’s figure 1 to illustrate my point that focus on ASR is on matters of Low Importance.

I believe the illustration above is not up for discussion as it’s based on observations for everyone to see. However, please speak up if you disagree.

My point is that ASR - without much debate - has accepted that the gold standard for evaluation of sound reproduction in point B in the figure is summed up as previously quoted (point B is High Importance, like speakers):

“Sound evaluation by ear is the gold standard in audio science as long as it is controlled. You know, a proper blind AB comparison with levels matched”.

Source: https://www.audiosciencereview.com/...-av8805-av-processor.6926/page-17#post-174818

However, in point A, where we find DACs and amps (i.e. factors of Low Importance), other standards are used, based on another test regime than the gold standard (this test regime probably gives much higher thresholds of audibility than the speaker listening tests by Harman would have done).

Does this mean we have different gold standards based on different situations?

A DAC or an amplifier is of little use if not used to reproduce sound coming out of speakers. In other words, why not use the gold standard as defined by @amirm above when evaluating DACs and amplifiers?

My point may seem pedantic, but that is the nature of science too where the devil is in the detail. Why not use one standard, the gold standard, across all tests?


DDF

Addicted to Fun and Learning

- Joined

- Dec 31, 2018

- Messages

- 617

- Likes

- 1,360

Exposing poorly engineered product, as Amir's tests frequently do, isn't "low importance".

While we can debate audibility thresholds, poorly engineered performance surely is a red flag that reliability is potentially a greater risk, due to the same poor engineering.

Secondly, after 30 years of engineering design and test in high tech mixed signal processing environments, I can confidently tell you that it's completely a fallacy to assume that "hard+high performance" doesn't exist: the continuum is a rectangle, not a quarter circle.


svart-hvitt

Major Contributor

- Joined

- Aug 31, 2017

- Messages

- 2,375

- Likes

- 1,253

- Thread Starter

- #68

Kudos to @amirm for exposing incompetence and greed. It’s a great service to the consumer.

However, keep in mind that @Floyd Toole did audibility tests for NRC decades ago and he never pointed to amplifiers as something that called his results into question.

So it seems like amplifiers (and of course, dacs) have quite low levels above which differences become audible in gold standard tests.

It’s a shame Harman has never (?) run gold standard tests of amplifiers (and dacs), particularly because they are in this business and their customers would have had an interest in this kind of research.

- Joined

- Aug 14, 2018

- Messages

- 2,773

- Likes

- 8,155

The purpose of science, very simply, is to produce repeatable practical outcomes from intellectual endeavours.

Philosophy may exercise the mind but I have yet to see it stand up to a similar test. Economists keep yearning for credibility in the same way as philosophers.

Philosophers and economists are renowned for trying to justify why previous tenets didn't work.

I have to agree with @Cosmik here - I don't know that I'd necessarily agree that repeatable experimental outcomes are the purpose of science. Repeatability is certainly the standard of experimental validity - nothing can be said to be scientifically established without independent repeatability, for sure. But the purpose of science is something that I would describe as a... philosophical matter.

I'm glad I'm just an engineer, I just do as I'm told, somebody gives me a job and I do it (weather and other commitments such as listening to music permitting, obviously).

One thing that I do find interesting in reading technical papers in my own field is the risk of false consensus and the effect it can have on engineering design and practice. About six years ago there was a particularly controversial issue where some results of lab tests called into question a principle that had underpinned a rather important safety requirement in an international regulation. Because of the reaction it provoked, my employer was asked by a government agency to repeat the tests and also to review the literature and the basis of the then consensus on the matter in question. The job ended up on my desk. Much to my surprise (I'm as guilty of following the herd as anyone), I repeated the tests and got the same results. Several times. I then went through the archives and the relevant technical papers. There were hundreds of papers on the subject, but what became apparent was that all of them were based on a single piece of original research and experiments done in the 1950s, which had been accepted by, and which underpinned, all subsequent papers on the subject. There appeared to be an overwhelming body of research and consensus when in fact it was all predicated on the efficacy of a single set of experiments done 60-odd years ago.

- Joined

- Feb 23, 2016

- Messages

- 20,771

- Likes

- 37,636

But was that research from 60 years ago correct, and the use of it since then appropriate?

A dac or an amplifier is of little use if not used to reproduce sound coming out of speakers. In other words, why not use the gold standard as defined by @amirm above when evaluating dacs and amplifiers?

Because it is much better to measure. Much faster, easier, simpler, more accurate. The listening has already been done, by identifying and defining requirement limits for accuracy in the signal processing. We know what is audible, and thus it is not necessary to listen to a dac or amplifier to know whether it will sound good enough.

Just finished testing some new amplifiers today, and of course everything was sorted using measurements. When I had completed the testing, I connected one amp to one of the main front channels in the media room, just to listen to it. Apart from sticking my head into the hf horn to verify that the noise level is tolerable, I do not expect the sound to be different. If it sounds different, something is very wrong. And indeed, the correlated noise did not have a solid center, and the drums on Keltner sounded quite diffuse and lacking in attack and tactility. Something was wrong, and that was of course phase inversion due to wrong polarity on the connected speaker. Once that was sorted, the sound was just like it should be, and the Keltner drums hit with a hard, physical punch when I turned up the volume.

Testing a speaker is something entirely different in complexity.

But was that research from 60 years ago correct, and the use of it since then appropriate?

Within limits yes. It was a product of its time, the problem was that new research indicated that there were significant limitations to the original theory. Fortunately the original theory erred on the side of caution (it resulted in an over engineered and conservative explosion prevention arrangement) but advances in CFD, high speed photography and a correction to the underpinning assumption from all those years ago altered our understanding of the events leading up to a particular type of explosion with consequent significant changes in detection and prevention. The people that did the revisionist research were subject to quite a lot of ridicule as it challenged such a deeply entrenched principle.

svart-hvitt

Major Contributor

- Joined

- Aug 31, 2017

- Messages

- 2,375

- Likes

- 1,253

- Thread Starter

- #74

I think you missed my point (or maybe I missed yours...).

My point is, if listening tests using special test tones say that you need, say, 120 dB SNR for transparency in amplifiers and dacs, while the “gold standard” would say that any improvement above 85 dB is inaudible when those amps and dacs are put into a full audio chain with speakers, then amplifiers and dacs that have 100 dB SNR are overengineered for the purpose.

Producers of amplifiers and dacs profit from this double standard. The user is better off with one standard that takes a more holistic approach in the test regime design.

And to repeat my overarching point: There cannot be one gold standard if it’s not used throughout.

Can we please stop inventing definitions or embellishing them without justification for rhetorical purposes when actual, simple dictionary definitions exist?

The attempt in this thread to construct a "personal definition" of "gold standard" from the primary literal definition of "gold standard" is a bit silly when the second definition fits the usage by Amir.

Am I the only person here who accepts dictionary definitions when they are appropriate? Does anyone here not accept the likelihood that someone like Amir normally uses words based on their dictionary definitions?

And I ask Amir - did you use "gold standard" as meaning anything more than an emphatic endorsement of a highly qualified benchmark?

------------------

Definition #2 of "gold standard" by Merriam-Webster is "benchmark sense 1a" which is:

Merriam Webster Definition of "benchmark"

1a: something that serves as a standard by which others may be measured or judged.

Does anyone here not accept the likelihood that someone like Amir normally uses words based on their dictionary definitions?

Seeing how he uses the word 'audiophile', I would say no.

In reality, like many other words, audiophile has a simple meaning - "a person who is enthusiastic about high-fidelity sound reproduction."

I try to use adjectives to narrow my definition when appropriate, because many people associate "audiophile" strictly with high-end subjectivists who are totally detached from the objective side of audiophilia. In the absence of adjectives, I can generally detect the subset of meaning in Amir's comments from context.

By the most basic definition, I have been an audiophile - but not a "high-end subjectivist" who buys into snake-oil-based idiocy - for 62 years. Hard to believe, but at age 77, my hearing is still reasonably good - at least good enough to thoroughly enjoy high-fidelity recorded music.

svart-hvitt

Major Contributor

- Joined

- Aug 31, 2017

- Messages

- 2,375

- Likes

- 1,253

- Thread Starter

- #78

For a more in-depth discussion of «gold standard» in academic research, please see Dr. Jurgen A H R Claassen’s essay:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC557893/#__ffn_sectitle

Excerpt:

«In a Medline search from 1955 onwards, the first emergence of the term—albeit in a different meaning—was in 1962, in an anonymous commentary in the Lancet. Entitled “Towards a gold standard,” it pleaded to set a standard for the use of gold salts in patients with rheumatoid arthritis. It may well have been Rudd who first introduced the “gold standard” in medicine in its current sense in 1979.1 In the following years, the number of publications that employed the term grew rapidly. This was much to the dismay of one biochemist, who thought the term was “presumptuous” for a biological test, since “the subject is in perpetual evolution [and] gold standards are by definition never reached.”2 He proposed abolishing the term “because the phrase smacks of dogma... After all, the financiers gave up on the idea of a gold standard decades ago.” He failed in his mission, however: since 1995, over 10 000 publications mentioned “gold standard”».

And I ask Amir - did you use "gold standard" as meaning anything more than an emphatic endorsement of a highly qualified benchmark?

No. I used the term generically as people do in conversation. For @svart-hvitt to run with that word is beyond pedantic. I have explained the reason for not doing DAC listening tests countless times. It is #1 in the FAQ for measurements, linked to from the home page: https://www.audiosciencereview.com/...rstanding-audio-measurements.2351/#post-65101

If he believes DACs should be double blind tested, he should take the initiative to conduct those tests, find the resources to run them, and publish the results. Until then, I would appreciate not hearing yet again "why do you measure." I do because hardly anyone in the industry does. Many of you can't do these measurements but can conduct blind tests. So if it is important to you, go and do it. You don't need a $30,000 instrument.

If all of this is cover for complaining for the sake of complaining (which is how it reads to me), then stop. None of this is constructive, and it is wasting the forum's resources.

- Joined

- Feb 23, 2016

- Messages

- 20,771

- Likes

- 37,636

Regarding the situation you are describing here, it is just a messy old world.

Let us say the gold standard is proper blind listening tests. You are acting as if that can give us a clear, black-and-white scale of "this is enough" and "that isn't". Yet it can't do that. There are things that are somewhat audible if you can test them blind for 200 trials, yet they will not show up in 20-trial tests. Others would never show up in 100,000 trials. That also suggests that your use of a gold standard has been misapplied in this case.
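The trial-count point can be illustrated with a quick binomial calculation. This is only a rough sketch, not something from the thread: it assumes a two-choice blind test, a 5% significance level, and a listener who really does hear a subtle difference and answers correctly 60% of the time. The 60% figure and the function names are illustrative assumptions.

```python
from math import comb


def binom_tail(n: int, k: int, p: float) -> float:
    """Probability of at least k correct answers in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def critical_count(n: int, alpha: float = 0.05) -> int:
    """Smallest score that pure guessing (p = 0.5) reaches with probability <= alpha."""
    for k in range(n + 1):
        if binom_tail(n, k, 0.5) <= alpha:
            return k
    return n + 1  # no score is significant (only happens for very small n)


def detection_power(n: int, p_true: float, alpha: float = 0.05) -> float:
    """Chance that a listener with true hit rate p_true passes the test."""
    return binom_tail(n, critical_count(n, alpha), p_true)


if __name__ == "__main__":
    # Assumed hit rate of 0.60 for a "somewhat audible" difference.
    for trials in (20, 200):
        print(trials, critical_count(trials), round(detection_power(trials, 0.60), 2))
```

Under these assumptions the 20-trial test misses such a listener most of the time, while the 200-trial test catches them most of the time, which is why no single pass/fail line falls out of blind testing by itself.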

The other thing I notice in your original post, regarding that large chart of pages and sources quoted in articles, is that engineering and manufacturing topics had the least growth. Others, like the social sciences, grew more, almost to the point that it looked like a creative venture not tied very closely to any reality. In between were areas that benefited from increased data not previously available in abundance, or, as in computer systems, areas where hardware really is allowing the creation of things not previously possible. I think whatever point that chart does or doesn't support is muddled by this variance.

I do agree with you that too much emphasis on DACs having SINAD of 110 dB+ vs only 100 dB is largely navel gazing. And maybe you are thinking of something like the following. We agree that if a 1 kHz test shows distortion and noise below -120 dB, the distortion is inaudible. Does this mean any DAC or amp with a worse result is audible? The answer is that it could likely be 20 or 30 dB worse and still be inaudible. So a nice thing to do might be to incorporate this masking curve as an overlay to 1 kHz test results. There is good reason to think even this is a fairly strict standard, as few individual tones in a music signal reach this level. And because of all the frequencies present in music, results could be even a bit worse than this criterion and still be transparent. Yet this relaxes the requirements much closer to those of the ear itself, instead of a maximally unassailable standard. Wouldn't this put a different spin on the importance of DACs with regard to the quarter circle chart in your original post?

@amirm, do you think an overlay like this for the spectrum of the 1 kHz test would be useful for DACs and power amps? The assumption would be that the maximum signal you test is in a system where it would result in 100 dB SPL.
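To put rough numbers on that assumption, here is a small sketch that converts a distortion or noise component measured relative to the full-scale test tone into an absolute level, given that full scale plays at 100 dB SPL. The 0 dB SPL comparison figure is only a commonly quoted approximation for the threshold of hearing in quiet near 1 kHz, used here as an assumption; it does not model the masking curve, which would relax the requirement further. The names are illustrative.

```python
PEAK_SPL_DB = 100.0            # playback level of the full-scale tone (assumption from the post)
QUIET_THRESHOLD_DB_SPL = 0.0   # rough hearing threshold near 1 kHz (assumption, no masking)


def component_spl(level_db_rel: float, peak_spl_db: float = PEAK_SPL_DB) -> float:
    """Absolute level of a component that sits level_db_rel dB relative to the test tone."""
    return peak_spl_db + level_db_rel


if __name__ == "__main__":
    for level in (-120.0, -100.0, -90.0):
        spl = component_spl(level)
        margin = QUIET_THRESHOLD_DB_SPL - spl  # positive means below the assumed threshold
        print(f"{level:7.1f} dB rel -> {spl:6.1f} dB SPL, margin {margin:+.1f} dB")
```

On these assumptions a component at -120 dB lands at -20 dB SPL, well under the assumed threshold even without masking, and one 20 dB worse lands right at it.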

So maybe, instead of showing how many DACs overstate their capabilities (while their actual performance is still clean enough), ASR could do something more important by finding those few that are really dirty. In fact, you will probably have difficulty finding any that actually sound dirty. It makes sense to continue testing them to uncover the charlatans claiming incredible performance at high prices while delivering performance that is sub-par for a $300 DAC.

Clean power amps, on the other hand, have been hard to find in the affordable range. And you have the added complexity of interfacing with a multitude of loads. I'm also reminded of those Swedish AES tests of series-connected amplifiers, where only one was ever found transparent in blind testing. I wish I knew more about what made the others audible. If someone can contact the people who published those, it would be very nice to have them take part in this forum.
