svart-hvitt


- - - warning ! ! ! - - - very long post, please take your time  - - -

- - -

Have the standards for discourse on ASR led us to a place where irrelevance is celebrated, while difficult questions are not pursued? Do the norms and standards on ASR lead to sins of omission?

I will present a model of sins of omission in the spirit of Ellison (2002) and named after Akerlof (2019). As you will see, the Akerlof model makes immediate sense for anyone interested in audio science too. From Akerlof (2019):

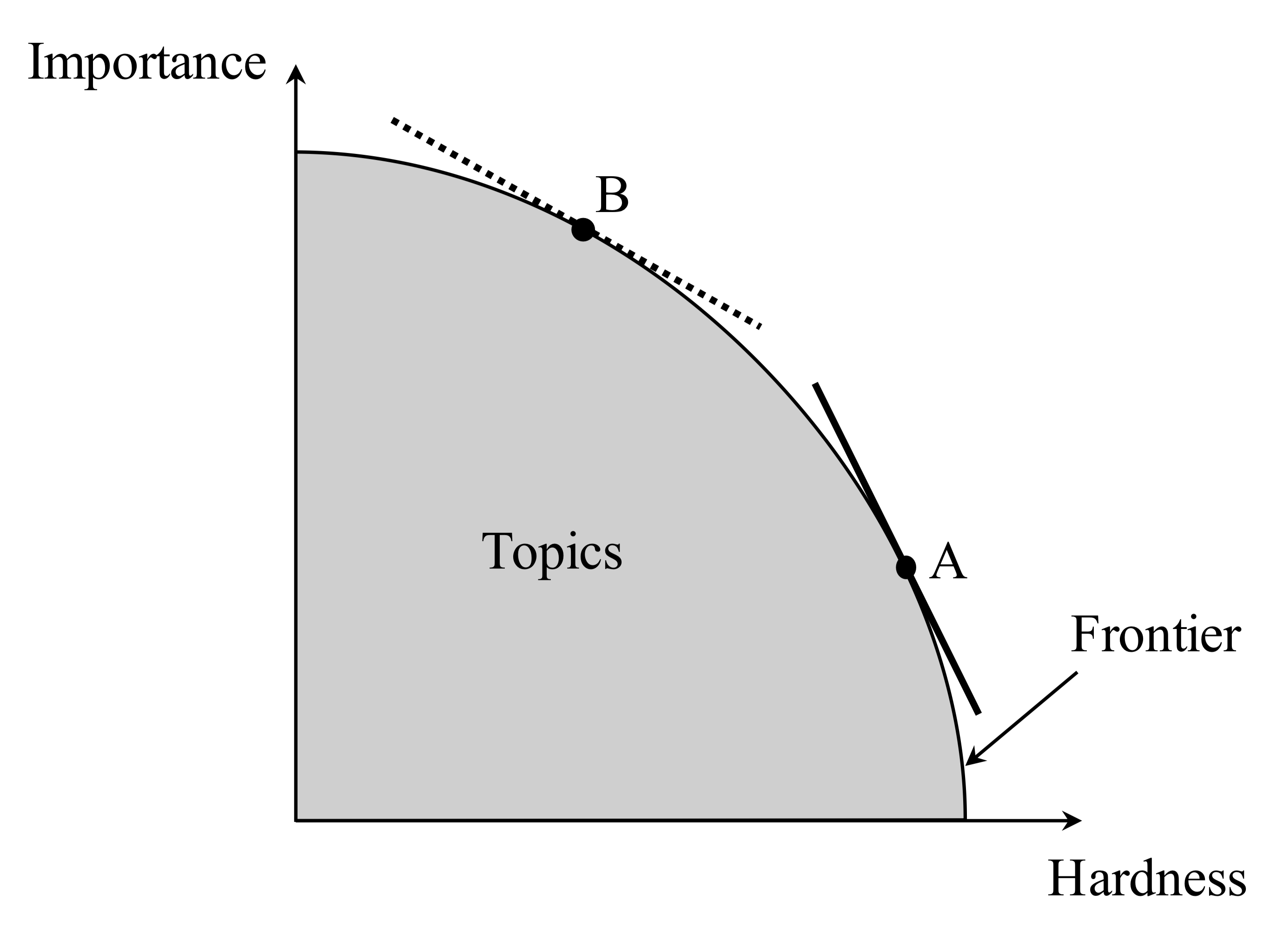

“An academic researcher selects from a set of possible research topics. These topics can be characterized along two dimensions: (1) Hardness (i.e., the ease or difficulty of producing precise work on the topic) and (2) Importance.

The researcher values both Hardness and Importance; but the weight he places on Hardness leads him to trade off Hardness and Importance in a non-optimal way. In this sense, he is biased.

Figure 1 depicts the solution to the researcher’s problem. While the researcher chooses a topic lying along the “frontier,” the frontier topic he chooses differs from the social optimum. His chosen topic (Topic A) is both Harder and less Important than the social optimum (Topic B).

If we aggregate across all researchers, we obtain a prediction about the “cloud” of topics the profession will address. Observe that there will be a set of Important but Soft topics which will not be pursued; in this sense, bias towards the Hard in the profession generates “sins of omission.””
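To make the trade-off in the quote concrete, here is a minimal numerical sketch. It is my own toy illustration, not Akerlof's formal model: the quarter-circle frontier, the linear utility and every weight below are assumptions chosen only to reproduce the qualitative picture (Topic A Harder and less Important than Topic B).

```python
import math

def best_topic(weight_on_hardness, steps=1000):
    """Pick the frontier topic that maximizes
    weight * hardness + (1 - weight) * importance.

    The frontier is ASSUMED to be a quarter circle
    (hardness = cos(angle), importance = sin(angle)); this shape is
    hypothetical and only meant to give a concave trade-off.
    """
    best, best_value = None, -math.inf
    for k in range(steps + 1):
        angle = (math.pi / 2) * k / steps
        hardness, importance = math.cos(angle), math.sin(angle)
        value = (weight_on_hardness * hardness
                 + (1 - weight_on_hardness) * importance)
        if value > best_value:
            best, best_value = (hardness, importance), value
    return best

# Hypothetical weights: society puts 0.3 on Hardness, the biased researcher 0.6.
topic_b = best_topic(0.3)  # social optimum
topic_a = best_topic(0.6)  # researcher's choice

# Aggregate over a profession whose members all overweight Hardness
# (weights 0.5 to 0.9, again purely illustrative).
cloud = [best_topic(w / 10) for w in range(5, 10)]
softest_pursued = min(h for h, _ in cloud)

print("Topic B (social optimum): hardness=%.2f importance=%.2f" % topic_b)
print("Topic A (researcher):     hardness=%.2f importance=%.2f" % topic_a)
print("Softest topic anyone pursues: hardness=%.2f" % softest_pursued)
```

In this sketch every pursued topic is Harder than Topic B, so the Important but Soft region of the frontier is never visited, which is the "sins of omission" outcome described in the quote.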

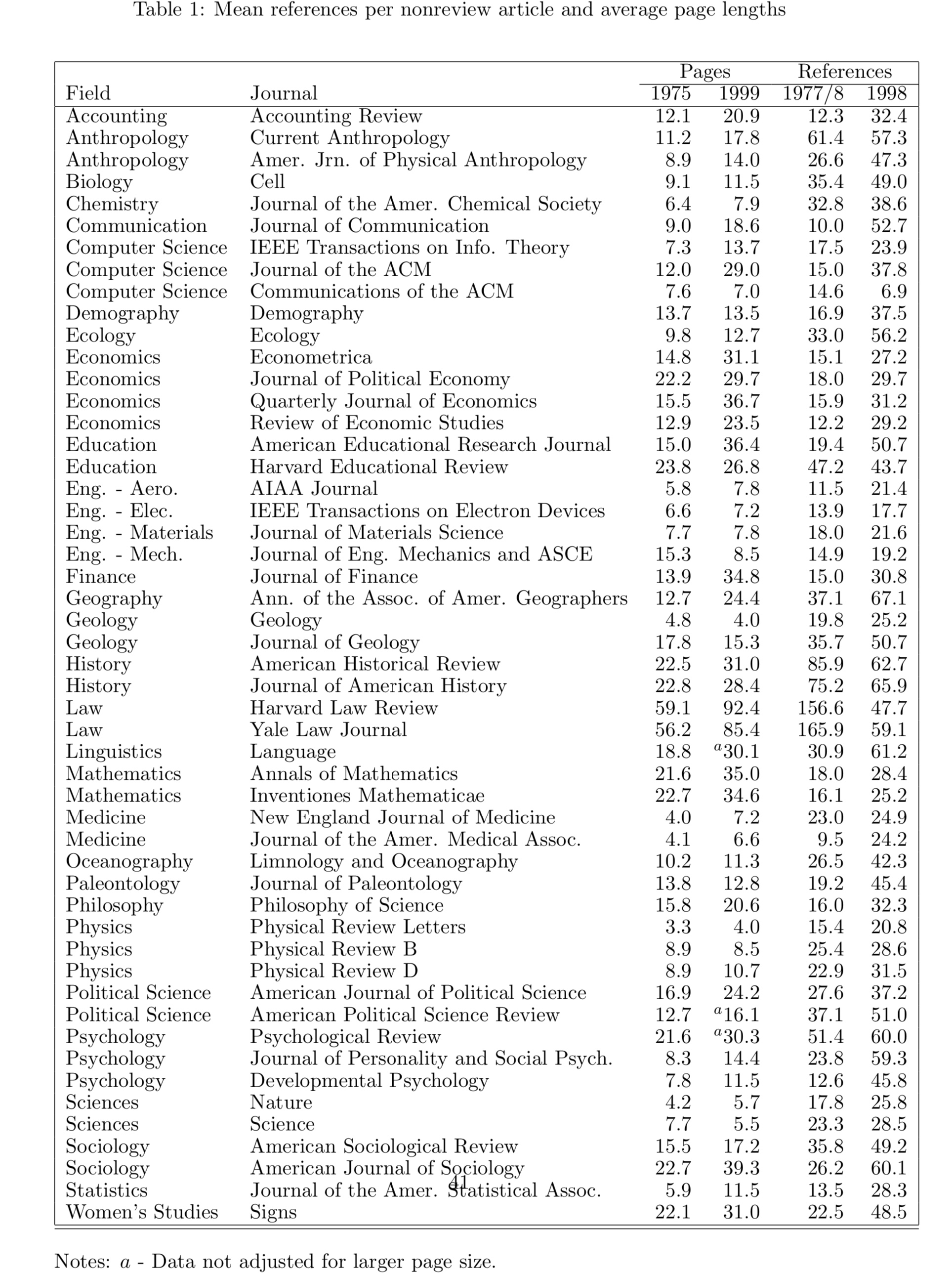

Ellison (2002), which inspired Akerlof’s model, documented an important shift in academic research. Academic articles are longer and take longer to publish than in previous decades. This may not be clear to younger researchers, but it is obvious to older professors and to anyone who looks at the form of older journal articles. The table from Ellison gives an overview.

Both Ellison and Akerlof are economists. However, Ellison noted:

“The phenomenon I am describing is not unique to economics. Similar trends can be seen in many other academic disciplines”.

Both Ellison and Akerlof argue that the evolving standards reflect gradually changing social norms.

“The slowdown of the process and the increased length of papers may reflect an increase in [Hardness]”, Ellison wrote (I have consistently used Akerlof’s concept of “Hardness” instead of Ellison’s more general “r-quality”).

“Furthermore, the emphasis on Hardness is likely at the expense of Importance”, Akerlof said.

Akerlof, recipient of the “Nobel prize” in economics in 2001, listed several consequences of the Hardness bias:

“Consequence 1. Bias against New Ideas. So far, we have classified topics according to their “Importance” and their “Hardness.” Another relevant dimension is whether topics are New or Old—or, in Kuhn’s (2012) terminology, whether they entail “normal" or “revolutionary” science. Not all New topics are Important; but, clearly, the most Important topics are New. Hardness bias inhibits acceptance of New topics in at least two different ways.

First, Old topics/paradigms have a variety of tools that aid precision: such as established terminologies, conceptual frameworks, and empirical methodologies. With bias toward the Hard, academics working within such accepted paradigms have an advantage, since they can borrow at will from such toolkits to state their ideas precisely. In contrast, those who are presenting a New idea, are disadvantaged, since they must develop their own tools. As expressed by Frey (2003, p. 212): "a new idea is less well-formulated than ... well-established ideas and therefore rejected for lack of rigor." In this way, demand for precision (for Hardness) impedes the introduction of New ideas.

Second, Hardness bias reduces the ability to challenge existing paradigms. According to usual procedure in economics, as in science more generally, Old ideas are only rejected when they are shown to be inferior in tests against New ideas”.

“Consequence 2. Over-specialization. Bias towards the Hard also encourages over-specialization. Generalists need to meet the standards of precision for multiple fields, while specialists need only meet the standards of one. Hence, it is easier to be Hard as a specialist than as a generalist”.

“Consequence 3. Evaluations based on publications in "Top Five". As we have seen, Hardness bias results in specialization. That, in turn, results in increased use of journal metrics for evaluations. In economics, this has especially taken the form of evaluations based on number of "Top Five" publications”.

So what does this mean for ASR?

I believe many on ASR suffer from “science envy” in the sense that they pick up bad habits (as well as some good habits) from their idols in science. In other words, I think the problems in the scientific community that Ellison (2002) and Akerlof (2019) discuss are reflected by ASR members too, possibly to a greater extent than in “real” science communities. One observation: @oivavoi is one of the most highly trained professionals on ASR in scientific discourse. Still, I get the impression that his writing sometimes creates friction where his intention was just to discuss an idea.

I have previously likened the focus on ASR to the so-called streetlight effect (https://en.m.wikipedia.org/wiki/Streetlight_effect):

“A policeman sees a drunk man searching for something under a streetlight and asks what the drunk has lost. He says he lost his keys and they both look under the streetlight together. After a few minutes the policeman asks if he is sure he lost them here, and the drunk replies, no, and that he lost them in the park. The policeman asks why he is searching here, and the drunk replies, "this is where the light is".”

My point was that DACs are now so transparent that one cannot argue that this part of the audio chain scores high on Importance. Amplifiers, too, have become so transparent that it should be obvious where the focus should be, i.e. on speakers.

DACs especially, but modern amplifiers too, fit nicely into the Ellison-Akerlof models, where we observe that something unimportant can be dealt with using the Hard tools. As we move towards something of real importance, however, like speakers, it’s questionable whether we have a readily available toolkit to separate good speakers from better speakers.

Not long ago, I quoted a JAES article from 2017:

“Loudspeaker specifications have traditionally described the physical properties and characteristics of loudspeakers: frequency response, dimensions and volume of the cabinet, diameter of drivers, impedance, total harmonic distortion, sensitivity, etc. Few of these directly describe the sound reproduction and none directly describe perception of the reproduction, i.e., takes into account that the human auditory system is highly non-linear in terms of spectral-, temporal-, and sound level processing (see, e.g., [3]). This disconnect between specifications and perception have made it challenging for acousticians and engineers (and consumers) to predict how a loudspeaker will sound on the basis of these specifications”.

Source: http://www.aes.org/tmpFiles/elib/20190728/18729.pdf

In an earlier article from 2016, one of the authors, Pedersen, wrote something which seems to have inspired the later JAES article:

“In other words, if you want to know how a loudspeaker sounds, it is more sensible to use a perceptual assessment of a loudspeaker’s sound based on a listening test rather than taking outset in the technical data”.

Source: https://assets.madebydelta.com/assets/docs/senselab/publications/TEKnotat_TN11_UK_v5.pdf

One of the same authors, Professor Bech, made an interesting observation almost a decade earlier, in 2009:

“It is evident that the dependence of listeners’ fidelity ratings on position (and room) is also important. Whilst the dipole is rated as worst in Position 2 (less than 1m from the back wall, central), it is rated as best when moved to Position 1 (over 1m from back and side wall). This suggests that the perceived influence of directivity is dependent on both position and room type”.

Source: https://www.researchgate.net/public..._sound_quality_-_a_review_of_existing_studies

Note that the often-quoted Harman research found dipoles to be no good, which raises the question: how robust is Hard?

Why are the above quotes important? They matter because we have many speaker measurements, yet these Hard data don’t help us predict how the loudspeakers will sound. I illustrated this point with the case method (see Flyvbjerg for a discussion of the case method: https://arxiv.org/pdf/1304.1186.pdf), where I asked ASR members to try to figure out which is the better speaker, the Revel Salon or the JBL M2. Both speakers are made by Harman, which ASR members regard as best in class when it comes to audio science, measurements and specifications. Besides, @Floyd Toole has written a book of 568 pages on the reproduction of audio during his time at Harman. Despite all the measurements and with Toole’s book in hand, nobody wanted to apply this science to predict how the M2 would sound compared to the Salon, or which speaker is the better one based on Hard data. How many more pages of Toole research do people need to predict the sound of two good speakers for which we have lots of Hard data?

Let me quote Ellison (2002) again to make my point clearer:

“The other basic observation I make about the static model is that a continuum of social norms are possible. If the community agrees that quality is very important, then authors will spend most of their time developing main ideas. If [Hardness] is very important, then authors spend very little time on ideas and focus on revisions. Nothing in the model prevents either extreme or something in the middle from being part of an equilibrium. Differences in social norms provide another potential explanation for differences across fields or over time”.

The quotes from the Danish articles serve to illustrate my point: many on ASR prefer to keep using Hard methods that cannot predict the sound and quality of speakers well enough to separate the good from the best, while new ideas for evaluating sound reproduction are met with hostility due to lack of Hardness. So Hardness wins over ideas. The same hostility to new ideas was on display when @oivavoi picked up the concept of “slow listening” from an AES paper (https://www.audiosciencereview.com/...f-benchmark-ahb2-amp.7628/page-45#post-190550). The article was waved off by ASR members due to lack of Hardness.

@Kvalsvoll criticised me for using the concept of “middle way” in a setting where science is celebrated. But isn’t the Ellison quote just above a reminder that there is a trade-off between Importance and Hardness, that Ideas and Data are two factors that need balancing, i.e. finding a middle way?

Audio is a science which combines multiple research fields: physics, psychoacoustics, psychology, neurology and more. Isn’t such a multidisciplinary field a place where ideas are even more important than in a narrower field, like say mathematics or “pure” physics? Do people on ASR welcome input from other fields, or is such input better omitted?

Toole’s 568-page book is also a reminder, isn’t it, that legacy research takes you only so far, and no further once speakers start to converge on the “old” consensus factors like “flat” and “smooth”? Are we in need of new ideas to design, measure and describe speakers that go beyond “good”? Will we ever be able to describe, say, the Salon and the M2 in ways that are meaningful to people and let us decide which speaker is the best for a majority of users and use cases?

And one more thing, to stir things up a little more. The celebrated research on ASR leans heavily towards vox populi, i.e. polls to find correlation between preferences and speaker attributes. What I find as fascinating as it is illogical is that people often celebrate certain vox populi processes (say market prices or speaker quality) while at the same time attacking the outcome of other vox populi processes (say democratic elections). Personally, I like the idea of vox populi as much as I am aware of its shortcomings.

Lastly, a point on behaviour in science, one that is made by both Ellison (2002) and Akerlof (2019). It concerns what decides what is important and what is not in research and science. Akerlof wrote:

“This tendency for disagreement on Importance is exacerbated by tendencies to inflate the Importance of one’s own work and deflate the Importance of others’”.

A similar observation by Ellison (2002):

“Section VII adds the assumption that academics are biased and think that their work is slightly better than it really is”.

One final note. Why is this post so long? By now you have learned that academic articles have become longer, but this post is not long because of my “science envy”. The post is long because it takes more space and effort to make a divergent point, to present ideas that collide with consensus and status quo in a social setting.

- - - - - - -

REFERENCES:

Ellison (2002): https://pdfs.semanticscholar.org/8429/7d83186f86c963c61556e1e2d954b8fbed37.pdf

Akerlof (2019): https://assets.aeaweb.org/asset-server/files/9185.pdf
