I have trouble finding digital audio I/O that is compatible with Raspberry Pi. The idea of building a standalone programmable DSP with a Raspberry Pi is amazing, but I would like it to support something like 12+ channels of input and 16+ channels of output to be as versatile as Mac/PC processing.

I hear you and have been meaning to get back to you. I am working with a company that develops custom digital audio I/O boards compatible with Raspberry Pi. I can't go into too much detail yet, but it will likely be a 1U chassis, and the number of digital I/O channels will be configurable. We're aiming for the "Swiss Army knife" of DSP I/O, supporting HDMI, TOSLINK, AES, DANTE, USB, and …

If you, or others, have specific requirements or features, please let me know or drop me an email.

In the meantime, I can share some test results from a client that needed DRC/convolution for a 7.1.4 Dolby Atmos playback studio for both music and movies. Since the studio was already outfitted with Lynx Studio gear, it was simple to build a standalone DSP processor on Windows using Lynx's AES16e 16-channel digital I/O card. But first, I had to prove that long tap length (65,536 taps) convolution FIR filters could be used for movies with less than 22ms of round-trip latency.

Long tap FIR filters (e.g. 65,536 taps) provide excellent low frequency control below 100 Hz, right where you need the power for effective digital room correction.
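As a rough rule of thumb, an N-tap FIR spans N / fs seconds and can shape the response on a grid of about fs / N Hz, which is why long filters are needed for control below 100 Hz. The sketch below just does that arithmetic; `fir_span` is an illustrative name, and the 4,096-tap row is an arbitrary shorter filter added only for comparison.

```python
def fir_span(n_taps: int, fs: int) -> tuple[float, float]:
    """Return (duration in seconds, approximate frequency resolution in Hz)."""
    return n_taps / fs, fs / n_taps

# 65,536 taps at the sample rates tested in the post, plus a shorter
# 4,096-tap filter at 48 kHz for comparison (illustrative only).
cases = [(65_536, 48_000), (65_536, 96_000), (65_536, 192_000), (4_096, 48_000)]

for n_taps, fs in cases:
    duration_s, resolution_hz = fir_span(n_taps, fs)
    print(f"{n_taps:6d} taps @ {fs / 1000:g} kHz: "
          f"{duration_s * 1000:7.1f} ms long, ~{resolution_hz:.3f} Hz resolution")
```

Note that the same tap count covers half the time at twice the sample rate, so 65,536 taps resolve about 0.73 Hz at 48 kHz but only about 2.9 Hz at 192 kHz.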

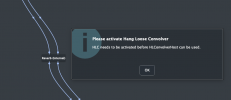

Note: for music/movie production, studios use DAWs with built-in latency compensation, which takes care of any latency reported by the VST3/AU plugins and adjusts the video so that audio and video stay in sync. The same approach is used for tracking/overdubbing. Some consumer applications, like JRiver, can also do this. But for "standalone" music and movie sound reproduction rooms that are not outfitted with DAWs, there is currently no way to report audio latency back to a media player application running in a separate process, which is an issue for lip sync. However, one can mitigate this by using a 0ms latency convolver and pro audio gear with direct audio connections via the ASIO protocol.
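The "0ms latency convolver" idea can be sketched as follows. The post doesn't describe how HLC is implemented, so this is a generic two-stage scheme, not HLC's method: the first B taps (the "head") are applied by direct convolution, and the remaining taps (the "tail") by a uniformly partitioned overlap-save FFT convolver. Because the tail's contribution to output block k depends only on input up to block k-1, it can be computed one block ahead, so the filter adds no delay beyond the audio buffer itself. The class name `TwoStageConvolver`, the 1,000-tap test filter, and the 64-sample block are hypothetical; the demo checks the streamed output against plain `np.convolve`.

```python
import numpy as np

class TwoStageConvolver:
    """Hypothetical zero-added-latency FIR convolver (head + partitioned tail)."""

    def __init__(self, h, block_size):
        h = np.asarray(h, dtype=float)
        B = block_size
        self.B = B
        self.head = h[:B]                       # applied by direct convolution
        tail = h[B:]                            # applied by FFT convolution
        n_parts = max(1, -(-len(tail) // B))    # ceil(len(tail) / B)
        tail = np.pad(tail, (0, n_parts * B - len(tail)))
        # Spectrum of each tail partition, zero-padded to FFT size 2B.
        self.H = np.array([np.fft.rfft(tail[p * B:(p + 1) * B], 2 * B)
                           for p in range(n_parts)])
        # Frequency-domain delay line: row p holds the spectrum of the
        # 2B-sample input segment ending with block k-p.
        self.fdl = np.zeros_like(self.H)
        self.prev = np.zeros(B)                 # previous input block
        self.tail_out = np.zeros(B)             # tail output for the next block

    def process(self, x_block):
        B = self.B
        x_block = np.array(x_block, dtype=float)  # copy, caller may reuse buffer
        seg = np.concatenate([self.prev, x_block])
        # Head: direct convolution using the current block plus history.
        # In a real engine this part can run sample by sample.
        y = np.convolve(seg, self.head)[B:2 * B] + self.tail_out
        # Tail for the NEXT block, computed only from input already received.
        self.fdl = np.roll(self.fdl, 1, axis=0)
        self.fdl[0] = np.fft.rfft(seg)
        Y = (self.H * self.fdl).sum(axis=0)
        self.tail_out = np.fft.irfft(Y, 2 * B)[B:]  # overlap-save: keep last B
        self.prev = x_block
        return y

# Demo: stream random input through a random decaying filter in 64-sample
# blocks and compare with ordinary linear convolution.
rng = np.random.default_rng(1)
h = rng.standard_normal(1000) * np.exp(-np.arange(1000) / 200)
x = rng.standard_normal(64 * 40)
conv = TwoStageConvolver(h, block_size=64)
y_stream = np.concatenate([conv.process(x[i:i + 64]) for i in range(0, len(x), 64)])
y_ref = np.convolve(x, h)[:len(x)]
print("max error:", np.max(np.abs(y_stream - y_ref)))
```

Real zero-latency engines typically grow the partition size along the tail (non-uniform partitioning) so a 65,536-tap filter doesn't need hundreds of small FFT partitions per block. What remains is a compute-time constraint: each block's tail work has to finish within one buffer period, which is one reason buffer sizes can't be shrunk arbitrarily.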

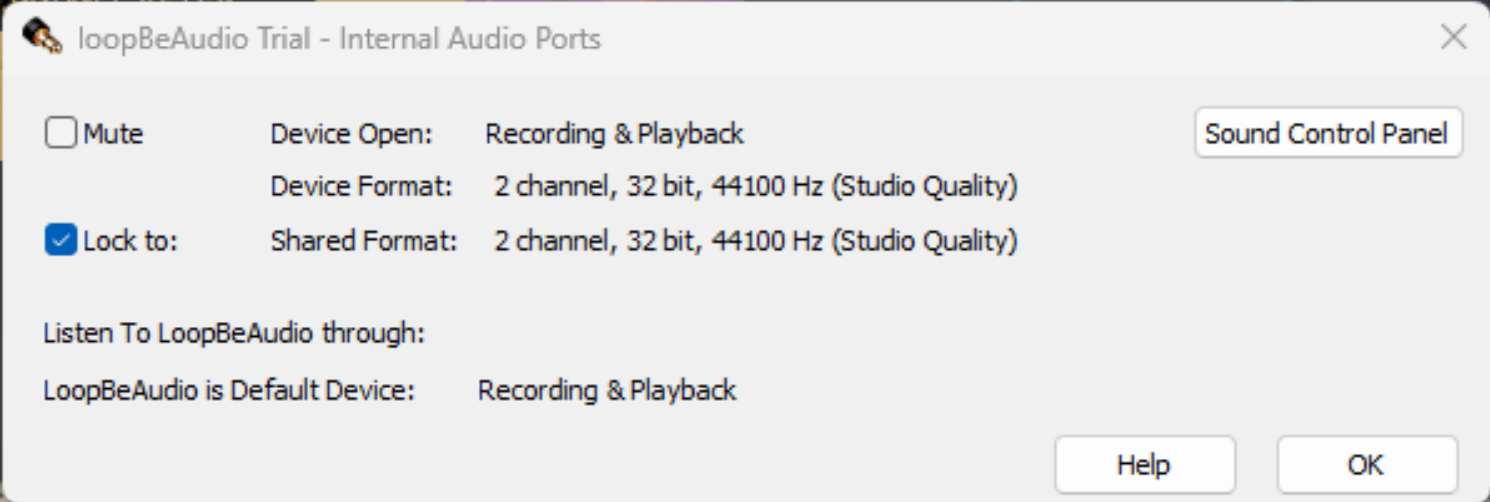

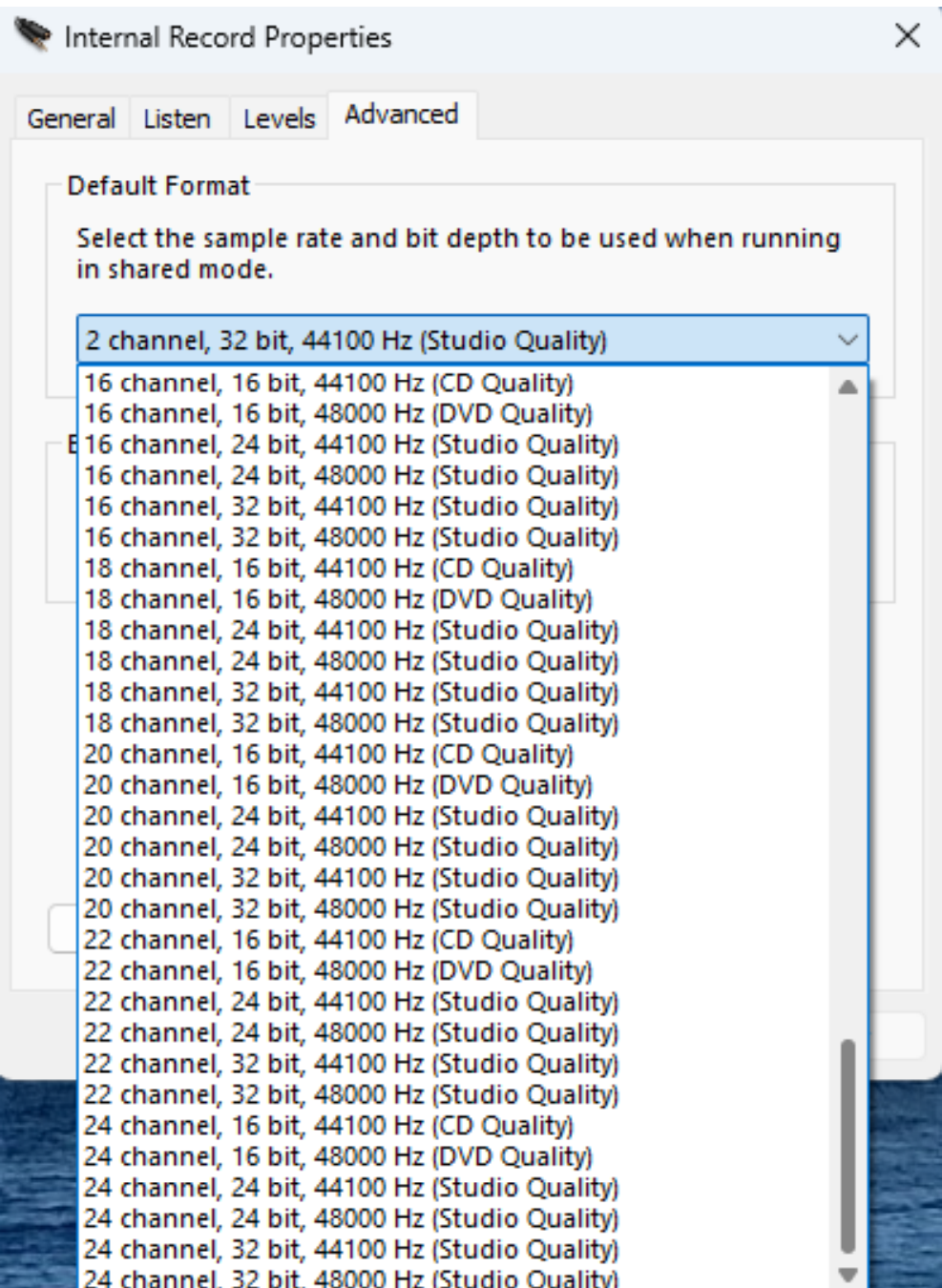

My test setup: computer 1 has a Lynx Hilo attached and runs a media player application to play music and movies. The Hilo's AES output feeds the AES input of the AES16e card in a second computer, where the HLConvolver "Host" application hosts the HLC VST3 convolution plugin. HLC processes a 65,536-tap minimum phase FIR filter, and the AES16e output goes back to the Hilo's AES digital input. The Hilo's DAC outputs are connected to amps/speakers and headphones. The AES16e was slaved to the Hilo; I tested syncing both from the AES digital input signal and from the Hilo's external clock output, and the results were identical.
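Minimum phase matters here because of latency. A symmetric (linear-phase) FIR delays everything by (N - 1)/2 samples, which for 65,536 taps at 48 kHz is about 683 ms, far beyond the 22 ms film threshold; a minimum-phase filter with the same magnitude response concentrates its energy at the start instead. The post doesn't say how the filter was generated, so the sketch below uses the textbook real-cepstrum (homomorphic) method in NumPy. `minimum_phase` is a hypothetical helper, not HLC's or any library's API, and real room-correction tools may use other methods.

```python
import numpy as np

def linear_phase_delay_ms(n_taps: int, fs: int) -> float:
    """Group delay of a symmetric (linear-phase) FIR: (N - 1) / 2 samples."""
    return 1000.0 * (n_taps - 1) / 2 / fs

def minimum_phase(h, n_fft=None):
    """Minimum-phase FIR with (approximately) the same magnitude as h,
    via the real cepstrum. A large n_fft keeps cepstral aliasing small."""
    h = np.asarray(h, dtype=float)
    if n_fft is None:
        n_fft = max(4096, 1 << int(np.ceil(np.log2(8 * len(h)))))
    # Log magnitude (floored to avoid log(0)) and its real cepstrum.
    log_mag = np.log(np.maximum(np.abs(np.fft.fft(h, n_fft)), 1e-12))
    cep = np.fft.ifft(log_mag).real
    # Fold the cepstrum onto non-negative quefrencies (the causal part).
    fold = np.zeros(n_fft)
    fold[0] = 1.0
    fold[1:n_fft // 2] = 2.0
    fold[n_fft // 2] = 1.0
    h_min = np.fft.ifft(np.exp(np.fft.fft(cep * fold))).real
    return h_min[:len(h)]

print(f"linear-phase delay, 65,536 taps @ 48 kHz: "
      f"{linear_phase_delay_ms(65_536, 48_000):.2f} ms")
print("minimum-phase version of [1, -2.5, 1]:", np.round(minimum_phase([1.0, -2.5, 1.0]), 6))
```

For example, the mixed-phase filter [1, -2.5, 1] (zeros at 2 and 0.5) becomes approximately [2, -2, 0.5] (both zeros at 0.5), which has the same magnitude response but with its energy moved to the front.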

Step 1 was to use REW on computer 1 to run a loopback sweep through the entire digital I/O chain, including convolution with a 65,536-tap minimum phase Dirac pulse FIR filter. As expected, the frequency and phase responses were perfectly flat, and REW's distortion tab showed about -150 dBFS across the frequency spectrum. Computer 1 is a 12-year-old i5 running Windows 10, loaded to the hilt with apps and services; even so, -150 dBFS is well below our hearing threshold. FYI, Microsoft offers Windows IoT for embedded applications, with short boot times and minimal processes. I did not get a chance to test with Windows IoT.
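A Dirac pulse filter is a good loopback reference because it is an identity: 0 dB magnitude and zero phase at every frequency, so any deviation in the measurement comes from the I/O chain rather than the filter. The sketch below checks that in NumPy and converts -150 dBFS to a linear fraction of full scale. The 1-second noise signal and the `fft_convolve` / `dbfs_to_amplitude` helpers are illustrative assumptions, not part of the original test.

```python
import numpy as np

N_TAPS = 65_536

# Dirac (unit impulse) FIR: 1.0 at t = 0, zeros elsewhere.
dirac = np.zeros(N_TAPS)
dirac[0] = 1.0

# Its frequency response: |H| = 1 (0 dB) and phase = 0 in every bin.
H = np.fft.rfft(dirac)
mag_db = 20 * np.log10(np.abs(H))
phase_rad = np.angle(H)

def fft_convolve(x, h):
    """Linear convolution via zero-padded FFTs, truncated to len(x)."""
    n = len(x) + len(h) - 1
    return np.fft.irfft(np.fft.rfft(x, n) * np.fft.rfft(h, n), n)[:len(x)]

def dbfs_to_amplitude(dbfs):
    """Convert a dBFS level to a linear fraction of full scale."""
    return 10 ** (dbfs / 20)

rng = np.random.default_rng(0)
x = rng.standard_normal(48_000)   # 1 s of test noise at 48 kHz (arbitrary)
y = fft_convolve(x, dirac)

print(f"max magnitude deviation: {np.max(np.abs(mag_db)):.2e} dB")
print(f"max |x - y|: {np.max(np.abs(x - y)):.2e}")
print(f"-150 dBFS = {dbfs_to_amplitude(-150):.2e} of full scale")
```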

Next, I played movies while reducing both interfaces' ASIO buffer sizes until dropouts/static were heard, then backed off until the audio was solid with no dropouts. This is done before measuring the round-trip latency so as not to "game" the numbers: it is easy to shrink the buffers to next to nothing when sending a single test pulse to get the lowest latency numbers, but then continuous audio won't play without massive dropouts/static.
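The buffer-tuning procedure above can be written as a simple search, run once per interface. This is only a sketch: `plays_clean` is a hypothetical stand-in for actually listening to continuous program material at a given buffer size, and the list of power-of-two sizes is an assumption about what the ASIO driver offers.

```python
from typing import Callable, Iterable, Optional

# Assumption: the ASIO driver offers power-of-two buffer sizes.
BUFFER_SIZES = (32, 64, 128, 256, 512, 1024, 2048)

def smallest_stable_buffer(sizes: Iterable[int],
                           plays_clean: Callable[[int], bool]) -> Optional[int]:
    """Step buffer sizes down from the largest; at the first size that drops
    out, back off and return the previous (larger) size that played clean.
    Returns None if even the largest size fails.

    `plays_clean` is hypothetical: it stands in for playing a movie at that
    buffer size and listening, not for sending a single test pulse.
    """
    best = None
    for size in sorted(sizes, reverse=True):
        if not plays_clean(size):
            break          # dropouts/static heard: stop reducing
        best = size        # solid playback: remember it and try smaller
    return best

def buffer_ms(size: int, fs: int) -> float:
    """Duration of one buffer in milliseconds."""
    return 1000.0 * size / fs

# Example with a stand-in predicate: pretend 128 samples and below drop out.
chosen = smallest_stable_buffer(BUFFER_SIZES, lambda s: s >= 256)
print(chosen, f"{buffer_ms(chosen, 48_000):.3f} ms per buffer at 48 kHz")
```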

I use the Round Trip Latency (RTL) Utility to measure end-to-end latency. The utility runs on computer 1 and reported the following latencies with convolution engaged on the second computer at different sample rates. This test was at a 96 kHz sample rate with a 65,536-tap minphase FIR filter:

Other tested sample rate latencies:

- 48 kHz: 14.875ms
- 192 kHz: 8.625ms
- Bypass at 48 kHz: 14.875ms
- 48 kHz with a 262,144-tap minphase FIR filter: 14.896ms
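Converting the reported round-trip latencies to samples makes them easier to compare across sample rates. This is plain arithmetic on the numbers listed above (samples = ms × fs / 1000); `ms_to_samples` is an illustrative name. The 262,144-tap run works out to about 715.0 samples versus 714.0 for the 65,536-tap and bypass runs at 48 kHz, a difference of 0.021 ms, or roughly one sample period at 48 kHz (about 20.8 µs), which may be within the utility's measurement resolution.

```python
def ms_to_samples(ms: float, fs: int) -> float:
    """Convert a latency in milliseconds to samples at sample rate fs."""
    return ms * fs / 1000.0

# Round-trip latencies as reported above: (label, sample rate, ms).
measurements = [
    ("48 kHz, 65,536 taps", 48_000, 14.875),
    ("192 kHz, 65,536 taps", 192_000, 8.625),
    ("48 kHz, bypass", 48_000, 14.875),
    ("48 kHz, 262,144 taps", 48_000, 14.896),
]

for label, fs, ms in measurements:
    print(f"{label:22s} {ms:7.3f} ms = {ms_to_samples(ms, fs):8.1f} samples")
```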

As an amateur drummer and ex-pro recording/mixing engineer, I am sensitive to drum sticks being out of sync with the video. Typically, during tracking/overdubbing one wants to keep the delay between live playing and track playback to about 5ms. But for movie watching, I find even the <15ms delay at 48 kHz almost imperceptible and soon forgotten. At 96 kHz, with <9ms round-trip latency using 65,536-tap minphase FIR filters, it was imperceptible to me, even watching Keith Moon's manic drumming. So all measured times were below the film threshold of 22ms (and the video threshold of up to 45ms).
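The comparison with the lip-sync thresholds is simple arithmetic; the sketch below computes the headroom of each measured round-trip latency against the 22 ms film and 45 ms video figures cited above. The 96 kHz run is reported only as "<9ms", so 9.0 ms is used as an upper bound (an assumption), and `headroom_ms` is an illustrative name.

```python
FILM_THRESHOLD_MS = 22.0    # film threshold cited above
VIDEO_THRESHOLD_MS = 45.0   # video threshold cited above

# Measured round-trip latencies from the post. The 96 kHz entry is only
# given as "<9ms", so 9.0 is used here as an upper bound (assumption).
rtl_ms = {
    "48 kHz, 65,536 taps": 14.875,
    "96 kHz, 65,536 taps": 9.0,
    "192 kHz, 65,536 taps": 8.625,
    "48 kHz, 262,144 taps": 14.896,
}

def headroom_ms(latency_ms: float, threshold_ms: float = FILM_THRESHOLD_MS) -> float:
    """How far a latency sits below a threshold (negative means over it)."""
    return threshold_ms - latency_ms

for label, ms in rtl_ms.items():
    print(f"{label:22s} film headroom {headroom_ms(ms):6.3f} ms, "
          f"video headroom {headroom_ms(ms, VIDEO_THRESHOLD_MS):6.3f} ms")
```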

If you have a Windows PC lying around, a used AES16e card is about $500, and with HLC ($129) you can have 16 channels of standalone digital I/O convolution (albeit AES only) running higher tap count filters for better low frequency control, for around $750. It works for music and video. It's just one approach of many.

Back to the Raspberry Pi based DSP product, I would be interested in hearing people’s requirements and/or features. Thanks.