Richx200

While reading Dr. Toole's book "Sound Reproduction" I came across the attached chapter 13.2.3, '"Room Correction" and "Room Equalization" Are Misnomers'. If I'm reading it correctly, Dr. Toole is saying that very good loudspeakers should not be equalized above the transition frequency (roughly 300-400 Hz), and that doing so could degrade a very good loudspeaker. All that is needed are adequate specifications on the loudspeakers: anechoic data would indicate that the loudspeaker is not responsible for the small acoustical interference irregularities seen in the curve.

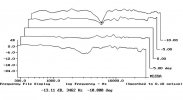

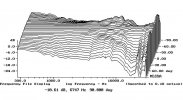

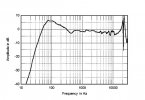

So I went about in search of the adequate specifications for my Focal Aria 936. I couldn't get them from Focal, and only one place had them;

So I copied the data (attached), and it looks like I may not need to use any automatic calibration at all. I think I can correct the low-frequency hump with the subwoofer's PEQ; if I need a little more, I may touch it up with equalization, but nothing above 400 Hz.
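For anyone wondering what a single PEQ band like that actually does, here is a minimal sketch using the widely used peaking-filter formulas from the RBJ Audio EQ Cookbook. The numbers are purely hypothetical (a 6 dB cut centered at 60 Hz with Q = 2, at a 48 kHz sample rate); they are not taken from my measurements, and the function names are just made up for illustration. It shows that a narrow low-frequency cut leaves everything above about 400 Hz essentially untouched.

```python
import cmath
import math


def peaking_biquad(f0_hz, gain_db, q, fs_hz):
    """Return normalized (b0, b1, b2, a1, a2) for an RBJ-cookbook peaking EQ band."""
    a_lin = 10.0 ** (gain_db / 40.0)          # square root of the linear gain
    w0 = 2.0 * math.pi * f0_hz / fs_hz        # center frequency in rad/sample
    alpha = math.sin(w0) / (2.0 * q)
    b0 = 1.0 + alpha * a_lin
    b1 = -2.0 * math.cos(w0)
    b2 = 1.0 - alpha * a_lin
    a0 = 1.0 + alpha / a_lin
    a1 = -2.0 * math.cos(w0)
    a2 = 1.0 - alpha / a_lin
    # Normalize so the leading denominator coefficient is 1.
    return (b0 / a0, b1 / a0, b2 / a0, a1 / a0, a2 / a0)


def gain_db(coeffs, f_hz, fs_hz):
    """Magnitude response of the biquad at f_hz, in dB."""
    b0, b1, b2, a1, a2 = coeffs
    z_inv = cmath.exp(-1j * 2.0 * math.pi * f_hz / fs_hz)
    num = b0 + b1 * z_inv + b2 * z_inv * z_inv
    den = 1.0 + a1 * z_inv + a2 * z_inv * z_inv
    return 20.0 * math.log10(abs(num / den))


# Hypothetical band: 6 dB cut at 60 Hz, Q = 2, 48 kHz sample rate (assumed values).
FS = 48000.0
band = peaking_biquad(60.0, -6.0, 2.0, FS)

for freq in (30.0, 60.0, 120.0, 400.0, 4000.0):
    print(f"{freq:7.0f} Hz: {gain_db(band, freq, FS):+.3f} dB")
```

With a real system the center frequency, Q, and gain would come from in-room measurements at the listening position, not from guesses like these.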

I hope I'm right about what Dr. Toole is saying, and that this information may be valuable to others. Please excuse the sloppy attachments; I'm not very good at copying pages from books.

Attachments

- AutomaticRoom Correction Toole Part 1.jpg (112.8 KB)
- Focal Aria 936 Vertical Response.jpg (26.3 KB)
- Focal Aria 936 Lateral Response.jpg (49.6 KB)
- Focal Aria 936 Anechoic Responsejpg.jpg (49.8 KB)
- 13.2.jpg (206.6 KB)
- 12.3.jpg (209.3 KB)
- AutomaticRoom Correction Toole Part 2.jpg (339.9 KB)