thewas


Room curve targets

Every so often it is good to review what we know about room curves, targets, etc.

Almost 50 years of double-blind listening tests have shown persuasively that listeners like loudspeakers with flat, smooth, anechoic on-axis and listening-window frequency responses. Those with smoothly changing or relatively constant directivity do best. When such loudspeakers are measured in typically reflective listening rooms, the resulting steady-state room curves exhibit a smooth downward tilt. This tilt is caused by the frequency-dependent directivity of standard loudspeakers: they are omnidirectional at low bass frequencies and become progressively more directional as frequency rises, so more energy is radiated at low than at high frequencies. Cone/dome loudspeakers tend to show a gently rising directivity index (DI) with frequency, and well-designed horn loudspeakers (like the M2) exhibit quite constant DI over their operating frequency range. There is no evidence that either approach has an advantage over the other - both are highly rated by listeners.
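The link between rising directivity and reduced radiated energy can be illustrated with the sound-power directivity index as it is usually defined for spinorama (CTA-2034) data: DI = listening window (dB) minus sound power (dB). A minimal sketch, assuming that definition; the DI values below are invented for illustration and do not describe any real loudspeaker.

```python
def sound_power_from_di(listening_window_db, di_db):
    """Sound power (dB) implied by a listening-window response and a
    sound-power directivity index, assuming DI = LW - SP."""
    return [lw - di for lw, di in zip(listening_window_db, di_db)]

# Hypothetical values only: a flat listening window with a gently rising DI,
# roughly the cone/dome pattern described above.
freqs_hz = [100, 1000, 10000]
lw_db = [0.0, 0.0, 0.0]
di_db = [1.0, 5.0, 9.0]
sp_db = sound_power_from_di(lw_db, di_db)
print(sp_db)  # [-1.0, -5.0, -9.0]
```

With a flat listening window, every dB of added DI is a dB less radiated power, which is why the reflected energy in a room falls with rising frequency.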

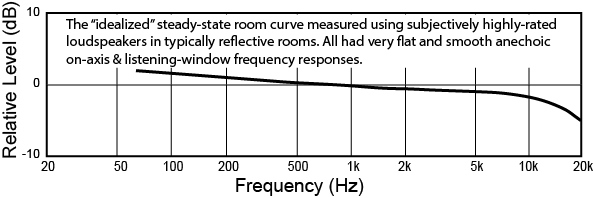

Figure 12.4 in the third edition of my book shows the evolution of a steady-state "room curve" using very highly rated loudspeakers as a guide. The population includes several cone/dome products and the cone/horn M2. The result is a tightly grouped collection of room curves, from which an average curve is easily determined. It is a gently downward-tilted line with a slight depression around 2 kHz - the consequence of the nearly universal directivity discontinuity at the woofer/midrange-to-tweeter crossover. I took the liberty of removing that small dip and creating an "idealized" room curve, which is attached. The small dip should not be equalized, because doing so would alter the perceptually dominant direct sound.
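For anyone building a target in software, a "gently downward-tilted line" can be sketched as a straight line in dB on a logarithmic frequency axis. This is a minimal sketch under stated assumptions: the post gives no numeric slope, so `slope_db_per_octave` is a free parameter, the 1 kHz reference point is arbitrary, and the real shape should be read from the attached figure.

```python
import math

def tilted_target_db(freq_hz, slope_db_per_octave, ref_hz=1000.0):
    """Straight-line target in dB on a log-frequency axis, 0 dB at ref_hz.

    slope_db_per_octave is an assumed, user-chosen parameter; it is not a
    value taken from the idealized curve in the post.
    """
    return slope_db_per_octave * math.log2(freq_hz / ref_hz)

# Illustrative slope only.
for f in (100, 1000, 10000):
    print(f, round(tilted_target_db(f, -1.0), 2))
```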

It is essential to note that this is the room curve that would result from subjectively highly rated loudspeakers. It is predictable from comprehensive anechoic data (the "early reflections" curve in a spinorama). If you measure such a curve in your room, you can take credit for selecting excellent loudspeakers. If not, it is likely that your loudspeakers have frequency response or directivity irregularities. Equalization can address frequency response issues, but cannot fix directivity issues. Consider getting better loudspeakers. Equalizing flawed loudspeakers to match this room curve does not guarantee anything in terms of sound quality.
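In CTA-2034 spinorama data, the early-reflections curve is formed by averaging five reflection groups (floor, ceiling, front wall, side walls, rear wall), each of which is itself derived from anechoic measurements at several angles. The sketch below takes those five curves as given inputs and averages them point by point on a power basis. Treat the averaging domain as an assumption to check against the standard; the function names are hypothetical.

```python
import math

def energy_average_db(curves_db):
    """Average several dB curves point by point on a power (energy) basis."""
    n = len(curves_db)
    out = []
    for levels in zip(*curves_db):
        mean_power = sum(10 ** (lvl / 10) for lvl in levels) / n
        out.append(10 * math.log10(mean_power))
    return out

def early_reflections_db(floor, ceiling, front_wall, side_wall, rear_wall):
    """Early-reflections curve as the average of five reflection groups,
    each given as a list of dB values on a common frequency grid."""
    return energy_average_db([floor, ceiling, front_wall, side_wall, rear_wall])
```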

When we talk about a "flat" frequency response, we should be talking about anechoic on-axis or listening window data, not steady-state room curves. A flat room curve sounds too bright.

Conclusion: the evidence we need to assess potential sound quality is in comprehensive anechoic data, not in a steady-state room curve. It's in the book.

The curve is truncated at low frequencies because the in-situ performance is dominated by the room, including loudspeaker and listener locations. With multiple subwoofers it is possible to achieve smoothish responses at very low frequencies for multiple listening locations - see Chapters 8 and 9 in my book. Otherwise there are likely to be strong peaks and dips. Peaks can be attenuated by EQ, but narrow dips should be left alone - fortunately they are difficult to hear: an absence of sound is less obvious than an excess. Once the curve is smoothed, there remains the decision of what the bass target should be. Experience has shown that one size does not fit all. Music recordings can vary enormously in bass level, especially older recordings - this is the "circle of confusion" discussed in the book. Modern movies are less variable, but music concerts exhibit wide variations. The upshot is that we need a bass tone control, and the final setting may vary with what is being listened to, and perhaps also with personal preference. In general, too much bass is a "forgivable sin", but too little is not pleasant.
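The "attenuate peaks, leave dips alone" rule can be expressed as a cut-only correction computed on an already smoothed bass response. A minimal sketch, assuming the measured and target curves share one frequency grid; it is deliberately more conservative than the advice above, because it declines to boost any dip, not only narrow ones. The function name is hypothetical.

```python
def cut_only_correction_db(measured_db, target_db):
    """Per-frequency EQ gain that pulls peaks down to the target but never
    boosts, so every dip is left alone (a conservative simplification)."""
    return [min(0.0, t - m) for m, t in zip(measured_db, target_db)]

# Hypothetical smoothed bass readings relative to a 0 dB target.
print(cut_only_correction_db([6.0, -10.0, 1.0], [0.0, 0.0, 0.0]))
# [-6.0, 0.0, -1.0]
```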

An addendum: if you think about it, many (most?) suppliers of "room EQ" algorithms do not manufacture loudspeakers. If they did, they might treat them more kindly. This is not a blanket statement, but one with significant truth. The stated or implied sales pitch is: give me any loudspeaker in any room and my process will make it "perfect". A moment of thought tells you that this cannot be true.

Check out: Toole, F. E. (2015). “The Measurement and Calibration of Sound Reproducing Systems”, J. Audio Eng. Soc., vol. 63, pp. 512-541. This is an open-access paper available to non-members at

www.aes.org.

http://www.aes.org/e-lib/browse.cfm?elib=17839

Research has shown that approximately 30% of one's judgment of sound quality is a reaction to bass performance - both extension and smoothness. Given this, if one has selected well-designed loudspeakers, the dominant problems are likely to be associated with the room itself and the physical arrangement of loudspeakers and listeners within it - i.e. the bass.

Conclusion: full-bandwidth equalization may not be desirable, especially if any significant portion of the target curve is flat. On the other hand, some amount of bass equalization is almost unavoidable, and it will be most effective in multiple-sub systems (Chapter 8). It is useful if the EQ algorithm can be disabled at frequencies above about 400-500 Hz. Equalization for music and for movies should be no different. Good sound is good sound, and listeners tell us that the most preferred sound is "neutral". Because of the circle of confusion, some tone-control tweaking may at times be necessary to achieve it.
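Limiting correction to the room-dominated bass region can be sketched as a band limit applied to whatever gains the EQ stage produced. A minimal sketch, assuming per-frequency gains on a known grid; the 500 Hz default follows the 400-500 Hz suggestion above, the hard cutoff is a simplification (a practical system would likely taper the correction), and the function name is hypothetical.

```python
def limit_eq_band(freqs_hz, gains_db, max_freq_hz=500.0):
    """Zero the EQ gain above max_freq_hz so only the bass region is corrected."""
    return [g if f <= max_freq_hz else 0.0 for f, g in zip(freqs_hz, gains_db)]

print(limit_eq_band([50, 200, 1000], [-6.0, -2.0, -3.0]))
# [-6.0, -2.0, 0.0]
```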

When I read the manuals for some room EQ systems, they usually offer suggested target curves. If they happen to work, fine, but if they don't, they often provide user-friendly controls to adjust the shape of the target curve. This is nothing more than an inconvenient, inflexible tone control. It is a subjective judgment based on what is playing at the moment. It is not a calibration.
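A bass tone control of the kind called for above is commonly built as a low-shelf filter. The sketch below uses the low-shelf biquad from Robert Bristow-Johnson's Audio EQ Cookbook; it is one common design, not the method of any particular room EQ product. The corner frequency, gain, and sample rate in the usage line are hypothetical.

```python
import cmath
import math

def low_shelf_coeffs(fs_hz, f0_hz, gain_db, slope=1.0):
    """Low-shelf biquad (RBJ Audio EQ Cookbook), normalized so a[0] == 1.
    gain_db is the shelf gain at DC; the gain at f0_hz is about half that."""
    A = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0_hz / fs_hz
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / 2 * math.sqrt((A + 1 / A) * (1 / slope - 1) + 2)
    k = 2 * math.sqrt(A) * alpha
    b0 = A * ((A + 1) - (A - 1) * cos_w0 + k)
    b1 = 2 * A * ((A - 1) - (A + 1) * cos_w0)
    b2 = A * ((A + 1) - (A - 1) * cos_w0 - k)
    a0 = (A + 1) + (A - 1) * cos_w0 + k
    a1 = -2 * ((A - 1) + (A + 1) * cos_w0)
    a2 = (A + 1) + (A - 1) * cos_w0 - k
    return [b0 / a0, b1 / a0, b2 / a0], [1.0, a1 / a0, a2 / a0]

def magnitude_db(b, a, freq_hz, fs_hz):
    """Magnitude response of the biquad at one frequency, in dB."""
    z_inv = cmath.exp(-1j * 2 * math.pi * freq_hz / fs_hz)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20 * math.log10(abs(num / den))

# Hypothetical setting: +3 dB shelf below about 100 Hz at 48 kHz.
b, a = low_shelf_coeffs(48000.0, 100.0, 3.0)
```

Unlike reshaping a target curve and re-running the EQ, a shelf like this can be adjusted while listening, which fits the point that the right bass setting depends on what is playing.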

Sources: https://www.avsforum.com/forum/89-s...aster-reference-monitor-143.html#post57291428 https://www.avsforum.com/forum/89-s...aster-reference-monitor-143.html#post57293354

(I had asked Dr. Toole whether it was OK to repost these, and he not only agreed but said he would be thankful if the knowledge were spread around.)
