Serge Smirnoff

We’re a bit late to this party, but that’s mainly because we spent a lot of time exploring this device from various angles. We ultimately reached the same conclusion and so figured at this point we may as well share what we’ve found.

We ended up testing two of these devices. We tried tweaking the various coefficients and filters in the companion app, applying our standard 32 Ohm load vs no load, varying output power, etc. Nothing significantly changed our results.

TL;DR – We don’t want to rain on anybody’s parade, but we found the 9038D to perform worse than most other ESS-DAC devices. You can see where it sits in the hierarchy of DACs we’ve measured here (default rank is by the median waveform error):

One important disclaimer here. The tests we perform are looking for total waveform error from time-domain measurements. Metrics are only computed within the audible frequency range, but not all errors in that range are equally audible. In the OEM audiophile world, there’s obviously the potential claim that these ‘errors’ are either a) completely inaudible to the human ear and/or b) enhance the audio, such that what you hear is even better than what the artist and studio engineer intended. We are also aware that there are other tests that attempt to include psychoacoustic effects to strip out or minimize errors that are, by some algorithm, deemed inaudible. We do not want to get into such debates here. Our interest is simply in why the raw waveform errors from all other ESS DACs we’ve measured are so much better than those from the 9038D, and why Amir’s complex waveform test result looks so amazing when ours – for this specific ESS device - doesn’t.
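To make "total waveform error from time-domain measurements" concrete, here is a minimal sketch of one way such a figure could be computed. It is not the actual metric used for the results in this post: the function name `difference_level_db`, the least-squares gain matching, and the assumption that both signals are already time-aligned at the same sample rate are all illustrative choices, and the band-limiting to the audible range mentioned above is left out.

```python
import numpy as np

def difference_level_db(reference, measured):
    """Residual energy relative to the reference, in dB (hypothetical helper).

    Assumes both arrays are time-aligned and share a sample rate.
    The measured signal is rescaled by a least-squares gain first,
    so a pure level difference does not count as error.
    """
    reference = np.asarray(reference, dtype=float)
    measured = np.asarray(measured, dtype=float)
    if reference.shape != measured.shape:
        raise ValueError("signals must have the same length")
    ref_energy = np.dot(reference, reference)
    meas_energy = np.dot(measured, measured)
    if ref_energy == 0.0 or meas_energy == 0.0:
        raise ValueError("signals must not be silent")
    # Gain that minimises ||reference - gain * measured||^2
    gain = np.dot(reference, measured) / meas_energy
    residual = reference - gain * measured
    return 10.0 * np.log10(np.dot(residual, residual) / ref_energy)

# Illustrative input: a 1 kHz tone played back at half level with a small
# quadrature component (1 % of the tone's amplitude) added as "error".
t = np.arange(4800) / 48_000.0
ref = np.sin(2 * np.pi * 1000 * t)
meas = 0.5 * (ref + 0.01 * np.cos(2 * np.pi * 1000 * t))
print(f"{difference_level_db(ref, meas):.1f} dB")
```

In this toy case the half-level playback is removed by the gain match, and only the 1 % added component contributes, which lands at about -40 dB.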

Here are some example results using the 9038D’s brick-wall and linear phase slow roll-off filters:

Total waveform error (BW filter):

![[df40]E1DA-9038D(BW)[Wf].png [df40]E1DA-9038D(BW)[Wf].png](https://www.audiosciencereview.com/forum/index.php?attachments/df40-e1da-9038d-bw-wf-png.312352/)

Magnitude degradation (BW filter):

![[df40]E1DA-9038D(BW)[Mg].png [df40]E1DA-9038D(BW)[Mg].png](https://www.audiosciencereview.com/forum/index.php?attachments/df40-e1da-9038d-bw-mg-png.312354/)

Phase degradation (BW filter):

![[df40]E1DA-9038D(BW)[Ph].png [df40]E1DA-9038D(BW)[Ph].png](https://www.audiosciencereview.com/forum/index.php?attachments/df40-e1da-9038d-bw-ph-png.312355/)

Total waveform error (LPSR filter):

![[df40]E1DA-9038D(LS)[Wf].png [df40]E1DA-9038D(LS)[Wf].png](https://www.audiosciencereview.com/forum/index.php?attachments/df40-e1da-9038d-ls-wf-png.312358/)

Magnitude degradation (LPSR filter):

![[df40]E1DA-9038D(LS)[Mg].png [df40]E1DA-9038D(LS)[Mg].png](https://www.audiosciencereview.com/forum/index.php?attachments/df40-e1da-9038d-ls-mg-png.312359/)

Phase degradation (LPSR filter):

![[df40]E1DA-9038D(LS)[Ph].png [df40]E1DA-9038D(LS)[Ph].png](https://www.audiosciencereview.com/forum/index.php?attachments/df40-e1da-9038d-ls-ph-png.312360/)

We have more graphics for anybody who’s interested, but adjusting parameters like filters and harmonic compensation coefficients, load impedance, etc., made no appreciable change to the main (music signal) histogram error.

The impact of the anti-aliasing filter is noticeable with certain 44/16 test signals (but barely noticeable with any 96/24 signals). The main music signal (the error shown in the largest histogram at the foot of each slide) is largely unaffected by the filter, as music spectra (and program simulation noise, PSN, spectra) tend to taper away approaching 20 kHz. We suspect blind listening tests with actual music tracks would not reveal differences between these two filters. Errors from sinusoids are consistently low, so SINAD should be great; this is an awesome device for lovers of the classic Concerto in 1 kHz.

Error from our white noise test signal is lower with the BW filter, due to less magnitude degradation at high frequencies. Here’s a comparison of best-case-scenario errors from white noise and program-simulation noise at 96 kHz/24-bit:

White Noise(@96kHz), Brick-Wall filter, 32 Ohm:

Program Simulation Noise(@96kHz), Brick-Wall filter, 32 Ohm:

For comparison, here are those same signals from the Shanling M0 Pro:

White Noise(@96kHz), Apodizing filter, 32 Ohm:

Program Simulation Noise(@96kHz), Apodizing filter, 32 Ohm:

The two main problems with the 9038D seem to be a phase inaccuracy, especially at the lower frequencies (below 1kHz) and jitter or some other source of time inconsistency, which is visible on the above magnitude diffrograms (vertical yellow-ish bars).

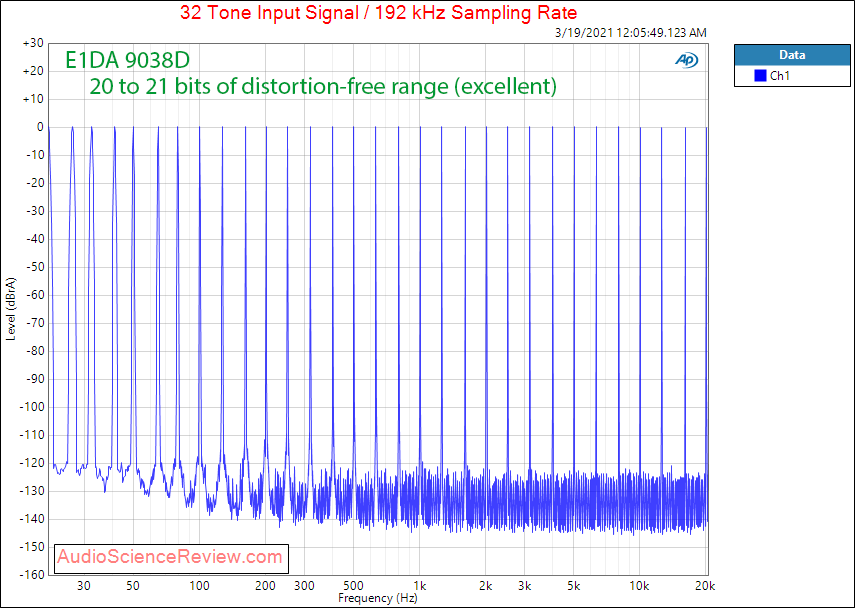

We applaud Amir for testing with a more complex waveform. His multi-tone/pseudo white noise signal certainly looks nice and complex in the time domain:

However, we suspect the amplitude peaks of those spectral components are fluctuating slightly with time and a statistical/periodic FFT showing only averaged amplitude vs frequency simply isn’t showing these errors.

Error from our white noise test signal is lower with the BW filter, due to less magnitude degradation at high frequencies. Here’s a comparison of best-case-scenario errors from white noise and program-simulation noise at 96 kHz/24-bit:

E1DA 9038D, brick-wall filter, 32 Ohm load, test signals at 96 kHz:

| Test signal | Total waveform DF | Magnitude DF | Phase DF |
| --- | --- | --- | --- |
| White noise | -51.4 dB | -49.9 dB | -54.9 dB |
| Program simulation noise | -38.7 dB | -54.7 dB | -46.3 dB |

For comparison, here are those same signals from the Shanling M0 Pro (apodizing filter, 32 Ohm load, test signals at 96 kHz):

| Test signal | Total waveform DF | Magnitude DF | Phase DF |
| --- | --- | --- | --- |
| White noise | -69.7 dB | -63.5 dB | -80.2 dB |
| Program simulation noise | -83.1 dB | -84.2 dB | -70.0 dB |
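For a sense of scale, the gap between the two devices' total waveform DF figures can be turned into a linear ratio. The sketch below uses only the numbers quoted above and assumes the DF values behave like ordinary dB levels of the residual, so a gap of G dB corresponds to an RMS amplitude ratio of 10^(G/20). The helper name `error_gap` is made up for this example, and note that the two devices were measured with different filters.

```python
def error_gap(worse_db, better_db):
    """Return (gap in dB, RMS amplitude ratio) between two dB error levels."""
    gap_db = worse_db - better_db
    amplitude_ratio = 10.0 ** (gap_db / 20.0)
    return gap_db, amplitude_ratio

# Total waveform DF from this post: (E1DA 9038D, Shanling M0 Pro)
total_df = {
    "white noise": (-51.4, -69.7),
    "program simulation noise": (-38.7, -83.1),
}

for signal, (e1da_db, shanling_db) in total_df.items():
    gap_db, ratio = error_gap(e1da_db, shanling_db)
    print(f"{signal}: gap {gap_db:.1f} dB, about {ratio:.0f}x in RMS amplitude")
```

Under that assumption the white noise gap of 18.3 dB is roughly an 8x larger residual, and the program simulation noise gap of 44.4 dB is roughly 166x.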

The two main problems with the 9038D seem to be a phase inaccuracy, especially at the lower frequencies (below 1kHz) and jitter or some other source of time inconsistency, which is visible on the above magnitude diffrograms (vertical yellow-ish bars).

We applaud Amir for testing with a more complex waveform. His multi-tone/pseudo white noise signal certainly looks nice and complex in the time domain:

However, we suspect the amplitude peaks of those spectral components are fluctuating slightly with time and a statistical/periodic FFT showing only averaged amplitude vs frequency simply isn’t showing these errors.
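The suspicion above can be illustrated with a toy signal. This is only a sketch under invented parameters (a single 1 kHz tone, a 1 % amplitude wobble at 3 Hz, 0.1 s Hann-windowed frames), not a model of the 9038D or of Amir's measurement: it shows that a frame-averaged spectrum can report an almost unchanged peak level while the time-domain difference is clearly non-zero.

```python
import numpy as np

def averaged_peak_db(signal, frame_len, bin_index):
    """Peak level at one FFT bin, from power averaged over Hann-windowed frames."""
    window = np.hanning(frame_len)
    n_frames = len(signal) // frame_len
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    spectra = np.fft.rfft(frames * window, axis=1)
    mean_power = np.mean(np.abs(spectra[:, bin_index]) ** 2)
    # Scale so a steady unit-amplitude tone centred on the bin reads ~0 dB
    amplitude = 2.0 * np.sqrt(mean_power) / window.sum()
    return 20.0 * np.log10(amplitude)

def fluctuation_demo():
    """Return (change in averaged peak, time-domain difference), both in dB."""
    fs = 48_000                     # assumed sample rate
    frame_len = 4_800               # 0.1 s frames, 10 Hz bin spacing
    t = np.arange(2 * fs) / fs      # 2 s of signal
    tone = np.sin(2 * np.pi * 1000 * t)
    wobble = 1.0 + 0.01 * np.sin(2 * np.pi * 3 * t)   # 1 % slow fluctuation
    fluctuating = wobble * tone
    bin_index = 1000 * frame_len // fs                 # bin holding 1 kHz
    peak_delta_db = (averaged_peak_db(fluctuating, frame_len, bin_index)
                     - averaged_peak_db(tone, frame_len, bin_index))
    residual = fluctuating - tone
    time_error_db = 10.0 * np.log10(np.sum(residual ** 2) / np.sum(tone ** 2))
    return peak_delta_db, time_error_db

peak_delta_db, time_error_db = fluctuation_demo()
print(f"averaged peak changes by {peak_delta_db:.4f} dB")
print(f"time-domain difference is {time_error_db:.1f} dB")
```

With these assumed parameters the averaged peak moves by well under 0.01 dB, while the time-domain difference sits around -43 dB. A single long FFT with fine enough resolution would show the 3 Hz sidebands; averaging short frames is what hides them here.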
