
Audibility of Low Damping Factor? - Benchmark Myth-Busting White Paper

- Thread starter MediumRare


Vladimir Filevski

Addicted to Fun and Learning

- Joined

- Mar 22, 2020

- Messages

- 564

- Likes

- 756

> Well, in my case REW was showing a pretty flat freq. response, but maybe I'll try sometimes to measure with 1.5mm2 cables instead of 4mm2.

There will be no difference in REW measurements.

Driver damping is another reason to use electronic crossover networks/ active speakers. Or multiway powered speakers that don't really HAVE speaker cables and hopefully not crossovers after the amplifier.

solderdude

Grand Contributor

So is the series resistance of the speaker cable here an argument in favor of locating the amps at or inside the speaker itself?

I think the DC resistance of series inductors for XO's, certainly with 3 or 4 way passive speakers adds way more resistance than the cable.

Indeed an active XO with speakers wired directly to the woofer increases DF.

The question remains: is a 0.1 dB limit not too strict for extreme situations? If it is, one can relax the DF requirement as far as FR changes are concerned.

> There will be no difference in REW measurements.

According to Benchmark's app. note the damping factor will suffer a lot when swapping 4mm2 speaker cables with 1.5mm2 ones, so why do you think REW will not capture any difference, please?


> According to Benchmark's app. note the damping factor will suffer a lot when swapping 4mm2 speaker cables with 1.5mm2 ones, so why do you think REW will not capture any difference, please?

Here's an example that may clarify the situation. 1.5 mm2 cables are about 15 AWG, while 4.0 mm2 cables are about 11 AWG. Assume further that we have a loudspeaker with nominal, minimum and maximum impedances of 8, 5, and 45 ohms. Next, assume a cable length of 3 metres (9.84 ft). If you have an amplifier with a damping factor of 50, then the Benchmark spreadsheet shows that the total frequency response error will be 0.28 dB for the 4.0 mm2 cable, increasing to 0.34 dB for the 1.5 mm2 cable. The difference between these two cables is only 0.06 dB. This small difference is highly unlikely to be audible (it's much less than 0.1 dB).

Said differently:

- If the dominant contribution to the output impedance seen by the speakers is the amplifier, then changing the cable won't help much.

- Conversely, if the dominant contribution to the output impedance is the cable, then lowering the output impedance of the amplifier won't help much. (E.g.: in the above example, the Purifi amp yields a net damping factor of 251; the Hypex yields 240. The difference is small, because the cable dominates the combined output impedance.)
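The 0.28 dB and 0.34 dB figures in the example can be approximated with a simple voltage-divider model. This is only a sketch, not Benchmark's spreadsheet itself: it assumes the speaker is a pure resistance at its minimum and maximum impedance, that the cable resistance counts twice (out and back), and it uses assumed handbook copper resistances for 11 AWG and 15 AWG. The function and constant names are made up for illustration.

```python
import math


def response_error_db(z_source, z_min, z_max):
    """Level difference in dB between the speaker's highest- and
    lowest-impedance points, with amp + cable modelled as one series
    resistance forming a voltage divider with a resistive load."""
    gain_at_max = z_max / (z_max + z_source)
    gain_at_min = z_min / (z_min + z_source)
    return 20 * math.log10(gain_at_max / gain_at_min)


def source_impedance(damping_factor, z_nominal, ohms_per_metre, length_m):
    """Amplifier output impedance plus round-trip cable resistance."""
    z_amp = z_nominal / damping_factor      # DF is referred to the nominal load
    r_cable = 2 * length_m * ohms_per_metre  # assumption: two conductors in series
    return z_amp + r_cable


# Assumed copper resistance per metre of a single conductor.
AWG11_OHMS_PER_M = 0.00413  # roughly 4.0 mm2
AWG15_OHMS_PER_M = 0.0104   # roughly 1.5 mm2

# Amplifier DF 50 into 8 ohms nominal, 3 m cable, speaker swinging 5..45 ohms.
err_thick = response_error_db(source_impedance(50, 8, AWG11_OHMS_PER_M, 3), 5, 45)
err_thin = response_error_db(source_impedance(50, 8, AWG15_OHMS_PER_M, 3), 5, 45)

print(f"4.0 mm2 cable: {err_thick:.2f} dB")
print(f"1.5 mm2 cable: {err_thin:.2f} dB")
print(f"difference:    {err_thin - err_thick:.2f} dB")
```

With these assumptions the amplifier's 0.16 ohm output impedance is several times larger than either cable's resistance, which is why swapping the cable moves the result by only a few hundredths of a dB.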

Referring to Benchmark's examples provided: with 4mm2 cables, "the effective damping factor" is 171, while with 1.5mm2 (assuming 1.5mm2 cables resistance of 0.013 Ohms/meter) cables the damping factor will get as low as 80, so a variation of 0.5 dB.

I get the point, makes a lot of sense, thanks for the answer.

> If the dominant contribution to the output impedance seen by the speakers is the amplifier, then changing the cable won't help much.

In my case the speakers have 3...20 Ohms, wires are 6 meters long (0.0045 Ohms/m) and amp has 0.033 Ohms output impedance, so swapping 4mm2 wires with 1.5mm2 ones (0.013 Ohms/m) may not be very wise. Of course, the equation will be different with a different audio setup.

Vladimir Filevski


> Referring to Benchmark's examples provided: with 4mm2 cables, "the effective damping factor" is 171, while with 1.5mm2 (assuming 1.5mm2 cables resistance of 0.013 Ohms/meter) cables the damping factor will get as low as 80, so a variation of 0.5 dB.

Just to be clear: In the Benchmark's Excel spreadsheet the damping factor (i.e. amp output impedance) is a constant, variables (parameters) are cable resistance, minimum and maximum speaker impedance. So, you have to pick only the "240 damp. factor" row (your amplifier) and look for a voltage difference expressed in dB (last column). With your amp output impedance of 0.033333 ohm (240 damp. factor) and with 6 meters of 11 AWG cable and with 3-20 ohm speaker impedance variation, you have total difference of 0.2 dB. With the same amplifier (0.033333 ohm = 240 damp. factor) and with 6 meters of 16 AWG cable and with the same 3-20 ohm speaker, you have a total difference of 0.45 dB.

In the end, the total difference between the two cables is only 0.45 - 0.2 = 0.25 dB.
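As a rough cross-check of the 0.2 dB and 0.45 dB figures, the same numbers can be plugged into a plain voltage divider. This is a sketch under assumptions, not the spreadsheet's actual formula: the per-metre resistances are the ones quoted earlier in the thread (0.0045 and 0.013 ohms/m), the cable is counted out and back, and the speaker is treated as a pure resistance of 3 or 20 ohms. All names are illustrative.

```python
import math


def level_db(z_load, z_source):
    """Level at the speaker terminals in dB relative to the amplifier's
    unloaded output, for a resistive load behind a series resistance."""
    return 20 * math.log10(z_load / (z_load + z_source))


Z_AMP = 0.0333         # ohms, amplifier output impedance quoted in the thread
LENGTH_M = 6           # one-way cable length in metres
Z_MIN, Z_MAX = 3, 20   # speaker impedance extremes in ohms
CABLES = {"4 mm2": 0.0045, "1.5 mm2": 0.013}  # ohms per metre, from the thread

swing = {}
for name, ohms_per_m in CABLES.items():
    # Assumption: both conductors are in series, so the length counts twice.
    z_source = Z_AMP + 2 * LENGTH_M * ohms_per_m
    swing[name] = level_db(Z_MAX, z_source) - level_db(Z_MIN, z_source)
    print(f"{name}: {swing[name]:.2f} dB total variation")

print(f"cable-to-cable difference: {swing['1.5 mm2'] - swing['4 mm2']:.2f} dB")
```

Under these assumptions the two cables come out near 0.21 dB and 0.45 dB, close to the spreadsheet rows cited above.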

tuga

Major Contributor

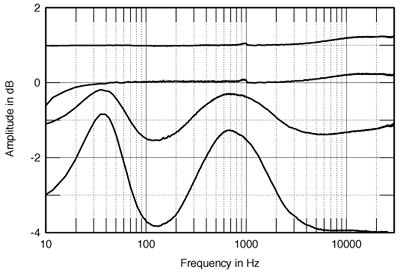

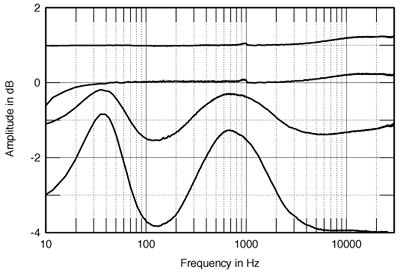

An interesting plot showing the effects of the combined amplifier/loudspeaker response when using high vs. low output-impedance amplifiers:

From top to bottom, frequency response of Jeff Rowland Model One, Mark Levinson No.23.5, Audio Research Classic 60 (4 ohm tap), and VTL Compact 160 monoblock, all measured at their output terminals when loaded by the Avalon Eclipse connected by 2m of bi-wired Cardas Hexlink. (1dB/vertical div.)

https://www.stereophile.com/content/avalon-eclipse-loudspeaker-measurements


Might be worth reading: https://www.stereophile.com/content/what-difference-wire-makes.

solderdude

Grand Contributor

I think everyone knows that when one measures 'deep enough' you can find measurable differences between (speaker) cables.

They all have different L, C and R so there is no surprise there.

Also there was a thread lately by someone asking why they could hear >20kHz being switched on and off using a burst.

This is what is happening here as well.

Compound the effect of back EMF from speakers (the acoustical 'ringing' is worse than the electrical) then it isn't weird one can find measurable differences at all.

The real question is how audible is this when using music signals that do not start (and certainly stop) as instantly as the bursts and what if the test signal has been run through a steep lowpass first ? The test signal would be distorted anyway.

Another test could have been to use the 'best' cable as a reference and show the relative differences between them.


> Except for a few special cases (like the old Kenwood Sigma-Drive and a couple of other amps) the cable's impedance is not in the amplifier's feedback loop and thus is not compensated.

I remember those.

Why is that not used anymore ?

Probably because it's easier and cheaper to just have the cable and amp impedance low enough ?

> I remember those.
>
> Why is that not used anymore ?
>
> Probably because it's easier and cheaper to just have the cable and amp impedance low enough ?

I would imagine for several reasons:-

1) It needs a third 'sensing' cable to the loudspeaker and all 'speaker cables are just two wires

2) If the sensing cable isn't connected or strapped at the amplifier, then it's much more difficult to make the amplifier unconditionally stable.

3) Lack of understanding of why it's being done.

4) Difficulty in inventing a convincing marketing story to justify the additional bother.

5) As mentioned above, the lack of any real benefit.

S.

> I would imagine for several reasons:-

Was most likely another brilliant marketing idea.

Was around the time when "damping factor" was finding its way into HiFi marketing literature.


> He is not the only one. I did that too.

> Indeed I built the same load he did and ran it on a few amps. Net result was that the frequency response variations were very small as to not be worth continuing to test with it. JA uses much magnified scale to show variations.

First of all, just wanted to say I hugely appreciate what you are doing here @amirm!

Anyway, I was wondering: wouldn't it still make sense to measure amplifier output impedance (ideally vs frequency) in your reviews as a sanity check / confirmation of good product design? Information on amplifier output impedance is not always available in manufacturer specs or other test reports, and unfortunately commercial amplifiers with relatively high output impedance are still being manufactured. E.g. I just recently posted my measurements of the Denon Ceol mini stereo system where amp output impedance is >0.5 Ohm, causing >1 dB variation in the frequency response driving a 6 Ohm nominal impedance speaker (Revel M16).

If I was buying a new amp, it having reasonably low output impedance would surely be a consideration I'd take into account.

Sorry for coming into this discussion a bit late!


valerianf

Addicted to Fun and Learning

I see another side effect of a low damping factor for an amplifier output: the design of the output stage is modified.

It could be a class D design or a FET design that goes very fast in high current conduction.

Then the sound could be very different because of the design change, not because of the numerical value of the damping factor.

Without any space/money constraint I would choose a low damping factor amplifier.


A high damping factor is only relevant in the back-EMF (EMK) region, meaning from about 500 Hz down to 20 Hz or lower.

For tweeters, there's often (always?) an optimal driving impedance where the distortion is the lowest and the sound is the best. So a high damping factor is not always important in the higher frequency range, meaning from about 500 Hz to 20000 Hz.

There are often gains in sound quality from NOT coupling a tweeter directly to the amplifier. A resistor of about 2-3 ohms in series with the tweeter can bring sonic benefits in an active system. Of course, you have to compensate the gain when using resistors.

You also get less dynamic compression doing so.
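How much gain has to be made up can be estimated from the voltage divider formed by the series resistor and the tweeter. This is a minimal sketch assuming a purely resistive tweeter with a hypothetical impedance of 6 ohms; a real tweeter's impedance varies with frequency, so the loss will too. The function name is made up for illustration.

```python
import math


def series_resistor_loss_db(r_series, z_driver):
    """Level lost across a series resistor, in dB, for a resistive driver.
    This is also the gain that has to be added back upstream."""
    return 20 * math.log10((z_driver + r_series) / z_driver)


Z_TWEETER = 6.0  # ohms, hypothetical value chosen only for this example

for r_series in (2.0, 3.0):
    loss = series_resistor_loss_db(r_series, Z_TWEETER)
    print(f"{r_series:.0f} ohm in series: about {loss:.1f} dB of make-up gain")
```

For this assumed 6 ohm tweeter, the 2-3 ohm range mentioned above corresponds to roughly 2.5 to 3.5 dB of compensation.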

