What budget speakers would you like to see reviewed?

- Thread starter amirm

twofires (Member, joined Jan 4, 2020)

Again a strange-sounding speaker: an overall nice frequency response, but the mids and upper mids sounded harsh, almost distorted. It was “unlistenable.” I had high hopes because KEF really talks up the coaxial driver.

I don't want to be one of those "if you don't like my favourite speakers you must have bad amps/sources/room etc." guys, but the KEFs do dip down to about 3 ohms, so they need a bit of juice to work well (which, sadly, undermines the budget aspect completely). I had your exact midrange harshness issue running my Q700 towers with a receiver rated at roughly 90 wpc into 8 ohms, and it seemed to go away completely with a stereo amp rated at 200 wpc into 8 ohms / 400 wpc into 4 ohms.
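To put rough numbers on why a dip to about 3 ohms asks more of an amp: at a fixed output voltage, a resistive load draws current I = V/R and absorbs power P = V²/R, so 3 ohms pulls 8/3 (about 2.7) times the current and power of 8 ohms. A minimal Python sketch, assuming a purely resistive load; real speaker impedance varies with frequency and has a reactive component, so this is a simplification. The 2.83 V drive level is only an illustrative choice (it works out to about 1 W into 8 ohms).

```python
# Idealized sketch: the speaker is treated as a pure resistor.
# Real speaker impedance is frequency-dependent and partly reactive.

def power_watts(voltage_rms: float, impedance_ohms: float) -> float:
    """Power delivered into a resistive load: P = V^2 / R."""
    return voltage_rms ** 2 / impedance_ohms


def current_amps(voltage_rms: float, impedance_ohms: float) -> float:
    """Current drawn by a resistive load: I = V / R."""
    return voltage_rms / impedance_ohms


drive_v = 2.83  # illustrative drive level, roughly 1 W into 8 ohms
for z in (8.0, 4.0, 3.0):
    print(f"{z:>4} ohm: {current_amps(drive_v, z):.2f} A, "
          f"{power_watts(drive_v, z):.2f} W")
```

The same voltage swing that is easy into 8 ohms demands noticeably more current at the 3 ohm dip, which is where a modest receiver's power supply tends to run out first.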

Tks (Major Contributor, joined Apr 1, 2019)

When I was still PC gaming, every forum had huge thread wars, where the majority of arguments were based on subjective and anecdotal evidence. AMD vs Intel and AMD/ATI vs Nvidia threads were full of it.

Those still go on, but that is more fanboyism that argues over product-offering superiority and the ethics behind pricing competition. It's the same nonsense that has gone on in the home-console wars since Sega and Nintendo used to go at it back in the day, only now it's between Sony and Microsoft over who has the best platform for the best exclusives.

But none of these wars are fought on the battle-lines of who has the better-performing products. Performance-wise and feature-wise, Nvidia obliterates AMD (RTG, more specifically, is their graphics division), and only lunatics would dare contest this for the last three generations. Likewise, console wars are fought simply over which company (Microsoft or Sony) has provided a larger or more enjoyable catalogue of exclusive titles. No one is ever taken seriously if they talk about which console has more processing horsepower (the Xbox One X currently holds that title, as it's the latest and was designed to with its hardware specs), or a few other factors like OS implementation/feature set, or silly little things like controller designs.

Though I do admit, gamers (console ones mostly) are a bunch of morons for the most part. Once you throw multiple factors into their decision-making algorithms, you can see their eventual conclusions are no better than if you had excluded the objective facts about performance; they mostly go with their predetermined values and feelings on the matter. All of them (including the slightly more intelligent PC crowd) regularly get swindled by the marketing of the next best thing in each generation of hardware. Currently the PC stupidity is that a section of the community thinks real-time Ray Tracing in graphics pipelines is worth considering in mainstream products (pretty dumb unless you fork over $1,000 for a 2080 Ti GPU or higher, like the Titan series), and even then the performance penalty is staggering. That feature alone (the die space allocated to the compute cores required for hardware acceleration of real-time Ray Tracing) is currently one of the factors that has contributed to such a massive price hike of Nvidia GPUs across the board. And lamentation over such things is what these thread wars are about, not so much the actual performance metrics being in dispute.

It's the same sort of shit you see here in audio, like people fiending for MQA, or heck, even DSD (meanwhile such folks regularly fail to tell 320 kbps MP3s from FLACs in A/B tests). Only a handful of game titles use Ray Tracing, in the same way that only a little content exists in DSD, so paying for dedicated hardware for it seems like such a waste in my opinion (though for the sake of preserving the native format the music was produced in, I personally think there is some peace-of-mind value in playback without conversion).
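A blind comparison like MP3 vs FLAC is commonly run as an ABX test, where every trial is a forced choice, so someone who is purely guessing scores about 50% on average. Whether a score beats guessing can be checked with a one-sided binomial tail probability. A minimal Python sketch; the 12-of-16 session below is hypothetical, not a result from this thread.

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """Probability of scoring at least `correct` out of `trials`
    by pure guessing (p = 0.5 per trial): the one-sided binomial tail."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    favorable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favorable / 2 ** trials


# Hypothetical session: 12 correct answers out of 16 trials
p = abx_p_value(12, 16)
print(f"p = {p:.4f}")
```

A smaller p means the score is less likely to be luck; 0.05 is a common, if somewhat arbitrary, cutoff.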

You see the same horseshit now with display manufacturers as well. Things like fudging the meaning of "8K", or HDR "standards", the biggest laughingstock I've ever seen in the display tech industry, poised to take consumers for every dime they have with some of the shit being advertised.

So every industry will have its fair share of producers trying to veil reality with advertising that induces feeling-driven decision making, but the gaming crowd (and video display consumers) is a bit better prepared to see through the nonsense most of the time. See for yourself how Intel, the leading processor manufacturer on the planet, with all its skilled engineers and scientists, made a mockery of itself and its marketing only a few days ago at the Consumer Electronics Show (CES 2020, the biggest tech event of the year). Though with their 10nm catastrophe, you can see the nerves getting the better of them. In its conclusion, the article writes:

Ultimately Intel is in its own catch-22. It needs to show off the fact that it has processors with acceleratable elements that can smash the competition (and previous generations), but at the same time, because those features aren’t widespread, it’s trying to combine it with the ‘performance in the real world’ messaging and asking reviewers to target the more popular workloads, even if Intel isn’t doing that itself in its own performance comparisons. The easiest way for Intel to approach this is to remove this notion of ‘real-world performance’ being the ultimate goal, because with it the company is shooting itself in the foot. It can’t both push reviewers to focus on real world performance and yet highlight its accelerators for niche applications. Sure, going after real world performance is a laudable goal, but Intel has to understand that it needs to market its data appropriately. At the minute it’s coming up in a big jumbled mess that doesn’t do anyone any favors - least of all Intel.

EDIT: I forgot to conclude with Intel, and how even objective initiatives can be perverted and leave people more confused than they were before. Those sorts of mistakes are what give rise to the lunatics who become fanboys.

Depends on what features you consider. Extremely good OpenGL, Vulkan and now OpenCL (with ROCm) "open source" drivers for Linux are a feature worth their weight; the supercomputer world is especially interested in ROCm.

Totally agree with you on HDR: until the zone hack is gone (OLED or MicroLED), it means absolutely nothing. A bit like 4K on TVs: no point if you're sitting at a reasonable distance, just a way to sell you the entire catalogue once again.

Read https://semiaccurate.com/ if you want to know how deep in it Intel is. And if, like most computer enthusiasts, you remember the ICC cheating scandal or the OEM bribery fiasco, or just follow the endless stream of vulnerabilities which have probably made Sandy/Ivy Bridge slower than Piledriver, your wallet is going to act like a magnet with the opposite polarity to Intel's.

Joined Oct 1, 2018

These are not budget by any means, but I would love spinoramas (and THD %, please!) of the following speakers:

Harbeth P3ESR, Buchardt S400, Triangle Borea BR03, Klipsch RP-500M.

A JBL 530 is coming for review.

These speakers look so strange: a huge compression-driver horn and this tiny bass driver. Really not an often-seen combination. Very interesting.

I disagree. There's a huge difference of degree: you can indeed argue that Ray Tracing or HDR is not worth the money, and that's fine. But you definitely can't argue that they don't make any difference; the effect of HDR in movies, and of Ray Tracing in games like Control, is highly visible, and you'd be blind not to see it. That's very different from debates about MQA or DSD (or cables, etc.), where people argue endlessly about differences that simply don't exist (or, if they do, are extremely subtle and hard to spot).

There's a really, really big difference between, say, "Ray Tracing is nice, but is it really worth a $1,000 GPU?" and "Should I spend $1,000 on a DAC so that I can upgrade my SINAD from 90 dB to 100 dB?". In the first case you're still acting rationally, because you know you're going to see some improvement, albeit at a steep price. In the second case you're just burning money.
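For a sense of scale on that DAC example: SINAD is the ratio of the signal to everything else (noise plus distortion) expressed in dB, and as an amplitude ratio it converts back as 10^(-SINAD/20). A minimal Python sketch of that conversion, showing how small the residual already is at 90 dB.

```python
def sinad_db_to_percent(sinad_db: float) -> float:
    """Convert SINAD in dB to noise + distortion as a percentage of the
    signal amplitude: 100 * 10^(-SINAD / 20)."""
    return 100.0 * 10 ** (-sinad_db / 20.0)


for db in (90, 100, 120):
    print(f"{db} dB SINAD -> {sinad_db_to_percent(db):.5f}% noise + distortion")
```

Going from 90 dB to 100 dB takes the residual from roughly 0.0032% to 0.001% of the signal amplitude: a factor of about 3.2, but starting from an already tiny number.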

Tks (Major Contributor, joined Apr 1, 2019)

For GPUs, I was only considering consumer applications, since we were referring to the gaming crowd. Also, what you listed are simply APIs, not necessarily something dealing with hardware-level throughput for various compute types. Sure, AMD has some market share thanks to their relatively inexpensive Radeon Vega FE cards with HBM2. But in any academic or supercomputing setting where high-performance compute is the expectation, Nvidia owns the majority of that whole ordeal with their Tesla series of cards, like the V100 or the T4 accelerator. I do admit that over the years some benchmarks favored AMD's cards over Nvidia's for a while (but that was usually followed by a quick software unlock of the supposedly missing performance). And by the way, I want to mention again that this is the sort of shit that creates fanboys: Nvidia's scummy practices like this (artificially gating performance that is already there in hardware). You mention something similar with the Intel bribery scandal (which I also recall Dell openly saying they had been accepting for years). I agree that is enough to warrant my next system being AMD-based, just because of such a violation of ethical principles that I don't want to be party to, seeing as the Zen architecture is now spanking Intel like no one would ever have thought. In the GPU realm, though, regardless of Nvidia's blatant bullshit, there simply is no choice if performance is what you desire; AMD will forever stay stomped in this respect, as Nvidia doesn't hibernate like Intel did for at least the past half decade.

As for HDR, you mentioned MicroLED. Those people annoy me to death, talking about what amounts to vaporware nonsense that I doubt will see the light of day in mainstream consumer use, outside of very expensive home theaters, in the next half-decade. They talk about it like it's the next big thing, as if the market could bear the brunt of the cost. The tech demos I've seen of it are amazing, but this stuff has no future barring manufacturing advances in the next few years that drive costs below OLED. But who knows, let's see what the smart folks at these companies manage to figure out when it comes to commercially feasible production techniques.

Their Linux drivers are simply of higher quality/stability, to put it briefly. Still, you're right, it doesn't matter in the grand scheme of things, where Windows users are the vast majority.

Honestly, AMD's GPU division is sleeping right now, probably because they're busy with next-gen consoles. Their midrange is still a very good value (as usual).

About MicroLED: technologically, it IS the next big thing, at least for computer monitors, where we're currently stuck with IPS and its ridiculous glow and poor response times. And I say that as a ColorEdge user. Basically, it's not a competitor to OLED, which we can't really have on monitors due to burn-in.

Tks (Major Contributor, joined Apr 1, 2019)

Yeah, I agree. I was trying to talk about the formation of fanboys around tech that is fledgling, or potentially stillborn/pointless. The comparison I drew was improper, but then again my main blunder was comparing two senses (sight and sound), and that's always a can of worms, especially with my rudimentary knowledge of sound. Maybe you could help me draw a better comparison in this respect?

Though you made some claims about HDR there...

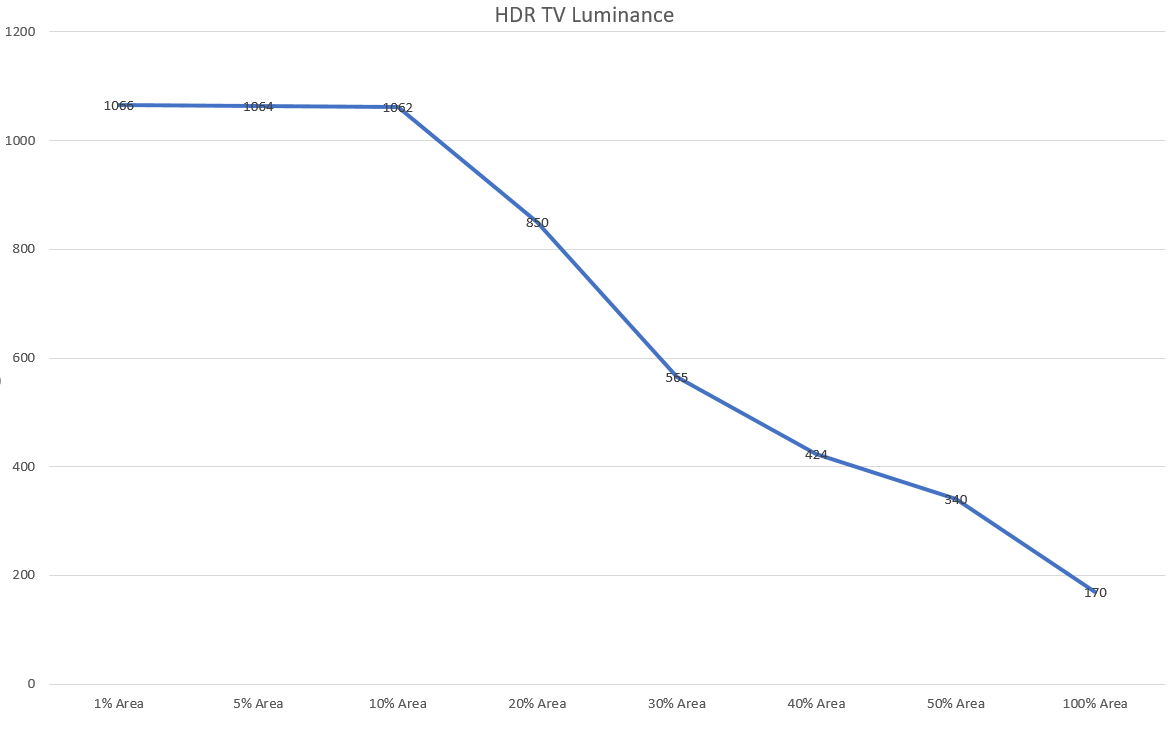

What HDR are you talking about? Because I sure as heck haven't seen any of the sort you speak of in my lifetime. If you're talking about displays capable of the full Dolby Vision spec, that is a fantasy that doesn't even exist in R&D labs. I still haven't seen a 12-bit panel, nor have I seen content mastered and rendered on screens with 10,000-nit peak brightness (though I think Sony did demo a 10K-nit screen at last CES). HDR is a massive blunder on all fronts, simply because even the reference displays colorists master on, like the Sony BVM-X300 series, exhibit a massive drop in brightness as the bright element on screen covers more of the screen:

This is hardly what I would call a massively amazing difference for a layman in a leisurely setting, comparing a reference display's HDR performance with a high-end consumer television (heck, that person, like most, wouldn't even know what to look out for, as you know). Most HDR content you view on a high-end UHD display looks great (if not better than on reference displays) simply because the rest of the display's performance metrics are great. Show me a garbage display with great HDR rendering, and then maybe we can better discuss how great something is in isolation. But alas, I do admit my comparison with MQA/DSD wasn't drawn and spelled out with much thought; it was more to demonstrate the annoying fanboyism surrounding these supposedly paradigm-altering claims from HDR/Ray Tracing proponents.
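The 10,000-nit and bit-depth figures come from the PQ transfer function (SMPTE ST 2084) used by HDR10 and Dolby Vision, which maps each code value to an absolute luminance, with full scale defined as 10,000 nits. A minimal Python sketch of that curve using the published constants; it assumes full-range code values and ignores the narrow ("legal") range offsets that video signals normally use, so treat it as an illustration rather than a reference implementation.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ signal value (0..1) to luminance in nits (cd/m^2)."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be in [0, 1]")
    e = signal ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def code_to_nits(code: int, bits: int) -> float:
    """Full-range integer code value -> nits (no narrow-range offsets)."""
    return pq_eotf(code / (2 ** bits - 1))


# Size of the brightness step between the two highest code values
for bits in (10, 12):
    top = 2 ** bits - 1
    step = code_to_nits(top, bits) - code_to_nits(top - 1, bits)
    print(f"{bits}-bit: top step is {step:.1f} nits")
```

The finer steps at 12 bits are why 12-bit panels come up in the Dolby Vision discussion: the extra bits matter most when the curve has to reach all the way to 10,000 nits.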

As for Ray Tracing, take a look at Red Dead Redemption 2's handling of lighting with pre-baked light maps, and the general volumetric lighting it employs. It was great when it released on consoles, and even better now that the title has come to PC this year. No Ray Tracing, and at a glance it still looks better than the majority of games employing Ray Tracing. Sure, the lighting may not be as good as Ray Tracing, but the performance penalty is nothing to sniff at either. The Ray Tracing performance penalty perhaps reminds me of the difference between Class A and Class D amp designs with respect to efficiency :) maybe that's a better comparison. Especially considering that, for most game graphics, developers cheat and hack the systems to create illusions of lighting, whereas Ray Tracing is an actual compute attempt at proper lighting simulation. With enough compute power, though, I think you can cheat and hack away enough to make someone wonder whether Ray Tracing was being used (that's why I mentioned Red Dead 2). The only question that remains is which approach scales better. I'd argue Ray Tracing, if hardware acceleration designs keep proliferating: cheating in software is hands-on work that gets more demanding as graphical fidelity increases, and at that point it just pays off to bite the bullet on the cost of dedicated lighting hardware.
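To make the Class A vs Class D efficiency analogy concrete: efficiency is output power divided by input power, and whatever is not delivered to the speaker ends up as heat. A minimal Python sketch; both efficiency figures are assumptions for illustration (25% is the textbook ceiling for a resistor-loaded single-ended Class A stage at full output, 90% is a plausible figure for a Class D stage near full output), not measurements of any particular amp.

```python
def dissipation_watts(output_watts: float, efficiency: float) -> float:
    """Heat the amplifier must shed: input power minus delivered power."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    input_watts = output_watts / efficiency
    return input_watts - output_watts


# Assumed efficiencies, for illustration only:
#   0.25 -> textbook ceiling, resistor-loaded single-ended Class A at full output
#   0.90 -> plausible Class D figure near full output
for name, eff in (("Class A", 0.25), ("Class D", 0.90)):
    print(f"{name}: {dissipation_watts(100, eff):.1f} W of heat for 100 W out")
```

Under these assumptions, the Class A design burns 300 W to deliver 100 W while the Class D design burns about 11 W, the same kind of "cost for the same result" trade-off as the Ray Tracing frame-rate penalty.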

Also, you spoke about:

"Ray Tracing is nice, but is it really worth a $1,000 GPU?" and "Should I spend $1,000 on a DAC so that I can upgrade my SINAD from 90 dB to 100 dB?".

There is an equivocation error here: you don't get to choose a $1,000 GPU from a manufacturer offering a SKU without Ray Tracing hardware, where you somehow get that performance back in contemporary rasterization. In the same way, I don't get to choose my RME without DSD playback capability, when the reason I spent the thousand dollars was mostly for the creature comforts. Likewise, when I buy a 2080 Ti, I'll be turning off Ray Tracing in-game to salvage the huge FPS that Ray Tracing obliterates when it's turned on. BUT EVEN THAT sort of approach has diminishing returns, as maximizing frames-per-second throughput runs into severe diminishing returns with current display tech. Asus now has a 360 Hz monitor that they showed off at CES 2020; I'm sure you would be on my side and say most folks won't see the difference between 240 Hz and 360 Hz monitors for casual gaming, or for general computing of any kind outside of gaming.
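The diminishing returns of higher refresh rates fall straight out of the arithmetic: each frame is shown for 1000 / Hz milliseconds, so every step up saves less time than the one before. A minimal Python sketch comparing a few common refresh-rate jumps.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """How long each frame stays on screen at a given refresh rate."""
    return 1000.0 / refresh_hz


for low_hz, high_hz in ((60, 120), (120, 240), (240, 360)):
    saved = frame_time_ms(low_hz) - frame_time_ms(high_hz)
    print(f"{low_hz} Hz -> {high_hz} Hz: "
          f"{frame_time_ms(low_hz):.2f} ms -> {frame_time_ms(high_hz):.2f} ms "
          f"(saves {saved:.2f} ms)")
```

Going from 60 Hz to 120 Hz shaves about 8.3 ms off every frame, while 240 Hz to 360 Hz shaves only about 1.4 ms.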

As for people talking about spending $1,000 to upgrade SINAD from 90 dB to 100 dB, that's not needed anymore. In the interest of maintaining the spirit of what you were implying, I'll steelman your case: if you're spending $1,000 to go from 120 dB to something higher, then that is going to cost something. At that point such a DAC can be employed in scientific monitoring use cases, or simply serve to quell your own self-recognized placebo biases that perhaps you can't shake for whatever reason. In that case, I'd argue spending more wouldn't be out of the question. Though I still agree with you that my comparison sucked, as we were talking about consumer use cases.

Eventually, though, things like HDR and Ray Tracing will be incorporated into the main stack of general computing, in the same way 3D acceleration is part of virtually every piece of graphics hardware today, which, as you can imagine, was not so much the case for games back in the '80s. But currently, the stuff is handled very poorly. Ray Tracing isn't anything new, but dedicated accelerator cores bring it to the forefront for potential use in more consumer-oriented, experience-enhancing avenues. Likewise with HDR: not new, but unless it's seamlessly applied without regressions or compromises (at the expense of color uniformity or things of that nature), it's a bit of a waste.

Again, reading it over, I understand why my DSD/MQA comparison was a bit trash (I don't proofread, as you can tell)

;(

Tks (Major Contributor, joined Apr 1, 2019)

I agree with all that. (Oh, and RTG's hibernation has irked me more than Intel's 10nm woes. They really should stop fucking around and release a flagship card already, because as things stand they simply cannot compete at the top end, like... at all. I want to see Navi bring the same competition against Nvidia that Ryzen brought against Intel's endless Lake series of CPUs. Nvidia's latest architecture seems a bit long in the tooth, and is due to be superseded the moment a high-end Navi card hits from AMD. AMD just seems incapable of ever beating Nvidia on the performance front, sadly; then again, the budgets are vastly skewed.)

Also, I want to say that it seems we're hitting economy-of-scale limits on many pieces of tech that are forever doomed to the R&D labs. Gone, it seems, are the days of jumps like those from CRT, or from dumb phones to smartphones. We seem to be in a rut, just trying to make what we have marginally better, since diminishing returns are hitting really hard and the costs of any serious movement are far more than can be afforded.

Whatever happened to this for example ;(

mhardy6647 (Grand Contributor, joined Dec 12, 2019)

"Elac Debut 6 V2. I purchased the original Debut 6 on a whim a few years back when it was “crowned” king of the budget bookshelves. I found it to be a strangely voiced speaker: amazing amounts of bass for the size, a smooth high end, but a kind of “sucked out” midrange, which is the most important frequency range for detail in music. I tried to like it, but got no feeling whatsoever. Had to sell."

I am (still), as mentioned earlier in this thread, in pretty much the same boat with the second-generation model. They were on sale, though, so they are still here.

I think it's used in the Eizo Prominence CG3145. But yeah, it suffered the same fate as SED and FED; actually, Panasonic simply exited the panel business. Too bad, since they were the best. I'm sure an all-Japanese manufacturer couldn't withstand the pressure from China and other lower-cost labor countries.

Honestly, the real problem is the average consumer's willingness to eat shit. Why bother making anything other than shit in that situation? This is why buying from the "pro" market is usually the only way to get some quality.

That's why I'm interested in cheap hi-fi market speakers. Where is the hi-fi for the money? The JBLs set a fu**ing high mark for the typical passive hi-fi speaker market: 220€ for a pair of decent active speakers. So I want the same and better from the hi-fi market. For 200€ I want to see a pair of good passive speakers. Crazy wishing? I don't think so.

"You could make the same argument about sub $200 DACs and headphone amps, but that's probably the most tested price range here, and some of them measure close to the best tested. Until there's some test data we won't know whether speakers will be similar, or more like power amps where you need to spend a bit more to get the performance."

This doesn't follow. The chips for a DAC cost pennies; the most expensive bits will be the teensy little case and feeble power supply.

Active speakers already necessarily have more expensive electronic parts, and much bigger power supplies in each speaker, than a complete DAC does; then the drive units are mechanical items that are more expensive to make than chips, and they need a bigger, more expensive box.

I would expect the ratio to be nearer 5:1, maybe more.

Tks (Major Contributor, joined Apr 1, 2019)

Tbh, I don't know how many people are willing to eat shit. It seems they're being sold shit to eat that they're either under the illusion isn't shit (because it's been dressed up and seasoned so much you can't see the shit), or they're just too oblivious to care (meaning simply uneducated).

Kinda reminds me of fast food joints where the food sometimes contains parts of meat that resemble what the animal looked like before being processed. Then you see the shock and anger when people find that in their food, while others simply realize that's what they're actually buying anyway (chopped-up animal bits and pieces turned into "burgers" or "nuggets" rather than "flesh").

Likewise, you have companies selling low-tier electronics and passing them off as either mid-tier or upscale. When stuff like that is allowed to fester, you end up where we are now.

"I guess it is a little over budget for this thread. It would be interesting, however, to measure the Apple HomePod speaker. Maybe you know someone with one they could let you measure."

I thought that too, but it adjusts itself to suit the location it is in using built-in microphones, and I don't know how long that would take, or whether test signals would confuse it into getting it wrong.

HorizonsEdge (Active Member, joined Dec 20, 2019)

HTD Level 2's

Sony SS-CS5

Pioneer SP-BS22

I think you all want me to spend what free time I have measuring speakers, not building them. So I am going to pass on kits, even though I am very interested in measuring some of these.

Ah yes, I remember the buzz about this. Ordered.

If built correctly, DIY speakers are almost always a better value than off-the-shelf ones. At all price points, I think DIY speakers would be an invaluable addition to ASR's measurement database. I have built several and would be willing to build and test them prior to shipping to ASR for testing. I own Zaph ZA5.2 TMs and have parts to build a spare. Even for DIY, the under-$200 price point is a challenge. The ZA5.2's parts somewhat exceed that price point, but it depends on the cabinetry and how the crossovers are supplied. Unless cabinets are supplied, I suggest sticking to MDF for designs that don't supply them. As I am typing, I realize I may need to do some more thinking about costing the cabinetry...

I would need some help to offset the cost and would limit finishing to a coat of Duratec, but if ASR members want DIY speakers tested, I am willing to make it happen.

Ww (joined Oct 25, 2019)

Adam T5V!

This! Even though it's a bit out of the price range stated.

I am fascinated by powered ribbon-tweeter speakers like the Adam T5V and the old Emotiva Airmotiv 4/5, which seem to have a strong following as desktop computer speakers at a relatively low price.
