dasdoing


lol at that funky rise of FR in the highs. you would expect that you can identify this in the sibilance

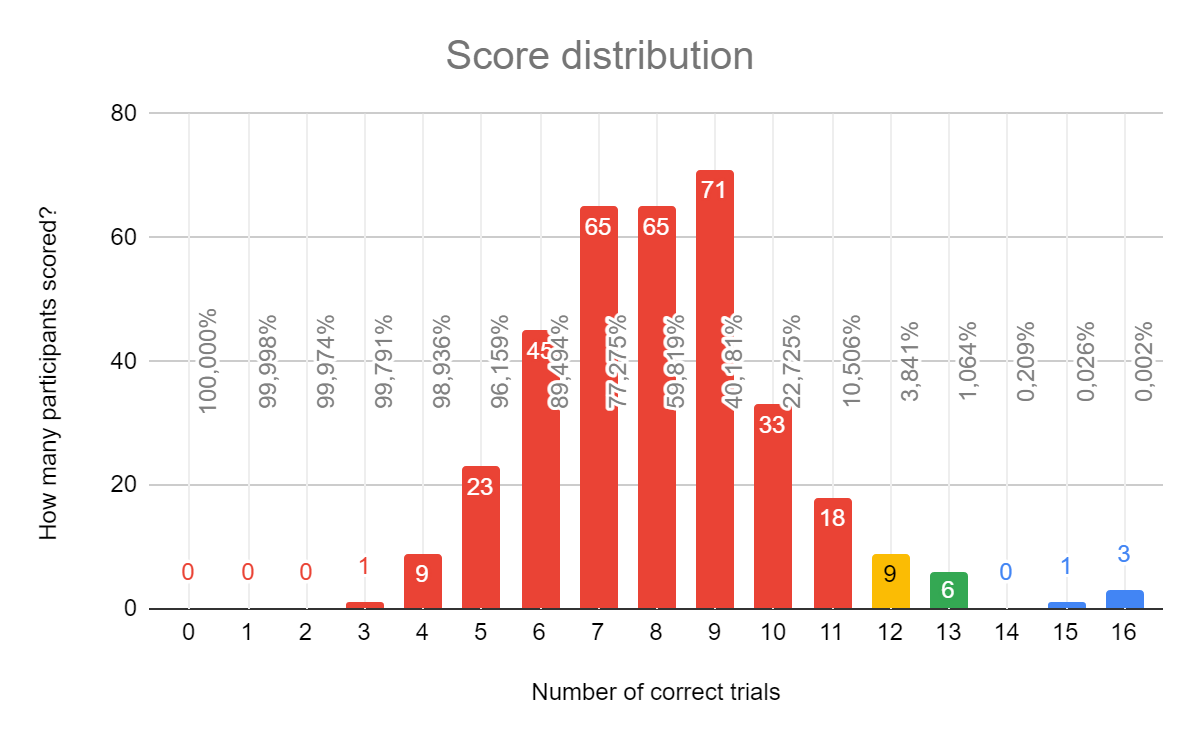

As I've commented before, statistically there are two populations here, as the 4 cases on the right are very unlikely to be generated by a Gaussian with the sample mean and variance. A simple t-test would confirm that, but it's quite obvious. Of course it can happen by chance, but that chance is really low; or, put another way, the probability that the observed distribution was generated by a Gaussian with the sample mean and variance is very low, so it's more likely there are two populations. Intuitively, Gaussians decay very quickly and very deeply; they don't revive, so to speak.
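A Gaussian is only an approximation here: each attempt is 16 right-or-wrong trials, so the "two populations" intuition can also be checked with an exact binomial tail. This is a sketch that assumes every trial is an independent 50/50 guess and every attempt is independent (not guaranteed, since some people retook the test); the helper `upper_tail` and the constant names are illustrative, not part of the test itself.

```python
from math import comb

def upper_tail(k: int, n: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p): chance of k or more successes."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

TRIALS = 16     # ABX trials per attempt (from the thread)
ATTEMPTS = 350  # total attempts (from the thread)
OUTLIERS = 4    # attempts scoring 15 or 16 out of 16 (from the thread)

# Chance that one pure-guessing attempt scores 15/16 or 16/16.
p_high = upper_tail(15, TRIALS)

# Chance that 4 or more of 350 independent guessing attempts do so.
p_four_or_more = upper_tail(OUTLIERS, ATTEMPTS, p_high)

print(f"P(score >= 15/16 by guessing) = {p_high:.6f}")
print(f"P(>= {OUTLIERS} such attempts out of {ATTEMPTS}) = {p_four_or_more:.2e}")
```

Under those assumptions a single guessing attempt reaches 15/16 or better with probability 17/65536, so 4 such attempts out of 350 are very unlikely to come from luck alone. The calculation cannot say whether the cause is genuine hearing or something else.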

As we see, out of the total 350 attempts, 19 beat the lax <5% p-value criterion; of those 19 attempts, 10 were borderline for the stricter <1% criterion, and only 4 were well below it - scoring 15 or all 16 correct out of 16 trials.
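These thresholds follow from the binomial distribution for 16 trials at a 50% guess rate. As a sketch under the same assumptions (independent, pure-guess trials; `p_value` is an illustrative name), this lists the one-sided p-value for each score and how many of the 350 attempts would be expected to reach that score or better by chance alone:

```python
from math import comb

TRIALS = 16
ATTEMPTS = 350

def p_value(correct: int, trials: int = TRIALS) -> float:
    """One-sided p-value: chance of scoring `correct` or better by guessing."""
    return sum(comb(trials, i) for i in range(correct, trials + 1)) / 2**trials

for k in range(11, TRIALS + 1):
    p = p_value(k)
    # Expected number of the 350 attempts scoring k or better by luck alone.
    expected = ATTEMPTS * p
    print(f"{k:2d}/16  p = {p:.4%}  expected by chance = {expected:.2f}")
```

With these assumptions 12/16 is the lowest score under 5% and 14/16 the lowest under 1%, with 13/16 just above it at about 1.06%. The last column is a reminder that, with 350 attempts, about 13 would be expected to pass the lax 5% criterion through luck alone.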

Do you know if the 4 attempts on the right were made by people who also had other attempts? Were they made by 4 different subjects? I ask because the person is a meaningful variable here, as one's knowledge, training and capacity make all the difference, so we can't lose track of it.

Note that here I'm saying 'attempts' instead of 'participants' - this is because a few participants reported they took the test more than once.
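One reason the attempts/participants distinction matters: if the same person retakes the test, each retry is another chance for a lucky high score. A minimal sketch, assuming independent pure-guess runs, with a 14/16 threshold chosen only for illustration (the function name is hypothetical):

```python
def chance_of_lucky_run(p_single: float, runs: int) -> float:
    """Probability that at least one of `runs` independent pure-guess runs
    reaches a score that a single run reaches with probability `p_single`."""
    return 1 - (1 - p_single) ** runs

# P(score >= 14/16) for one run when every trial is a coin flip:
# (C(16,14) + C(16,15) + C(16,16)) / 2**16 = (120 + 16 + 1) / 65536
P_14_OF_16 = 137 / 65536

for runs in (1, 2, 5, 10):
    print(f"{runs:2d} run(s): {chance_of_lucky_run(P_14_OF_16, runs):.3%}")
```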

I cannot reliably say, sadly - there are clues in the metadata sometimes that may indicate the same person took the test multiple times, but really I cannot be certain. There is no way for me to identify individual test participants - it is an anonymous test.

To be honest, I was originally expecting more participants to be able to tell these DACs apart, because there are frequency response differences between them. I'm not sure that is what most would call 'subtle differences', as many good DACs have better-matched frequency responses than these two - usually the differences would be in noise level and distortion.

From here, perhaps the next step would be to confirm that these people are indeed capable of always telling the two DACs apart, and then just ask them how they do it, so we can all learn. This knowledge could then be taught to a small random sample of people from the red region, who would take the test again; if they then proved able to tell the DACs apart, you would have a very nice proof that, with training, anyone can hear very subtle differences. Or am I just building castles in the air?


got 12 of 16 on first try, but for the first 5 or so I was still figuring out where to listen.

curious if I can do better on second try, later perhaps.

I see, thanks for all the references. I agree with you then: the controls aren't solid enough to conclude anything, and more experiments should be performed to confirm whether anyone is really capable of telling the two DACs apart. My suggestion was a bit naive, actually.

Also, please note that it is possible to 'cheat' in this test, as the FR differences between sound clips in the stream can be measured - see post #76 where I show the difference between the files, and then posts #140 and #141 where another user suggested this. Another example of how the test can be made easier can be found in post #98.

IMHO there is unfortunately not enough control in this test to assume that an individual participant who scored highly actually heard a difference, and it is also quite possible that some of those who scored under the threshold could score better under controlled circumstances and/or with training.

IMO you accomplished that, and this post is very useful as an example to show people whenever someone says they can hear a difference between DACs, etc.

TBH my intention here was just to provide a simple-to-use demonstration illustrating that differences between DACs that measure very differently can be much smaller than anticipated (looking at the data and/or price) - and I hoped this would be especially interesting to those who otherwise hadn't had a chance to participate in a level-matched, double-blind ABX test.

If you try 10 times and get 11 or 12 each time, it is more or less the same as scoring 15 one time (haven't done the numbers, just want to make my point). It's very unlikely that you can score 11 or 12 five or ten times in a row by guessing randomly. So it looks like you have your foot on its throat.

did again and got 11.

So I guess I am not fully guessing, but the difference is too small to be sure?
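The argument that several runs of 11 or 12 can be as convincing as one 15 can be put into numbers by pooling runs into a single binomial test. This sketch assumes all pooled runs come from the same listener and that every trial is an independent 50/50 guess; `p_value` is an illustrative helper:

```python
from math import comb

def p_value(correct: int, trials: int) -> float:
    """One-sided binomial p-value for `correct` or more out of `trials` at 50%."""
    return sum(comb(trials, i) for i in range(correct, trials + 1)) / 2**trials

single_run = p_value(15, 16)            # one run at 15/16
pooled_5 = p_value(5 * 11, 5 * 16)      # five runs of 11/16 pooled: 55/80
pooled_10 = p_value(10 * 11, 10 * 16)   # ten runs of 11/16 pooled: 110/160

print(f"15/16 once:           p = {single_run:.2e}")
print(f"11/16 x5  (55/80):    p = {pooled_5:.2e}")
print(f"11/16 x10 (110/160):  p = {pooled_10:.2e}")
```

Under those assumptions, five pooled runs of 11/16 land in the same order of magnitude as a single 15/16, and ten such runs are considerably stronger evidence than one high score.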

I think the shape of the result shows a clear picture. Those 4 results on the right are clear outliers, which suggests some kind of cheat was used.

That's a possible explanation of the outliers. Another is that a subset of people can genuinely hear the difference, as discussed in #182 above.

If you only did these two trials (1st with 12/16 correct, and then 2nd with 11/16) and we treat them as a single trial with 23 correct out of 32 attempts, we get a p-value of 1.0031%.
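The 1.0031% figure can be reproduced exactly with rational arithmetic. A sketch, assuming both runs are pooled into one 32-trial test with a 50% guess rate (`exact_p_value` is an illustrative name):

```python
from fractions import Fraction
from math import comb

def exact_p_value(correct: int, trials: int) -> Fraction:
    """Exact one-sided p-value for `correct` or more right answers out of
    `trials`, assuming each trial is a 50/50 guess."""
    hits = sum(comb(trials, i) for i in range(correct, trials + 1))
    return Fraction(hits, 2**trials)

p = exact_p_value(12 + 11, 16 + 16)  # 23 of 32, the two runs pooled
print(p, f"= {float(p):.4%}")
```

The result, 43081973/4294967296, is about 1.0031%, matching the figure above.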


I guess I could do better with a high-pass filter, but I don't want to mess up the results.

I focused on the "s" of "myself"; the SINAD difference seems impossible to hear.

So while the probability of the result being caused by chance is relatively low (but not zero!), the number of incorrect trials is a testament to the audible differences being far from obvious.

Thanks, I'm very glad you found it interesting!

I find this thread fascinating and an eye-opener!

I can't identify users, so I can't really say for sure. I also can't be sure whether or not some (of the very few that did score well) used spectrum meters or similar to 'cheat'. There's unfortunately no way for me to control for that - it is a limitation of a remote/online test format.

Maybe somebody already asked... in the 350-plus tests taken, can we identify some users who consistently score above 12? (or wherever you'd think the number of correct answers becomes statistically significant)
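Where "statistically significant" starts depends on the chosen alpha. A small sketch, assuming one-sided tests and pure 50/50 guessing on each trial (`min_significant_score` is a hypothetical helper), finds the lowest passing score for a 16-trial run:

```python
from math import comb
from typing import Optional

def min_significant_score(trials: int, alpha: float) -> Optional[int]:
    """Smallest number of correct answers whose one-sided p-value under
    pure guessing (0.5 per trial) falls below `alpha`."""
    for correct in range(trials + 1):
        tail = sum(comb(trials, i) for i in range(correct, trials + 1)) / 2**trials
        if tail < alpha:
            return correct
    return None  # even a perfect score does not reach this alpha

for alpha in (0.05, 0.01):
    print(f"alpha = {alpha}: {min_significant_score(16, alpha)}/16 or better")
```

With 16 trials this gives 12/16 at the 5% level and 14/16 at the 1% level.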

On the bright side, no matter what the outcome is, subjectivists will go on believing what they wish. So it all works out.

That might be due to timing. Your test has coincided with the holiday season. Many people are traveling or hosting relatives and probably haven't had a good time to sit down and do such a test yet. Be patient.

OTOH, when I've posted actual files for people to listen to and choose without knowing, the participation levels have always been abysmal.