It seems intuitive that we would all have different tastes in sound reproduction. After all, there are thousands of brands and models of speakers, each with a different sound. Surely that is because different people like different sounds. Another fact that bolsters this intuition is that there is no reference for audio. In that sense, there is no metric of accuracy either, which would make it a free-for-all: anyone could pick any sound as the one they prefer.

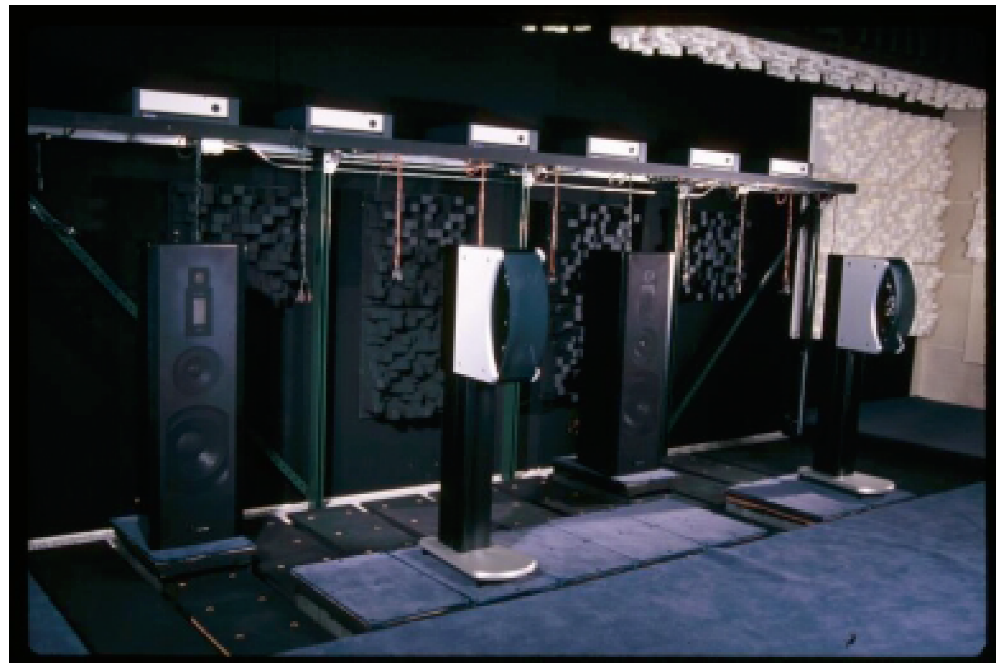

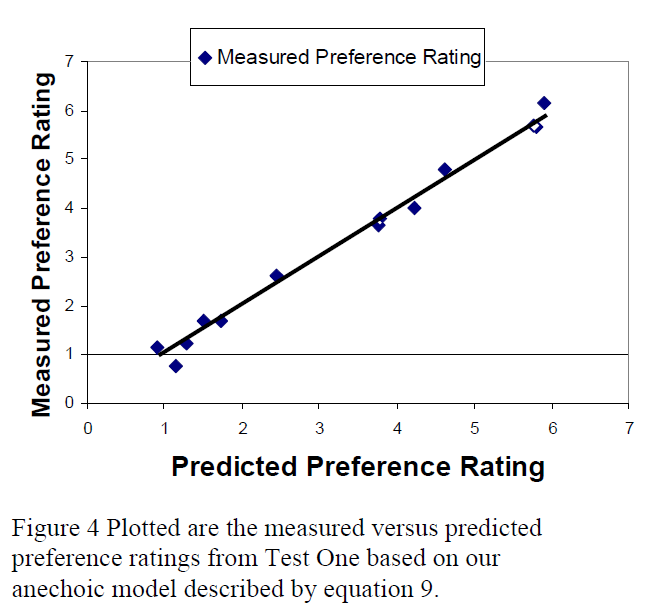

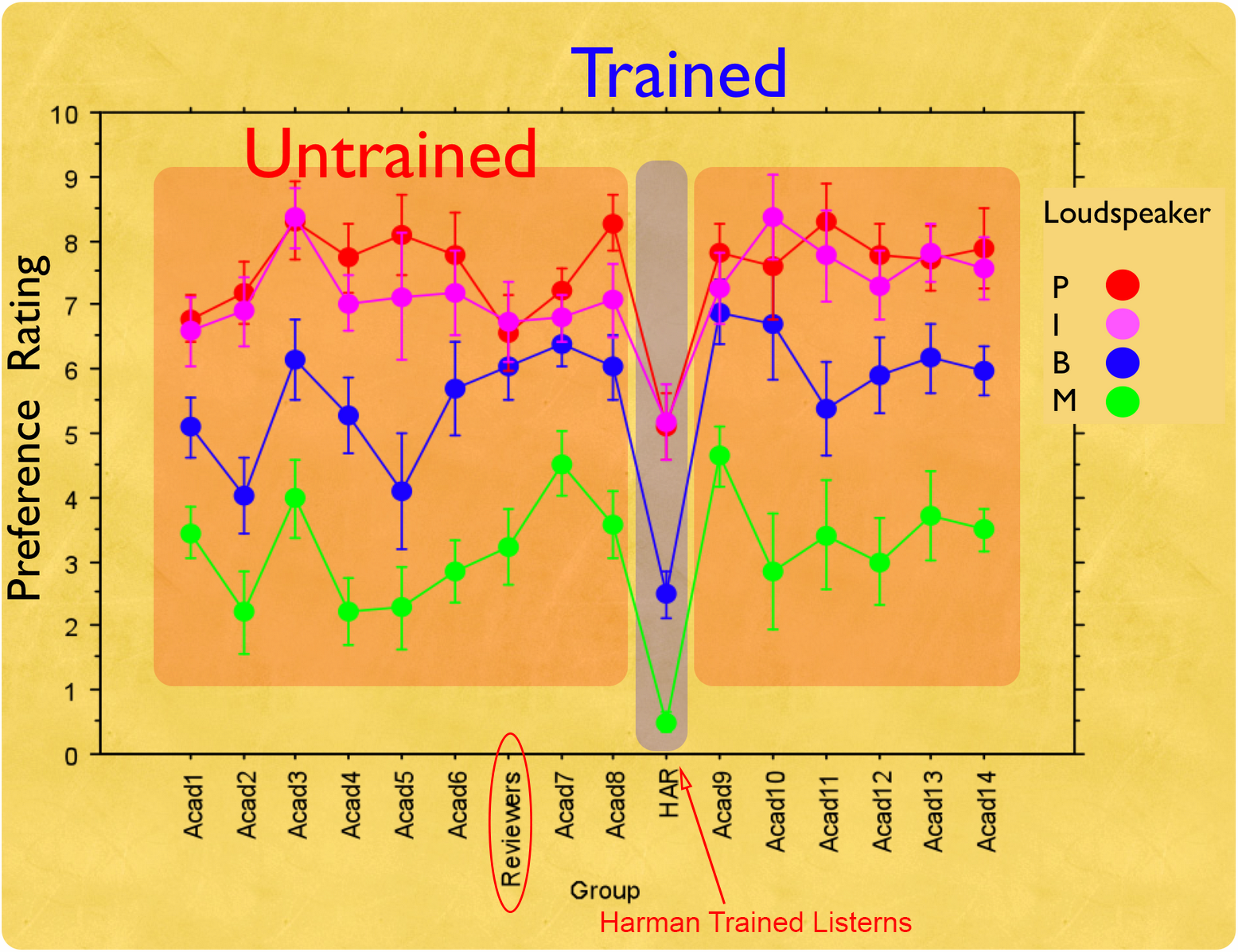

Well, everything I just said is wrong! It turns out we are remarkably alike in what we prefer subjectively. We seem to have an internal compass that points to good sound, and when we rely on that compass alone, we are able to determine what is proper and what is not. Dr. Toole, in his book Sound Reproduction: Loudspeakers and Rooms, puts this most eloquently:

"Descriptors like pleasantness and preference must therefore be considered as ranking in importance with accuracy and fidelity. This may seem like a dangerous path to take, risking the corruption of all that is revered in the purity of an original live performance. Fortunately, it turns out that when given the opportunity to judge without bias, human listeners are excellent detectors of artifacts and distortions; they are remarkably trustworthy guardians of what is good. Having only a vague concept of what might be correct, listeners recognize what is wrong. An absence of problems becomes a measure of excellence. By the end of this book, we will see that technical excellence turns out to be a high correlate of both perceived accuracy and emotional gratification, and most of us can recognize it when we hear it."

I will provide further proof of this, but think of how we would react to a cheap, boomy sub added to a system. I think we would all hear the same artifacts: the same overboosted bass.

As some of you know, Harman has built on work that Dr. Toole and others did at the National Research Council of Canada (NRC) in an effort to better understand our subjective preferences in loudspeakers. This is done through double-blind listening tests that reveal what makes a person prefer one speaker over others. The latest incarnation of this is a speaker shuffler that can swap speakers in and out in about 4 seconds. Here is the fixture in their multi-channel listening room:

The platform under the speakers moves, allowing them to be swapped. Add a curtain in front and a computer-controlled switching apparatus, and you have a double-blind test in which the listener judges which speaker sounds better without any other influence.
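The blinding that a computer-controlled switcher provides can be sketched in a few lines. This is a minimal illustration, assuming the job is simply to hide each speaker behind a neutral label and randomize the playback order; `blind_session` and the speaker names are hypothetical and are not Harman's actual control software.

```python
import random


def blind_session(speakers, seed=None):
    """Assign neutral labels to speakers and pick a random playback order.

    The listener only sees the labels ("A", "B", ...); the key mapping labels
    back to real speakers stays with the computer until the test is scored.
    """
    rng = random.Random(seed)
    shuffled = list(speakers)
    rng.shuffle(shuffled)  # randomize which speaker hides behind which label
    labels = [chr(ord("A") + i) for i in range(len(shuffled))]
    key = dict(zip(labels, shuffled))
    order = labels[:]
    rng.shuffle(order)  # randomize the presentation order as well
    return key, order


# Hypothetical four-way comparison.
key, order = blind_session(["Speaker 1", "Speaker 2", "Speaker 3", "Speaker 4"], seed=42)
print("Playback order shown to the listener:", order)
```

Because neither the listener nor a human operator chooses the mapping, neither can steer the result; only the stored key links a label back to a brand after the votes are in.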

Here is another room they have with the same capability, where I attended a test:

The story goes that Dr. Sean Olive, who is in charge of this testing, decided to measure the correlation between a specific set of measurements made in the anechoic chamber and subjective preference in these double-blind tests. His work is documented in a two-part AES paper: http://www.aes.org/e-lib/browse.cfm?elib=12847

A Multiple Regression Model for Predicting Loudspeaker Preference Using Objective Measurements
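The idea behind a multiple regression model is to express the mean preference rating as a weighted sum of several objective metrics and to fit the weights from listening-test data. Below is a generic ordinary-least-squares sketch using NumPy, assuming one row of metrics per speaker. The metrics, function names and synthetic data are hypothetical; this is not Dr. Olive's model and does not use his variables or coefficients.

```python
import numpy as np


def fit_preference_model(X, y):
    """Fit y ~ b0 + X @ b by ordinary least squares.

    X: (n_speakers, n_metrics) objective metrics, one row per speaker.
    y: (n_speakers,) mean preference ratings from blind listening tests.
    Returns (intercept, coefficients).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    A = np.column_stack([np.ones(len(X)), X])  # prepend an intercept column
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta[0], beta[1:]


def predict_preference(intercept, coefs, X):
    """Predicted rating for each row of metrics."""
    return intercept + np.asarray(X, dtype=float) @ coefs


# Synthetic demo: 20 hypothetical speakers, 2 made-up metrics, known weights.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(20, 2))
y_demo = 5.0 + X_demo @ np.array([-1.5, 0.8])
b0, b = fit_preference_model(X_demo, y_demo)
print(b0, b)
```

Because the synthetic ratings contain no noise, the fit recovers the weights exactly. Real listening-test ratings are noisy, which is one reason a model's correlation with measured preference falls short of 1.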

The results were remarkable. Not only did he find a correlation, but the match was nearly perfect for speakers in the same class:

Note that these were not specially selected speakers. On the contrary, they were a wide variety, as Dr. Olive writes in the paper:

"The selection of 70 loudspeakers was based on the competitive samples purchased for performance benchmarking tests performed for each new JBL, Infinity and Revel model.

The price range of samples varied from $100 to $25,000 per pair and includes models from 22 different brands from 7 different countries: United States, Canada, Great Britain, France, Germany, Denmark and Japan. The loudspeakers included designs that incorporated horns and more traditional designs configured as 1-way to 4-ways. Some used waveguides, while others did not. The sample also included four professional 2-way active models referred to as “near-field” monitors. The vast majority of the speakers were forward-facing driver designs, with one electrostatic dipole sample."

The scale of the testing was also extensive:

"The preference ratings for the 70 loudspeakers were based on a total of 19 listening tests conducted over the course of 15 months. All of the tests were performed under identical double-blind listening conditions, as described in Part One (see section 3). Controlled variables common to all 19 tests include listening room, program material, loudspeaker and listener location, playback level, experimental procedure and loudness normalization between speakers.

The preference ratings in one of the tests are based on the mean preferences of 268 listeners (12 trained and 256 untrained) reported in [24]. All other tests were done using trained listeners."
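Loudness normalization matters because, if levels are not controlled, a speaker that simply plays louder can gain an unfair advantage in a comparison. A minimal sketch of level matching follows. It assumes a plain RMS level is an adequate stand-in for loudness; real tests may use frequency-weighted or perceptual loudness measures instead. The function names and capture values are hypothetical.

```python
import math


def rms_db(samples):
    """RMS level of a block of samples in dB, relative to an RMS of 1.0."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)


def matching_gain_db(measured_db, reference_db):
    """Gain in dB that brings a speaker's level up (or down) to the reference."""
    return reference_db - measured_db


def db_to_linear(gain_db):
    """Convert a dB gain to a linear amplitude factor."""
    return 10 ** (gain_db / 20)


# Hypothetical microphone captures of the same test signal on two speakers.
reference = [0.5, -0.5, 0.5, -0.5]
quieter = [0.25, -0.25, 0.25, -0.25]
gain = matching_gain_db(rms_db(quieter), rms_db(reference))
print(f"Boost the quieter speaker by {gain:.2f} dB")  # prints 6.02 dB
```

Halving the amplitude costs about 6 dB, so the sketch asks for roughly +6 dB (a linear factor of 2) on the quieter speaker.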

I won't bore you with pages and pages of statistics and analysis from the paper; let's go right to the punchline:

"A new model has been developed that accurately predicts preference ratings of loudspeakers based on their anechoic measured frequency response. Our model produced near-perfect correlation (r = 0.995) with measured preferences based on a sample of 13 loudspeakers reported in Part One. Our generalized model produced a correlation of 0.86 using a sample of 70 loudspeakers evaluated in 19 listening tests. Higher correlations may be possible as we improve the accuracy and resolution of our subjective measurements, which is a current limiting factor."
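To make "correlation" concrete: the Pearson coefficient r measures how closely two sets of numbers (here, predicted and measured preference ratings) fall on a straight line. A value of 1 means a perfect positive relationship and 0 means none. A minimal pure-Python sketch follows; the function name and sample values are illustrative only.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 long")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Illustrative values only: a perfect linear match gives r = 1.
predicted = [2.0, 4.0, 6.0]
measured = [3.0, 5.0, 7.0]
print(pearson_r(predicted, measured))
```

Squaring r gives the share of variance explained by a linear fit. So r = 0.86 corresponds to r² ≈ 0.74, and r = 0.995 to r² ≈ 0.99.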

Let me repeat: a set of objective measurements, with no notion of what humans like or dislike, is able to predict to a high degree what we like in the sound of speakers!

Still not convinced? Well, let's move to a different paper: http://www.aes.org/e-lib/browse.cfm?elib=12581

Differences in Performance and Preference of Trained versus Untrained Listeners In Loudspeaker Tests: A Case Study

As the title indicates, Dr. Olive wanted to see whether Harman's trained listeners were biased toward liking the company's own speakers, so that their preferences did not match those of the general population. Instead of quoting the paper, allow me to post a graph from a PowerPoint presentation by Dr. Olive on this research:

The horizontal axis lists the different groups that participated in the test. As you can see, one group was hi-fi reviewers and another, of course, was Harman's trained listeners. The vertical axis is the preference rating. Each line on the graph represents a different speaker, blind-tested by each group.

We see that the groups differ from each other in the scores they give. Harman's trained listeners were highly critical and gave much lower scores; the green speaker, for example, got nearly zero on preference, while the reviewer group gave the same speaker a score of 3.

The important bit is the relative ranking of the four speakers tested. Harman's trained listeners, like every other group, gave the green speaker the worst score. At the other extreme, the speaker in red garnered nearly the same top score from every group of listeners that participated in the test.
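Groups can disagree on absolute scores yet agree on ranking, and a rank correlation captures exactly that. A minimal sketch of Spearman's rank correlation (Pearson's r computed on ranks) follows. The four scores per group are hypothetical, chosen so one group rates harshly and the other generously but in the same order; they are not read from Dr. Olive's graph.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """1-based ranks from lowest to highest; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson's r applied to the ranks."""
    return pearson_r(ranks(x), ranks(y))


# Hypothetical scores for four speakers: one group rates harshly, the other
# generously, but both put the speakers in the same order.
harsh = [0.5, 2.0, 4.0, 6.0]
generous = [3.0, 4.5, 5.5, 7.0]
print(spearman_rho(harsh, generous))  # prints 1.0
```

Spearman's rho ignores how far apart the groups' scales sit and asks only whether the ordering agrees.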

On a personal level, I have taken the same test, and my votes matched those of the larger population exactly. I took the test twice: once with a group of dealers, and once with Harman employees and top acoustics experts. One Harman employee voted differently from the rest of us. I voted the same way in both tests, and the majority of dealers voted the same way as I and the wider population did.

So no, as a rule we do not have different preferences. You may be the exception, but the odds are well against it.