Would a recommendation based on objective rankings be possible in the ASR equipment tests?

I am wondering whether it would be possible, and whether it would make sense, to base component recommendations (at least for electronic components such as amplifiers and DACs) on a set of objective measurements. The main ASR reviews are supported by a wide set of measured data; however, the final verdict, “recommended” or “not recommended”, rests on the judgment of @amirm, and as such it is subjective rather than objective. Is that OK? Maybe yes, maybe no. My doubts come from several review recommendations. I would name two of them, where

Crown 4|300N Amplifier Review (www.audiosciencereview.com)

is a recommended component, but

Review and Measurements of Musical Fidelity M2si Amp (www.audiosciencereview.com)

is not recommended, with the explanation: “I can't recommend it on pure performance”.

I think it would be nice to have an automated system that evaluates a full set of measurements (frequency response, noise, dynamic range, THD and THD+N, THD+N vs. frequency sweep, IMD, IMD+N vs. frequency sweep, ...) in a way similar to the RightMark Audio Analyzer software (RMAA). The resulting ranking would be, in my opinion, much more objective than the subjective opinion of one person. Given that we are on the Audio Science Review forum, it would probably also fit better with the forum's goal: to bring readers independent, objective information.
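To make the idea a bit more concrete, here is a rough Python sketch of how such an automated evaluation might work: each measured value is mapped to a grade through fixed thresholds, and the overall verdict is simply the worst individual grade. Everything in it is an assumption for illustration only. The metric names, the grade labels, the threshold values and the "worst grade wins" rule are all made up by me; they are not RMAA's actual limits and not anything ASR uses.

```python
# Grade labels, ordered from best (index 0) to worst (index 4).
GRADES = ["Excellent", "Very good", "Good", "Average", "Poor"]

# HYPOTHETICAL thresholds, invented for this sketch (not RMAA's, not ASR's).
# metric name -> (higher_is_better, limits ordered from best grade to worst)
THRESHOLDS = {
    "noise_dba": (False, [-100.0, -90.0, -80.0, -70.0]),
    "dynamic_range_dba": (True, [100.0, 90.0, 80.0, 70.0]),
    "thd_percent": (False, [0.001, 0.01, 0.05, 0.3]),
    "imd_percent": (False, [0.005, 0.02, 0.1, 0.5]),
}


def grade_metric(name, value):
    """Return the grade index (0 = best) for one measured value."""
    higher_is_better, limits = THRESHOLDS[name]
    for index, limit in enumerate(limits):
        passed = value >= limit if higher_is_better else value <= limit
        if passed:
            return index
    return len(limits)  # failed every limit: worst grade


def grade_device(measurements):
    """Grade every metric; the overall grade is the worst individual one."""
    indices = {name: grade_metric(name, value)
               for name, value in measurements.items()}
    per_metric = {name: GRADES[i] for name, i in indices.items()}
    overall = GRADES[max(indices.values())]
    return per_metric, overall


# Made-up measurement values, only to show the call.
per_metric, overall = grade_device({
    "noise_dba": -95.0,
    "dynamic_range_dba": 95.2,
    "thd_percent": 0.004,
    "imd_percent": 0.2,
})
print(per_metric, overall)
```

Taking the worst grade as the overall result is only one possible choice; a weighted average of the individual grades would be another, and the choice of weights would of course bring some subjectivity back in.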

For those who are not familiar with RMAA, this is what an RMAA chart looks like. An example of a full report may be seen here:

and this is the header only (from another test):

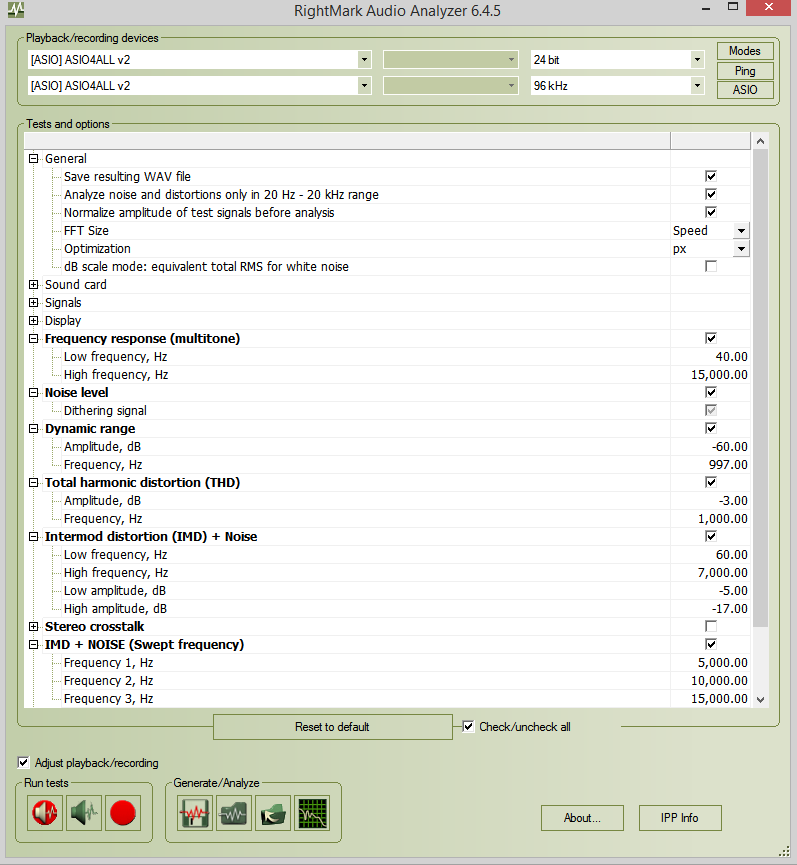

The test conditions may be chosen and displayed in the RMAA main window:

I especially like the IMD swept-tones test, which sweeps a pair of tones with a fixed 1 kHz spacing across the measurement band.
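As a small illustration of what a twin-tone sweep with fixed spacing means, the Python sketch below lists the tone pairs for a sweep and the frequencies where the second-order and third-order intermodulation products would be expected. It is not RMAA's implementation. The function names, the band limits and the sweep step are my own assumptions; only the 1 kHz spacing comes from the test described above.

```python
def twin_tone_sweep(start_hz, stop_hz, step_hz, spacing_hz=1000):
    """Yield (f1, f2) pairs with fixed spacing, keeping both tones in the band."""
    f1 = start_hz
    while f1 + spacing_hz <= stop_hz:
        yield f1, f1 + spacing_hz
        f1 += step_hz


def imd_products(f1, f2):
    """Frequencies of the low-order intermodulation products of two tones."""
    return {
        "f2-f1": f2 - f1,        # second-order difference tone
        "2f1-f2": 2 * f1 - f2,   # third-order product below the pair
        "2f2-f1": 2 * f2 - f1,   # third-order product above the pair
    }


# Assumed sweep: lower tone from 5 kHz upward in 1 kHz steps, band ends at 20 kHz.
for f1, f2 in twin_tone_sweep(5000, 20000, 1000):
    print(f1, f2, imd_products(f1, f2))
```

With a fixed spacing, the difference tone f2-f1 always stays at 1 kHz, while the third-order products move together with the tone pair, which is why a sweep like this shows how the IMD changes across the band.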

I would appreciate your thoughts on equipment recommendations based on an objective ranking of component test results.
