Validity Of X-curve For Cinema Sound

In my recent Widescreen Review Magazine article titled “There Is No ‘There’ in Audio,” I made a brief reference to the X-curve used for calibration of cinema sound and mentioned that there is no good reason to follow it for home theater applications. In this article I will provide a deeper dive into the X-curve, not so much for its direct applicability to home theater, but in the interest of covering some core concepts of good sound reproduction, be it in small or large spaces.

Background

There is a lot of controversy regarding the X-curve in the industry as I write this article. While I think everyone understands that there are issues with this aging standard, there are two schools of thought: one that seeks to defend what is there and only wants incremental changes, and the other that says let’s abandon the whole thing and start fresh. To be clear, I am in the latter camp.

Those in favor of keeping the system more or less the same make the following arguments:

1. The X-curve is not an equalization/target curve.[1] Rather, it is a response that shows up if you measure a loudspeaker with flat response in a large room with a real-time analyzer and pink noise as specified in the standard. Such a measurement will tend to show a decline in frequency response starting around 2 kHz at a rate of 3 dB per octave.

2. Since the X-curve is a measurement error, it is not a “target curve” in the sense of trying to modify the sound. That is, the goal is to get at flat response and compensate for the measurement error.

3. The reason behind the measurement error is the inclusion of the reverberations in the room. That is, when the direct (“on-axis”) sound of a flat response speaker is combined with reflections/reverberations in the room, the high frequencies appear to roll off when measured continuously using a Real-Time Analyzer (RTA) and pink noise. There is also an effect stated to be there in low frequencies which I am not addressing in this article.

If these arguments are right, then sure, we should stay the course and make fine corrections. Let’s examine if that is the case.

Background and History Of The X-Curve For Cinema Sound

One of my favorite TV cooking shows is the Japanese “Iron Chef.” The program starts with a great line from Brillat-Savarin: “tell me what you eat and I'll tell you what you are.” By the same token, to understand X-curve, it is essential that we examine the history and motivation behind it. We need to travel back to circa 1970 when the initiative started, leading to standardization by both SMPTE and international ISO organizations.

The history lesson comes ready for us in the form of Ioan Allen’s paper, “The X-Curve: Its Origins And History.” No, this is not some dusty type-written document from the 1970s but one penned for the SMPTE Motion Imaging Journal in 2006! Yes, 2006. It seems strange to have the documentation for such a standard come so late but here we are. The good news is that we can see if the author and champion of X-curve, which in this case is a film sound luminary, has any second thoughts multiple decades later.

Allen worked for Dolby Laboratories then and continues to do so as of this writing. You no doubt know Dolby as the pioneer of surround sound but the fame and fortune of the company came from another more mundane technology, namely noise reduction for analog sources such as professional multichannel tape recorders and later on, the cassette tape.[2] That invention was quite simple but very clever. The noise on tape is focused predominantly in the high frequencies (better called a “hiss” as a result). At recording time, the music level is equalized to boost its high frequencies, a process that is called “emphasis.” At playback, the inverse correction, called “de-emphasis,” is performed by lowering the levels of high frequencies by the same amount. This killed two birds with one stone: it restored the level of music high frequencies to what it was prior to emphasis, while also lowering the noise level. The result was a much-improved signal-to-noise ratio, which made Dolby Noise Reduction a standard in professional tape recorders and consumer cassette tapes. This resulted in a great royalty stream for Dolby. Bless their hearts for creating customer value and financial rewards for themselves.
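To make the emphasis/de-emphasis idea concrete, here is a minimal Python sketch. It assumes a fixed first-order filter and white hiss added between recording and playback; the coefficient `a`, the test tone, and the hiss level are illustrative choices of mine, and Dolby's actual noise reduction circuits are considerably more sophisticated than this.

```python
import math
import random

def pre_emphasis(x, a=0.5):
    """Boost high frequencies before recording (unity gain at DC)."""
    out, prev = [], 0.0
    for s in x:
        out.append((s - a * prev) / (1.0 - a))
        prev = s
    return out

def de_emphasis(r, a=0.5):
    """Exact inverse of pre_emphasis: cut the highs by the same amount."""
    out, prev = [], 0.0
    for s in r:
        prev = (1.0 - a) * s + a * prev
        out.append(prev)
    return out

def power(v):
    """Mean squared value of a sequence."""
    return sum(s * s for s in v) / len(v)

random.seed(0)
n = 20000
music = [math.sin(2.0 * math.pi * 0.01 * i) for i in range(n)]  # stand-in for music
hiss = [random.gauss(0.0, 0.1) for _ in range(n)]               # assumed white tape hiss

# Path 1: record and play back with no processing.
plain = [m + h for m, h in zip(music, hiss)]
# Path 2: emphasize, pick up the same hiss on "tape", then de-emphasize.
taped = [p + h for p, h in zip(pre_emphasis(music), hiss)]
restored = de_emphasis(taped)

noise_plain = power([p - m for p, m in zip(plain, music)])
noise_nr = power([r - m for r, m in zip(restored, music)])
print(f"hiss power remaining after de-emphasis: {noise_nr / noise_plain:.2f} of the original")
```

For this particular filter, theory says the residual hiss power falls to (1 - a)/(1 + a) of the original, so about one third with `a = 0.5`, while the tone itself comes back unchanged.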

As an interesting aside, the emphasis/de-emphasis technique is also part of the CD specifications! It is there to help reduce high-frequency quantization noise. While I recall some titles using it back when the CD format was introduced, I believe it is now nothing but a distant memory in digital systems.

On a personal note, and I don’t think I was remotely alone in doing it [2], I would record on my cassette tape with Dolby noise reduction, which would boost the highs due to pre-emphasis, but then play back without it. Why? Because you got more highs. The cassette decks of the time had limited high-frequency response, and recording without this pre-emphasis resulted in muffled sound to me. My method didn’t extend the frequency response but subjectively resulted in hearing more high frequencies. In other words, having hiss was less of an issue than restoring the impression of high frequencies. Our perception is quite a bit more complex than one intuits on the surface. A lesson to keep in mind as we think through cinema sound.

Anyway, why am I telling you all of this? Because as they say, necessity is the mother of invention. In this case, having successfully gotten A-type noise reduction into the professional music recording space, Dolby wanted to expand its market into movie sound but ran into a snag which later led to development of the X-curve. Here is Allen telling it in his paper:

First Dolby Excursions into Film Sound

“The year 1969 marked the first use of Dolby® noise reduction in the film industry, with the music recording of ‘Oliver.’ At that time, Dolby A-type noise reduction was widely used by the music recording industry, initially for two-track classical recording and then to counteract the noise build-up inherent with eight, and later sixteen, track recorders. A-type noise reduction was next used in the film industry for music recording and some magnetic generations on ‘Ryan’s Daughter’ (1970).

The author was frustrated at the limited benefit heard from this process when the films were released in theatres. In 1970, a test was conducted at Pinewood Studios in England with a remixed reel of ‘Jane Eyre,’ with A-type noise reduction actually applied to the mono-optical release print itself. Again, the results were disappointing in playback, as the reduction in noise did nothing to help the limited bandwidth and audible distortion.”

In principle, Dolby Noise Reduction should have worked the same way it did for music and reduced the noise of the analog (optical) recordings of movie sound. But it didn’t. The problem they ran into was that the recording/playback chain of movie sound already had its own ad-hoc equalization, and piling on an additional amount in the form of Dolby NR pre-emphasis created a mess, as Allen explains:

“It seemed obvious that the monitor characteristic for conventional optical sound mixing caused severe quality limitations. An (A+B) response [source + amplification/reproduction frequency responses] well over 20 dB down at 8 kHz led to excessive pre-emphasis. This in turn led to excessive distortion. Limited high-frequency response led to the mixer rolling off low-frequencies, to make the sound “more balanced” (see below). If the monitor response was flattened, less equalization would be required, and the distortion would be lower.”

So the mission turned into improving the end-to-end movie sound reproduction chain. At a high level, the goal was simple: have every venue that plays back the soundtrack sound the same as every place the movie sound is created/previewed. Logical enough. To get there, one would first have to determine what difference existed between the two preview/playback environments.

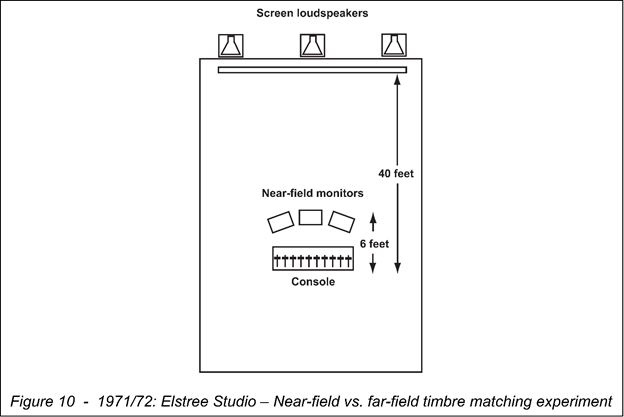

Comparing the production chain with playback was not easy to do. After all, how would you compare the sound produced in two different locations? Listening to one and then running to the other venue is not a practical thing. There is technology that allows for this, by the way, in the form of binaural recording, where we capture with two microphones what a prototype human head would “hear.” The recording can then be compared to the result of the same process performed in another venue. The experimental setup is shown in Figure 1 (Figure 10 in Allen’s paper) with the mixing/dubbing stage called the “near-field” and the cinema sound, “far field.” With this setup the larger theatre’s sound could be equalized iteratively until it perceptually matched what the near-field system was playing.

Figure 1: Ioan Allen's experiment comparing the sound of the mixing/dubbing stage to that of the larger theater.

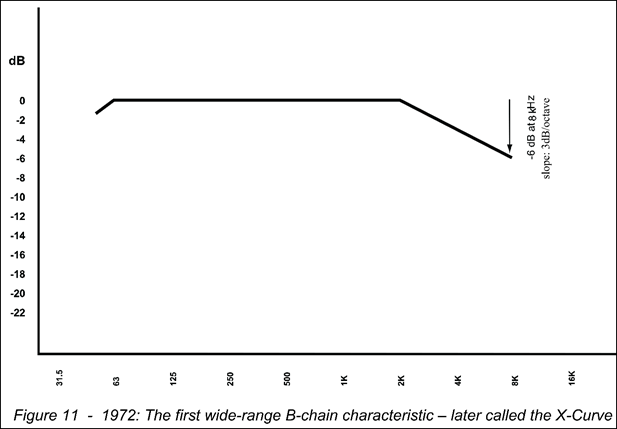

What did the differential equalization look like? Here again is Allen [emphasis mine]:

“A surprising development was the discovery that the best subjective match still showed an apparent slight HF droop. A slope of around 3 dB per octave from about 2 kHz seemed to give the best results, along with a slight limitation to low-frequency bandwidth, as seen in Fig. 11. […] The reason for the apparently desirable HF droop is not very easy to explain.”

And with it, the X-curve was born as Allen shows in Figure 2 (his Figure 11).

Figure 2: Original shape of the X-curve as a result of Allen's experiment.

The experiment seemed reasonable enough but we have a serious protocol problem. We can’t possibly assess cause and effect with just one listening test. Imagine trying to prove the efficacy of a drug by just testing it on one person. The single experiment would let us create a hypothesis, i.e. the larger the room, the more response variation starting at 2 kHz. It would be the follow-up controlled experiments that would validate it. Without that kind of follow-up testing, we could very well fall victim to other anomalies that led to these results.

Here is an example of that. In this test, two different brands of loudspeakers were used. The near-field was KEF and the far field, Vitavox. Surely the first order difference that one would hear is the timbre contrast of the two loudspeakers. Before jumping on a much more obscure cause, i.e. reverberations in the room, this factor should have been ruled out with another set of loudspeakers to see if the X-curve shows up more or less the same, i.e. it is a function of the room and not the loudspeaker. Ditto for using a different set of listeners who may have more acute timbre discrimination than the one used here.

And oh, you want to match levels and have such listening tests be blind, neither of which is stated by Allen to have been the case. Yes, these measures are necessary. Sighted evaluation, even when differences are big, is subject to placebo and bias. Here is a personal example. Last year we previewed at Madrona Digital the incredible new JBL M2 loudspeaker in our reference theatre in front of a group of audiophiles. These loudspeakers have superlatively smooth on- and off-axis response whose efficacy has been verified in double-blind controlled listening tests. Yet when you look at the M2s, they look like the typical “pro loudspeaker” with big horns and such, resulting in the immediate expression of “these can’t sound good.” While a number of listeners in our demonstrations were quite impressed with the sound of the M2s, some others insisted that the bass was wrong. The M2s have a sophisticated parametric EQ that allows their response to be shaped however you want. But knowing that the sound was already correct and it was possibly the biased perception that was the issue, we made a tiny, inaudible change (a fraction of a dB) and then asked if that fixed the problem. The answer? It did! What we changed there was perception, not reality. Without controlled, level-matched, blind testing we simply can’t fully trust the results of such tests.

Allen hypothesized on the causes he thought were in play to generate the unusual shape of the X-curve:

“There are three possibilities, singly or in combination:

(1) Some psychoacoustic phenomena involving faraway sound and picture.

(2) Some distortion components in the loudspeaker, making more HF objectionable.

(3) The result of reverberation buildup, as described below.”

Sadly, only #3 is discussed in the paper. The others just get a passing remark. The focus and justification of the X-curve in the minds of Allen (and as a group, people defending it today) then rests almost entirely on the shoulders of the effect of reverberations on measurements. Unfortunately, even this hypothesis gets passing, light treatment. Now we could forgive some of the crudeness of the research because of timing, this being back in the early 1970s. But in short order, i.e. by the early 1980s, acoustic research had advanced significantly, as evidenced by the extensive work of the likes of Dr. Floyd Toole, published by the Audio Engineering Society, which involved truly understanding sound reproduction and the importance of controlled testing to determine listener preference. Yet the movie sound industry kept marching on, as if we continued to live in the early 1970s.

The good news is that the industry is now aware of the issues around the X-curve, and for the last few years there has been considerable work in both AES and SMPTE to revise cinema sound. What the final outcome will be, I can’t say. What I can explain is the justification for setting aside the X-curve and starting fresh.

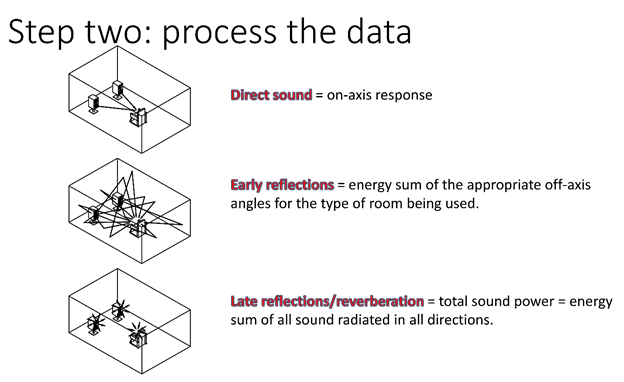

Loudspeaker In A Room

At the risk of getting pedantic, what we hear in a room is a combination of what the loudspeaker produces and all the sound waves reflecting and intermixing with the direct sound and each other over time, as shown in Figure 3 from Dr. Toole’s presentation on the state of cinema sound. [3] The fancy term for this is “superposition,” where the amplitude and phase of each waveform determine what the combination looks like and potentially sounds like.

Figure 3: Direct and indirect sound created by a loudspeaker in a room.[3]

As shown in Figure 3, the direct sound from the loudspeaker arrives at our ear first but shortly thereafter, the reflections also get there. The total sonic experience then is a combination of direct, on-axis sound and indirect off-axis radiations combining with it.

So what does this do to the sound we hear? At the extreme, the answer is way too complicated to provide. Every room is different. Every loudspeaker is different. Every listening position is different. The permutations are immense. We can use a computer model to analyze it all but ideally, we would have rules of thumb that would let us make generalized observations without it.

This is the crux of acoustic research that has been going on for more than a century: reducing the complexity yet maintaining sufficient accuracy to make correct judgments. There is no better version of this than the insight provided in 1962 by the German physicist Manfred Schroeder, a Bell Labs researcher, and all around “father of modern acoustics.”

Before I get into what Schroeder taught us, let’s imagine the simple example of throwing a stone in a pond. Assuming an ideal case, we will see pretty concentric circles emanating from the stone. At some point one of these circles hits the side of the pond, reverses direction and proceeds to combine with the waves that are still traveling from the source. The result is an interference pattern. Sometimes the waves cancel out. Sometimes they combine. These are the distinct patterns we see. Other times we get a combination of the two extremes. This is the superposition concept in play.

Now imagine the much more complex scenario of the waves hitting a number of reflecting surfaces and allowing for those reflections in turn to hit other shores and creating secondary reflections –– or tertiary. Now the outcome is not a predictable, geometric pattern. We get what boaters would call a “confused sea.” The surface of the water will appear chaotic and random.

Mathematically, a principle called the Central Limit Theorem says that if we add up a large enough number of independent random contributions, the distribution of the total starts to approach a “normal”/bell curve (properly called a Gaussian distribution). While the combination of reflected waves is not random per se, it can almost be treated that way as the number of waves combining with each other becomes large.

What this means is that we can start with an orderly event, in this case a pebble falling in a pond, but what we end up with is a random event with a predictable distribution. The same is true of sound in a room. [5][7][8][9][10][11][12][13][14][15][16] The pebble is replaced by our loudspeaker generating sound energy. Air is the water in the pond. And the walls, ceiling and floor in our listening space play the role of the shores of the pond, reflecting sound waves back. When we hit the point where the room behavior becomes random, we call this behavior “statistical.”

In contrast to statistical mode, when just a few waves are combining, we call that “modal behavior.”
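The shift from a few waves to many can be illustrated with a small Python sketch. It is a toy model under my own assumptions: every wave has the same amplitude and a completely random phase at the listening point, which real room reflections do not strictly obey. It checks how often the summed wave lands within one standard deviation of zero, something a bell curve does about 68% of the time.

```python
import math
import random

def summed_wave_samples(num_waves, trials=5000, seed=1):
    """Sum `num_waves` unit-amplitude waves with random phases, many times over."""
    rng = random.Random(seed)
    return [
        sum(math.cos(rng.uniform(0.0, 2.0 * math.pi)) for _ in range(num_waves))
        for _ in range(trials)
    ]

def fraction_within_one_sigma(samples, num_waves):
    """Share of outcomes within one standard deviation of zero."""
    sigma = math.sqrt(num_waves / 2.0)  # each cos(phase) has variance 1/2
    return sum(abs(s) <= sigma for s in samples) / len(samples)

for n in (1, 2, 50):
    frac = fraction_within_one_sigma(summed_wave_samples(n), n)
    print(f"{n:3d} waves: {frac:.2f} of outcomes within one sigma")
```

A single wave should give a figure close to one half, nothing like a bell curve, while the 50-wave case should land close to the bell-curve value. That is the sense in which a large pile of reflections can be treated statistically.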

From the point of view of proper sound reproduction in a room, statistical behavior is much preferred. In modal behavior, which as you will see occurs in low frequencies, the frequency response of the loudspeaker is heavily modified in distinct patterns much like the initial waves of the stone hitting the shores and coming back. Such pronounced and predictable patterns cause colorations that are readily audible. “Boomy bass” is the phrase here.

Not so with statistical behavior. Here, we have so many waves combining that the outcome while “rough,” is not deterministic. And that is a “good” thing when it comes to sound reproduction.

Think of whether you prefer to drive your car at speed on a smooth dirt road, i.e. lots of small variations, versus a paved road with huge potholes. The former will provide a smoother ride even though it has considerably more variations per foot travelled. The suspension in your car will filter out the small variations but pass through the large ones. The same is true of sound reproduction in a room. The filter is the auditory response of our hearing system, which lacks the resolution to detect the closely packed ups and downs in frequency response that characterize the statistical region. [5] Yet it passes through the large undulations of the modal region.

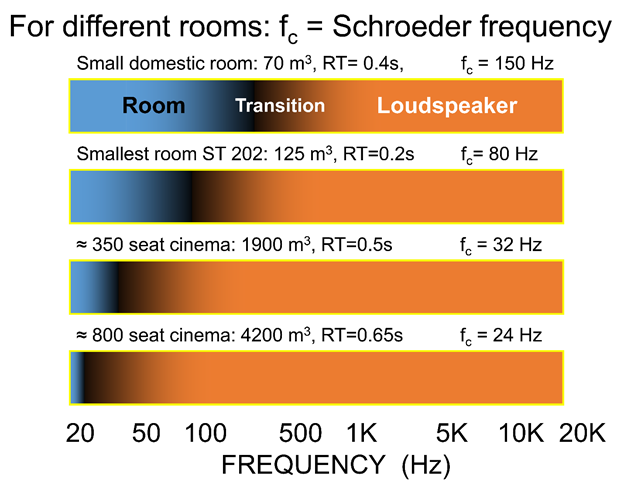

Every room has these modes and a region in between called the transition. And the person who told us how to determine these regions was Schroeder. His mathematical analysis led to a very simple formula that gives us the frequency at which the room behavior changes from modal to statistical. [8]

The formula is Fc = 2000·(T60/V)^0.5, where Fc is the frequency in hertz of the transition from modal to statistical (not really, see the next paragraph), T60 is RT60, the amount of time in seconds it takes for room reverberations to die down by 60 dB (one million times lower in energy), and V is the volume of the room in cubic meters. RT60 is a measured value, which is easily obtained today with a microphone and a computer program.

Even though Schroeder’s formula produces a single transition frequency, the reality is that there is no one magical point where nature abruptly acts differently. If you read Schroeder’s research and the mountain of other references I have provided you will see that the formula is an approximation based on the “modal density” which indicates how many waves are combining with each other in each unit of frequency (Hertz). It is the point of chaos if you will, i.e. how many waves have to pile up on top of each other before order can no longer be recognized. That point to some extent then is subjective and at any rate, not a single disconnected event.
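As a rough illustration of the formula, here is a short Python sketch. The two rooms are hypothetical, with volumes and reverberation times I picked only to show the scaling; they are not measurements of any real venue, and the constant 2000 assumes seconds and cubic meters.

```python
import math

def schroeder_frequency(rt60_s, volume_m3):
    """Approximate modal-to-statistical transition frequency in Hz."""
    if rt60_s <= 0 or volume_m3 <= 0:
        raise ValueError("RT60 and volume must be positive")
    return 2000.0 * math.sqrt(rt60_s / volume_m3)

# Hypothetical rooms, chosen only to illustrate the scaling.
rooms = {
    "home theater (100 m^3, RT60 0.4 s)": (0.4, 100.0),
    "large cinema (5000 m^3, RT60 0.7 s)": (0.7, 5000.0),
}
for name, (rt60, volume) in rooms.items():
    print(f"{name}: about {schroeder_frequency(rt60, volume):.0f} Hz")
```

These made-up rooms come out near 126 Hz and 24 Hz respectively. Because of the square root, quadrupling the volume at the same RT60 only halves the transition frequency.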

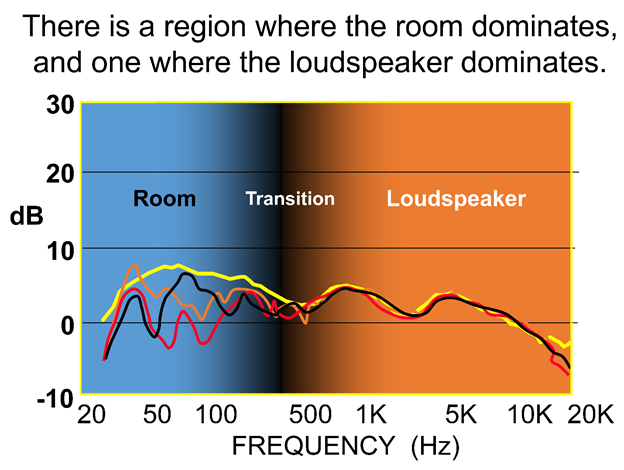

Recall that the X-curve says that there is a transformation in room sound around 2 kHz. As you can see from Dr. Toole’s presentation slide in Figure 4, for any room of interest, 2 kHz lands well above the transition frequency, whether we are talking about a home theater or a massive 800-seat theater. [1]

Figure 4: Transition frequency range for modal versus random effect of the room.

Due to dependency of Fc on volume, the transition frequency inversely scales with the size of the venue. For the smallest room representing what we may have in our home, the transition frequency is in the range of 125 Hz. This means a good bit of the spectrum of what we play, movies or music, will land at or below this and hence will have the “bad” modal behavior. Whereas for the 800-seat cinema, that problem is all but gone with a transition of just 24 Hz; only the LFE channel is impacted. The large room then is very much advantaged and will have exceptionally smooth bass response compared to our small home listening spaces.

Yes, there is a point to this discussion relative to the X-curve, namely the fact that as we go above the transition frequencies, the room imprint on the sound of the loudspeaker becomes smaller and smaller. What dominates the timbre and tonal quality then is mostly determined by the loudspeaker, not the room. This is shown nicely with actual measurements in another one of Dr. Toole’s slides in Figure 5, and in the detailed articles I have written on optimizing bass response in home theaters for Widescreen Review. [17]

Figure 5: Transition Frequency where the room matters versus loudspeaker.

The measurements in different colors show what happens if we move the microphone around the room. At frequencies below transition, the variations are quite large because the room response is modal. How a handful of waves combine heavily depends on where in the room we measure them. Go well above the transition frequency though and the room variations vanish. Mind you, they are still there but by matching our measurement resolution to that of the auditory filter/discrimination, we see that what is perceived is now location independent (tonally speaking). And if that is the case then the room is not imprinting its signature in those frequencies. Hence the categorization by Dr. Toole of that region being dominated by the “Loudspeaker.”

What this says is that if you don’t like what you are hearing above the transition frequencies, the problem is much more likely to be a loudspeaker than the room. A lousy loudspeaker will sound bad in many rooms. A good loudspeaker will sound good in many rooms.

It also says that if we care about the fidelity of the sound above transition frequencies, the place to look first and foremost is the loudspeaker. Not equalization of whatever is there. And certainly not with a one-third-octave equalizer stipulated in the X-curve standard (its resolution is too poor in the lower frequencies).
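To put a number on the resolution of a one-third-octave equalizer, the sketch below computes the edges of a band from its center frequency using the usual definition (edges one sixth of an octave either side of center). The center frequencies are just examples I picked, not values taken from the standard.

```python
def third_octave_band(center_hz):
    """Return (lower edge, upper edge, width) of a one-third-octave band in Hz."""
    lower = center_hz * 2.0 ** (-1.0 / 6.0)
    upper = center_hz * 2.0 ** (1.0 / 6.0)
    return lower, upper, upper - lower

for center in (50.0, 100.0, 2000.0):
    lower, upper, width = third_octave_band(center)
    print(f"{center:6.0f} Hz band: {lower:7.1f} to {upper:7.1f} Hz ({width:.1f} Hz wide)")
```

Each band is about 23% of its center frequency wide, so a single slider at 100 Hz moves everything across roughly 23 Hz at once. That can be coarser than the individual room resonances one might want to address at the bottom of the spectrum.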

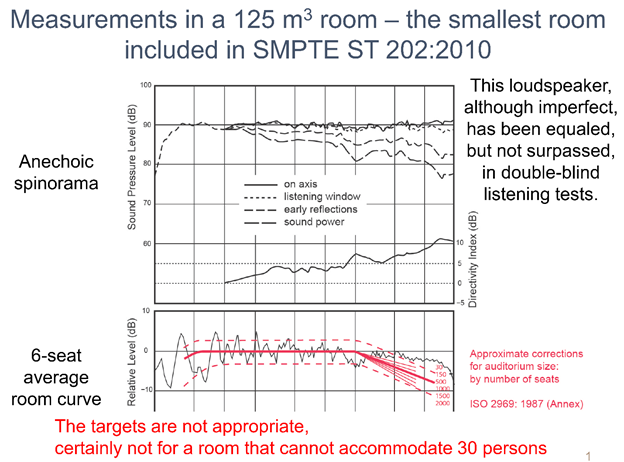

Authoritative research shows that a good loudspeaker produces on-axis, i.e. direct sound coming at you, and off-axis, i.e. sound going in other directions and hitting room surfaces, that are similar.[20] This is a remarkable observation as it means we can design a loudspeaker, measure it in an anechoic chamber and have that be enough to tell us how good it sounds in just about any room! Dr. Toole brings this insight together with the concept of the X-curve in Figure 6.

Figure 6: Response curve of an excellent sounding loudspeaker compared to X-curve equalization curves. Even though the loudspeaker response (in black on the bottom) violates the X-curve for a 30-seat theatre, it has managed to win preference tests of listeners in controlled listening tests.

There is a lot going on here so let me explain the components. The top graph shows the on-axis frequency response of a loudspeaker (top, nearly flat line) in addition to the average of a set of off-axis responses categorized as early and late reflections. Other than an unwanted dip around 2 kHz in the off-axis response, this loudspeaker is very well behaved, producing similar tonal quality whether you face the loudspeaker directly, or listen to its side reflections coming at you at different angles. This loudspeaker, as noted by Dr. Toole, has performed superbly in controlled double-blind listening tests.

Now, let’s take the same loudspeaker and place it in a SMPTE classified small theatre of 30 seats and measure its response as shown in the bottom graph in black. Predictably per Schroeder, we see the highly modal and hence uneven low frequency response. But by the time we get to the knee of the X-curve, overlaid in red for different sized theatres, all of those variations are long gone. What then determines the fidelity is the Loudspeaker and there, we have a speaker that has won subjective tests yet the X-curve tells us it has the wrong response!

In other words, correlation between X-curve response and the subjective preference of human listeners is poor. Since the standard stipulates that we get that X-curve, compliance with it would mean heavy-handed equalization of this excellent speaker, which would damage its performance.

Later in his presentation, Dr. Toole proposes that we aim to improve cinema sound the same way as we have done for home listening spaces –– attempt to correlate anechoic measurements to controlled listening tests. Once there, we know how to design our loudspeakers and equalization to get great sound. Nothing like an ad-hoc X-curve may apply I am afraid.

Effect Of Reverberation On Room Measurements

Let’s put aside the theory and just measure some rooms and see if the core concept, i.e. measurement error, is at play. We have such data in the form of the paper, “Is The X Curve Damaging Our Enjoyment Of Cinema?” [18] I will give you the punch line first: they say yes! The X-curve is damaging our enjoyment of cinema. This is how the authors set the stage:

“Complaints about poor speech intelligibility and music fidelity in cinema soundtracks persist despite advancements in sound reproduction technology and accreditation of cinema sound systems. The X-curve for the equalization of cinema sound systems has been used for many years and is a foundational element for setting up the tonal balance and achieving accreditation of cinemas.

This paper challenges the acoustic theory behind the X-curve, using analysis of acoustic and electro-acoustic measurements made in cinemas and in other spaces where high fidelity and high intelligibility is required. Both the temporal and tonal developments of the sound field in a number of rooms are examined in detail and related to temporal properties of speech and music and humans’ perception of tonality.

This work follows a series of conference papers that a number of the authors have presented about the sound quality in cinemas.”

The authors highlight, and agree with Allen’s point that the perceived sound in the room must be flat, not sloped down:

“So, according to Allen, if the requirement is to have the short-duration sounds reproduced with a flat frequency response, then the steady-state condition [real-time measurements] will show an apparent high-frequency droop. To achieve the X-curve characteristic, the steady-state frequency response as measured using a spectrum analyzer with pink noise as a test signal, should be equalized to show a -3 dB per octave slope above 2 kHz.”

And later (emphasis mine):

“At present, dubbing theatres and commercial cinemas seeking Dolby certification are equalized as closely as possible to the X-curve at a point roughly two-thirds of the distance from the screen to the rear wall of the rooms. This region of the room has traditionally been considered to be the most representative average of the whole room and is generally where the mixing desk is located in a commercial dubbing facility.”

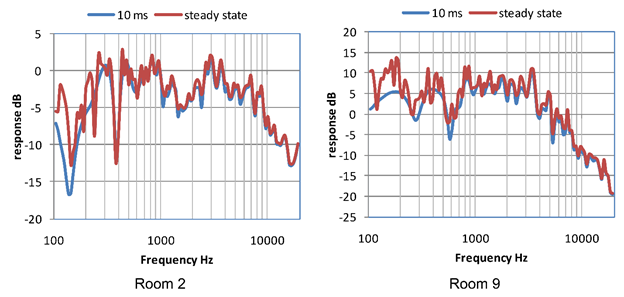

Now on to the data, which is based on measurements of 18 private and commercial Dolby certified cinemas. If Allen’s theory of the X-curve is right, we should see a predictable difference in the response of the rooms when measured using short and long time intervals starting around 2 kHz. Figure 7 shows exactly that comparison: one measurement using a short 10 millisecond window, hence eliminating much of the reverberation in the room, and the “steady-state” measurement, with its longer time window that allows the reverberations to be incorporated.
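For a feel of what a short window keeps and what it throws away, here is a back-of-the-envelope Python sketch. It assumes the window opens when the direct sound arrives and that sound travels at 343 m/s (my assumption for room-temperature air). It also uses the common rule of thumb that a window of length T resolves frequency to about 1/T.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C

def window_summary(window_ms):
    """Return (extra path in metres, frequency resolution in Hz) for a time window."""
    window_s = window_ms / 1000.0
    extra_path_m = SPEED_OF_SOUND_M_S * window_s
    resolution_hz = 1.0 / window_s
    return extra_path_m, resolution_hz

path_m, resolution_hz = window_summary(10.0)
print(f"10 ms window: reflections up to {path_m:.2f} m longer than the direct path, "
      f"about {resolution_hz:.0f} Hz resolution")
```

Under these assumptions a 10 millisecond window only admits reflections whose path is up to about 3.4 m longer than the direct path, which in a cinema excludes most of the reverberant field, while still resolving detail fine enough to judge what happens around 2 kHz and above.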

Figure 7: Comparison of 10 millisecond window frequency response measurement and steady state.

Houston, we have a problem. Or I should say, the theory of reverberations causing a predictable droop in high frequencies starting around 2 kHz has a problem. What we see above 2 kHz is that the two graphs land almost completely on top of each other, indicating little to no differential.

The ramifications are quite sad here. It means that to get their Dolby certification the technicians had to create that sloping effect on purpose, which could only happen by using an equalizer and pulling down the high frequencies starting at 2 kHz. And with it, they created a muffled high-frequency response.
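To see how much treble such an equalization removes, this Python sketch evaluates the simple slope described in this article: flat up to 2 kHz, then 3 dB per octave down. It deliberately ignores the low-frequency portion of the curve and any further detail in the published standard, so treat it as an approximation of the shape rather than the standard itself.

```python
import math

KNEE_HZ = 2000.0           # knee frequency quoted in the text
SLOPE_DB_PER_OCTAVE = 3.0  # roll-off rate quoted in the text

def x_curve_cut_db(freq_hz):
    """Attenuation in dB implied by a 3 dB/octave droop above 2 kHz."""
    if freq_hz <= KNEE_HZ:
        return 0.0
    return -SLOPE_DB_PER_OCTAVE * math.log2(freq_hz / KNEE_HZ)

for freq in (1000.0, 2000.0, 4000.0, 8000.0, 16000.0):
    print(f"{freq:7.0f} Hz: {x_curve_cut_db(freq):+.1f} dB")
```

With this simplified slope the cut reaches 3 dB at 4 kHz, 6 dB at 8 kHz and 9 dB at 16 kHz. If the loudspeaker was flat to begin with, that is the amount of high-frequency energy the equalizer has to take away.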

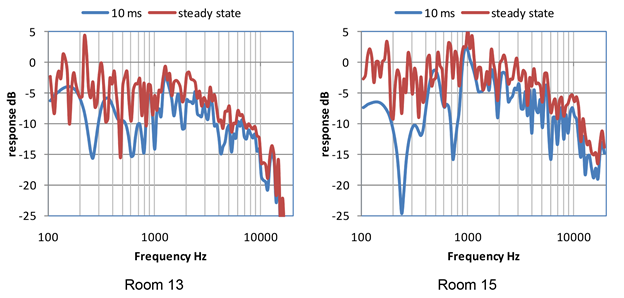

To be fair, there were other rooms where the short and long term measurements did differ in high frequencies as shown in Figure 8.

Figure 8: Comparison of 10 millisecond measurement window and steady state.

Interpreting these visually is harder than the last two sets but suffice it to say, they do not follow the X-curve either. Because if they did, they would have an orderly departure starting at 2 kHz that would keep getting wider and wider.

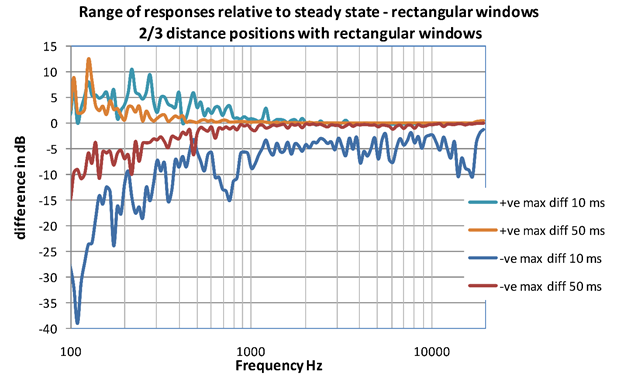

Figure 9 shows the differential data in a simpler-to-digest form. Its Y axis shows how different the response is between the short-term measurements of 10, 50, 80 and 300 milliseconds versus the steady-state. Let’s take the 10-millisecond line at the bottom. At 2 kHz it indeed shows a differential relative to the zero line. But that difference more or less stays constant as we climb up the frequencies. The X-curve says the difference should be almost zero at 2 kHz and then increasing. We don’t see that here.

What we do see is that if we increase our short-term measurement window a bit, the difference quickly vanishes even though we are way short of the time window of continuous measurement. Clearly, later reverberations are not contributing to frequency response variations.
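The distinction between a constant gap and a growing gap can be made explicit with a least-squares slope. The Python sketch below is only an illustration of that check: the two input series are idealized cases generated from formulas, not data from the paper, and the 4 dB offset is an arbitrary number I chose.

```python
import math

def slope_db_per_octave(freqs_hz, diff_db, knee_hz=2000.0):
    """Least-squares slope of a level difference versus octaves above the knee."""
    octaves = [math.log2(f / knee_hz) for f in freqs_hz]
    n = len(octaves)
    mean_x = sum(octaves) / n
    mean_y = sum(diff_db) / n
    sxx = sum((x - mean_x) ** 2 for x in octaves)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(octaves, diff_db))
    return sxy / sxx

freqs = [2000.0, 4000.0, 8000.0, 16000.0]
# Two idealized cases generated from formulas, not measured data:
growing_gap = [3.0 * math.log2(f / 2000.0) for f in freqs]  # what the X-curve theory predicts
constant_gap = [4.0 for _ in freqs]                          # a fixed offset at every frequency

print(f"growing gap:  {slope_db_per_octave(freqs, growing_gap):.1f} dB per octave")
print(f"constant gap: {slope_db_per_octave(freqs, constant_gap):.1f} dB per octave")
```

The first case returns 3 dB per octave and the second returns zero. A short-window versus steady-state difference that behaves like the second case, however large the offset, is not the signature the X-curve theory calls for.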

Figure 9: Relative comparison showing the worst case difference between steady-state and short-term measurement.

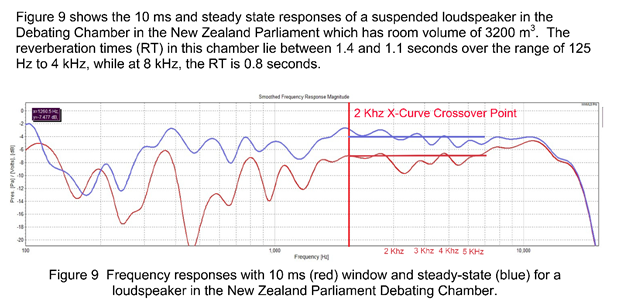

The authors set out to test the theory at the limit: measuring a massive room. Surely if reverberations cause the droop at 2 kHz, this should show it. Figure 10 (authors’ Figure 8) shows the measurements of the New Zealand Parliament, again using short-term 10-millisecond and steady-state measurements.

Figure 10: Comparison of response in a super-sized room of short-term measurement versus steady state. As seen from my mark up, the average level difference between the two responses is more or less flat up to about 4 kHz, after which it actually shrinks. This is nothing like what the X-curve is supposed to predict.

As I have highlighted on their graph, from 2 kHz on, we yet again have a constant differential between the two types of measurements. No 2 kHz knee as in the X-curve. This is not looking good for the theory of reverberations causing a predictable measurement error embodied in the X-curve.

The authors don’t mince words given this strong evidence:

“The reverberation times of a number of the Dolby-accredited rooms above 1 kHz are commensurate with those recommended in the 1994 Dolby Standard and yet these rooms do not show the assumed frequency-response characteristic during reverberant build up. We therefore conclude that it is likely that the X-curve has not been valid at least since 1994, and quite possibly earlier.”

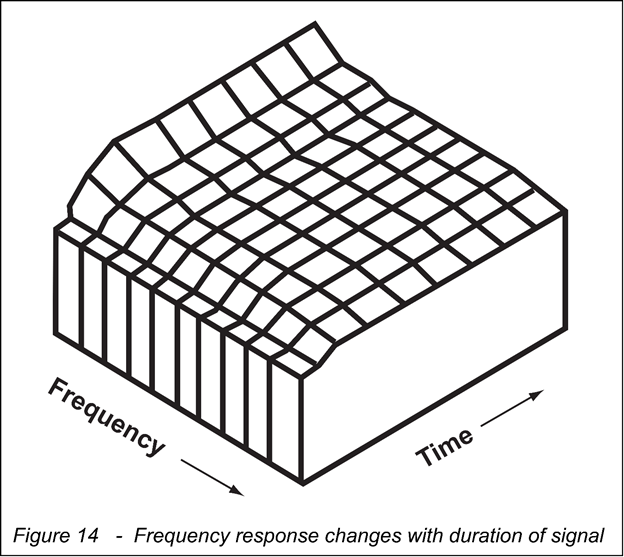

These are strong words to be sure, but the data is compelling and professionally gathered and presented. Contrast it with the graph in Figure 11 from Allen’s paper (his Figure 14). It is a generic graph with no scale, no source, and no backup to prove its correctness. I hate to be so harsh, but the only time I have seen graphs like this is in marketing brochures. No scientific paper would ever present such a thing.

Figure 11: Allen's attempt at showing the relationship between measurement time and change in frequency response.

Back to the research paper: the authors provide these subjective observations related to the use of the X-curve:

“Our listening work for these situations has universally supported the notion that a relatively flat high-frequency response is critical for clarity, comfort and enjoyment of the sound. Our speech sound systems invariably are equalized to be ostensibly flat up to 12 kHz approximately, when measured with any time window.

“In contrast to the type of sound we deliver to our clients, is our perception of cinema sound, which is not hi-fi-like and sometimes causes difficulties for us in understanding speech when there are accent differences and/or Foley effects.”

They end the paper with this strong statement:

“The authors conclude that the use of the X-curve is detrimental to the enjoyment of cinema.”

I think everyone would agree that the data is the data, and it shows that the theory behind the X-curve does not hold up when tested against a decent sample of rooms.

Frequency Response and Reverberations

Earlier I discussed the simple fact of the direct sound of the speaker hitting the room surfaces and reflecting back. In an ideal situation, this is a linear system, meaning no new frequencies are generated. The reflected waves will be identical to the ones that arrive at the surface, and what comes back will have the same spectrum. In this regard, whether or not you add the reflections to the direct sound, the spectrum will be the same and hence there is no change in response.

In a real-life situation, the sound emanating from the speaker will have a different response depending on the angle and the frequency being played. As mentioned, research shows that good speakers have a spectrum that is similar in both situations, but we are not assured of perfect off-axis response. Referring to Figure 6, the spectrum measurements of a real speaker, we see that the on-axis response is nearly a flat line around 90 dB SPL, whereas the sum total of direct and indirect sound, called Sound Power, drops to less than 80 dB SPL at the highest measured frequency.

What the above says is that if you combine the on-axis sound of the loudspeaker with its off-axis radiation, you are bound to get a drop in high frequencies, but the size of that drop will be speaker dependent.

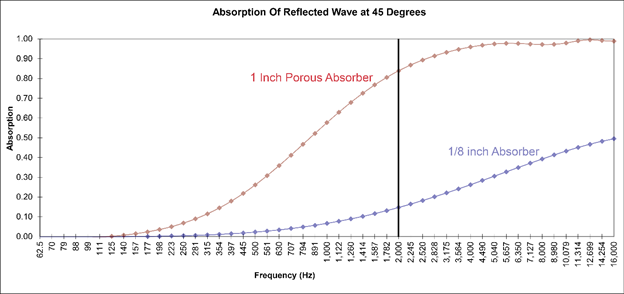

Real listening rooms have another property: the surfaces absorb different frequencies by different amounts. In Figure 12, I have simulated what happens when sound waves hit, at a 45-degree angle, either a 1/8-inch porous absorber such as fabric on the wall or a 1-inch one such as an acoustic panel. We see a wide difference between the two. Change the thickness, the material’s flow resistance (to sound), or the air gap behind the absorber, and the resulting response changes drastically. Yes, your wall surfaces act like equalizers! It is for this reason that if you are going to put any acoustic products on your walls, they had better have an even response starting from the transition frequencies. For a porous absorber such as the common fiberglass acoustic panel, this calls for a minimum thickness of four inches. If you have put fabric panels on all of your walls, you have killed the off-axis high frequencies of a good speaker, reducing its performance! See the blue line in Figure 12.

So now we have a second factor that modifies the frequency response of the reflected waves, one based on the type of surface material, and it is not the mere inclusion of reflected waves that the X-curve postulates.

Figure 12: Simulated absorption coefficient of a thin 1/8-inch absorber such as a thin carpet and 1 inch purpose built acoustic panel on the wall. A 1.0 value means 100% absorption at that frequency.
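To give a feel for how strongly thickness changes absorption, here is a rough sketch. It is not the simulation behind Figure 12. It swaps in the widely used Delany-Bazley empirical model for a porous layer mounted directly on a rigid wall, at normal incidence rather than 45 degrees and with no air gap. The flow resistivity value is an assumed, typical-looking number rather than a measured one, and the function name is my own.

```python
import cmath
import math

RHO0 = 1.21   # density of air, kg/m^3
C0 = 343.0    # speed of sound in air, m/s

def absorption_coefficient(freq_hz, thickness_m, flow_resistivity):
    """Normal-incidence absorption coefficient of a porous layer on a rigid
    wall, using the Delany-Bazley empirical model (no air gap)."""
    x = RHO0 * freq_hz / flow_resistivity  # dimensionless frequency parameter
    # Characteristic impedance and complex wavenumber inside the material.
    zc = RHO0 * C0 * complex(1 + 0.0571 * x ** -0.754, -0.087 * x ** -0.732)
    k = (2 * math.pi * freq_hz / C0) * complex(
        1 + 0.0978 * x ** -0.700, -0.189 * x ** -0.595
    )
    # Surface impedance of the layer backed by a rigid wall: -j * Zc * cot(k d).
    kd = k * thickness_m
    zs = -1j * zc * cmath.cos(kd) / cmath.sin(kd)
    reflection = (zs - RHO0 * C0) / (zs + RHO0 * C0)
    return 1.0 - abs(reflection) ** 2

INCH = 0.0254     # metres per inch
SIGMA = 10000.0   # assumed flow resistivity in Pa*s/m^2 (placeholder value)

for f in (250, 500, 1000, 2000, 4000, 8000):
    thin = absorption_coefficient(f, INCH / 8, SIGMA)
    thick = absorption_coefficient(f, INCH, SIGMA)
    print(f"{f:5d} Hz   1/8 inch: {thin:.2f}   1 inch: {thick:.2f}")
```

Under these assumptions the thin layer absorbs very little until the highest frequencies, while the 1-inch layer absorbs a substantial fraction of the sound from the midrange up. Exact numbers will differ with angle of incidence, material, and mounting.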

Other factors such as listening distance also change the high-frequency response. So does the use of the common perforated screen. Likely these were the factors being seen by Allen and crew in their experiment.

All in all, the measured response of a loudspeaker in a room, when reverberations are included, is subject to many, many factors that don’t lend themselves to a single simplified curve like the X-curve.

Summary

So here we are. No matter which way we look at it, the X-curve does not pass the test of scrutiny. It would be wonderful if sound reproduced in a room followed such a simplified behavior. But it just doesn’t. There is much work under way to tweak the measured time interval and such, hoping to get that to correlate with real situations. But all of this, I am afraid, is misguided. The right knowledge of psychoacoustics and sound reproduction in the room needs to be used to determine the guidelines, not something we cook up on the back of an envelope.

Let’s hope that the standardization effort leads us to a proper scientific solution. Until then, it is my sincere hope that people calibrating sound in theaters do so using their ears and not compliance with the X-curve.

References

[1] X-Curve Is Not An EQ Curve, Michael Karagosian, SMPTE 2013

[2] Ioan Allen describes the history of Dolby Sound at the University of San Francisco,

[3] Presentation on Cinema Audio, PowerPoint presentation prepared by Dr. Floyd Toole concerning issues of audio reproduction and the current standards, Audio Engineering Society, 2012

[4] The X-curve: Its Origins and History, Allen, SMPTE Journal, Vol.115, Nos. 7 & 8, pp. 264-275 (2006)

[5] Perceptual Effects of Room Reflections, Majidimehr, Widescreen Review Magazine, http://www.madronadigital.com/Library/RoomReflections.html

[6] Frequency-Correlation Functions of Frequency Responses in Rooms, Schroeder, Journal of the Acoustical Society of America, Vol. 34, No. 12. (1962), pp. 1819-1823.

[7] On Frequency Response Curves in Rooms. Comparison of Experimental, Theoretical, and Monte Carlo Results for the Average Frequency Spacing Between Maxima, Schroeder and Kuttruff, Journal of the Acoustical Society of America 34, 76 (1962)

[8] Room acoustic transition time based on reflection overlap, Jeong, Brunskog, and Jacobsen, Journal of the Acoustical Society of America 127, 2733 (2010)

[9] Uncertainties of Measurements in Room Acoustics, Lundeby, Vigran and Vorländer, Acta Acustica Journal, 1995

[10] Estimation of Modal Decay Parameters from Noisy Response Measurements, Karjalainen, Antsalo, Mäkivirta, Peltonen, and Välimäki, Helsinki University of Technology, Laboratory of Acoustics and Audio Signal Processing

[11] About this Reverberation Business, Moorer, Computer Music Journal, Vol. 3, No. 2 (Jun., 1979), pp. 13-28

[12] New Method of Measuring Reverberation Time, Schroeder, Journal of the Acoustical Society of America, 1965

[13] Sound power radiated by sources in diffused field, Baskind and Polack, AES Convention 108 (February 2000)

[14] Master Handbook of Acoustics, Everest, 2009 [book]

[15] La transmission de l'énergie sonore dans les salles, Polack, 1988 [book]

[16] Analysis and synthesis of room reverberation based on a statistical time-frequency model, Jot, Cerveau, Warusfel, AES Convention:103 (September 1997)

[17] Subwoofer / Low Frequency Optimization, Majidimehr, Widescreen Review Magazine, May/June 2012, http://www.madronadigital.com/Library/BassOptimization.html

[18] Is the X Curve Damaging Our Enjoyment of Cinema? SMPTE 2011 Australia, Leembruggen, Philip Newell, Joules Newell, Gilfillan, Holland, and McCarty

[19] A New Draught[draft] Proposal for the Calibration of Sound in Cinema Rooms, Philip Newell, AES Technical Committee paper, January 2012

[20] Sound Reproduction: The Acoustics and Psychoacoustics of Loudspeakers and Rooms, Dr. Floyd Toole, 2008 [book]

Amir Majidimehr is the founder of Madrona Digital (www.madronadigital.com) which specializes in custom home electronics. He started Madrona after he left Microsoft where he was the Vice President in charge of the division developing audio/video technologies. With more than 35 years in the technology industry, he brings a fresh perspective to the world of home electronics.

In my article in Widescreen Review Magazine published recently titled “There Is No “There” in Audio,” I made a brief reference to X-curve used for calibration of Cinema Sound and mentioned that there is no good reason to follow it for home theater applications. In this article I will provide a deeper dive into the X-curve, not as much for its direct applicability to home theater, but in the interest of covering some core concepts of good sound reproduction, be it in small or large spaces.

Background

There is a lot of controversy regarding the X-curve in the industry as I write this article. While I think everyone understands that there are issues with this very aging standard, there are two schools of thought: one that seeks to defend what is there and only wants incremental changes, and the other that says let’s abandon the whole thing and start fresh. So it is clear, I am in the latter camp.

Those in favor of keeping the system more or less the same, make the following arguments:

1. The X-curve is not an equalization/target curve.[1] Rather, it is a response that shows up if you measure a loudspeaker with flat response, in a large room with a real-time analyzer and pink noise as specified in the standard. And that such a measurement will tend to show a decline in frequency response starting around 2 kHz at a rate of 3 dB per octave.

2. Since the X-curve is a measurement error, it is not a “target curve” in the sense of trying to modify the sound. That is, the goal is to get at flat response and compensate for the measurement error.

3. The reason behind the measurement error is the inclusion of the reverberations in the room. That is, when the direct (“on-axis”) sound of a flat response speaker is combined with reflections/reverberations in the room, the high frequencies when measured continuously using Real-Time Analyzer (RTA) and pink noise. There is also an effect stated to be there in low frequencies which I am not addressing in this article.

If these arguments are right, then sure, we should stay the course and make fine corrections. Let’s examine if that is the case.

Background and History Of The X-Curve For Cinema Sound

One of my favorite TV cooking shows is the Japanese “Iron Chef.” The program starts with a great line from Brillat-Savarin: “tell me what you eat and I'll tell you what you are.” By the same token, to understand X-curve, it is essential that we examine the history and motivation behind it. We need to travel back to circa 1970 when the initiative started, leading to standardization by both SMPTE and international ISO organizations.

The history lesson comes ready for us in the form of Ioan Allen’s paper, “The X-Curve: Its Origins And History.” No, this is not some dusty type-written document from the 1970s but one penned for the SMPTE Motion Imaging Journal in 2006! Yes, 2006. It seems strange to have the documentation for such a standard come so late but here we are. The good news is that we can see if the author and champion of X-curve, which in this case is a film sound luminary, has any second thoughts multiple decades later.

Allen worked for Dolby Laboratories then and continues to do so as of this writing. You no doubt know Dolby as the pioneer of surround sound but the fame and fortune of the company came from another more mundane technology, namely noise reduction for analog sources such as professional multichannel tape recorders and later on, the Cassette tape.[2] That invention was quite simple but very clever. The noise on tape is focused predominantly in the high frequencies (better called a “hiss” as a result). At recording time, the music level is equalized to boost its high frequencies, a process that is called “emphasis.” At playback, the inverse correction, called “de-emphasis,” is performed by lowering the levels of high frequencies by the same amount. This killed two birds with one stone: it restored the level of music high frequencies to what it was prior to emphasis, while also lowering the noise level. The result was a much-improved signal to noise ratio, and made Dolby Noise Reduction a standard in professional tape recorders and consumer cassette tapes. This resulted in great royalty stream for Dolby. Bless their hearts for creating customer value and financial rewards for themselves.

As an interesting aside, the emphasis/de-emphasis technique is also part of the CD specifications! It is there to help reduce high-frequency quantization noise. While I recall some titles using it back when the CD format was introduced, I don’t believe it is anything but distant memory in digital systems.

On a personal note, and I don’t think I was remotely alone in doing it [2], I would record on my cassette tape with Dolby noise reduction which would boost the highs due to pre-emphasis, but then playback without it. Why? Because you got more highs. The cassette decks of the time had limited high frequency response and recording without this pre-emphasis resulted muffled sound to me. My method didn’t extend the frequency response but subjectively resulted in hearing more high frequencies. In other words, having hiss was less of an issue than restoring impression of high frequencies. Our perception is quite complex than one intuits on the surface. A lesson to keep in mind as we think through cinema sound.

Anyway, why am I telling you all of this? Because as they say, necessity is the mother of invention. In this case, having successfully gotten A-type noise reduction into the professional music recording space, Dolby wanted to expand its market into movie sound but ran into a snag which later led to development of the X-curve. Here is Allen telling it in his paper:

First Dolby Excursions into Film Sound

“The year 1969 marked the first use of Dolby® noise reduction in the film industry, with the music recording of ‘Oliver.’ At that time, Dolby A-type noise reduction was widely used by the music recording industry, initially for two-track classical recording and then to counteract the noise build-up inherent with eight, and later sixteen, track recorders. A-type noise reduction was next used in the film industry for music recording and some magnetic generations on’ Ryan’s Daughter’ (1970).

The author was frustrated at the limited benefit heard from this process when the films were released in theatres. In 1970, a test was conducted at Pinewood Studios in England with a remixed reel of ‘Jane Eyre,’ with A-type noise reduction actually applied to the mono-optical release print itself. Again, the results were disappointing in playback, as the reduction in noise did nothing to help the limited bandwidth and audible distortion.”

In principal Dolby Noise Reduction should have worked the same way it did for music and reduce noise of the analog (optical) recordings of movie sound. But it didn’t. The problem they ran into was that the recording/playback chain of movie sound already had its own ad-hoc equalization and piling on additional amount in the form of Dolby NR pre-emphasis, created a mess as Allen explains:

“It seemed obvious that the monitor characteristic for conventional optical sound mixing caused severe quality limitations. An (A+B) response [source + amplification/reproduction frequency responses] well over 20 dB down at 8 kHz led to excessive pre-emphasis. This in turn led to excessive distortion. Limited high-frequency response led to the mixer rolling off low-frequencies, to make the sound “more balanced” (see below). If the monitor response was flattened, less equalization would be required, and the distortion would be lower.”

So the mission turned into improving the end-to-end movie sound reproduction chain. At high level, the goal was simple: have every venue that plays back the soundtrack, sound the same as every place the movie sound is created/previewed. Logical enough. To get there, one would have to first determine what difference existed in the two preview/playback environments.

Comparing the production chain with playback was not easy to do. After all, how would you compare the sound produced in two different locations? Listening to one and then running to the other venue is not a practical thing. There is technology that allows for this by the way in the form binaural recording where we capture with two microphones what a prototype human head would “hear.” The recording can then be used to compare to the same process performed in another venue. The experimental set up is show in Figure 1 (Figure 10 in Allen’s paper) with the mixing/dubbing stage called the “near-field” and the cinema sound, “far field.” With this setup the larger theatre’s sound could be equalized iteratively until it perceptually matched what the near-field system was playing.

What did the differential equalization look like? Here again is Allen [emphasis mine]:

“A surprising development was the discovery that the best subjective match still showed an apparent slight HF droop. A slope of around 3 dB per octave from about 2 kHz seemed to give the best results, along with a slight limitation to low-frequency bandwidth, as seen in Fig. 11. […] The reason for the apparently desirable HF droop is not very easy to explain. “

And with it, the X-curve was born as Allen shows in Figure 2 (his Figure 11).

The experiment seemed well enough but we have a serious protocol problem. We can’t possibly assess cause and effect with just one listening test. Imagine trying to prove the efficacy of a drug by just testing it on one person. The single experiment would let us create a hypothesis, i.e. the larger the room, the more response variation starting at 2 kHz. It would be the follow up controlled experiments that would validate it. Without that kind of follow up testing, we could very well fall victim of other anomalies that led to these results.

Here is an example of that. In this test, two different brands of loudspeakers were used. The near-field was KEF and the far field, Vitavox. Surely the first order difference that one would hear is the timbre contrast of the two loudspeakers. Before jumping on a much more obscure cause, i.e. reverberations in the room, this factor should have been ruled out with other set of Loudspeakers to see if the X-curve shows up more or less the same, i.e. it is a function of the room and not loudspeaker. Ditto for using different set of listeners who may have more acute timbre discrimination than the one used here.

And oh, you want to match levels, and have such listening tests be blind –– neither of which is stated by Allen to have been the case. Yes, these measures are necessary. Sighted evaluation even when differences are big is subject to placebo and bias. Here is a personal example. Last year we previewed at Madrona Digital the incredible new JBL M2 loudspeaker in our reference theatre in front of a group of audiophiles. These loudspeakers have superlatively smooth on- and off-axis response whose efficacy has been verified in double blind controlled listening tests. Yet when you look at the M2s, they look like the typical “pro loudspeaker” with big horns and such, resulting in the immediate expression of “these can’t sound good.” While a number of listeners in our demonstrations were quite impressed with the sound of the M2s, some others insisted that the bass was wrong. The M2s have a sophisticated parametric EQ that allows their response to be shaped however you want. But knowing that the sound was already correct and it was possibly the biased perception that was the issue, we made a tiny, inaudible change (a fraction of a dB) and then asked if that fixed the problem. The answer? It did! What we changed there was perception, not reality. Without controlled, level matched, blind testing we simply can’t fully really trust the results of such tests.

Allen hypothesized on the causes he thought were in play to generate the unusual shape of the X-curve:

“There are three possibilities, singly or in combination:

(1) Some psychoacoustic phenomena involving faraway sound and picture.

(2) Some distortion components in the loudspeaker, making more HF objectionable.

(3) The result of reverberation buildup, as described below.”

Sadly, only #3 is discussed in the paper. The others just get a passing remark. The focus and justification of the X-curve in the minds of Allen (and as a group, people defending it today) then rests almost entirely on the shoulders of effect of reverberations on measurements. Unfortunately, even this hypothesis gets passing, light treatment. Now we could forgive some of the crudeness of the research because of timing, this being back in early 1970s. But in short order, i.e. early 1980s, acoustic research had advanced significantly as evidenced by the extensive research by likes of Dr. Floyd Toole and publishing of the same in Audio Engineering Society which involved truly understanding sound reproduction and importance of controlled testing to determine listener preference. Yet the movie sound industry kept marching on, as if we continued to live in early 1970s.

Good news is that the industry is now aware of issues around X-curve and for the last few years there has been considerable work in both AES and SMPTE to revise cinema sound. What the final outcome will be, I can’t say. What I can explain is the justification for setting aside the X-curve and starting fresh.

Loudspeaker In A Room

At the risk of getting pedantic, what we hear in a room is a combination of what the loudspeaker produces and all the sound waves reflecting and intermixing with the direct sound and each other over time as shown in Dr. Toole’s presentation on the state of Cinema sound in Figure 3. [3] Fancy term for this is “superposition: where the amplitude and phase of each waveform determines what the combination looks like and potentially sound like.

As shown in Figure 3, the direct sound from the loudspeaker arrives at our ear first but shortly thereafter, the reflections also get there. The total sonic experience then is a combination of direct, on-axis sound and indirect off-axis radiations combining with it.

So what does this do to the sound we hear? At the extreme, the answer is way too complicated to provide. Every room is different. Every loud speaker is different. Every listening position is a different. The permutations are immense. We can use a computer model to analyze it all but ideally, we would have rules of thumb that would let us make generalized observations without it.

This is the crux of acoustic research that has been going on for more than a century –– reducing the complexity yet maintaining sufficient accuracy to make correct judgments. There is no better version of this than the insight provided in 1962 by the German physicist Manfred Schroder, a Bell Labs researcher, and all around “father of modern acoustics.”

Before I get into what Schroder taught us, let’s imagine the simple example of throwing a stone in a pond. Assuming an ideal case, we will see pretty concentric circles emanating from the stone. At some point one of these circles hits the side of the pond, reverses direction and proceeds to combine with the waves that are still traveling from the source. The result is an interference pattern. Sometimes the waves cancel out. Sometimes they combine. These are the distinct patterns we see. Other times we get a combination of the two extreme. This is the superposition concept in play.

Now imagine the much more complex scenario of the waves hitting a number of reflecting surfaces and allowing for those reflections in turn to hit other shores and creating secondary reflections –– or tertiary. Now the outcome is not a predictable, geometric pattern. We get what boaters would call a “confused sea.” The surface of the water will appear chaotic and random.

Mathematically, a principal called the Central Limit Theorem says that if we have large enough number of independent events, the distribution of events starts to approach a “normal”/bell curve (properly called a Poisson distribution). While the combination of reflected waves is not random per se, it can almost be treated that way as the number of waves combining with each other becomes large.

What this means that we can start with an orderly event, in this case a pebble falling in a pond, but what we end up at the end, is a random event with predictable distribution. Same is true of sound in a room. [5][7][8][9][10][11][12][13][14][15][16] The pebble is replaced by our loudspeaker generating sound energy. Air is the water in the pond. And the walls, ceiling and floor in our listening space play the role of the shores of the pond, reflecting sound waves back. When we hit the point where the room behavior becomes random, we call this behavior “statistical.”

In contrast to statistical mode, when just a few waves combining, we call that “modal behavior.”

From the point of view of proper sound reproduction in a room, statistical behavior is much preferred. In modal behavior, which as you will see occurs in low frequencies, the frequency response of the loudspeaker is heavily modified in distinct patterns much like the initial waves of the stone hitting the shores and coming back. Such pronounced and predictable patterns cause colorations that are readily audible. “Boomy bass” is the phrase here.

Not so with statistical behavior. Here, we have so many waves combining that the outcome while “rough,” is not deterministic. And that is a “good” thing when it comes to sound reproduction.

Think of whether you prefer to drive your car at speed on a smooth dirt road, i.e. lots of small variations, versus a paved road with huge potholes. The former will provide a smoother ride even though it has considerably more variations per feet travelled. The suspension in your car will filter out the small variations but pass through the large ones. Same is true of sound reproduction in a room. The filter is the auditory response of our hearing system, which lacks the resolution to detect compacted ups and downs in frequency response as represented by statistical room mode. [5] Yet it passes through the large undulations of the model mode.

Every room has these modes and a region in between called the transition. And the person who tells us how to determine these regions was Schroder. His mathematical analysis led to a very simple formula that gives us the frequency at which the room behavior changes from modal to statistical. [8]

The formula is, Fc = 2000·(T60/V)^0.5 where Fc is the frequency of transition from modal to statistical (not really, see the next paragraph). T60 is RT60 or the amount of time it takes for room reverberations to die down by 60 dB (one million times lower), and V is the volume of the room. RT60 is a measured value, which is easily done today with a microphone and a computer program.

Even though Schroeder’s formula produces a single transition frequency, the reality is that there is no one magical point where nature abruptly acts differently. If you read Schroeder’s research and the mountain of other references I have provided you will see that the formula is an approximation based on the “modal density” which indicates how many waves are combining with each other in each unit of frequency (Hertz). It is the point of chaos if you will, i.e. how many waves have to pile up on top of each other before order can no longer be recognized. That point to some extent then is subjective and at any rate, not a single disconnected event.

Recall that the X-curve says that there is a transformation in room sound around 2 KHz. As you can see from Dr. Toole’s presentation slide in Figure 4, for any room of interest, 2 KHz lands well above transition frequency, whether we are talking a home theater or massive 800-seat theater. [1]

Due to dependency of Fc on volume, the transition frequency inversely scales with the size of the venue. For the smallest room representing what we may have in our home, the transition frequency is in the range of 125 Hz. This means a good bit of the spectrum of what we play, movies or music, will land at or below this and hence will have the “bad” modal behavior. Whereas for the 800-seat cinema, that problem is all but gone with a transition of just 24 Hz; only the LFE channel is impacted. The large room then is very much advantaged and will have exceptionally smooth bass response compared to our small home listening spaces.

Yes, there is a point to this discussion relative to the X-curve, namely the fact that as we go above the transition frequencies, the room imprint on the sound of the loudspeaker becomes smaller and smaller. What dominates the timbre and tonal quality then is mostly determined by the loudspeaker, not the room. This is shown nicely with actual measurements in another one of Dr. Toole’s slides in Figure 5, and in the detailed articles I have written on optimizing bass response in home theaters for Widescreen Review. [17]

The measurements in different colors show what happens if we move the microphone around the room. At frequencies below transition, the variations are quite large because the room response is modal. How a handful of waves combine heavily depends on where in the room we measure them. Go well above the transition frequency though and the room variations vanish. Mind you, they are still there but by matching our measurement resolution to that of the auditory filter/discrimination, we see that what is perceived is now location independent (tonally speaking). And if that is the case then the room is not imprinting its signature in those frequencies. Hence the categorization by Dr. Toole of that region being dominated by the “Loudspeaker.”

What this says is that if you don’t like what you are hearing above the transition frequencies, the problem is much more likely to be a loudspeaker than the room. A lousy loudspeaker will sound bad in many rooms. A good loudspeaker will sound good in many rooms.

It also says that if we care about the fidelity of the sound above transition frequencies, the place to look first and foremost is the loudspeaker. Not equalization of whatever is there. And certainly not with a one-third-octave equalizer stipulated in the X-curve standard (its resolution is too poor in the lower frequencies).

Authoritative research shows that a good loudspeaker produces on-axis sound (the direct sound coming at you) and off-axis sound (the sound going in other directions and hitting room surfaces) that are similar.[20] This is a remarkable observation, as it means we can design a loudspeaker, measure it in an anechoic chamber and have that be enough to tell us how good it sounds in just about any room! Dr. Toole brings this insight together with the concept of X-curve in Figure 6.

There is a lot going on here so let me explain the components. The top graph shows the on-axis frequency response of a loudspeaker (top, nearly flat line) in addition to the average of a set of off-axis responses categorized as early and late reflections. Other than an unwanted dip around 2 kHz in the off-axis response, this loudspeaker is very well behaved, producing similar tonal quality whether you face the loudspeaker directly, or listen to its side reflections coming at you at different angles. This loudspeaker, as noted by Dr. Toole, has performed superbly in controlled double-blind listening tests.

Now, let’s take the same loudspeaker and place it in a SMPTE classified small theatre of 30 seats and measure its response as shown in the bottom graph in black. Predictably per Schroeder, we see the highly modal and hence uneven low frequency response. But by the time we get to the knee of the X-curve, overlaid in red for different sized theatres, all of those variations are long gone. What then determines the fidelity is the Loudspeaker and there, we have a speaker that has won subjective tests yet the X-curve tells us it has the wrong response!

In other words, correlation between X-curve response and the subjective preference of human listeners is poor. Since the standard stipulates that we get that X-curve, compliance with it would mean heavy-handed equalization of this excellent speaker, which would damage its performance.

Later in his presentation, Dr. Toole proposes that we aim to improve cinema sound the same way as we have done for home listening spaces: attempt to correlate anechoic measurements to controlled listening tests. Once there, we know how to design our loudspeakers and equalization to get great sound. Nothing like an ad-hoc X-curve may apply, I am afraid.

Effect Of Reverberation On Room Measurements

Let’s put aside the theory and just measure some rooms and see if the core concept, i.e. measurement error, is at play. We have such data in the form of the paper “Is The X Curve Damaging Our Enjoyment Of Cinema?”[18] I will give you the punch line first: they say yes! The X-curve is damaging our enjoyment of cinema. This is how the authors set the stage:

“Complaints about poor speech intelligibility and music fidelity in cinema soundtracks persist despite advancements in sound reproduction technology and accreditation of cinema sound systems. The X-curve for the equalization of cinema sound systems has been used for many years and is a foundational element for setting up the tonal balance and achieving accreditation of cinemas.

This paper challenges the acoustic theory behind the X-curve, using analysis of acoustic and electro-acoustic measurements made in cinemas and in other spaces where high fidelity and high intelligibility is required. Both the temporal and tonal developments of the sound field in a number of rooms are examined in detail and related to temporal properties of speech and music and humans’ perception of tonality.

This work follows a series of conference papers that a number of the authors have presented about the sound quality in cinemas.”

The authors highlight, and agree with, Allen’s point that the perceived sound in the room must be flat, not sloped down:

“So, according to Allen, if the requirement is to have the short-duration sounds reproduced with a flat frequency response, then the steady-state condition [real-time measurements] will show an apparent high-frequency droop. To achieve the X-curve characteristic, the steady-state frequency response as measured using a spectrum analyzer with pink noise as a test signal, should be equalized to show a -3 dB per octave slope above 2 kHz.”

And later (emphasis mine):

“At present, dubbing theatres and commercial cinemas seeking Dolby certification are equalized as closely as possible to the X-curve at a point roughly two-thirds of the distance from the screen to the rear wall of the rooms. This region of the room has traditionally been considered to be the most representative average of the whole room and is generally where the mixing desk is located in a commercial dubbing facility.”

Now on to the data, which is based on measurements of 18 private and commercial Dolby-certified cinemas. If Allen’s theory of X-curve is right, we should see a predictable difference in the response of the rooms when measured using short and long time intervals, starting around 2 kHz. Figure 7 shows exactly that comparison: one measurement using a short 10-millisecond window, which eliminates much of the reverberation in the room, and the “steady-state” measurement, whose longer time window allows the reverberations to be incorporated.

Houston, we have a problem. Or I should say, the theory of reverberations causing a predictable droop in high frequencies starting around 2 kHz has a problem. What we see above 2 kHz is that the two graphs land almost completely on top of each other, indicating little to no differential.

The ramifications are quite sad here. It means that to get their Dolby certification the technicians had to create that sloping effect on purpose, which could only happen by using an equalizer and pulling down the high frequencies starting at 2 kHz. And with it, they created a muffled high-frequency response.

To be fair, there were other rooms where the short- and long-term measurements did differ in high frequencies, as shown in Figure 8.

Interpreting these visually is harder than the last two sets but suffice it to say, they do not follow the X-curve either. Because if they did, they would have an orderly departure starting at 2 kHz that would keep getting wider and wider.

Figure 9 shows the differential data in a simpler-to-digest form. Its Y axis shows how different the response is between the short-term measurements of 10, 50, 80 and 300 milliseconds and the steady-state. Let’s take the 10-millisecond line at the bottom. At 2 kHz it indeed shows a differential relative to the zero line. But that difference more or less stays constant as we climb up the frequencies. The X-curve says the difference should be almost zero at 2 kHz and then increasing. We don’t see that here.
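As a rough sketch of what the theory predicts, the simplified description used in this article (flat up to 2 kHz, then falling 3 dB per octave) can be written as a function of frequency. The knee and slope come from that description; the function name and the test frequencies are illustrative, and the full standard has details that are not modeled here.

```python
import math

KNEE_HZ = 2000.0            # knee frequency as described in the text
SLOPE_DB_PER_OCTAVE = 3.0   # roll-off rate as described in the text

def predicted_droop_db(freq_hz: float) -> float:
    """Steady-state level relative to the direct sound under the simplified X-curve."""
    if freq_hz <= KNEE_HZ:
        return 0.0
    octaves_above_knee = math.log2(freq_hz / KNEE_HZ)
    return -SLOPE_DB_PER_OCTAVE * octaves_above_knee

for freq in (1000, 2000, 4000, 8000, 16000):
    print(f"{freq:>6} Hz: {predicted_droop_db(freq):+.1f} dB")
```

A measured differential that followed the theory would track these values, growing by 3 dB with every octave above the knee. A differential that stays roughly constant above 2 kHz does not fit that pattern.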

What we do see is that if we increase our short-term measurement window a bit, the difference quickly vanishes even though we are way short of the time window of continuous measurement. Clearly, later reverberations are not contributing to frequency response variations.

The authors set out to test the theory at the limit: measuring a massive room. Surely if reverberations cause the droop at 2 kHz, this should show it. Figure 10 (authors’ Figure 8) shows the measurements of the New Zealand Parliament, again using short-term 10-millisecond and steady-state measurements.

As I have highlighted on their graph, from 2 kHz on, we yet again have a constant differential between the two types of measurements. No 2 kHz knee as in the X-curve. This is not looking good for the theory of reverberations causing a predictable measurement error embodied in the X-curve.

The authors don’t mince words given this strong evidence:

“The reverberation times of a number of the Dolby-accredited rooms above 1 kHz are commensurate with those recommended in the 1994 Dolby Standard and yet these rooms do not show the assumed frequency-response characteristic during reverberant build up. We therefore conclude that it is likely that the X-curve has not been valid at least since 1994, and quite possibly earlier.”

These are strong words to be sure, but the data is compelling and professionally gathered and presented. Contrast it with the graph in Figure 11 from Allen’s paper (his Figure 14). This is a generic graph with no scale, and no source or backup to prove its correctness. I hate to be so harsh but the only time I have seen graphs like this is in marketing brochures. No scientific paper would ever present such a thing.

Anyway, back to our research paper: the authors provide these subjective observations related to the use of the X-curve:

“Our listening work for these situations has universally supported the notion that a relatively flat high-frequency response is critical for clarity, comfort and enjoyment of the sound. Our speech sound systems invariably are equalized to be ostensibly flat up to 12 kHz approximately, when measured with any time window.

“In contrast to the type of sound we deliver to our clients, is our perception of cinema sound, which is not hi-fi-like and sometimes causes difficulties for us in understanding speech when there are accent differences and/or Foley effects.”

They end the paper with this strong statement:

“The authors conclude that the use of the X-curve is detrimental to the enjoyment of cinema.”

I think everyone would agree that the data is the data, and it shows that the theory of the X-curve does not hold true when applied to a decent sample size.

Frequency Response and Reverberations

Earlier I discussed the simple fact of the direct sound of a speaker hitting the room surfaces and reflecting back. In an ideal situation, this is a linear system, meaning no new frequencies are generated. The reflected waves will be identical to the ones that arrive at the surface, and what comes back will have the same spectrum. In this regard, whether you add the reflections to the direct sound or not, the spectrum will be the same and hence there are no response changes.

In a real-life situation, the sound emanating from the speaker will have a different response depending on the angle and frequency being played. As mentioned, research shows that good speakers will have a spectrum that is similar in both situations, but we are not assured of perfect off-axis response. Referring to Figure 6, the spectrum measurements of a real speaker, we see that the on-axis response is nearly a flat line around 90 dB SPL, whereas the sum total of direct and indirect sound, called Sound Power, drops down to less than 80 dB SPL at the highest measured frequency.

What the above says is that if you combine the on-axis sound of the loudspeaker with its off-axis radiation, you are bound to get a drop in high frequencies. But this variation will be speaker dependent.
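The arithmetic behind that statement is an energy (power) average of levels rather than a plain average of decibel values. The sketch below is a simplified, unweighted version with made-up levels for two hypothetical loudspeakers; real sound power calculations weight many measurement angles, which is not modeled here.

```python
import math

def energy_average_db(levels_db: list[float]) -> float:
    """Average sound levels on a power basis and return the result in dB."""
    mean_power = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(mean_power)

# Hypothetical levels at one high frequency, in dB SPL:
# [on-axis, off-axis angle 1, off-axis angle 2]. Not measured data.
wide_dispersion_speaker = [90.0, 88.0, 86.0]
narrow_dispersion_speaker = [90.0, 80.0, 70.0]

for name, levels in (("wide", wide_dispersion_speaker),
                     ("narrow", narrow_dispersion_speaker)):
    drop = energy_average_db(levels) - levels[0]
    print(f"{name} dispersion: combined level is {drop:+.1f} dB relative to on-axis")
```

With identical on-axis levels, the two hypothetical speakers end up with different combined levels, which is the sense in which the high-frequency drop depends on the loudspeaker rather than on a fixed curve.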

Real listening rooms have another property: the surfaces absorb sound frequencies differently. In Figure 12, I have simulated what happens when sound waves hit either a 1/8-inch porous absorber, such as fabric on the wall, or a 1-inch one, e.g. an acoustic panel, at a 45-degree angle. We see a wide difference between the two. Change the thickness, the material’s flow resistance (to sound), or the air gap behind the absorber, and the resulting response changes drastically. Yes, your wall surfaces act like equalizers! It is for this reason that if you are going to put any acoustic products on your walls, they had better have an even response starting from the transition frequencies. For a porous absorber such as the common fiberglass acoustic panel, this calls for a minimum of four inches. If you have put fabric panels on all of your walls, you have killed the off-axis high frequencies of a good speaker, reducing its performance! See the blue line in Figure 12.

So now we have a second factor that modifies the frequency response of the reflected waves: the type of surface material. It is not the mere inclusion of reflected waves, as the X-curve postulates.

Other factors such as listening distance also change the high-frequency response, as does the use of the common perforated screen. Likely these were the factors that were being seen by Allen and crew in their experiment.

All in all, the measured response of a loudspeaker in a room, when including the reverberations, is subject to many, many factors that don’t lend themselves to the simplified shape of the X-curve.

Summary

So here we are. No matter which way we look at it, the X-curve does not pass the test of scrutiny. It would be wonderful if sound reproduced in a room followed such a simplified behavior. But it just doesn’t. There is much work going into tweaking the measured time interval and such, hoping to get that to correlate with real situations. But all of this, I am afraid, is misguided. The right knowledge of psychoacoustics and sound reproduction in the room needs to be used to determine the guidelines. Not something we cook up on the back of an envelope.

Let’s hope that the standardization leads us to a proper scientific solution. Until then, it is my sincere hope that people calibrating sound in theaters do so using their ears and not compliance with the X-curve.

References

[1] X-Curve Is Not An EQ Curve, Michael Karagosian, SMPTE 2013

[2] Ioan Allen describes the history of Dolby Sound at the University of San Francisco,

[3] Presentation on Cinema Audio, PowerPoint presentation prepared by Dr. Floyd Toole concerning issues of audio reproduction and the current standards, Audio Engineering Society, 2012

[4] The X-curve: Its Origins and History, Allen, SMPTE Journal, Vol.115, Nos. 7 & 8, pp. 264-275 (2006)

[5] Perceptual Effects of Room Reflections, Majidimehr, Widescreen Review Magazine, http://www.madronadigital.com/Library/RoomReflections.html

[6] Frequency-Correlation Functions of Frequency Responses in Rooms, Schroeder, Journal of the Acoustical Society of America, Vol. 34, No. 12. (1962), pp. 1819-1823.

[7] On Frequency Response Curves in Rooms. Comparison of Experimental, Theoretical, and Monte Carlo Results for the Average Frequency Spacing Between Maxima, Schroeder and Kuttruff, Journal of the Acoustical Society of America 34, 76 (1962)

[8] Room acoustic transition time based on reflection overlap, Jeong, Brunskog, and Jacobsen, Journal of the Acoustical Society of America 127, 2733 (2010)

[9] Uncertainties of Measurements in Room Acoustics, Lundeby, Vigran and Vorländer, Acta Acustica Journal, 1995

[10] Estimation of Modal Decay Parameters from Noisy Response Measurements, Karjalainen, Antsalo, Mäkivirta, Peltonen, and Välimäki, Helsinki University of Technology, Laboratory of Acoustics and Audio Signal Processing

[11] About this Reverberation Business, Moorer, Computer Music Journal, Vol. 3, No. 2 (Jun., 1979), pp. 13-28

[12] New Method of Measuring Reverberation Time, Schroeder, Journal of the Acoustical Society of America, 1965

[13] Sound power radiated by sources in diffused field, Baskind and Polack, AES Convention 108 (February 2000)

[14] Master Handbook of Acoustics, Everest and Pohlmann, 2009 [book]