I owned the Pro and another edition of this headphone, thinking it might sound different or better. I hated them both and couldn't sell them fast enough. The only headphones I thought were worse than the DT990 were the T90: like drills in my ears.


Beyerdynamic DT990 Pro Review (headphone)

- Thread starter amirm

- Start date

??? My subjective reaction after extensive listening tests matches the objective data. People arguing otherwise are in a battle with both types of evaluations. There was no pleasure for me in listening to this headphone.

I'm just here to watch the show. It's very clear that you've had a tremendous influence on the influencers in terms of DACs and amps, as well as the manufacturers (except tube amps, obviously). Headphones are a different beast and it will be interesting whether credibility is questioned after some of the consistently highly recommended headphones fail the Harman test, which I'm sure they will. But also people like what they like, so you're absolutely correct that arguing otherwise is an argument against the type of evaluation, which in itself is subjective and based on popular opinion.

I really have no dog in this fight and don't doubt your experience with them. I had DT990s years ago and returned them, although, if I remember correctly, I very much liked their sparkly sound for videogames; it was music where they disappointed. Really, I love the work you do. I'm just another guy on the internet looking for a free show, however lame that may be. Wouldn't mind seeing you test the SHP9500s; I had those and thought they were crap at times. I remember in the FF15 DLC the bass sounded broken in the snowmobile area, and I couldn't repeat it anywhere else. I also had Sundaras and thought they sounded great and natural for the music I listen to, but very flat in games. Curious, what contributes to a natural sound in headphones? Some sound more like instruments do in real life to me than others, but I have no idea why because I'm a noob when it comes to these things.

IME I don't think there is a perfect headphone for all use cases (movies, music, games) and even within genres of music, preferences vary greatly. I just find it interesting that if any of the Youtube influencers take issue, then there's going to be a popularity fight that determines the correctness of opinion - for nescient consumers like myself at least that don't have the luxury of trying everything. But again, I have tremendous respect for the work you do, and probably spend way too much time looking at consumer audio products.

- Thread Starter

- #303

> Headphones are a different beast and it will be interesting whether credibility is questioned after some of the consistently highly recommended headphones fail the Harman test, which I'm sure they will.

I am testing Harman research with every review: I not only measure, but spend quite a bit of time listening to headphones and using equalization to validate the results. My goal actually isn't to generate measurements but rather to determine if a headphone is good out of the box and, if not, whether it can be made good using equalization. These are the two things that ultimately matter if you want to know about a headphone. So whether a headphone matches the Harman curve or not is secondary in my book.

What I also test for, which is not addressed in the research, is power capability and, by implication, distortion. A headphone needs to pass these objective and subjective tests for it to get my nod.

All of this said, yes, people will complain and they think they can complain louder because we are testing headphones so as long as they have a pair of ears, they must have a valid opinion. So be it.

Do you listen to establish your subjective view prior to taking measurements?

- Thread Starter

- #305

> Do you listen to establish your subjective view prior to taking measurements?

If I have already owned the headphone, obviously yes. Otherwise no. I don't like hunting in the dark.

Implicit in your question is that I have preconceived notions of the sound of a headphone based on measurements alone. If this were the case, then I would not bother with listening! I listen because I can't strongly determine how good or bad some response error is until I have tested that part by itself. EQ allows me to remove or add back in any deviation in response on demand, and if needed blindly. This is a powerful technique.

And no, it is rarely if ever subject to bias. I can't tell you how many times I have clicked on and off and wondered why there was no difference in sound, only to find out that EQ was turned off altogether. In other words, I am able to easily catch mistakes this way. To the extent an EQ correction is too minor, and hence has some placebo factor, I mark it as such and note it in the review. Most of the corrections are quite large and clearly audible, as is the case with this headphone.

> I listen because I can't strongly determine how good or bad some response error is until I have tested that part by itself.

Unless there is something I don't understand, shouldn't you do it the other way around? Listening to the headphones first, write down your opinion then do the measurements? Isn't knowing the measurements beforehand kind of unfair since your perception has already been influenced?

I think the Beyer is HP26 and that Shure is HP29.

Nah, the only two headphones in the paper that are listed as costing $200 are the DT990 and SRH840, and in the slide below the HP26 and HP17 are the only two that line up exactly with the $200 mark:

DT990 (Oratory):

Note the resonances at ~80-90Hz and 400-500Hz, and the very similar error response shapes (upper red curves) for the large majority of the frequency range (only really deviating significantly above ~8kHz where measurements start to become less reliable).

SRH840 (Oratory):

This is less obvious, but again the error response curves are generally of the same shape, and with the two main broad peaks matching in center-frequency (~100-150Hz and 3kHz). I suspect the larger differences here between Harman and Oratory's measurements are due to poor frequency response consistency of this headphone (and possible unit variation) - the former may also explain why it received a lower average rating from listeners (31/100) than its measurements suggest (and its preference rating from the formula predicts). Just as suggestive corollary evidence, here's the frequency response consistency of the SRH440 (which looks to have a similar design and pads to the 840), as measured by Rtings (bass on real people with in-ear mics, mids to treble on HATS):

Compare with the DT990:

So the data show Oratory's DT990 measurements matching better with HP26 than HP17, and his SRH840 measurements matching better with HP17 than HP26. Therefore as HP26 and HP17 must be either the DT990 or SRH840 as shown by the first slide in this post and the headphone price listing in the paper, from all this I think it's reasonable to conclude that HP26 = DT990 and HP17 = SRH840, just as @flipflop originally concluded from his analysis.

As hard as it may be for some people to accept, this means the DT990's frequency response is on average rated as an 'excellent' (Harman's criteria) 9/10 by listeners in controlled, double-blind tests (done at a reasonable average level of 85 dB), which is the gold standard metric, for which I think we should all remember headphone measurements are a useful but imperfect proxy, with single-user, sighted (headphone and measurement), non-level matched listening an unreliable distant third place with all the uncontrolled-for nuisance variables that entails, not least subconscious bias influencing preference, which is repeatedly commonly confused with bias influencing discrimination / the placebo effect, the latter requiring differences between stimuli to be small but the former most certainly does not.

- Thread Starter

- #309

> Unless there is something I don't understand, shouldn't you do it the other way around? Listening to the headphones first, write down your opinion then do the measurements? Isn't knowing the measurements beforehand kind of unfair since your perception has already been influenced?

No. I just got done explaining why.

Let's test your theory: if I see heavily boosted lows in measurements, are you saying that I should be perceiving something else? On what basis? That our understanding of measurements is so wrong as to have the opposite effect in this regard? Why would we measure then?

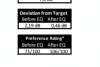

Remember, whether I listen first or second, the test is sighted. So ignore all the reasons we normally use blind tests (price, brand, design, etc.). For there to be an issue, frequency response errors must be so small as to have false perception overwhelm it -- not for any other kind of reason. If I had proposed 0.1 dB correction, then sure, you could easily make such a case. But none of my filters are that fine:

Except for band 4 which could be outside of hearing range for some, the rest of these filters have massive impact on tonality. It is hard to imagine them making a difference bigger than they really are.

Anyone who tries these EQ settings reports back a significant difference. To the extent they like the post-EQ sound better, you would have a heck of a time arguing against that by saying that in blind tests they could say otherwise.

So to summarize, when performing sighted evaluation of headphones and speakers, I prefer to start with the measurements and test out whether the deviations shown in the graphs have the audible effects we think they have. If they do, then I correct for them with the deliverables being a set of EQ filters. That filter then gets tested by you all and we learn if they are correct. To the extent they are, then we gain confidence in the measurements. In other words, I am a proxy for you all to test the validity of measurements.

The entire reason I got into headphone testing is the above discovery and realization. Me presenting subjective listening tests to you all didn't need me buying measurement gear. And me presenting measurements alone would not work either due to variability of such.
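For readers who want to see what one of these EQ bands does numerically, here is a minimal sketch of a single parametric "peaking" filter using the widely published Audio EQ Cookbook biquad formulas. The band values (8 kHz, -6 dB, Q of 2) and the function names are hypothetical, chosen only for illustration; they are not the filter settings from the review.

```python
import cmath
import math


def peaking_biquad(fs, f0, gain_db, q):
    """Return normalized (b, a) coefficients for one peaking EQ band."""
    a_lin = 10 ** (gain_db / 40)           # square root of the linear gain
    w0 = 2 * math.pi * f0 / fs             # center frequency in radians/sample
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * a_lin, -2 * math.cos(w0), 1 - alpha * a_lin]
    a = [1 + alpha / a_lin, -2 * math.cos(w0), 1 - alpha / a_lin]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def gain_db_at(b, a, fs, f):
    """Magnitude response of the biquad at frequency f, in dB."""
    z1 = cmath.exp(-1j * 2 * math.pi * f / fs)   # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20 * math.log10(abs(num / den))


# Hypothetical band: a 6 dB cut at 8 kHz with Q = 2 at a 48 kHz sample rate.
b, a = peaking_biquad(fs=48000, f0=8000, gain_db=-6.0, q=2.0)
at_center = gain_db_at(b, a, 48000, 8000)   # equals the requested gain
far_below = gain_db_at(b, a, 48000, 100)    # essentially untouched
```

The filter applies the full requested gain at its center frequency and leaves distant frequencies essentially alone, which is why a handful of multi-dB bands like this changes tonality in a clearly audible way while a 0.1 dB band would not.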

What I meant is this: let's say the measurements show that there is a big peak in the treble. If you listen to it knowing that this peak exists, aren't you afraid that your brain might exaggerate said peak and make it sound worse than it actually is just because you expected it?

I'm not trying to question your method or the measurements, I was just wondering if you ever considered that option.

- Thread Starter

- #311

> As hard as it may be for some people to accept, this means the DT990's frequency response is on average rated as an 'excellent' (Harman's criteria) 9/10 by listeners in controlled, double-blind tests (done at a reasonable average level of 85 dB), which is the gold standard metric, for which I think we should all remember headphone measurements are a useful but imperfect proxy, with single-user, sighted (headphone and measurement), non-level matched listening an unreliable distant third place with all the uncontrolled-for nuisance variables that entails, not least subconscious bias influencing preference, which is repeatedly commonly confused with bias influencing discrimination / the placebo effect, the latter requiring differences between stimuli to be small but the former most certainly does not.

The heck you talking about?

Here is Oratory's Harman score:

It is 78, not 91.

Since you consider both Harman's and Oratory's measurements as bible, you have a serious problem rationalizing this large difference. My measurements will likely generate even lower scores. Until you explain this difference, quoting Harman's measurements and listening test results doesn't make any sense. Either you are wrong about which headphone that score goes with, they made a mistake in the paper, or something else is going on. Either way, we are on our own using our own measurements and developing our own equalization to determine the truth. If you want to think this headphone is the most perfect there is based on a score of 91, you are welcome to do so. Just don't mention it to me because it is not true in any form or fashion.

- Thread Starter

- #312

> What I meant is this: let's say the measurements show that there is a big peak in the treble. If you listen to it knowing that this peak exists, aren't you afraid that your brain might exaggerate said peak and make it sound worse than it actually is just because you expected it?

That expectation is a guess that you could make with or without measurements. Me making such a guess without measurements is more subject to error than not. You would have to have ultimate trust in my listening abilities to want me to pass my judgements that way!

Using measurements and then developing inverse filters, I can test my preconceptions. Often I make corrections at very high frequencies and realize that they don't fix the brightness that I expected they would. So I throw away the filter and try something else. EQ development takes me a long time when it comes to headphones. I usually measure one day and then spend the evening and sometimes the next day experimenting to find optimal correction.

So let me summarize again: we need to get to a more accurate presentation of headphone performance than pure measurements. I have found that this occurs by developing effective EQ and doing iterative listening tests. I know of no other way to bring sanity to this. Maybe there is some error in my process, but I assure you there is far more error in running off with some measurement alone.
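As a rough illustration of the "inverse filter" starting point described above, the sketch below turns a measured response and a target into a per-frequency correction: the deviation is measured minus target, and the first-guess correction is its negative. All names and numbers are hypothetical, and the boost limit is an assumption added here to reflect the concern about power capability and distortion, not a documented step of the review process. The listening iterations that follow are the part code cannot replace.

```python
def inverse_correction(measured_db, target_db, max_boost_db=6.0):
    """Per-frequency gain (dB) that would move `measured_db` onto `target_db`."""
    correction = {}
    for freq, level in measured_db.items():
        error = level - target_db[freq]   # positive: headphone is too loud here
        gain = -error                     # inverse filter: undo the deviation
        # Assumption: cap boosts to protect headroom; cuts are left as-is.
        correction[freq] = min(gain, max_boost_db)
    return correction


# Hypothetical numbers (Hz -> dB), chosen only to illustrate the arithmetic.
measured = {20: -10.0, 100: 5.0, 1000: 0.0, 8000: 9.0}
target = {20: 0.0, 100: 2.0, 1000: 0.0, 8000: 3.0}
eq = inverse_correction(measured, target)
```

Here the 8 kHz peak gets a 6 dB cut, the 100 Hz hump a 3 dB cut, and the 10 dB shortfall at 20 Hz is only partly filled because of the cap. Each resulting band would then be switched in and out while listening, and thrown away if it does not fix what it was expected to fix.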

samwell7

Senior Member

Just an add-on to this, the Shure SRH840 is 71/100 from the Oratory1990 measurements.

- Thread Starter

- #314

> Just an add-on to this, the Shure SRH840 is 71/100 from the Oratory1990 measurements.

Thanks. It shows as 30 or so on Harman graph for preference rating. It is very hard to rationalize such data...

samwell7

Senior Member

> Thanks. It shows as 30 or so on Harman graph for preference rating. It is very hard to rationalize such data...

Yeah that's a huge variance, I haven't been able to find the referenced paper; does it mention the headphones and their respective prices in the paper? Is this how people are deducing that the Beyer and 840s are the two at $200?

solderdude

Grand Contributor

compelling evidence ...

I would agree with this judging from the plots.

The problem here is the rating which was derived in the study.

That is the incorrect part and is logical to be incorrect.

That preference is derived from an emulation of the DT990 and SRH840 (at least it looks to be those) done on an AKG headphone.

This obviously has (substantial) errors in it when compared to the actual models.

A perfect example of why I don't care for ratings. They are just numbers based on either listening or test results with a certain weighting assuming everyone likes the same sound or the reviewer(s) have the same preference.

I believe oratory's preference ratings are auto computed based on some formula that could probably do with a bit of extra tuning and according to him the values it produces hold little meaning, especially when you start getting close to 100.

The numbers from the Harman research, on the other hand, are actual preference ratings from the participants of the studies. People indeed liked the DT990's default tuning quite a lot. That's just a fact.

Trying to find ways to invalidate this data due to imperfect emulation, source material or whatever else can only lead to invalidating the results of the study, which is probably not prudent if your aim is to take shots at the Beyers for failing to conform with the very results you're now invalidating.
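To make the distinction between a computed score and a listener rating concrete, here is a hedged sketch of how an auto-computed preference score of this kind is typically derived from a measurement. It assumes the commonly cited form of Harman's around-ear/on-ear prediction model: a linear combination of the standard deviation and the absolute slope of the error curve (measured response minus target) between 50 Hz and 10 kHz. The default coefficients are the values usually quoted for that model and should be treated as assumptions; this is not Oratory's actual implementation, and the function name and sample data are hypothetical.

```python
import math
import statistics


def predicted_preference(freqs_hz, error_db,
                         intercept=114.49, sd_weight=12.62, slope_weight=15.52):
    """Score an error curve (measured minus target, in dB) over 50 Hz to 10 kHz."""
    band = [(math.log(f), e) for f, e in zip(freqs_hz, error_db)
            if 50 <= f <= 10000]
    xs = [x for x, _ in band]
    ys = [y for _, y in band]
    sd = statistics.stdev(ys)              # how uneven the error curve is
    # Least-squares slope of error (dB) against ln(frequency): overall tilt.
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in band)
             / sum((x - mx) ** 2 for x in xs))
    return intercept - sd_weight * sd - slope_weight * abs(slope)


# Hypothetical error curves, for illustration only.
freqs = [50, 100, 200, 500, 1000, 2000, 5000, 10000]
flat_score = predicted_preference(freqs, [0.0] * len(freqs))
bright_score = predicted_preference(freqs, [0, 0, 0, 0, 0, 1, 4, 8])
```

A score like this depends entirely on the measurement rig, the target curve and the unit measured, so two sets of measurements of the same model can produce different numbers. The ratings reported in the listening studies are a different kind of data: averages of what participants actually scored.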

killdozzer

Major Contributor

controlled, double-blind tests, which of course eliminate confounding variables from sighted listening

OK, I'll take one for the team (and I'm really asking this): how do you imagine a double-blind test for headphones?

ZööZ

Addicted to Fun and Learning

> PMC speakers

Not familiar with those, do they have toe-in instructions that deviate from the mainstream practice which would incite animosity among audio enthusiasts?

And to stay on topic:

Are there any "gold standard" test tracks where the tonality issues are very clear and easily heard, or is it more of an acquired talent, through experience, to be able to ballpark what/if something is off?

I actually modified Amir's DT990 EQ a little yesterday, for my speakers of all things, because I could hear very similar treble peaks in them.

After a while of playing around with the settings I came to the conclusion that I actually managed to improve the sound (but shaving the treble peaks also took some of the spaciousness of the sound away), but I might just be deluding myself into thinking so.

> OK, I'll take one for the team (and I'm really asking this): how do you imagine a double-blind test for headphones?

They test frequency responses, not headphones themselves.
