-

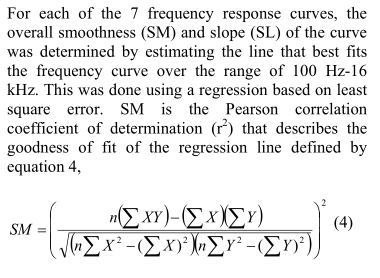

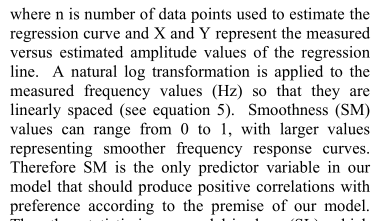

Welcome to ASR. There are many reviews of audio hardware and expert members to help answer your questions. Click here to have your audio equipment measured for free!

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Master Preference Ratings for Loudspeakers

- Thread starter MZKM

- Start date

I could also do the reverse: find the EQ that maximises the score.

I guess coming up with the ideal EQ frequency response to maximize the score for a speaker would be an interesting optimization problem. I worry that it could be pushing the statistical reasoning behind the Olive score a bit too far (correlation, causality, etc.), but it's worth a try I guess.

Coming up with the ideal EQ response would be a great feature, however do keep in mind that there's a difference between a "desired" EQ response and an EQ response one can actually implement in a FIR or IIR filter due to constraints. Anyone who tried to use the "automatic" EQ generation in REW knows this is not an easy problem to solve.

How would one do that?

I would define the EQ as a set of IIR filters (2 parameters per filter), so roughly 10 parameters with 5 filters.

pref_score is a function of these 10 parameters. Just differentiate it (by hand or automatically) and then optimise.

The pref score is a sum of mostly quadratic functions, so that should work well.

From time to time there are papers published at the AES about optimization of IIR filters. Considering the number of papers still being published on this topic, I doubt it's an easy problem to solve, but hey, that sounds like a fun project. FIR could be easier because a FIR filter can be generated directly from a "desired" frequency response (as long as one is not too demanding wrt filter length and slopes).

i would define eq as a set of IIR (2 parameters per filter)

Why 2? There are 3 parameters for an IIR biquad peak EQ filter: frequency, gain, and Q (slope).
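To make the three parameters concrete, here is a minimal sketch of the magnitude response of one peaking biquad. It uses the coefficient formulas from the widely used "Audio EQ Cookbook". That choice, the 48 kHz sample rate, and the function names `peaking_biquad_db` and `eq_response_db` are assumptions for illustration, not anything specified in this thread.

```python
import cmath
import math


def peaking_biquad_db(freq_hz, f0_hz, gain_db, q, sample_rate_hz=48000.0):
    """Magnitude response in dB of one peaking EQ biquad, evaluated at freq_hz."""
    amp = 10.0 ** (gain_db / 40.0)                  # linear amplitude factor A
    w0 = 2.0 * math.pi * f0_hz / sample_rate_hz     # normalised centre frequency
    alpha = math.sin(w0) / (2.0 * q)                # bandwidth term derived from Q
    b = (1.0 + alpha * amp, -2.0 * math.cos(w0), 1.0 - alpha * amp)
    a = (1.0 + alpha / amp, -2.0 * math.cos(w0), 1.0 - alpha / amp)
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / sample_rate_hz)  # z^-1
    numerator = b[0] + b[1] * z1 + b[2] * z1 * z1
    denominator = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20.0 * math.log10(abs(numerator / denominator))


def eq_response_db(freq_hz, filters, sample_rate_hz=48000.0):
    """Total EQ gain in dB: cascaded biquads add up in the dB domain."""
    return sum(peaking_biquad_db(freq_hz, f0, gain, q, sample_rate_hz)
               for f0, gain, q in filters)


# Five filters x three parameters = 15 numbers to optimise (hypothetical values).
example_filters = [(100.0, -3.0, 1.0), (400.0, 2.0, 2.0), (1000.0, 6.0, 2.0),
                   (3000.0, -2.0, 4.0), (8000.0, 1.5, 1.5)]
print(round(eq_response_db(1000.0, example_filters), 2))
```

With five such filters the search space has 15 dimensions rather than 10, which is the point of the correction above.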

yes 3. I will look at a few papers.

I agree on the fun part. Let's first compute a gradient and then I will experiment. The benefit of using IIR biquads is that their parameters are boxed (each value has a min and a max). That will create some inequality constraints that I hope to deal with using a Lagrangian (min-max). If the function is quasi-convex, life is easy; if not, we will see. Using TF I can also parallelise the expensive part of the computation quite well and can distribute it if needed. I may have access to some idle resources if needed.

after reading https://www.sciencedirect.com/science/article/pii/S1319157814000032 and http://www.aes.org/e-lib/browse.cfm?elib=15778

i will start with a GFA or a genetic algorithm. I don't need to find an optimum, any improvement is good.
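As a rough illustration of the "any improvement is good" approach, the sketch below runs a (1+1) evolution strategy, the simplest relative of a genetic algorithm, under box constraints. Everything in it is an assumption made for the example: the bounds, the bell-shaped `bell_db` stand-in for a real biquad, and above all `toy_score`, which only rewards flatness and is not the Olive preference score.

```python
import math
import random

# Hypothetical box constraints per filter: centre frequency (Hz), gain (dB), Q.
BOUNDS = [(20.0, 20000.0), (-6.0, 6.0), (0.5, 10.0)]


def bell_db(freq_hz, f0_hz, gain_db, q):
    """Crude bell-shaped stand-in for a peak filter (not a real biquad)."""
    octaves = math.log2(freq_hz / f0_hz)
    return gain_db * math.exp(-0.5 * (octaves * q) ** 2)


def toy_score(params, freqs, speaker_db):
    """Toy objective: minus the RMS deviation of the equalised curve (higher is better)."""
    corrected = []
    for f, level in zip(freqs, speaker_db):
        eq = sum(bell_db(f, *params[i:i + 3]) for i in range(0, len(params), 3))
        corrected.append(level + eq)
    mean = sum(corrected) / len(corrected)
    return -math.sqrt(sum((v - mean) ** 2 for v in corrected) / len(corrected))


def mutate(params, rng, scale=0.1):
    """Gaussian mutation of every parameter, clamped back into its box."""
    child = []
    for i, value in enumerate(params):
        low, high = BOUNDS[i % 3]
        moved = value + rng.gauss(0.0, scale * (high - low))
        child.append(max(low, min(high, moved)))
    return child


def evolve(freqs, speaker_db, n_filters=5, generations=200, seed=0):
    """Keep a single candidate and accept a mutation only if it scores better."""
    rng = random.Random(seed)
    best = [1000.0, 0.0, 1.0] * n_filters      # start from "no EQ"
    best_score = toy_score(best, freqs, speaker_db)
    for _ in range(generations):
        child = mutate(best, rng)
        score = toy_score(child, freqs, speaker_db)
        if score > best_score:
            best, best_score = child, score
    return best, best_score
```

Because a candidate is replaced only when it scores higher, the result can never be worse than no EQ at all, and clamping keeps every parameter inside its box without a Lagrangian term.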

In light of this I'm wondering if SM, instead of being called "smoothness" should instead be called "tilt compensation factor" or something like that. Its effect (whether intentional or not) is to compensate for the effect of tilt on other variables, most notably NBD.

I just implemented slope and SM calculations in Loudspeaker Explorer, so let's revisit this in more detail with charts and examples. It turns out the latest 3 measured speakers (JBL XPL 90, KEF Q350, and PreSonus Eris 5 XT) make good examples as they exhibit significant differences in SM calculation.

So, first things first, we start with the usual linear regression:

It's a bit hard to tell from the chart, but the degree of tilt is actually different between speakers: -0.7 dB/octave for the JBL, -1.1 dB/octave for the KEF, and -0.6 dB/octave for the PreSonus.
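For readers who want to reproduce a dB/octave figure like those above, here is a minimal sketch of the regression step. It assumes an ordinary least-squares fit of level against log2(frequency); the function names and the sample data are made up for illustration and are not Loudspeaker Explorer's actual code.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares line through (xs, ys); returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def tilt_db_per_octave(freqs_hz, levels_db):
    """Slope of the regression line with log2(f) on the x axis, i.e. dB per octave."""
    slope, _ = fit_line([math.log2(f) for f in freqs_hz], levels_db)
    return slope


# Hypothetical curve: one point per octave, falling 0.7 dB per octave.
freqs = [100.0 * 2 ** k for k in range(8)]
levels = [85.0 - 0.7 * k for k in range(8)]
print(tilt_db_per_octave(freqs, levels))
```

A slope fitted against ln(Hz) instead converts to dB/octave by multiplying by ln 2, since one octave spans ln 2 on a natural-log axis.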

Then, one way to approach SM is to first generate two curves from the original response: one that has merely been mean-adjusted (i.e. normalized), and one that is slope-adjusted (i.e. with the slope linear regression subtracted):

The above chart can be useful in and of itself because it shows what the response of the speaker would be if there was no overall tilt.
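The two curves described above can be sketched as follows. This is only an illustration under the assumption that "slope-adjusted" means subtracting the least-squares line fitted against log-frequency; `mean_adjusted` and `slope_adjusted` are hypothetical names.

```python
import math


def mean_adjusted(levels_db):
    """Subtract the mean level so the curve is centred on 0 dB."""
    mean = sum(levels_db) / len(levels_db)
    return [v - mean for v in levels_db]


def slope_adjusted(freqs_hz, levels_db):
    """Subtract the best-fit line (vs. log2 frequency), removing overall tilt."""
    xs = [math.log2(f) for f in freqs_hz]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(levels_db) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, levels_db))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return [y - (intercept + slope * x) for x, y in zip(xs, levels_db)]
```

The squared values of the first curve sum to TSS and those of the second to RSS, which is why these two curves are the natural starting point for the r² discussion.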

Sadly the easy stuff stops there; now, we have to get deep into the weeds of r² calculation, and the charts become a bit harder to interpret. Reminding ourselves of the definition of r², we can square the deviation in the above chart and come up with the numerator and denominator of the fraction used in r²:

The blue part (TSS) shows deviation around the mean. Higher TSS improves r² and thus SM; this is the part that is highly counter-intuitive and that almost everyone got wrong initially.

The orange part (RSS) shows deviation around the linear regression (or "tilt-compensated deviation" if you like). Higher RSS, unsurprisingly, degrades SM.

The important thing to remember is that a larger slope increases deviation around the mean, therefore it increases TSS, but it does not increase RSS. Therefore, larger slopes improve SM. Hence the conclusion that SM is biased towards tilted responses.
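The claim can be checked numerically with a small sketch. The data are synthetic (a fixed sine ripple plus a chosen tilt), and `tss_rss_r2` is a hypothetical helper, so this only illustrates the mechanism rather than reproducing any measured speaker.

```python
import math


def tss_rss_r2(xs, ys):
    """Return (TSS, RSS, r²) for the least-squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    tss = sum((y - mean_y) ** 2 for y in ys)                      # around the mean
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))  # around the line
    return tss, rss, 1.0 - rss / tss


# Synthetic data: 1/6-octave points from 100 Hz to roughly 16 kHz.
octaves = [i / 6.0 for i in range(45)]
ripple = [math.sin(3.0 * x) for x in octaves]    # identical "roughness" in both cases


def tilted(tilt_db_per_octave):
    """The same ripple with a different overall tilt added."""
    return [r + tilt_db_per_octave * x for r, x in zip(ripple, octaves)]


for tilt in (-0.5, -1.5):
    tss, rss, r2 = tss_rss_r2(octaves, tilted(tilt))
    print(f"tilt {tilt:+.1f} dB/oct: TSS={tss:.1f} RSS={rss:.1f} r2={r2:.3f}")
```

Both curves have exactly the same ripple, so RSS is unchanged. Only TSS grows with the steeper tilt, and r² rises with it.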

In the above chart, one would intuitively expect the Q350 to win by a wide margin, due to its high TSS and low RSS, which is expected from its more tilted response. We would expect it to be followed by the JBL, which looks similar but with a smaller divergence between TSS and RSS. Finally, we would expect the PreSonus to come in last due to its RSS looking quite large compared to its TSS.

We can get confirmation on that by looking at the RSS/TSS ratio, which is basically SM modulo an offset:

And from there we finally arrive at the final SM score, and it looks just like we intuitively expected from the above charts:

OP

- Thread Starter

- #388

I still don’t understand how including the target slope in the SM calculation does anything, as I don’t think I’ve seen it impact the results. So it does indeed seem to favor more tilt (narrow directivity) rather than closeness to the target slope.

This is likely where it is important to note the speakers being used in the testing: 2/3/4-way designs. A more tilted response only improves the score if the NBD score doesn’t decrease proportionately. The speakers used for testing will have different levels of directivity, but they are more similar than not when compared to, say, an MBL omnidirectional speaker, which would have a slope much closer to 0, and thus negatively impact the score. So, I wonder if this formula is valid for anything other than monopole speakers; would an Ohm Walsh tower (omnidirectional woofer with the tweeter aimed upwards towards the middle of the room) be totally unable to be reliably scored? Could it even be reliably measured?

This is where experimentation can come into play, and I’ve been meaning to do this: take the normalized PIR and use that to calculate NBD_PIR, get rid of SM_PIR, give NBD_PIR the combined PIR weight (38%), and see how that impacts the score.

Also, I have made a Tonal Balance graph, which is the average of the normalized PIR and on-axis using their respective weights. I wonder how it would look if I used that to calculate NBD and only paired it with LFX; likely a lot less worthwhile than the above experiment.

I still don’t understand how including the target slope on the SM calculation does anything.

The target slope is not "included on the SM calculation". SL is the variable that relates to the target slope, not SM. (SL did not make it into the final model, and that's not necessarily a problem.) The Olive paper never suggests that the target slope has anything to do with SM. Sure, SL and SM are described in the same section of the paper, but that's presumably because they are calculated from the same linear regression. I don't understand what you find problematic here (aside from the weird definition of SM, of course).

This is likely where it is important to note the speakers being used in the testing, 2/3/4 way designs. A more tilted response only improves the score if the NBD score doesn’t decrease proportionately. The speakers used for testing will have different levels of directivity, but they are more similar than not when compared to say an MBL omnidirectional speaker, which would have a slope much more close to 0, and thus negatively impact the score. So, I wonder if this formula is valid for anything other than monopole speakers; would an Ohm Walsh tower be totally unable to be reliably scored (omnidirectional woofer with the tweeter aimed upwards towards the middle of the room. Could it even be reliably measured?).

I agree that the weird and seemingly ill-defined SM is likely compromising the accuracy of the model when applied to speakers whose behaviour differs significantly from the sample of loudspeakers used in the test. That's unfortunate.

This is where experimentation can come into play, and I‘ve been meaning to do this, but take the normalized PIR and use that to calculate NBD_PIR, get rid of SM_PIR, and weight it the combined PIR weight (38%), and see how that impacts the score. Also, I have made a Tonal Balance graph, which is the average of the normalized PIR and on-axis using their respective weights, I wonder if I use that to calculate NBD and only pair it with LFX, how that would look, likely a lot less worthwhile than the above experiment.

Why not… but at the risk of sounding like @bobbooo, we still have the ever-recurring problem of not being able to check any modified model against the data. It's basically an exercise in fumbling in the dark: you can twist the numbers all you want, you will never know if you're actually improving the model or breaking it in subtle ways that are practically impossible to know.

Heck, we don't even know for sure if the variables that made it in the Olive model are representative of what is causing us to prefer a loudspeaker over another, or if they are merely correlated with preference. (If it's the latter, then it would mean the model is not a true explanation of how listeners came up with their ratings, meaning the model is basically a black box and therefore impossible to tweak in a sensible way.)

I'm starting to wonder whether we should have two models: the original Olive model, for those who only trust the original double-blind peer-reviewed paper, don't like wild guesses, and/or are comparing speakers that are known to be similar to those in the Olive test (i.e. non-coaxial monopoles). And a separate "experimental" model that could be built from scratch based on what we know about perception of spinoramas (e.g. the importance of the DI curve, the relative unimportance of overall tilt), that might make more sense to use for non-standard speakers and would come with a fat warning that it is not directly backed by double-blind testing data and should therefore be taken with a huge grain of salt. The experimental model could be calibrated against the Olive model by aligning the scores for "standard" speakers. But even then, if we are asked to quantify how accurate that experimental model would be, our best answer would be ¯\_(ツ)_/¯

OP

- Thread Starter

- #390

The target slope is not "included on the SM calculation". SL is the variable that relates to the target slope, not SM. (SL did not make it into the final model, and that's not necessarily a problem.) The Olive paper never suggests that the target slope has anything to do with SM.

You have to find the estimated (predicted) values:

Slope (SL) is used to find the estimated/predicted values:

Target slope is used to find Slope (SL):

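I think maybe I understand what you don't understand. It is true that, strictly speaking, the doc does contradict itself. First, it states:

View attachment 65147

But then, a different definition appears!

View attachment 65146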

So, which is it? Is SL defined as b, or is it the distance between the target b and the b of the best-fit linear regression?

The reason why it didn't seem that confusing to me is because I simply ignored the first definition, treating it as just a poorly worded description. To me, the second equation is the true definition of SL - indeed that makes way more sense as a candidate variable in the preference model. If I use that interpretation, then everything seems clear to me, and SL does not participate in the definition of SM at all. (b does participate, but only because it defines the best-fit linear regression, which SM uses.)

I'm not sure I understand your point about "estimated amplitude values". In this context I interpret "estimated" to mean "the values from the best-fit linear regression", nothing else. That is a standard meaning of the word "estimated" in statistics.

@MZKM Let me try to explain this from a different perspective.

Assume for the sake of the argument that the text that starts with "The other statistic in our model is slope (SL)…" is not there. Or just assume it's in a different section. The remaining text would be titled "smoothness" and it would look like this:

My point is: you don't need anything else to successfully compute SM. Everything is in that blob of text, and that blob of text alone. It stands on its own and you don't need the part I removed to make sense of it. Here's the gist of it (paraphrasing):

SM is the r² of the least-squares linear regression that best fits the curve over the range 100 Hz-16 kHz.
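Written out, the paraphrase above amounts to the following. It assumes, as elsewhere in this thread, that the line is fitted against ln(Hz) with intercept a and slope b, and that the y_i are the N samples of the curve in dB between 100 Hz and 16 kHz. This is the standard r² of a simple linear regression, not a transcription of the paper's own equation.

```latex
\hat{y}_i = a + b \ln f_i, \qquad
\mathrm{SM} = r^2 = 1 - \frac{\mathrm{RSS}}{\mathrm{TSS}}
            = 1 - \frac{\sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2}
                       {\sum_{i=1}^{N} \left(y_i - \bar{y}\right)^2}
```

Since a and b are both free in the fit, the fitted line can never do worse than the horizontal line through the mean, so RSS ≤ TSS and SM stays between 0 and 1.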

That's it. It's all you need to know. No SL, no slope, no b, no nothing.

Is the text poorly worded and confusing? Yes. (Clearly, the text about SL should have been moved to a separate section!) Can we make sense of it nonetheless with a high degree of certainty? Yes, I believe so. This interpretation makes perfect sense to me.

OP

- Thread Starter

- #393

I think maybe I understand what you don't understand. It is true that, strictly speaking, the doc does contradict itself. First, it states:

View attachment 65147

But then, a different definition appears!

View attachment 65146

I’m reading it as:

Estimated values = abs(target-measured) • ln(Hz) + intercept

Y = m • x + b

The slope (m) is calculated from the absolute difference of the measured slope from the target slope.

So, which is it? Is SL defined as b, or is it the distance between the b of the target and the b of the best-fit linear regression?

I’m reading it as not two definitions: b = abs(target-measured).

Olive simply turned the SL into variable b.

It’s not “b of the best-fit linear regression”, it’s “b of the raw slope”.

I’m reading it as:

Estimated values = abs(target-measured) • ln(Hz) + intercept

Y = m • x + b

I assume you meant Y = m • x + a? Otherwise I am very, very deeply confused by your post…

The slope (m) is calculated from the absolute difference of the measured slope from the target slope.

b = abs(target-measured).

Olive simply turned the SL into variable b.

Err…

I mean, sure, that's one way to reconcile the two seemingly contradictory definitions of SL. If your interpretation is valid then the text is even more convoluted and confusing than I thought! But okay, let's assume for the sake of the argument that your interpretation is correct and see where that leads us.

Before we can do anything, we have to calculate b_measured. Already there is a problem, because b_measured itself is not defined. (It is perfectly well-defined in my interpretation, because in my interpretation it's defined by Equation 5.)

Okay, let's ignore that problem and come up with a best guess for the definition of b_measured: it's the slope of the best-fit least-squares linear regression line on the original curve in log-frequency space. There is literally nothing in the doc that states that it is, but okay, let's assume that's the definition so that we can make progress.

Okay, so b = abs(b_target - b_measured). At this point b is determined. (This makes the subscript notation used in the text quite confusing, by the way, because b_target and b_measured are not just special cases or "examples" of b. In my interpretation, they are.) Equation 5 now defines another best-fit least-squares linear regression line where the slope is fixed and only the intercept (a) needs fitting. (This is where our interpretations diverge sharply - in my interpretation, b is an output of the linear regression process, not an input.)

Okay, so now we are fitting Y, a linear regression with a predetermined slope (b) that is the difference between target slope and the measured slope we computed earlier.

I will point out that at this point things become, quite frankly, completely bonkers: we are computing a best-fit linear regression with a slope that is fixed to a value that itself is a function of the slope of another best-fit regression we did earlier on the same data. I mean, WTF? That just sounds incredibly convoluted for no good reason. If we only had a single regression where the slope is forced to b_target, then I'd understand. But this? Come on, this is silly.

But okay, let's move on to SM. Now looking at the text this is a bit awkward, because, according to your interpretation, SM uses Y which itself uses b, but SM is discussed before Y and b. That's a weird way to order the text, but okay. (My interpretation doesn't have that problem, as I showed in my previous post.)

SM is the r² of the regression. Let's consider a speaker with an on-target slope (b_measured=b_target). That speaker will have b=0, and for that speaker Y is therefore a horizontal line through the mean. Now things become… interesting: our speaker with perfect slope will have an SM of… zero. Indeed, the r² of a straight horizontal line through the mean is always zero (RSS=TSS).

If the response is flat (b_measured=0), then Y uses a slope of b_target, which is a worse fit than the mean itself and SM becomes… negative! That directly contradicts the text, which states that SM values can only range from 0 to 1. (That holds in my interpretation, because in my interpretation the regression is always a better fit than the mean (RSS<TSS). That's not true in your interpretation because the slope of the regression is fixed.)
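Both cases are easy to check numerically. The sketch below fits only the intercept for a given fixed slope and computes r² against synthetic, nearly flat data. The ripple, the frequency grid, and the helper name `r2_fixed_slope` are assumptions for illustration; 1.75 is simply the magnitude of the target slope mentioned in this thread.

```python
import math


def r2_fixed_slope(xs, ys, slope):
    """r² of a line whose slope is imposed; only the intercept is least-squares fitted."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    intercept = mean_y - slope * mean_x     # optimal intercept for a fixed slope
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    tss = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - rss / tss


# Synthetic, nearly flat response: small ripple, 1/6-octave points from 100 Hz up.
xs = [math.log(100.0 * 2 ** (i / 6.0)) for i in range(45)]   # ln(Hz)
ys = [0.5 * math.sin(3.0 * x) for x in xs]

print(r2_fixed_slope(xs, ys, 0.0))    # slope forced to 0: the line is the mean
print(r2_fixed_slope(xs, ys, 1.75))   # slope forced to |target| on flat data
```

With the slope forced to 0 the fitted line is the mean itself, so RSS equals TSS and r² is zero. With a large imposed slope on nearly flat data RSS exceeds TSS and r² goes negative, which an ordinary regression with a free slope cannot do.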

Generalizing to values of b_measured that are neither 0 nor b_target, it quickly becomes apparent that SM computes the r² of a regression whose slope seems almost random and unrelated to anything of relevance. (Especially since b is defined to always be positive, which makes exactly zero sense no matter how you look at it.) SM seems completely broken and pointless at this point.

In light of the above, I will simply invoke Occam's razor and stick to my interpretation. I'm not saying my interpretation is free of problems: it doesn't explain why the text seems to initially define SL as b, and the definition of SM as r² is still a bit bizarre, as we've discussed at length already. But those are the only issues with my interpretation, as far as I can tell. Your interpretation has way, waaaay bigger issues and leads to a definition of SM that doesn't seem to make any kind of sense on any level.

(One has to wonder why the document was phrased in such a confusing way. Here's what I think might have happened: initially, SM and SL were defined in two completely separate sections and everything was clear. Then, Olive or one of his proofreaders might have noticed that, hey, SM and SL use the same linear regression under the hood, so we might as well put them in the same section. So the two sections were simply merged together and no-one noticed that the text became really confusing as a result. That, and the poor wording of "SL is defined as b", which I strongly believe is simply a mistake in the text.)

OP

- Thread Starter

- #395

Geez, that’s a lot of investigation.

However, I don’t think that’s what I was talking about.

SL = | b_target - b_measured |

Where b_measured is simply the raw slope of the data (which is mentioned in the sentence right before talking about this formula).

So, you take the -1.75 slope, the raw slope, find their absolute difference, multiply it by ln(Hz), then add the y-intercept.

I assume you meant Y = m • x + a

??? Slope-Intercept form is y=mx+b; at least it is in the US (I know the UK uses BODMAS instead of PEMDAS).

However, I don’t think that’s what I was talking about.

SL = | b_target - b_measured |

Where b_measured is simply the raw slope of the data (which is mentioned in the sentence right before talking about this formula).

Yep, we agree on that, and this is the assumption I made in my previous post. My various points still stand.

So, you take the -1.75 slope, the raw slope, find their absolute difference, multiply it by ln(Hz), then add the y-intercept.

Yep, I understand that's your interpretation. And as I explain in my previous post, that makes absolutely no sense whatsoever. The resulting line (i.e. Y(Hz)) makes zero sense by itself (it's not the target slope, nor the raw slope, but... something derived from both with an abs() in the middle that makes the slope always positive?! WTF?), and the r² of that line relative to the original curve (i.e. SM) makes even less sense - at this point, I'd argue a random number generator would produce a more relevant result. This is why I don't think your interpretation is correct, and I elaborate on that in my previous post.

??? Slope-Intercept form is y=mx+b; at least it is in the US

Equation 5 in Olive's doc uses the letter "a" as the intercept, hence my confusion. If we start using two systems of symbols at the same time with no warning when switching from one to the other, I'm afraid it will be even harder for us to reach an understanding

OP

- Thread Starter

- #397

Gotcha. I do agree it’s odd: it is comparing the actual slope to the target slope, but if they match then the slope would be 0, which doesn’t make sense.

On a different note, in an effort to see how SM can be done away with, I normalized the PIR curves of two speakers and ran NBD on them, but the NBD scores were worse! The only explanation I can think of is that the raw PIR is flat in some regions (good) which forced the normalized PIR to have a slope in those regions (bad). However, I would have thought the overall change would be positive. I’ll have to try it out on a speaker with a really straight PIR.

I do wonder how on Earth Olive came up with the coefficients/constants that he did. A bunch of guess and check? It makes it very difficult for me to try to alter the formula to exclude SM.

I do wonder how on Earth did Olive come up with the coefficients/constants that he did, a bunch of guess and check? It makes it very difficult for me to try alter it to exclude SM.

What do you mean? It's well-explained in the paper that the weight of the variables was determined through Principal Component Analysis (PCA).

This is both a blessing and a curse, by the way: PCA will optimize the model for best correlation with the data, but it does not know what the data means and therefore cannot guarantee that the results make sense from a perceptual perspective. It's the whole "correlation vs. causality" thing.

As to the initial selection of the variables themselves (NBD, SM, etc.), that indeed seems arbitrary, but one has to start somewhere. (One could say the same thing, albeit to a lesser extent, about the selection of curves.)

Posting it here again because we've hijacked the Sony thread too much, but here are the digitized measurements for the 13 speakers used in Part 1 of the Olive tests.

The process isn't perfect, but I took care to make sure the graphs were as close as I could get them with no smoothing. Certainly should be within sample variance, anyway. A quick check showed speaker 2 scoring higher than speaker 1 using the Part 2 formula, but they are very close regardless. MZKM may want to double check, as REW exports a different number of data points than the Klippel and I had to tweak the sheet a little to get it to work.

Now, does anyone happen to have a copy of the August 2001 edition of Consumer Reports lying around their bathroom or so we can identify the speakers?

OP

- Thread Starter

- #400

I’ve done 1 & 2 so far. However, I’m not changing the data points from 1/96 to 1/24 or 1/20; that’s just too much work, so the scoring will be a little off (likely a bit worse).
