-

WANTED: Happy members who like to discuss audio and other topics related to our interest. Desire to learn and share knowledge of science required. There are many reviews of audio hardware and expert members to help answer your questions. Click here to have your audio equipment measured for free!

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Master Preference Ratings for Loudspeakers

- Thread starter MZKM

- Start date

AHA! I've got the list of Paper 1 speakers. Journalist skills coming in handy.

Here is the article from the August 2001 edition of Consumer Reports used to select the Paper 1 speakers:

Along with brief descriptions of each:

To save you the time reading, by matching the 23 bookshelf speakers above to Table 1 in the paper, we can isolate the ones used in the study.

Here they are as ranked by Consumer Reports. In brackets is their 'Accuracy score' based on CR's flat sound power model, as well as CR's "Bass Handling" score. In the article, CR says the overall ranking is mostly based on the accuracy score, with some weight given to " the ability to play bass notes very loud without distortion."

@amirm , in case you were curious.

P.S. I see a pair of Infinity IL10 for $79 a pair on eBay. Just sayin'

Here is the article from the August 2001 edition of Consumer Reports used to select the Paper 1 speakers:

Along with brief descriptions of each:

To save you the time reading, by matching the 23 bookshelf speakers above to Table 1 in the paper, we can isolate the ones used in the study.

Here they are as ranked by Consumer Reports. In brackets is their 'Accuracy score' based on CR's flat sound power model, as well as CR's "Bass Handling" score. In the article, CR says the overall ranking is mostly based on the accuracy score, with some weight given to " the ability to play bass notes very loud without distortion."

- Pioneer S-DF3-K [89/Excellent]

- Bose 301 Series IV [89/Very Good]

- Cambridge Soundworks Model Six [88/Very Good]

- BIC America Venturi DV72si [90/Good]

- Infinity Entra One [87/Excellent]

- JBL Northridge Series N28 [85/Excellent]

- Polk Audio RT15i [88/Good]

- Yamaha NS-A638 [83/Excellent]

- Bose 141 [89/Very Good]

- JBL Studio Series S26 [82/Very Good]

- Infinity Interlude IL10 [76/Excellent]

- Klipsch Synergy SB-3 Monitor [76/Excellent]

- KLH 911B [79/Good]

- Infinity Interlude IL10 [11]

- JBL Series S26 [10]

- Infinity Entra One [5]

- Pioneer S-DF3-K [1]

- JBL Northridge Series N28 [10]

- Klipsch Synergy SB-3 Monitor [12]

- Polk Audio RT15i [7]

- Cambridge Soundworks Model Six [3]

- BIC America Venturi DV62si [4]

- Bose 301 Series IV [2]

- Yamaha NS-A638 [8]

- Bose 141 [9]

- KLH 911B [13]

(Poor KLH can't catch a break)

@amirm , in case you were curious.

P.S. I see a pair of Infinity IL10 for $79 a pair on eBay. Just sayin'

Last edited:

- Joined

- Jun 19, 2018

- Messages

- 6,652

- Likes

- 9,403

AHA! I've got the list of Paper 1 speakers. Journalist skills coming in handy.

Genius!

- Joined

- Jun 19, 2018

- Messages

- 6,652

- Likes

- 9,403

I'm starting to wonder whether we should have two models: the original Olive model, for those who only trust the original double-blind peer-reviewed paper, don't like wild guesses, and/or are comparing speakers that are known to be similar to those in the Olive test (i.e. non-coaxial monopoles). And a separate "experimental" model that could be built from scratch based on what we know about perception of spinoramas (e.g. the importance of the DI curve, the relative unimportance of overall tilt), that might make more sense to use for non-standard speakers and would come with a fat warning that it is not directly backed by double-blind testing data and should therefore be taken with a huge grain of salt. The experimental model could be calibrated against the Olive model by aligning the scores for "standard" speakers. But even then, if we are asked to quantify how accurate that experimental model would be, our best answer would be ¯\_(ツ)_/¯

I think this is a great idea.

However, purporting to give an experimental "preference" model would be overstepping IMHO, given that we couldn't possibly derive it from valid preference data.

I would suggest instead that we simply give speakers a rating for each of:

- ON deviation from flat

- LFX

- PIR (or ER) deviation from line of best fit (defined mathematically such that it is independent of slope, in contrast to the Olive paper).

A nonlinear distortion rating could also be added, although IMHO this would not be possible on the basis of the limited distortion measurements Amir currently performs.

Finally, if we wanted to be a bit more experimental about it (which I think we should be), we could apply an equal-loudness based weighting to each metric.

In other words, a deviation from flat in the ON at say 15kHz would be penalised less harshly than a deviation at say 3kHz. And so forth...

This weighting could be derived from existing data from psychoacoustic research.

Last edited:

OP

- Thread Starter

- #408

I think this is a great idea.

However, purporting to give an experimental "preference" model would be overstepping IMHO, given that we couldn't possibly derive it from valid preference data.

I would suggest instead that we simply give speakers a rating for each of:

Optionally, additional sub-ratings could be given for HER and VER.

- ON deviation from flat

- LFX

- PIR (or ER) deviation from line of best fit (defined mathematically such that it is independent of slope, in contrast to the Olive paper).

A nonlinear distortion rating could also be added, although IMHO this would not be possible on the basis of the limited distortion measurements Amir currently performs.

Finally, if we wanted to be a bit more experimental about it (which I think we should be), we could apply equal-loudness based weightings to each metric.

In other words, a deviation from flat in the ON at say 15kHz would be penalised less harshly than a deviation at say 3kHz. And so forth...

This weighting could be derived from existing data from psychoacoustic research.

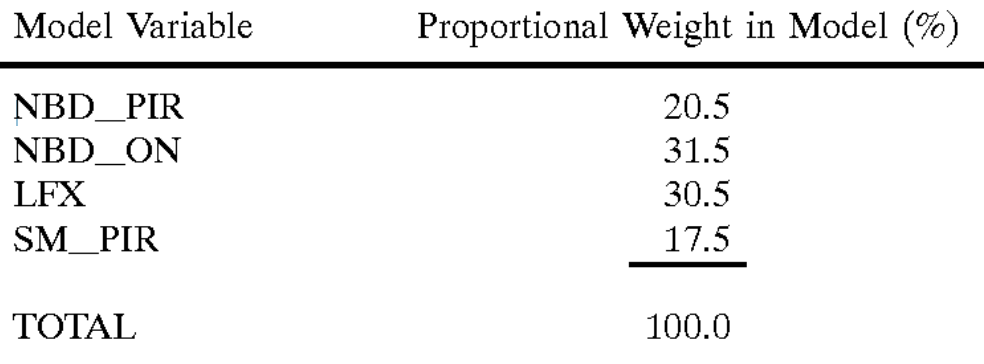

I was thinking about experimenting with seeing how just using the % weighting for each parameter would look like compared to the formula weighting. The score range may not be the same, but I would assume the ranking would stay the same; if so, I could then experiment with normalizing the PIR and running the NBD score just on that, and having that take the combined % that the original NBD_PIR & SM_PIR take up.

- Joined

- Jun 19, 2018

- Messages

- 6,652

- Likes

- 9,403

I was thinking about experimenting with seeing how just using the % weighting for each parameter would look like compared to the formula weighting. The score range may not be the same, but I would assume the ranking would stay the same; if so, I could then experiment with normalizing the PIR and running the NBD score just on that, and having that take the combined % that the original NBD_PIR & SM_PIR take up.

Normalising the PIR and running the NBD score on it sounds like a very elegant solution to me

I'm not sure I understood the first part about using the % weighting for each parameter as opposed to the formula weighting?

OP

- Thread Starter

- #410

The formula is this:Normalising the PIR and running the NBD score on it sounds like a very elegant solution to me

I'm not sure I understood the first part about using the % weighting for each parameter as opposed to the formula weighting?

the weighting is this:

You can’t just multiply the scores by these weights though (unless it’s a perfect score), as the numerical value wouldn‘t match, but I would assume the ranking would stay the same.

To give an example, you can curve a set of data, the numerical values will change, but the order won’t.

- Joined

- Jun 19, 2018

- Messages

- 6,652

- Likes

- 9,403

The formula is this:

View attachment 65702

the weighting is this:

You can’t just multiply the scores by these weights though (unless it’s a perfect score), as the numerical value wouldn‘t match, but I would assume the ranking would stay the same.

To give an example, you can curve a set of data, the numerical values will change, but the order won’t.

You know, this is something I'd never understood in the paper. If you add up the 4 coefficients in the formula, you get 12.11, not 12.69. I can't work out why this would be the case?

Anyway, if it were up to me, I wouldn't be trying to tweak the Olive model. I think it is what it is, and without the raw data, we can't know whether tweaks made to it would increase or decrease correlation with listener preference.

Instead, I'd simply build a new model that doesn't purport to be a preference model, basically along the lines I suggested in post #407. An "ASR Performance Rating", if you will

Last edited:

OP

- Thread Starter

- #412

The best score (to get a 10) for LFX isn’t a 1.0, that’s why.You know, this is something I'd never understood in the paper. If you add up the 4 coefficients in the formula, you get 12.11, not 12.69. I can't work out why this would be the case?

Anyway, if it were up to me, I wouldn't be trying to tweak the Olive model. I think it is what it is, and without the raw data, we can't know whether tweaks made to it would increase or decrease correlation with listener preference.

Instead, I'd simply build a new model that doesn't purport to be a preference model, basically along the lines I suggested in post #407.

Last edited:

I've attached 24 ppo

Have you applied 1/12 smoothing as warned by REW to avoid anti-aliasing before doing this export?

I think this is a great idea.

However, purporting to give an experimental "preference" model would be overstepping IMHO, given that we couldn't possibly derive it from valid preference data.

I would suggest instead that we simply give speakers a rating for each of:

- ON deviation from flat

- LFX

- PIR (or ER) deviation from line of best fit (defined mathematically such that it is independent of slope, in contrast to the Olive paper).

IMHO there are 2 paremeters with PIR which are important: one is average (squarreed?) deviation from best fit line and the other is slope of the best fit line, as if slope is too small or too high it would impact the overall tonal balance.

P.S. What is "ON"?

- Joined

- Jun 19, 2018

- Messages

- 6,652

- Likes

- 9,403

IMHO there are 2 paremeters with PIR which are important: one is average (squarreed?) deviation from best fit line and the other is slope of the best fit line, as if slope is too small or too high it would impact the overall tonal balance.

I agree. However, it's not established that any particular slope is superior (or likely to be most preferred). This is illustrated by the two Olive studies, where preferred slope proved to be dependent on the average slope of each sample.

Deviation, OTOH, is better established to correlate with listener preference.

Therefore, I think that deviation, but not slope, should be a rated parameter.

P.S. What is "ON"?

On-axis. I'm just borrowing the terminology from the Olive paper.

I agree. However, it's not established that any particular slope is superior (or likely to be most preferred). This is illustrated by the two Olive studies, where preferred slope proved to be dependent on the average slope of each sample.

Deviation, OTOH, is better established to correlate with listener preference.

Therefore, I think that deviation, but not slope, should be a rated parameter.

If we can agree that PIR with slope of 5 deg would intuitively sound "bright" and the one with slope of 45 deg would sound "dark" we can also agree that indicates there is an optimally sounding region of slopes somewhere in between.

For example, that is how I adjusted PIR of Sony speaker, I didn't only make it smoother but I also increased a slope a little to avoid too bright tonal balance.

On-axis. I'm just borrowing the terminology from the Olive paper.

Aha. In that case my vote would instead go in favor of using LW measured within +/-15 deg or similar.

OP

- Thread Starter

- #418

Keep in mind that EQing to obtain a slope is not the same as inherent slope, which is achieved based on the directivity of the loudspeaker, and of which no real consensus has been reached as to what is ideal.If we can agree that PIR with slope of 5 deg would intuitively sound "bright" and the one with slope of 45 deg would sound "dark" we can also agree that indicates there is an optimally sounding region of slopes somewhere in between.

For example, that is how I adjusted PIR of Sony speaker, I didn't only make it smoother but I also increased a slope a little to avoid too bright tonal balance.

Aha. In that case my vote would instead go in favor of using LW measured within +/-15 deg or similar.

Listening window includes +/-10° vertical which, if my quick calculation is correct, is +/-17in at 8ft away, which is too large of an arch in my opinion unless you are looking for speakers for use in a home theater with tiered seating. I also don’t fully like using LW for accounting for no toe-in, as even if a 30° difference is pretty accurate, LW is using a 60° arch for the horizontal, so for toe-in purposes it really should be like

=average(0°, average(+/-10°) , average(+/-20°) , average(+/-30°))

Last edited:

- Joined

- Feb 23, 2016

- Messages

- 20,706

- Likes

- 37,449

Yeah, that WebPlotDigitizer is a great tool to use. Pretty simple too.

Keep in mind that EQing to obtain a slope is not the same as inherent slope, which is achieved based on the directivity of the loudspeaker, and of which no real consensus has been reached as to what is ideal.

Sure, it definitely isn't. When doing EQ I'm eyeballing where the natural slope would be if I remove the non-linearities. I guess I'm trying to say that the more non-linearities exist in the 200-20khz region the greater are the chances for the least square line to miss the natural slope of the speaker.

Similar threads

- Replies

- 92

- Views

- 12K

- Replies

- 56

- Views

- 6K

- Replies

- 2

- Views

- 2K

- Replies

- 12

- Views

- 2K