Punter

Senior Member, joined Jul 19, 2022

Audio recording has come a long way since the first wax cylinders were cut in the late 1800s. The search for ever higher quality and more realistic recording and reproduction has driven numerous technologies forward. From LP records to the Compact Disc, engineers and designers have poured their talents into improving specifications and eliminating distortion in recorded audio. There is no doubt that modern forms of digital recording and reproduction offer a solid improvement over previous technologies, despite the moaning of various factions who, consumed with nostalgia, claim that vinyl LPs are still superior to any other form of recorded music. These individuals are deaf to any technical criticism or to glaring examples of the format's limitations. However, as time goes by, they are being coaxed into the present. The superiority of digital over analogue is beyond dispute, but just like the CD, digital music, and more importantly streamed digital music, offers a convenience unmatched by any other format. So the Luddites are cautiously accepting this technology and taking it up.

Here on ASR we are well aware of the “Audiophool”: an individual caught up in the “High-End” audio pseudoscience that pervades the HiFi space. For decades, the vendors and manufacturers who populate the High-End have relied heavily on the deficiencies of recorded audio to push their products. Without problems, you can’t have solutions. We have all witnessed some of those solutions, from crystals to magic wooden blocks to directional wire, and on and on it goes. Digitally recorded and reproduced music causes a problem in this ecosystem, because digital recording was designed to be superior to every technology that preceded it. The early systems had some restrictions imposed by the technology then available, but even so, the Red Book standard's two-channel, 16-bit PCM encoding at 44.1 kHz per channel delivered audio bandwidth and dynamic range unmatched by any analogue format. Other common distortions were banished as well: hiss, disc surface noise, wow and flutter, all gone.
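To put numbers on the Red Book claim, here is a small Python sketch of the arithmetic. It uses only the parameters stated above and the textbook 20·log10(2^N) figure for the dynamic range of N-bit linear PCM; it ignores dither and noise shaping, so treat the result as the theoretical baseline rather than a measurement.

```python
import math

# Red Book CD audio parameters, as stated in the text
SAMPLE_RATE_HZ = 44_100   # samples per second, per channel
BIT_DEPTH = 16            # bits per sample
CHANNELS = 2              # stereo

# Raw PCM bit rate: every sample of every channel, every second
bit_rate = SAMPLE_RATE_HZ * BIT_DEPTH * CHANNELS

# Nyquist limit: the highest frequency this sample rate can represent
nyquist_hz = SAMPLE_RATE_HZ / 2

# Theoretical dynamic range of N-bit linear PCM: ratio of the largest
# to the smallest representable step, in decibels (no dither assumed)
dynamic_range_db = 20 * math.log10(2 ** BIT_DEPTH)

print(f"Bit rate:      {bit_rate:,} bit/s")
print(f"Nyquist limit: {nyquist_hz:,.0f} Hz")
print(f"Dynamic range: {dynamic_range_db:.1f} dB")
```

Run as-is, it reports 1,411,200 bit/s, a 22,050 Hz Nyquist limit (comfortably above the roughly 20 kHz upper limit of human hearing) and about 96 dB of dynamic range.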

How can you make money out of tweaks when there’s nothing to tweak? We all know the answer to that question: you simply invent problems and then present the solutions! The CD “treatments” that began to appear are a case in point. Magic pens to mark the edge of the disc, because the music was supposedly being compromised by “internal reflections”, were utter balderdash, but many fell for them. Demagnetising CDs, for God’s sake! I had a go at debunking this one for the denizens of the Stereophile forums many years ago. Even though I could show two files, one ripped from the disc before demagnetisation and the other afterwards, that were identical, the Audiophools wouldn’t concede an inch. An equipment manufacturer had told them that demagnetising worked, a few reviewers had backed that up with their opinions, and that was that. What this illustrates is that the “snake oil” vendors and their cheerleaders latched onto the new technology as a “green field” ripe for plunder. So it’s no surprise that we now find ourselves assailed by new manufactured problems connected with digital music streaming.
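The demagnetisation test is easy for anyone to repeat. The sketch below compares two rips by size and SHA-256 digest using only Python's standard library. It assumes both files were ripped with the same ripper and settings, so that any difference could only come from the disc data; the script name and file names in the usage message are hypothetical.

```python
import hashlib
import sys
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def identical(a: Path, b: Path) -> bool:
    """True if both files have the same size and the same SHA-256 digest."""
    return a.stat().st_size == b.stat().st_size and sha256_of(a) == sha256_of(b)


if __name__ == "__main__":
    if len(sys.argv) == 3:
        before, after = Path(sys.argv[1]), Path(sys.argv[2])
        print("bit-identical" if identical(before, after) else "files differ")
    else:
        print("usage: compare_rips.py BEFORE.wav AFTER.wav")
```

If the digests match, the two rips are bit-for-bit identical, and no treatment applied in between can have changed the music.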

The streaming and playback of digital music is now well established, and even the most ardent CD and turntable fans have been seduced by its convenience and very obvious quality as a source of recorded music. One device that has been thoroughly whacked with the “High-End” stick is the DAC (Digital to Analog Converter). Even though the DAC chips inside the equipment are manufactured by only half a dozen companies, it’s a fertile field for upselling and price inflation based on perceived value. There's no question that DAC manufacturers have taken up the challenge of designing equipment that extracts optimal analogue sound from the available chips, and there are accepted quality differences between budget and higher-spec units. This is no different from any other component in a sound system. As always, it’s up to the buyer to decide whether they’re getting value for money. To a large extent, the DAC market has now fully shaken out. External DACs range from units like the Schiit Modi 3 at $100 to a slew of ridiculously over-packaged “High-End” units with prices in the stratosphere, so the market is fully populated. This being the case, it’s obvious where the snake oil end of things goes next: network equipment.

In a previous post, “The Truth About Music Streaming”, I outlined the basic flow of music streaming with some details regarding network protocols and codecs. Recently, however, a couple of the “golden ear” brigade have been holding forth on the interwebs about how devices like network switches “sound different”. This is how it starts: the blind leading the blind, and a pack of foxes pricking up their ears as a new potential market reveals itself. The next thing we see is the high-end network switch and the HiFi router being championed by the usual promoters. Groan!

The antidote to this should be facts and realities, but as we know, the Audiophool is immune to both. Regardless, we here on ASR deserve the truth, and knowledge is power, so I am going to spell out the digital streaming audio path from the server to the speaker as best I can, with enough detail that we can move into the future with some understanding. Computer networking is not voodoo or black magic; it’s a robust mechanism, developed by brilliant minds and put to the test every day moving vast quantities of data around the globe. It is a system unfazed by transmitting a footling little audio stream from a server in Sweden to your DAC in the USA, Iceland or Tasmania.

Computer Networking for Audio 101

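Looked at in its simplest form, the network chain involved in playing audio has a relatively straightforward structure:

- A digital audio file on a storage device

- A PC or NAS (Network Attached Storage) to act as a server

- An Ethernet adapter or NIC (Network Interface Card)

- A network hub or switch

- A network router

- A suitable LAN (Local Area Network) or WAN (Wide Area Network)

- A mirror of the serving network (router, switch, NIC) at the listener's end

- A client device requesting the file

- A DAC (Digital to Analogue Converter) to make listenable audio from the digital file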

The Streaming Service

The job of the streaming service is quite simple on the surface. First, store the music files, second, make a searchable catalogue of the files and third, allow the client to access the files. The reality is very different and involves a vast array of equipment and software. So as not to overwhelm the reader, I’ll break the process down with a minimum of jargon and without reference to all of the software that works in concert to produce a song on your device of choice.

As a service, Spotify needs to fulfil the following user requirements:

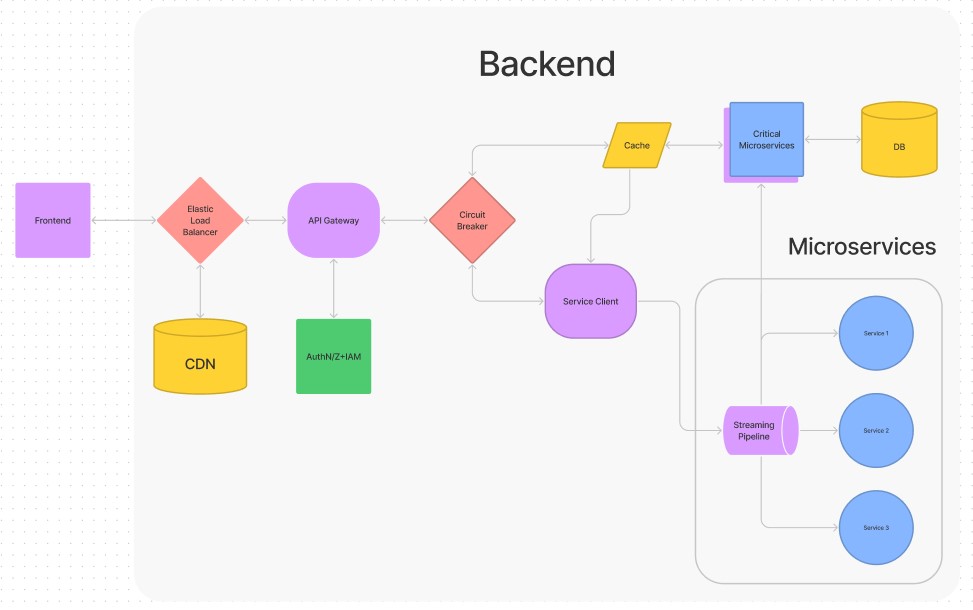

The traditional way of building a service like this is the “Monolithic” approach, in which the service has two main components, the “Frontend” and the “Backend”. The Frontend, also referred to as the “Presentation Layer”, implements the application's UI elements and client-side API requests; it is what the client sees and interacts with. The Backend consists of the Controller Layer (where all software integrations through HTTP or other communication methods happen), the Service Layer (which holds the application's business logic) and the Database Access Layer (where all of the application's database accesses, both SQL and NoSQL, happen). This keeps the software simple, because all communication is between two parties: a frontend (client) talking to a backend (server).
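A minimal Python sketch of the layered monolith described above may help. The class names, the made-up three-track catalogue and the dict standing in for an HTTP request are all hypothetical illustrations, not Spotify's code; the point is only to show how the Controller, Service and Database Access layers call one another inside a single program.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    track_id: int
    title: str
    artist: str


class CatalogueRepository:
    """Database Access Layer: the only code that touches storage.
    An in-memory list stands in for a real SQL/NoSQL database."""

    def __init__(self, tracks: list[Track]):
        self._tracks = tracks

    def all_tracks(self) -> list[Track]:
        return list(self._tracks)


class SearchService:
    """Service Layer: business logic (here, a case-insensitive search)."""

    def __init__(self, repo: CatalogueRepository):
        self._repo = repo

    def search(self, query: str) -> list[Track]:
        q = query.lower()
        return [t for t in self._repo.all_tracks()
                if q in t.title.lower() or q in t.artist.lower()]


class SearchController:
    """Controller Layer: turns an incoming request (a dict standing in
    for an HTTP request) into a response the frontend can render."""

    def __init__(self, service: SearchService):
        self._service = service

    def handle(self, request: dict) -> dict:
        results = self._service.search(request.get("q", ""))
        return {"status": 200,
                "results": [{"id": t.track_id, "title": t.title, "artist": t.artist}
                            for t in results]}


# All three layers live in one process: that is what makes it a monolith.
repo = CatalogueRepository([
    Track(1, "So What", "Miles Davis"),
    Track(2, "Blue in Green", "Miles Davis"),
    Track(3, "Take Five", "Dave Brubeck Quartet"),
])
app = SearchController(SearchService(repo))
print(app.handle({"q": "miles"}))
```

In a microservices design, each of these pieces (and many more) would instead run as a separate service, talking to the others over the network.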

The problem with this model is that it doesn’t scale easily. Consequently, Spotify (and other subscription services) have moved to a “Microservices” model. Microservices evolved from the monolithic architecture: instead of defining software as a single executable unit, it divides the software into multiple executable units that interoperate with one another. Rather than one complex client and one complex server communicating with each other, microservices split clients and servers into smaller units, with many simple clients communicating with many simple servers.

One of the prime directives of the service, however, is low latency, and all of its internal workings are geared towards it. Consequently, a key feature of the client-side interaction is the “front loading” of files: as soon as you choose a playlist, album or song, the service starts downloading it to your device. For example, if you have a playlist loaded on your device and you lose the network connection, you will probably still be able to play five or six songs. So in truth, services like Spotify work by caching files on your device rather than by feeding it a few seconds of buffered stream. The upshot is that your device is not living hand to mouth on a small buffer, and many of the issues caused by a slow or intermittent connection are nullified.
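The front-loading behaviour can be illustrated with a toy Python model. This is not Spotify's actual client logic; the `Player` class and its `prefetch_ahead` setting are hypothetical, with five tracks ahead chosen to match the five or six songs mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class Player:
    """Toy model of a client that caches upcoming tracks ahead of playback.

    `prefetch_ahead` is a hypothetical setting: how many tracks after the
    current one the client tries to keep fully downloaded.
    """
    queue: list[str]
    prefetch_ahead: int = 5
    cache: set[str] = field(default_factory=set)
    online: bool = True

    def refill_cache(self, current: int) -> None:
        """While online, download the current track plus the next few."""
        if self.online:
            self.cache.update(self.queue[current:current + 1 + self.prefetch_ahead])

    def playable_offline(self, current: int) -> int:
        """Count consecutive tracks, starting at `current`, already in the cache."""
        count = 0
        for track in self.queue[current:]:
            if track not in self.cache:
                break
            count += 1
        return count


player = Player(queue=[f"track-{i}" for i in range(12)])
player.refill_cache(current=0)   # connection is up: cache fills
player.online = False            # the network drops out
print(player.playable_offline(current=0))   # 6
```

Once the cache is filled, playback no longer depends on the network at all until the cached tracks run out.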

This fact is thoroughly inconvenient to the snake oil brigade, however, because it removes a slew of invented problems supposedly “caused” by the network equipment between you and the service. So let’s explore the mechanics in more detail. This understanding should equip us sceptics with the knowledge we need to see through the spurious claims of audiophools and dishonest equipment manufacturers.

Ethernet

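Without Ethernet, the internet as we know it would not exist. Ethernet is the backbone of almost all wired computer networking globally, a system developed by brilliant minds to be robust and scalable. To understand Ethernet, one must first delve into the OSI model. OSI stands for Open Systems Interconnection; the model describes seven layers that computer systems use to communicate over a network, assigning each networking function to a layer with defined responsibilities so that products and software from different vendors can interoperate. While the modern internet is based on a simpler model called TCP/IP rather than on OSI, the OSI seven-layer model is still widely used to describe network architecture:

- Layer 7, Application: the human-computer interaction layer, where applications access network services

- Layer 6, Presentation: ensures that data is in a usable format; this is where data encryption occurs

- Layer 5, Session: opens, maintains and closes the sessions between applications

- Layer 4, Transport: transmits data using transport protocols such as TCP and UDP

- Layer 3, Network: routes packets between networks using logical (IP) addresses

- Layer 2, Data Link: packages data into frames for the local link, addressed by MAC address and checked for errors

- Layer 1, Physical: transmits the raw bit stream over the physical medium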

Frames vs Packets

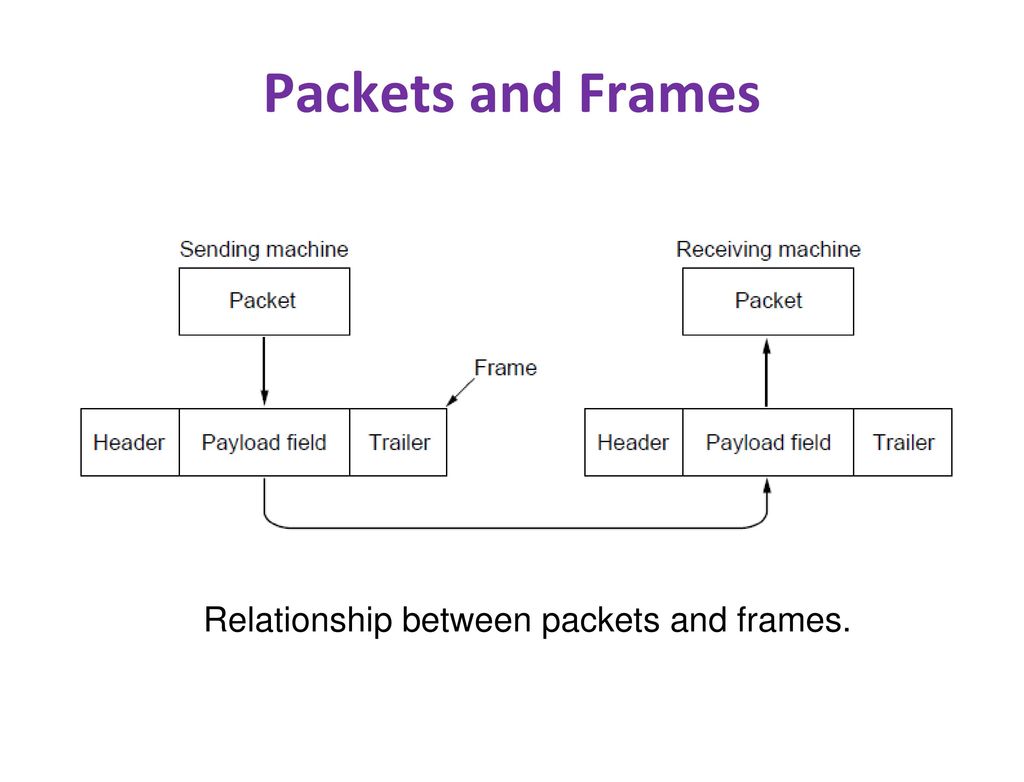

One of the important details to understand about Ethernet is the distinction between frames and packets. A frame is the layer 2 (Data Link) unit that carries data across a single local link, such as a LAN segment. It includes header and trailer information in addition to the payload being transmitted: the header contains the source and destination MAC addresses, while the trailer carries error-checking data in the form of a cyclic redundancy check (CRC). A packet, on the other hand, is the layer 3 (Network) unit that travels end to end across networks, including WANs and the Internet. It includes header information such as the source and destination IP addresses and protocol-specific information, as well as the payload. A packet never travels on its own: on each link it is carried inside the payload of a frame, and each router strips off the old frame and wraps the packet in a new one for the next hop.

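A standard Ethernet II frame typically has the following structure:

- Preamble: a 7-byte alternating bit pattern that lets the receiver synchronise its clock with the incoming bit stream.

- Start of Frame Delimiter (SFD): a 1-byte sequence that marks the beginning of the frame.

- Destination MAC address: a 6-byte field containing the MAC address of the intended recipient.

- Source MAC address: a 6-byte field containing the MAC address of the sender.

- Type/Length field: a 2-byte field. A value of 1536 (0x0600) or more identifies the protocol carried in the payload (the EtherType, e.g. 0x0800 for IPv4); a value of 1500 or less gives the length of the payload in bytes.

- Payload: the actual data being transmitted, from 46 to 1500 bytes (shorter payloads are padded to 46 bytes).

- Frame Check Sequence (FCS): a 4-byte cyclic redundancy check (CRC-32) value used to detect errors in transmission.

An IPv4 packet, which rides inside the frame's payload, has a similar but separate structure:

- Header: contains various fields such as:

  - Source IP address: a 4-byte field containing the IP address of the sender.

  - Destination IP address: a 4-byte field containing the IP address of the intended recipient.

  - Protocol: a 1-byte field indicating the higher-level protocol being carried, such as TCP or UDP.

  - Time to Live (TTL): an 8-bit field giving the maximum number of hops the packet can make before it is discarded.

  - Header checksum: a 16-bit checksum covering the header only.

  - Options (if present): fields that carry additional information about the packet.

- Payload: the actual data being transmitted. The 16-bit Total Length field allows a packet of up to 65,535 bytes including the header, although on a standard Ethernet link a packet must fit within the 1500-byte frame payload.

- Trailer: unlike a frame, an IPv4 packet has no trailer and no CRC. Error detection for the data is left to the frame's FCS on each link and to the TCP or UDP checksum end to end.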

In short, frames handle delivery within a LAN, one link at a time, while packets handle end-to-end delivery across WANs and the Internet.
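To show what the FCS actually buys us, here is a Python sketch that assembles an Ethernet II frame and checks it the way a receiver would. It uses `zlib.crc32`, which computes the same CRC-32 as the Ethernet FCS; real network cards do this in hardware, and the MAC addresses and payload here are made up (0x0800 is the EtherType for IPv4).

```python
import struct
import zlib

MIN_PAYLOAD = 46    # shorter payloads are padded up to this size
MAX_PAYLOAD = 1500  # standard Ethernet payload limit (MTU)


def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes) -> bytes:
    """Assemble an Ethernet II frame (preamble/SFD omitted) and append its FCS."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses are 6 bytes")
    if len(payload) > MAX_PAYLOAD:
        raise ValueError("payload exceeds 1500 bytes")
    payload = payload.ljust(MIN_PAYLOAD, b"\x00")          # pad short payloads
    body = dst + src + struct.pack("!H", ethertype) + payload
    fcs = zlib.crc32(body) & 0xFFFFFFFF                     # same CRC-32 as Ethernet
    return body + struct.pack("<I", fcs)                    # FCS is sent low byte first


def fcs_ok(frame: bytes) -> bool:
    """Receiver side: recompute the CRC-32 over the frame and compare with the FCS."""
    body, fcs = frame[:-4], frame[-4:]
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF) == fcs


frame = build_frame(bytes.fromhex("ffffffffffff"), bytes.fromhex("020000000001"),
                    0x0800, b"a few bytes of audio data")
print(len(frame), fcs_ok(frame))        # 64 True

damaged = bytearray(frame)
damaged[20] ^= 0x01                     # flip a single bit in the payload
print(fcs_ok(bytes(damaged)))           # False
```

A single flipped bit is enough to make the check fail, and a frame that fails is simply discarded, never handed on as altered music data.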

Just as an aside, every Ethernet-capable device needs a unique MAC address. The IEEE (Institute of Electrical and Electronics Engineers) administers these addresses by allocating manufacturer prefixes. Unlike IPv4 (layer 3) addresses, which are running low, the 48 bits that make up a MAC address allow some 281 trillion possibilities. That’s roughly 35,000 for every living human. Even if they do start running out, mechanisms are already in place to extend the system to 64 bits.
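The address-space arithmetic is easy to check. This short Python sketch assumes a world population of about 8 billion; `format_mac` is just a helper for this example, showing that 48 bits is six bytes, written as twelve hexadecimal digits.

```python
MAC_BITS = 48
EUI64_BITS = 64
WORLD_POPULATION = 8_000_000_000   # assumption: roughly 8 billion people


def format_mac(value: int) -> str:
    """Render a 48-bit integer as six colon-separated hex bytes (12 hex digits)."""
    return ":".join(f"{b:02x}" for b in value.to_bytes(6, "big"))


mac_space = 2 ** MAC_BITS
per_person = mac_space / WORLD_POPULATION

print(f"48-bit MAC addresses: {mac_space:,}")
print(f"Per person:           {per_person:,.0f}")
print(f"64-bit identifiers:   {2 ** EUI64_BITS:,}")
print(f"Highest MAC address:  {format_mac(mac_space - 1)}")
```

It prints 281,474,976,710,656 possible addresses, or roughly 35,000 per person.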

Error Correction and Jitter

If there’s one element of Ethernet that the Audiophools like to pounce on, it’s this one. As I stated before, Ethernet was designed by brilliant minds to be robust and scalable. It would be almost impossible to count the number of frames and packets successfully transmitted over Ethernet networks every day. I emphasise the word “successfully” because the error handling built into the system is very efficient and reliable: every frame carries a CRC, so the receiver detects a corrupted frame and discards it rather than passing bad data on, and higher layers such as TCP then retransmit whatever was lost. Desperate for a source of problems, the Audiophools and snake oil salesmen have put errors and jitter near the top of their list of boogeymen to scare the living daylights out of the golden-ear brigade.

So what is “jitter”? In a network context, jitter refers to variations in the arrival time of packets of audio data over a network connection. Each packet takes some time to cross the network; jitter is how much that transit time varies from one packet to the next, so that packets turn up earlier or later than their regular spacing would predict. In a real-time audio streaming scenario, unmanaged jitter can cause noticeable problems such as audio distortions, skips or delays, because the timing of the audio samples is critical to the quality of the audio stream: if a packet arrives too late, playback has nothing to play. Jitter can be caused by a variety of factors, such as network congestion, routing changes, unequal processing times at intermediate routers, or queuing delays. To mitigate its effects, most audio streaming protocols employ jitter buffering, which lets the receiving end temporarily store incoming packets and play them back at a constant rate. As long as the buffer never runs dry, it smooths out the variations in packet arrival time completely, and they have no effect on the quality of the audio stream.
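Network jitter can be measured rather than argued about. The sketch below follows the running estimator defined for RTP in RFC 3550: for each pair of consecutive packets it takes the change in transit time and moves the estimate 1/16 of the way towards it. RFC 3550 works in RTP timestamp units; this version uses milliseconds, and the packet timings are invented for illustration.

```python
def interarrival_jitter(send_ms: list[float], recv_ms: list[float]) -> float:
    """Running jitter estimate in the style of RFC 3550 (RTP).

    For each pair of consecutive packets, D is the change in transit time
    (receive time minus send time). The estimate J moves 1/16 of the way
    towards |D| on every packet: J += (|D| - J) / 16.
    """
    jitter = 0.0
    for i in range(1, len(send_ms)):
        transit_prev = recv_ms[i - 1] - send_ms[i - 1]
        transit_now = recv_ms[i] - send_ms[i]
        d = transit_now - transit_prev
        jitter += (abs(d) - jitter) / 16
    return jitter


send = [i * 20 for i in range(8)]                      # one packet every 20 ms
steady = [t + 50 for t in send]                        # constant 50 ms delay
wobbly = [t + 50 + (4 if i % 2 else 0)                 # delay alternates 50/54 ms
          for i, t in enumerate(send)]

print(interarrival_jitter(send, steady))   # 0.0: a fixed delay is not jitter
print(round(interarrival_jitter(send, wobbly), 2))   # 1.45
```

Note that a long but constant delay produces zero jitter; only the variation counts, and that variation is what a receive buffer absorbs.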

The fact is that for lost, late or corrupted packets or frames to have any audible effect, they have to accumulate to the point where the jitter buffer, which stores the packets before they are clocked out, runs dry. As I pointed out earlier, streaming services actively “front load” the client with data so that smooth, error-free and low-latency playback can occur. Indeed, some audio applications, like JRiver, don’t start playing until they have downloaded the entire file from wherever it’s stored.
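Why the buffer matters can be shown with a small simulation. In this Python sketch, packets each carrying 10 ms of audio are sent at a steady rate, the network adds a random delay between 20 and 80 ms (an assumed figure, far worse than a typical home LAN), and playback starts after a chosen amount of prebuffering. The function and parameter names are hypothetical, and the model is simplified: it counts packets that miss their playout slot rather than modelling a player restarting after an underrun.

```python
import random


def count_late_packets(arrivals_ms: list[float], packet_ms: float, prebuffer_ms: float) -> int:
    """Count packets that arrive after the player needs them.

    Playback starts `prebuffer_ms` after the first packet arrives, then takes
    one packet every `packet_ms` from the buffer, paced by the player's own
    clock. Packets are slotted in by sequence number, so arriving early or
    out of order is harmless; a packet only matters if it misses its slot,
    which is exactly the moment the buffer runs dry.
    """
    start = arrivals_ms[0] + prebuffer_ms
    late = 0
    for seq, arrival in enumerate(arrivals_ms):
        deadline = start + seq * packet_ms
        if arrival > deadline:
            late += 1
    return late


random.seed(1)
PACKET_MS = 10.0
# Packet n is sent at n * 10 ms; the network adds 20-80 ms of random delay.
arrivals = [n * PACKET_MS + random.uniform(20, 80) for n in range(10_000)]

for prebuffer in (0, 30, 100, 2000):
    late = count_late_packets(arrivals, PACKET_MS, prebuffer)
    print(f"prebuffer {prebuffer:>4} ms -> late packets: {late}")
```

With 100 ms or more of prebuffer, no packet is ever late in this model, because the worst-case extra delay (60 ms) is smaller than the buffer. Front loading whole tracks, as streaming services do, is the same idea taken much further.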

So a range of mitigations is employed, and provided the buffer has sufficient data stored in it, network jitter is eliminated. Network switches do not have a jitter buffer. Jitter buffering is implemented in the software or hardware at the receiving end of a network connection, such as a computer or a network audio player. Ultimately, switches are hardware devices responsible for forwarding frames based on their destination MAC address. They do hold frames briefly in small queues (a store-and-forward switch receives each whole frame and checks its FCS before sending it on), but they do not interpret the audio inside the frames, they do not change it, and they have no say over when the DAC plays it: a frame is either forwarded intact or dropped. The primary function of a switch is to forward frames as quickly as possible and to minimise network congestion by managing the flow of data. So to claim that a network switch can have a perceptible effect on the quality of a stream of frames or packets containing digital music data is preposterous.
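Here is a toy model of what a switch actually does, in Python. Real switches do this in hardware and also queue frames briefly; this sketch models only the learning and forwarding decision, and the class, field and MAC values are hypothetical. Note that the forwarding decision reads only the MAC addresses: the payload is handed on as the very same bytes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    dst: str        # destination MAC address
    src: str        # source MAC address
    payload: bytes  # opaque to the switch


class LearningSwitch:
    """Toy model of a learning Ethernet switch.

    It learns which port each source MAC address lives on, then forwards
    frames by destination MAC. The frame object is passed on unchanged:
    nothing here reads or rewrites the payload.
    """

    BROADCAST = "ff:ff:ff:ff:ff:ff"

    def __init__(self, ports: int):
        self.ports = range(ports)
        self.mac_table: dict[str, int] = {}

    def receive(self, frame: Frame, in_port: int) -> list[tuple[int, Frame]]:
        self.mac_table[frame.src] = in_port            # learn where the sender is
        out_port = self.mac_table.get(frame.dst)
        if frame.dst == self.BROADCAST or out_port is None:
            # Unknown or broadcast destination: flood to every other port.
            return [(p, frame) for p in self.ports if p != in_port]
        if out_port == in_port:
            return []                                   # already on that segment
        return [(out_port, frame)]


sw = LearningSwitch(ports=4)
streamer, nas = "aa:aa:aa:aa:aa:01", "aa:aa:aa:aa:aa:02"

# The streamer (port 1) asks the NAS for a file; the NAS is not known yet.
flooded = sw.receive(Frame(dst=nas, src=streamer, payload=b"GET track"), in_port=1)
print([port for port, _ in flooded])            # [0, 2, 3]

# The NAS (port 2) replies with audio data; now the path is known.
reply = Frame(dst=streamer, src=nas, payload=b"\x00\x01\x02 audio bytes")
out = sw.receive(reply, in_port=2)
print(out[0][0], out[0][1].payload is reply.payload)   # 1 True
```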

Network Timing

Recently, in the “Extreme Snake Oil” thread, a member posted a YouTube video of a gentleman named Hans Beekhuyzen, who put forward a set of ideas meant to convince the viewer that internal clocks and network timing could cause some sort of distortion in the waveform decoded by the DAC. His explanation of how this effect occurs merely displays his lack of understanding rather than some amazing revelation. Time on a network is kept by synchronising devices using one of the standard timing protocols available to Ethernet devices. The most common is NTP (Network Time Protocol); Precision Time Protocol (PTP) and Synchronous Ethernet (SyncE) are used where highly accurate timing is needed. An average home router/modem uses NTP, which is widely used to synchronise the clocks of network devices and provides an accurate, reliable way to keep time on the network. The router/modem periodically queries one or more NTP servers on the internet to determine the correct time and adjusts its internal clock accordingly. This ensures that the device's clock is accurate and that it can correctly timestamp network traffic. None of this touches the audio itself: once the music data is in the player's buffer, the samples are clocked out to the DAC by the playback hardware's own local clock, so network timing never reaches the analogue waveform.
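For the curious, this is the calculation at the heart of an NTP exchange, following the offset and delay formulas in the NTP specification (RFC 5905). The timestamps are invented for illustration. Note what it produces: a correction for the device's time-of-day clock. It plays no part in clocking audio samples.

```python
def ntp_offset_and_delay(t0: float, t1: float, t2: float, t3: float) -> tuple[float, float]:
    """Clock offset and round-trip delay from one NTP request/response.

    t0: client sends the request   (client clock)
    t1: server receives it         (server clock)
    t2: server sends the reply     (server clock)
    t3: client receives the reply  (client clock)
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2   # positive: client clock is behind the server
    delay = (t3 - t0) - (t2 - t1)          # network time, excluding server processing
    return offset, delay


# Invented example: the client clock runs 0.5 s behind the server, each
# direction takes 20 ms, and the server takes 1 ms to answer.
offset, delay = ntp_offset_and_delay(t0=100.000, t1=100.520, t2=100.521, t3=100.041)
print(f"offset {offset * 1000:.1f} ms, round-trip delay {delay * 1000:.1f} ms")
```

It reports an offset of 500.0 ms and a round-trip delay of 40.0 ms, which the client would then use to nudge its clock of day.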

Routers

I notice nobody has seriously proposed a HiFi router yet, but there are plenty of dreamers out there who claim that the router makes a difference to the sound of a music file. This is just as spurious as the claims made for network switches. The purpose of a router is to forward packets from one network to another. A router operates at the network layer (layer 3) of the OSI model and determines the best path for each packet based on its destination IP address. It acts as the gateway for communication over a WAN, which we generally call the internet. Like a switch, a router only queues packets briefly; it reads and updates header fields (decrementing the TTL and, on a home router, rewriting addresses and ports for NAT), then wraps each packet in a new layer 2 frame for the next link. The payload, the music data itself, passes through untouched.
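A router's forwarding decision can be sketched in a few lines of Python using the standard `ipaddress` module. The routing table is hypothetical, and the sketch leaves out NAT and checksum updates; it shows longest-prefix matching on the destination IP address and the TTL decrement, and nothing in it reads the payload.

```python
import ipaddress

# Hypothetical home-router routing table: (destination network, where to send it)
ROUTES = [
    (ipaddress.ip_network("192.168.1.0/24"), "LAN"),
    (ipaddress.ip_network("10.0.0.0/8"), "VPN"),
    (ipaddress.ip_network("0.0.0.0/0"), "WAN (ISP gateway)"),   # default route
]


def route(dst: str, ttl: int) -> tuple[str, int]:
    """Choose the most specific matching route and decrement the TTL.

    Only header fields (destination address, TTL) are consulted or changed;
    the payload is never looked at.
    """
    if ttl <= 1:
        raise ValueError("TTL expired: packet dropped")
    addr = ipaddress.ip_address(dst)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    _, hop = max(matches, key=lambda m: m[0].prefixlen)   # longest prefix wins
    return hop, ttl - 1


print(route("192.168.1.20", ttl=64))   # ('LAN', 63)
print(route("203.0.113.7", ttl=64))    # ('WAN (ISP gateway)', 63)
```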

DACs

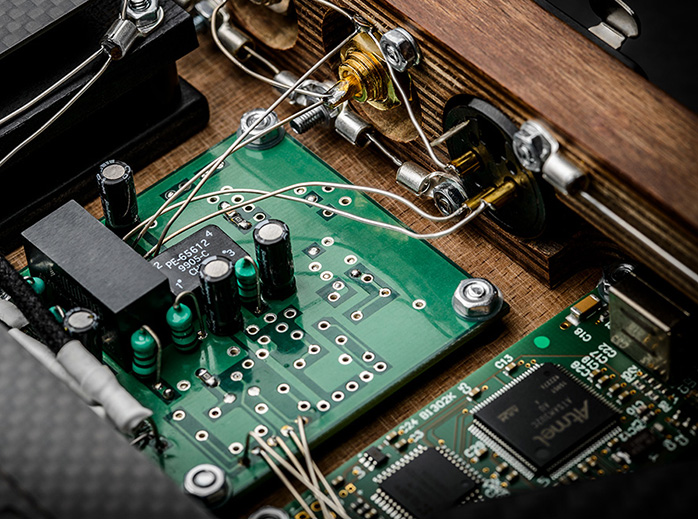

The DAC represents the best possible target for a whack with the “High End” stick. I went into the subject of DACs in my earlier music streaming post and pointed out that only a handful of manufacturers make quality DAC chips. Texas Instruments, ESS Technology, Cirrus Logic and AKM Semiconductor are among them, and some would consider their parts near the top of the quality currently available. Of course, a DAC chip is only one part, and numerous support circuits and devices are needed to create a functional HiFi component. However, as can be observed in many cases in this era, the manufacturers don’t necessarily make everything inside the case. Often it’s the case itself that has had the “High End” treatment, while the internals are generic modules. This is true of amplifiers and DACs alike. In the case of the “Less Loss” DAC featured in another thread, the makers show some very janky-looking internals, which are obviously not designed or manufactured by the brand company. Given this type of activity, I’m fully prepared to believe that there would be differences in sound quality from one DAC to another, however subtle.

Cables

OHHH PUHLEEEZZE! I know it’s just become an extension of the analogue cable miasma, but it’s even more ridiculous (if that’s possible!). Ethernet works effectively over standard, unshielded CAT5 cable for runs of up to 100 m. Yes, that is one hundred metres, with no intermediate equipment, boosters or whatever. The data will be available at the end of that 100 m cable, reliably and without serious error. USB is more limited in distance, to around 5 m for USB 2.0, but within that limit the data will arrive intact and largely error-free. To claim that any digital network connection can somehow alter the contents of the frames or packets being transmitted over it is beyond ridiculous. No amount of pseudoscience, phase, RFI, EMI, Gophers or magic spells can make it true.
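Even a noisy cable is a non-event for the data. This Python sketch works out how many bit errors to expect in an hour of CD-quality audio, assuming a bit error rate of 1 in 10^10; that figure is an assumption chosen to be conservative, and frame and protocol overhead are ignored.

```python
SAMPLE_RATE = 44_100   # Red Book samples per second, per channel
BITS = 16
CHANNELS = 2
BER = 1e-10            # assumed bit error rate, deliberately conservative
FRAME_PAYLOAD_BITS = 1500 * 8

bits_per_hour = SAMPLE_RATE * BITS * CHANNELS * 3600
expected_bit_errors = bits_per_hour * BER
frames_per_hour = bits_per_hour / FRAME_PAYLOAD_BITS

print(f"One hour of CD audio: {bits_per_hour:,} bits in ~{frames_per_hour:,.0f} full frames")
print(f"Expected bit errors per hour at BER {BER:g}: {expected_bit_errors:.2f}")
```

The answer is about half a bit error per hour. Each such error makes one frame fail its FCS check, so it is discarded and, over TCP, retransmitted long before the buffer could run dry.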

Conclusion

Ethernet is a demonstrably effective method of networking computers and other devices. It contains within its structure robust methods of error detection, backed by retransmission at higher layers, that have only become more effective as network speeds have increased. Network timing is achieved by standardised protocols and is largely automatic. Music streaming services make it their business to cache or front load content on the client device to ensure low latency. Data/jitter buffering is automatic and, as network speeds have increased, has also become more effective. Lost, late or corrupt packets or frames can be resent before the buffer runs out and processed in the order they were intended. No cable carrying a digital signal can influence the content of the data unless there are gross deficiencies in the cable or its environment, and even then the outcome will be lost, late or corrupt packets that only become noticeable once the buffer has been depleted. Let’s be clear: the reason that routers, switches and cables do not change the contents of the frames and packets they transmit is that if they did, the whole network system would be utterly useless!!

Here on the ASR we are well aware of the “Audiophool”, who is an individual who has been caught up in the “High-End” audio pseudoscience that pervades the HiFi audio space. The vendors and manufacturers who populate the High-End have for decades, relied heavily on the deficiencies of recorded audio to push their products. Without problems, you can’t have solutions. We have all witnessed some of the solutions from crystals to magic wooden blocks, directional wire and on and on it goes. Digitally recorded and reproduced music causes a problem in this ecosystem. Digital music recording was designed to be superior to any technology that preceded it. There were some restrictions built into the early systems by the technology that was available but even so, the two-channel 16-bit PCM encoding at a 44.1 kHz sampling rate per channel in the Red Book standard, allowed for superior audio bandwidth and dynamic range unmatched by any analogue format. Other common distortions were banished as well, hiss, disc surface noise, wow & flutter, all gone.

How can you make money out of tweaks when there’s nothing to tweak? We all know the answer to that question. You simply invent problems and then present the solution to them! An example of this would be the CD “treatments” that began to appear. Magic pens to mark the edge of the disc because the music was being compromised by “internal reflections” which was utter balderdash but many fell for it. Demagnetising CD’s for God’s sake! I had a go at debunking this one for the denizens of the Stereophile forums many years ago. In spite of the fact that I could show two files, one ripped off the disc before demagnetisation and the other afterwards, that were identical, the Audiophools wouldn’t concede in any way. They were told by an equipment manufacturer that demagnetising worked which was backed up by a few reviewer opinions and that was that. What this illustrates is that the “snake oil” vendors and their cheerleaders latched onto this new technology as a “green field” ripe for the plunder. So it’s no surprise that we now find ourselves assailed with new manufactured problems connected with digital music streaming.

The streaming and playback of digital music is now well established and even the most ardent CD and turntable fan has been seduced by its convenience and very obvious quality as a source of recorded music. One device that’s been thoroughly whacked with the “High-End” stick is the DAC (Digital to Analog Converter) despite the fact that the DAC chips in the equipment are manufactured by only half a dozen companies, it’s a fertile field for upselling and price inflation using perceived value. There's no question that DAC manufacturers have taken up the challenge of designing equipment that extracts an optimal analogue sound from the available DAC chips and there are accepted quality differences between budget and higher spec units. This is no different to any other component in a sound system. As always, it’s up to the buyer to decide if they’re getting value for money when purchasing their equipment. To a large extent, the DAC market has fully shaken out now. In external DAC’s there are units like the Schitt Modi3 at $100 to a slew of ridiculous over packaged “High-End” units with prices in the stratosphere so the market is fully populated. This being the case, it’s obvious where the next step is for the snake oil end of things. Network equipment.

In a previous post “The Truth About Music Streaming” I outlined the basic flow of music streaming with some details regarding network protocols and codecs. However, there has recently been a couple of the “golden ear” brigade on the interwebs, holding forth on how devices like network switches “sound different”. This is how it starts, the blind leading the blind and a pack of foxes pricking their ears up as a new potential market reveals itself. The next thing we see is the high-end network switch and the HiFi router being championed by the usual promoters. Groan!

The antidote to this should be facts and realities but as we know, the Audiophool is immune to both. Regardless, we here on ASR deserve the truth and knowledge is power so I am going to spell out the digital streaming audio path from the server to the speaker as best I can with enough detail so that we can move into the future with some understanding. Computer networking is not voodoo or black magic, it’s a robust mechanism, developed by brilliant minds and put to the test every day moving trillions of bytes of data globally. It is a system unfazed by transmitting a footling little audio stream from a server in Sweden to your DAC in the USA, Iceland or Tasmania.

Computer Networking for Audio 101

Looked at in its simplest form, a computer network has a relatively simple structure when we’re talking audio.

- A digital audio file on a storage device

- A PC or NAS (Network Attached Storage) to act as a server

- An Ethernet adapter NIC (Network Interface Card)

- A network hub or switch

- A network router

- A suitable LAN (Local Area Network) or WAN (Wide Area Network)

- A mirror of the serving network

- A client device requesting the file

- A DAC (Digital Analogue Converter) to make listenable audio from the digital file

The Streaming Service

The job of the streaming service is quite simple on the surface. First, store the music files, second, make a searchable catalogue of the files and third, allow the client to access the files. The reality is very different and involves a vast array of equipment and software. So as not to overwhelm the reader, I’ll break the process down with a minimum of jargon and without reference to all of the software that works in concert to produce a song on your device of choice.

As a service, Spotify needs to fulfil the following user requirements:

- Account creation and Authorization

- Audio processing

- Recommendations

- Fast searching

- Low latency Streaming

- Billions of API requests internationally

- Store several hundred terrabytes of +100 million audio tracks.

- Store several petabytes of metadata from +500 million users.

The standard way of building a service like this is usually the “Monolithic” approach meaning that the service has two main components, the “Frontend” and the “Backend”. The Frontend is also referred to as the “Presentation Layer” this layer implements the application UI elements and client-side API requests. It is what the client sees and interacts with. The Backend consists of the Controller Layer (all software integrations through HTTP or other communication methods happen here), the Service Layer (the business logic of the application is present in this layer) and the Database Access Layer: (all the database accesses, including both SQL and NoSQL, of the applications, happens in this layer). This simplifies the site software as communication between two parties namely as a frontend (client) talking to a backend (server).

The only problem with this model is that it doesn’t scale easily. Subsequently, Spotify (and other subscriber services) have moved forward with a “Microservices” model. Microservices builds off monolithic architecture. Instead of defining software as a single executable unit, it divides software into multiple executable units that interoperate with one another. Rather than having one complex client and one complex server communicating with one another, microservices split clients and servers into smaller units, with many simple clients communicating with many simple servers.

One of the prime directives of the service however is low latency. All of the internal workings of the site are geared towards this. Subsequently, a key feature of the client side interaction is the “front loading” of files. As soon as you choose a playlist, album or song, the site starts downloading it to your device. An example of this is if you have a playlist loaded on your device and you lose the network connection, you will probably be able to play five or six songs even when there is no connection. So in truth, services like Spotify are working on caching the files on your device rather than buffering a stream. The upshot of this is that your device is not relying on a buffer and so many of the issues caused by a slow or intermittent connection are nullified.

This fact however is thoroughly inconvenient to the snake oil brigade because it removes a slew of invented problems that can be “caused” by the network equipment between you and the service. So lets’ see if we can explore that so we have a more complete understanding of the mechanics. This understanding should equip us sceptics with the knowledge we need to see through the spurious claims of audiophools and dishonest equipment manufacturers.

Ethernet

Without Ethernet, there would be no internet. Ethernet is the backbone of almost all computer networking globally. It is a system developed by brilliant minds to be robust and scalable. To understand Ethernet, one must first delve into the OSI model. OSI stands for the Open Systems Interconnection model and conceptualizes the seven layers that computer systems use to communicate over a network. The model places computing functions into a set of rules to support the inter-operability between products and software. While the modern internet is based on a simpler model called TCP/IP, and not on OSI, the OSI 7-layer model is still widely used today to describe network architecture.

- 7. Application Layer: Human-computer interaction layer, where applications can access the network services

- 6. Presentation Layer: Ensures that data is in a usable format and is where data encryption occurs

- 5. Session Layer: Maintains connections and is responsible for controlling ports and sessions

- 4. Transport Layer: Transmits data using transmission protocols including TCP and UDP

- 3. Network Layer: Decides which physical path the data will take

- 2. Data Link Layer: Defines the format of data on the network

- 1. Physical Layer: Transmits raw bit stream over the physical medium

Frames vs Packets

One of the important details to understand about Ethernet is the distinction between frames and packets. A frame is a data unit in a local area network (LAN) that includes header and trailer information in addition to the payload or data being transmitted. The header contains information such as the source and destination MAC addresses, while the trailer includes error checking data such as a cyclic redundancy check (CRC). A packet, on the other hand, is a data unit in a wide area network (WAN) or the Internet. It includes header information such as source and destination IP addresses and protocol-specific information, as well as the payload.

A frame in a LAN typically has the following structure:

- Preamble: A 7-byte sequence used to synchronize the clock speed of the sender and receiver.

- Start of Frame Delimiter (SFD): A 1-byte sequence that indicates the beginning of the frame.

- Destination MAC address: A 6-byte field that contains the MAC address of the intended recipient of the frame.

- Source MAC address: A 6-byte field that contains the MAC address of the sender.

- Type/Length field: A 2-byte field that indicates either the type of the payload (if it is greater than or equal to 1536 bytes) or the length of the payload (if it is less than 1536 bytes).

- Payload: The actual data being transmitted, which can range from 46 to 1500 bytes in length.

- Frame Check Sequence (FCS): A 4-byte field that contains a cyclic redundancy check (CRC) value used to detect errors in the transmission.

- Header: Contains various fields such as:

- Source IP address: A 4-byte field that contains the IP address of the sender.

- Destination IP address: A 4-byte field that contains the IP address of the intended recipient.

- Protocol: A 1-byte field that indicates the higher-level protocol being used, such as TCP or UDP.

- Time to Live (TTL): An 8-bit field that indicates the maximum number of hops a packet can make before it is discarded.

- Options (if present): Fields that carry additional information about the packet.

- Payload: The actual data being transmitted, which can range from 0 to 65,535 bytes in length.

- Trailer: Contains fields such as a cyclic redundancy check (CRC) used to detect errors in the transmission.

In short, a frame carries data across a single LAN link, while a packet carries data end to end across WANs or the Internet, riding inside a fresh frame on every link along the way.

Just as an aside, every Ethernet-capable network interface needs a unique MAC address. These addresses are allocated in blocks by the IEEE (Institute of Electrical and Electronics Engineers). Unlike IPv4 (layer 3) addresses, of which there are only about 4.3 billion and which are running low, a MAC address is a 48-bit number (written as 12 hexadecimal digits), giving around 281 trillion possibilities. That’s roughly 35,000 for every person alive today. Even if they do start running out, there is already a mechanism in place to extend the system to 64 bits (EUI-64).
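The arithmetic behind those figures is easy to check. This sketch assumes a world population of 8 billion, a round figure chosen for illustration; the example MAC address is a hypothetical locally administered one.

```python
MAC_BITS = 48
EUI64_BITS = 64
WORLD_POPULATION = 8_000_000_000  # assumed round figure

mac_space = 2 ** MAC_BITS        # every possible 48-bit MAC address
ipv4_space = 2 ** 32             # every possible IPv4 address
eui64_space = 2 ** EUI64_BITS    # the 64-bit extension
per_person = mac_space // WORLD_POPULATION

# A MAC address is written as 12 hex digits, i.e. 6 bytes or 48 bits.
example_mac = "02:00:00:00:00:01"  # hypothetical address
mac_bytes = bytes.fromhex(example_mac.replace(":", ""))

print(f"MAC addresses:  {mac_space:,}")
print(f"IPv4 addresses: {ipv4_space:,}")
print(f"Per person:     {per_person:,}")
print(f"EUI-64 space:   {eui64_space:,}")
print(len(mac_bytes) * 8)  # bits in one MAC address
```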

Error Correction and Jitter

If there’s one element of Ethernet that the Audiophools like to pounce on, it’s this one. As I stated before, Ethernet was designed by brilliant minds to be robust and scalable. It would be almost impossible to calculate the number of frames and packets successfully transmitted over Ethernet networks every day. I emphasise the word “successfully” because the error handling built into Ethernet and the protocols that run over it is very efficient and reliable: every frame carries a CRC, any frame that fails the check is discarded, and protocols such as TCP resend whatever was lost. Desperate for a source of problems, the Audiophools and Snake Oil salesmen have error correction/jitter near the top of their list of boogeymen to scare the living daylights out of the golden-ear brigade.
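Here is a minimal sketch of what happens to a damaged frame, assuming the FCS is the standard CRC-32 (which Python's `zlib.crc32` computes) appended least significant byte first. The helper names and the 60 bytes of frame content are hypothetical. A CRC-32 is guaranteed to catch any single flipped bit, so the receiver never passes the damaged frame up the stack; it is simply dropped and the data is resent.

```python
import zlib


def append_fcs(body: bytes) -> bytes:
    # Sender: append the CRC-32 of header + payload as the 4-byte FCS.
    return body + zlib.crc32(body).to_bytes(4, "little")


def fcs_ok(frame: bytes) -> bool:
    # Receiver: recompute the CRC over everything except the FCS and compare.
    body, fcs = frame[:-4], frame[-4:]
    return zlib.crc32(body).to_bytes(4, "little") == fcs


frame = append_fcs(bytes(range(60)))  # hypothetical 60 bytes of header + payload
corrupted = bytearray(frame)
corrupted[20] ^= 0x01                 # flip one bit "in transit"

print(fcs_ok(frame))             # True: frame accepted
print(fcs_ok(bytes(corrupted)))  # False: frame discarded
```

Note that Ethernet itself only detects the error; the "correction" comes from a higher layer such as TCP retransmitting the lost data.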

So what is “jitter”? In a network context, jitter refers to variations in the arrival time of packets of audio data over a network connection. In other words, it is the variation in the gap between when each packet is expected to arrive and when it actually arrives. In a real-time audio streaming scenario, unmanaged jitter can cause noticeable problems such as audio distortions, skips, or delays. This is because the timing of the audio samples is critical in maintaining the quality of the audio stream: if packets arrive too early or too late and nothing absorbs the variation, playback may fall out of sync, causing audible artefacts. Jitter can be caused by a variety of factors such as network congestion, routing changes, unequal processing times at intermediate routers, or queuing delays. To mitigate the effects of jitter, most audio streaming protocols employ jitter buffering techniques that allow the receiving end to temporarily store incoming packets and play them back at a constant rate. Jitter buffering smooths out the variations in packet arrival time, reducing the effects of jitter on the quality of the audio stream.
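One common way to put a number on network jitter is the running estimator defined for RTP in RFC 3550 (section 6.4.1). Streaming services do not necessarily use RTP, so treat this only as an illustration of the idea; the function name and the timing data below are hypothetical, with times in milliseconds.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate in the style of RFC 3550, section 6.4.1."""
    jitter = 0.0
    for i in range(1, len(send_times)):
        # D: change in transit time between consecutive packets.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        # Exponential smoothing with gain 1/16, as in the RFC.
        jitter += (abs(d) - jitter) / 16
    return jitter


send = [10 * k for k in range(50)]                               # one packet every 10 ms
steady = [s + 30 for s in send]                                  # constant 30 ms delay
noisy = [s + 30 + (k * 7) % 11 for k, s in enumerate(send)]      # delay wobbles by up to 10 ms

print(interarrival_jitter(send, steady))  # constant delay means zero jitter
print(interarrival_jitter(send, noisy))
```

The point to notice is that jitter measures *variation* in delay: a link with a long but constant delay has no jitter at all.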

The fact is that lost, late or corrupted packets or frames only become a problem if they accumulate to the point where the jitter buffer, which stores the packets before clocking them out, runs dry. As I pointed out earlier, streaming sites actively “front load” the client with data so that smooth, error-free and low-latency playback can occur. Indeed, some audio applications, like JRiver, don’t start playing until they have downloaded the entire file from the location where it’s stored.
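The "buffer runs dry" condition can be sketched with a toy playout buffer. This is a simplified model under stated assumptions: each packet holds 10 ms of audio, packets are sent in real time, playback starts once a chosen number of packets (the prefill) have arrived, and a packet counts as late if its scheduled play time passes before it arrives. A real player would stall and rebuffer rather than just count; the function name and the delay pattern (20 to 80 ms) are hypothetical.

```python
def count_late_packets(arrival_ms, packet_ms, prefill):
    """Count packets that miss their play deadline in a simple playout buffer."""
    # Playback begins once the first `prefill` packets (by sequence number) are buffered.
    start = max(arrival_ms[:prefill])
    late = 0
    for seq, arrived in enumerate(arrival_ms):
        deadline = start + seq * packet_ms  # when the DAC needs this packet's samples
        if arrived > deadline:
            late += 1  # the buffer ran dry before this packet turned up
    return late


# Packets sent every 10 ms, each delayed by a varying 20-80 ms on the network.
arrivals = [10 * k + 20 + (k * 37) % 61 for k in range(200)]

print(count_late_packets(arrivals, 10, prefill=1))   # almost no buffering: many late packets
print(count_late_packets(arrivals, 10, prefill=10))  # 100 ms of buffering absorbs the jitter
```

With only one packet buffered, nearly every delay variation shows up as a dropout; with a modest prefill the same network jitter has no effect at all, which is the whole reason streaming clients front load data.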

So a range of mitigation techniques is employed, and provided the buffer has sufficient data stored in it, network jitter is absorbed before it can reach the audio. Network switches do not have a jitter buffer. Jitter buffering is a feature implemented in the software or hardware at the receiving end of a network connection, such as a computer or an audio codec. Switches are hardware devices responsible for forwarding Ethernet frames based on their destination MAC address. They hold each frame only briefly in a queue while forwarding it, and they neither interpret nor alter the payload it carries; any small timing variation they add is simply absorbed by the receiver’s buffer. The primary function of a switch is to forward frames as quickly as possible and to minimise network congestion by managing the flow of data. So to claim that a network switch can have a perceptible effect on the quality of a stream of frames or packets containing digital music data is preposterous.
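The forwarding logic of a switch can be sketched as a toy "learning switch". This is a simplified model, not how any particular switch is built: it ignores queues, VLANs and table ageing, and the class name and MAC addresses are hypothetical. What it does show is that the forwarding decision reads only the destination and source MAC addresses, and the frame bytes go out exactly as they came in.

```python
class LearningSwitch:
    """Toy layer 2 switch: learns which port each MAC lives on, forwards by destination MAC."""

    BROADCAST = b"\xff" * 6

    def __init__(self, num_ports: int):
        self.num_ports = num_ports
        self.mac_table: dict[bytes, int] = {}

    def receive(self, frame: bytes, in_port: int) -> list[tuple[int, bytes]]:
        dst, src = frame[0:6], frame[6:12]
        self.mac_table[src] = in_port  # learn where the sender is attached
        out_port = self.mac_table.get(dst)
        if dst == self.BROADCAST or out_port is None:
            # Unknown or broadcast destination: flood to every other port.
            return [(p, frame) for p in range(self.num_ports) if p != in_port]
        if out_port == in_port:
            return []  # destination is on the port it came from; nothing to do
        return [(out_port, frame)]  # the frame itself is passed on untouched


streamer = bytes.fromhex("020000000001")  # hypothetical MAC addresses
server = bytes.fromhex("020000000002")
sw = LearningSwitch(4)

request = server + streamer + b"\x08\x00" + b"request"
print(sw.receive(request, in_port=0))  # server not yet known: flooded to ports 1, 2, 3
reply = streamer + server + b"\x08\x00" + b"music data"
print(sw.receive(reply, in_port=2))    # streamer learned on port 0: sent there only
```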

Network Timing

Recently, in the “Extreme Snake Oil” thread, a member posted a YouTube video of a gentleman named Hans Beekhuyzen, who put forward a set of ideas meant to convince the viewer that internal clocks and network timing could cause some sort of distortion in the waveform decoded by the DAC. His explanation of how this effect occurs merely displays his lack of understanding rather than some sort of amazing revelation. Timing on networks is handled by synchronising devices to one of the timing protocols available to Ethernet devices. The most common is the Network Time Protocol (NTP), with Precision Time Protocol (PTP) and Synchronous Ethernet (SyncE) available where highly accurate timing is needed. An average home router/modem uses NTP: it periodically queries one or more NTP servers on the internet to determine the correct time and adjusts its internal clock accordingly. This keeps the device’s clock accurate so that it can correctly timestamp network traffic. None of this clocks the audio itself; once the data has been buffered, the DAC converts the samples using its own local clock.
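The core of an NTP exchange is two small formulas from the NTP specification (RFC 5905). The client records when it sent the request (t1) and received the reply (t4); the server records when it received the request (t2) and sent the reply (t3). The sketch below uses a hypothetical exchange, in milliseconds, in which the client clock runs 500 ms behind the server and each network leg takes 20 ms.

```python
def ntp_offset_and_delay(t1, t2, t3, t4):
    """Clock offset and round-trip delay from one NTP request/response exchange.

    t1: client send time (client clock)   t2: server receive time (server clock)
    t3: server send time (server clock)   t4: client receive time (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2  # how far the client clock is behind the server
    delay = (t4 - t1) - (t3 - t2)         # round trip, minus the server's processing time
    return offset, delay


# Hypothetical exchange: 20 ms each way, 1 ms server processing, client 500 ms slow.
offset, delay = ntp_offset_and_delay(100_000, 100_520, 100_521, 100_041)
print(offset, delay)
```

The result is a correction to the device's time-of-day clock. Nothing in it paces the delivery of audio samples, which is why it has no bearing on what the DAC outputs.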

Routers

I notice nobody has seriously proposed a HiFi router yet, but there are plenty of dreamers out there who claim that the router makes a difference to the sound of a music file. This is just as spurious as the claims made for data switches. The purpose of a router in a computer network is to forward packets from one network to another. A router operates at the network layer (layer 3) of the OSI model and performs routing functions to determine the best path for a packet based on its destination IP address. In a home, the router acts as the gateway between the LAN and the WAN, which we generally call the internet. Once again, like switches, routers only queue packets briefly while forwarding them; they do not alter the data inside, and they have nothing to do with how that data is eventually clocked out to a DAC.
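Choosing "the best path based on destination IP address" usually means a longest-prefix match against the routing table. Here is a minimal sketch using Python's standard `ipaddress` module; the routing table, including the VPN route, is hypothetical and IPv4 only.

```python
import ipaddress

# Hypothetical home-router routing table: (destination prefix, where to send it).
ROUTES = [
    (ipaddress.ip_network("192.168.1.0/24"), "LAN (deliver directly)"),
    (ipaddress.ip_network("10.0.0.0/8"), "VPN tunnel"),
    (ipaddress.ip_network("0.0.0.0/0"), "WAN (ISP gateway)"),  # default route
]


def lookup(destination: str) -> str:
    """Return the route whose prefix contains `destination` with the longest prefix length."""
    addr = ipaddress.ip_address(destination)
    matches = [(net.prefixlen, hop) for net, hop in ROUTES if addr in net]
    return max(matches, key=lambda m: m[0])[1]


print(lookup("192.168.1.20"))  # a device on the home LAN
print(lookup("10.1.2.3"))
print(lookup("8.8.8.8"))       # anything else goes out to the internet
```

Only the destination address in the header is consulted; the payload carrying the music is never looked at.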

DACs

The DAC represents the best possible target for a whack with the “High End” stick. I went into the subject of DACs in my music streaming post earlier and pointed out that there are only a handful of manufacturers making quality DAC chips. Texas Instruments, ESS Technology, Cirrus Logic and AKM Semiconductor are a few of the makers, and some would consider these near the top of the quality currently available. Of course, a DAC is a DAC, and there are numerous support circuits and devices needed to create a functional HiFi component. However, as can be observed in many cases in this era, the “manufacturers” don’t necessarily make everything inside the case. Often it’s the case that’s had the “High End” treatment, while the internals are generic modules. This is true of amplifiers and DACs alike. In the case of the “Less Loss” DAC featured in another thread, the makers show some very janky looking internals, which are obviously not designed or manufactured by the brand company. Based on this type of activity, I’m fully prepared to believe that there would be differences in the quality of sound from one DAC to another, however subtle.

Cables

OHHH PUHLEEEZZE! I know it’s just become an extension of the analogue cable miasma, but it’s even more ridiculous (if that’s possible!). Ethernet can work effectively over 100 m of standard, unshielded CAT5 cable. Yes, that is one hundred metres, with no intermediate equipment, boosters or whatever. The data will be available at the end of that 100 m cable, reliably and without serious error. USB is more limited on distance, at around 5 m, but within that limit the data will be retrievable and largely error free. To claim that any digital network connection can somehow influence the contents of the data frames or packets being transmitted on it is beyond ridiculous. No amount of pseudoscience, phase, RFI, EMI, Gophers or magic spells can make it true.
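A quick back-of-envelope calculation shows how rare errors are on a healthy link. The bit error rate of 1e-10 is an assumption for illustration (it is the order of magnitude usually quoted as the design objective for twisted-pair Gigabit Ethernet), and the calculation uses the raw Red Book PCM rate, ignoring protocol overhead.

```python
# Red Book CD audio: 44.1 kHz, 16-bit, two channels.
SAMPLE_RATE_HZ = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2
ASSUMED_BER = 1e-10  # assumed bit error rate of the link (illustrative)

bitrate = SAMPLE_RATE_HZ * BITS_PER_SAMPLE * CHANNELS  # bits per second
bits_per_hour = bitrate * 3600
expected_bit_errors_per_hour = bits_per_hour * ASSUMED_BER

print(f"{bitrate:,} bit/s")
print(f"{bits_per_hour:,} bits per hour")
print(f"{expected_bit_errors_per_hour:.2f} expected bit errors per hour")
```

That works out to roughly one bit error every two hours of listening, and each one is caught by the frame's CRC and resent long before the buffer could run dry.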

Conclusion

Ethernet is a demonstrably effective method of networking computers and other devices. It contains within its structure robust methods of error detection, backed by retransmission in higher-layer protocols, that have only become more effective as network speeds have increased. Network timing is achieved by the use of standardised protocols and is largely automatic. Music streaming services make it their business to cache or front load content on the client device to ensure low latency. Data/jitter buffering is automatic and, as network speeds have increased, has also become more effective. Lost, late or corrupt packets or frames can be resent before the buffer runs out and processed in the order they were intended. No cable carrying a digital signal can influence the content of the data unless there are gross deficiencies in the cable or its environment. Even under these circumstances, the outcome will be lost, late or corrupt packets that will only be noticeable when the buffer has been depleted. Let’s be clear: the reason that routers, switches and cables do not change the contents of the frames and packets they transmit is that if they did, the whole network system would be utterly useless!!