KSTR


Hi,

Over the last few weeks I have set up and stabilized a procedure that exposes the error residual of Null-Tests à la DeltaWave. I actually still use DeltaWave for the final stage of display and analysis, but here it only does the level-matching fine-tuning; everything else is done in pre-processing.

When comparing two files whose frequency responses differ in magnitude and phase (basically any kind of "EQ"), direct subtraction always fails: the difference is immediately dominated by these magnitude and phase differences, and small distortion products and the like are drowned in that linear difference. The linear differences are trivial in the sense that they are "just EQ", which can be completely undone, whereas true distortion cannot, and that is what I'm after here.
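
To make the point concrete, here is a toy sketch in Python/NumPy (not the method used for the files below): a hypothetical 2-tap "EQ" plus a tiny cubic nonlinearity. It assumes the linear response `H` is known exactly and has no zeros, and it uses circular FFT filtering so that undoing the response is exact. Naive subtraction leaves a residual of around -37 dB that is nothing but EQ difference, while undoing `H` first exposes the distortion at roughly -90 dB.

```python
import numpy as np

def rms_db(x):
    """RMS level in dB relative to a full-scale value of 1.0."""
    return 20 * np.log10(np.sqrt(np.mean(np.square(x))))

def apply_response(x, H):
    """Circular (FFT-domain) filtering of x with frequency response H."""
    return np.fft.irfft(np.fft.rfft(x) * H, len(x))

rng = np.random.default_rng(0)
n = 1 << 14
x = 0.1 * rng.standard_normal(n)            # hypothetical reference signal

# Hypothetical "EQ": 2-tap FIR 0.9 + 0.1 z^-1 (gentle lowpass, no zeros on the unit circle)
h = np.zeros(n)
h[:2] = [0.9, 0.1]
H = np.fft.rfft(h)

y_lin = apply_response(x, H)
y = y_lin + 1e-2 * y_lin ** 3               # small cubic distortion after the EQ

direct = y - x                              # naive subtraction
compensated = apply_response(y, 1 / H) - x  # undo the known linear response first

print(f"direct residual:      {rms_db(direct):6.1f} dB")
print(f"compensated residual: {rms_db(compensated):6.1f} dB")
```

In practice `H` is not known and has to be measured, which is where all the real difficulty (and the artifacts) come from.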

The typical example is the distortion of a DAC-->ADC loopback recording vs. the original file. The DA-->AD process has its own frequency response, shaped by the AC coupling (highpass) of the ADC input and by the combined analog and digital lowpass filters in the DAC and ADC.

DeltaWave itself provides a means to handle those linear deviations: the (slightly misnamed) "Non-Linear Calibration" settings for "Level EQ" and "Phase EQ". When the files have certain properties and the distortion is high enough, this produces satisfactory results, but when the differences are really small, the algorithm produces a lot of artifacts that make it hard to listen for specific distortion in the residual. The generated plots and numbers are still quite representative, though.

With my method I was able to almost completely eliminate processing artifacts down to at least -130dB and create a clean residual (containing only residual noise and the remaining difference signal).

I will detail the procedure in a separate thread; for now I'll just give it a technical buzzword title: "Compensation of Linear Transfer Function Differences for Subtractive Analysis by Cross-Convolution of Impulse Responses". It also makes use of heavy time-domain averaging over many takes (100 here) to bring down the analog noise as well as the impact of fluctuating gain and other parameter drifts.
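
The actual procedure will be described in the separate thread. Purely as my own reading of the title (an assumption, not the procedure itself), "cross-convolution" could mean filtering each recording with the *other* chain's impulse response: if A = h_a*x + e_a and B = h_b*x + e_b, then h_b*A - h_a*B = h_b*e_a - h_a*e_b, so the linear parts cancel without ever inverting a response. The toy below checks that identity with hypothetical impulse responses (and e_b = 0), and also shows why averaging 100 takes lowers uncorrelated noise by 10*log10(100) = 20 dB.

```python
import numpy as np

def conv(a, b):
    """Circular convolution via FFT (all arrays keep the same length)."""
    return np.fft.irfft(np.fft.rfft(a) * np.fft.rfft(b), len(a))

rng = np.random.default_rng(1)
n = 1 << 14
x = 0.1 * rng.standard_normal(n)            # hypothetical source signal

# Hypothetical impulse responses of the two chains being compared
h_a = np.zeros(n)
h_a[:3] = [0.8, 0.15, 0.05]
h_b = np.zeros(n)
h_b[:2] = [1.0, -0.2]

e_a = 1e-3 * conv(x, h_a) ** 2              # hypothetical nonlinear error, chain A only
A = conv(x, h_a) + e_a
B = conv(x, h_b)

# Cross-convolution: filter each recording with the other chain's impulse response.
# h_b*A - h_a*B = h_b*h_a*x + h_b*e_a - h_a*h_b*x = h_b*e_a
residual = conv(A, h_b) - conv(B, h_a)

# Averaging: uncorrelated noise drops by 10*log10(takes) dB; a sample-aligned signal does not.
takes = 100
noise = 1e-3 * rng.standard_normal((takes, n))
averaged = noise.mean(axis=0)
noise_reduction_db = 20 * np.log10(noise.std() / averaged.std())
print(f"noise reduction from {takes} takes: {noise_reduction_db:.1f} dB")
```

Note that in this reading the residual is the error filtered by the other chain's response (h_b*e_a), not the raw error, and that noise in the measured impulse responses goes straight into the result.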

--------------

Please find a set of files for download here, containing an original file and several results of the subtractive analysis for different DUTs (Device Under Test).

1. The most important test for a new subtractive method is always the Null-Test, comparing a DUT with itself, with the recordings preferably made on different days and everything set up from scratch again. When that is stable and repeatable, we have the analysis noise floor, the maximum resolution we can get.

This is the file "NullTest_ShortCable(+110dB).wav" (with the residual boosted by +110dB, a factor of about 316,000!)
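
For reference, the dB gains in the file names convert to amplitude factors as 10^(dB/20). A small plain-Python helper also shows where a -130dBFS residual ends up after the +110dB boost:

```python
import math

def db_to_factor(db):
    """Amplitude (voltage) ratio for a gain given in dB."""
    return 10 ** (db / 20)

def factor_to_db(factor):
    """Gain in dB for an amplitude ratio."""
    return 20 * math.log10(factor)

print(f"+110dB -> x{db_to_factor(110):,.0f}")   # x316,228
print(f"+90dB  -> x{db_to_factor(90):,.0f}")    # x31,623
print(f"-130dBFS residual boosted by +110dB -> {-130 + 110} dBFS")
```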

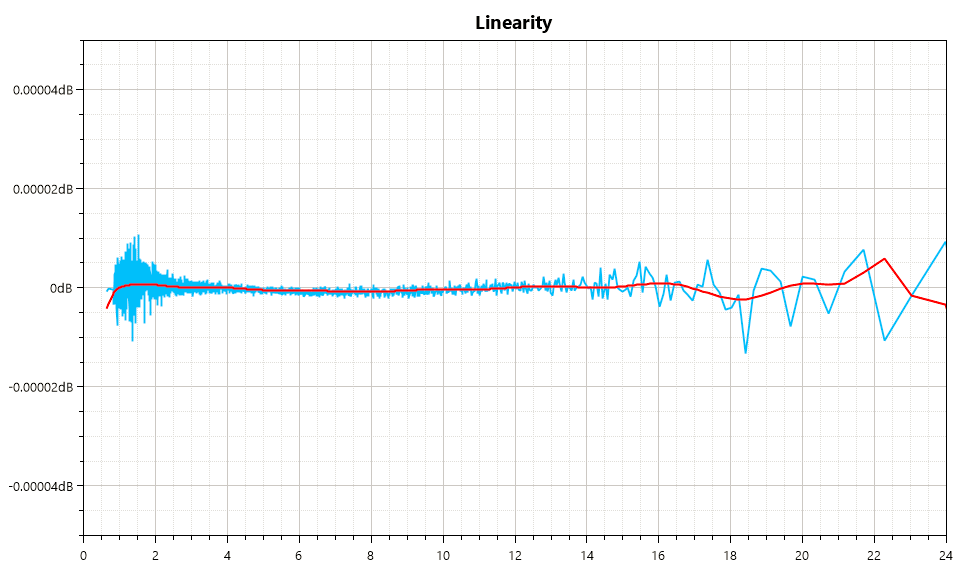

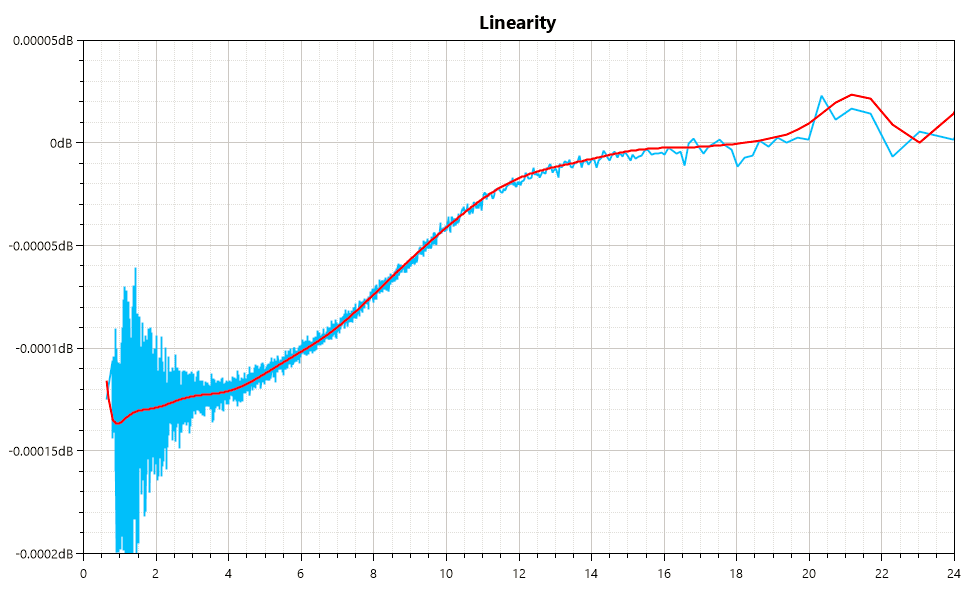

In DeltaWave, a very revealing plot is the Linearity Plot; for this test it looks like this:

This is a flat horizontal line, indicating no level-dependent deviation, i.e. no distortion within the resolution of the method.
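
I don't know DeltaWave's exact implementation of the Linearity Plot, so here is only a rough stand-in for the idea (the helper `level_linearity` and its binning are my own assumptions): sort samples by reference level in dBFS and fit a least-squares gain per level bin. A purely linear difference gives a flat line; an odd-order expansion bends it upward at high levels.

```python
import numpy as np

def level_linearity(ref, other, edges_db, min_count=10):
    """Least-squares gain (dB) of other relative to ref, per bin of |ref| level in dBFS.

    Hypothetical helper; bins with fewer than min_count samples yield NaN.
    """
    level_db = 20 * np.log10(np.abs(ref) + 1e-20)   # avoid log10(0)
    idx = np.digitize(level_db, edges_db)           # bin k covers [edges[k-1], edges[k])
    gains = np.full(len(edges_db) - 1, np.nan)
    for k in range(1, len(edges_db)):
        m = idx == k
        if np.count_nonzero(m) >= min_count:
            g = np.dot(ref[m], other[m]) / np.dot(ref[m], ref[m])
            gains[k - 1] = 20 * np.log10(g)
    return gains

rng = np.random.default_rng(3)
ref = rng.uniform(-1.0, 1.0, 200_000)
edges = np.arange(-60.0, 1.0, 6.0)                  # 6 dB wide bins from -60 to 0 dBFS

flat = level_linearity(ref, 0.5 * ref, edges)               # pure gain: flat at -6.02 dB
bent = level_linearity(ref, ref + 0.01 * ref ** 3, edges)   # odd-order expansion
print(np.round(bent, 4))
```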

Listening to the residual, one hears mainly noise, with the signal already buried for the most part, but what remains of the signal appears rather clean. Only two things stand out:

- there is some sort of random amplitude modulation... this is the effect of the remaining dynamic gain drift of the measurement rig (using an RME ADI-2 Pro FSR).

- the sound has a strange "reverb halo" effect. It's not a natural kind of decaying reverb; it is more steady-state in level. This effect shows one of the resolution limits of the method, as this reverb artifact is caused by the remaining noise (of only -130dBFS rms) in the Impulse Response data used for the Compensation.
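
A quick calculation shows why even tiny gain drift is audible at this magnification: a gain error of delta dB leaves a residual that is a scaled copy of the signal at 20*log10(|10^(delta/20) - 1|) dB relative to it. This covers only a static gain mismatch; it is a back-of-the-envelope check, not a model of the ADI-2 Pro's actual drift.

```python
import math

def gain_error_residual_db(delta_db):
    """Level of the residual (dB re. signal) caused by a gain mismatch of delta_db."""
    return 20 * math.log10(abs(10 ** (delta_db / 20) - 1))

for delta in (0.1, 0.01, 0.001):
    print(f"{delta} dB gain error -> residual at {gain_error_residual_db(delta):.1f} dB")
```

That gives roughly -39, -59 and -79 dB: every tenfold reduction in drift buys 20 dB, and a residual at -130 dB corresponds to a gain error of only about 3e-6 dB.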

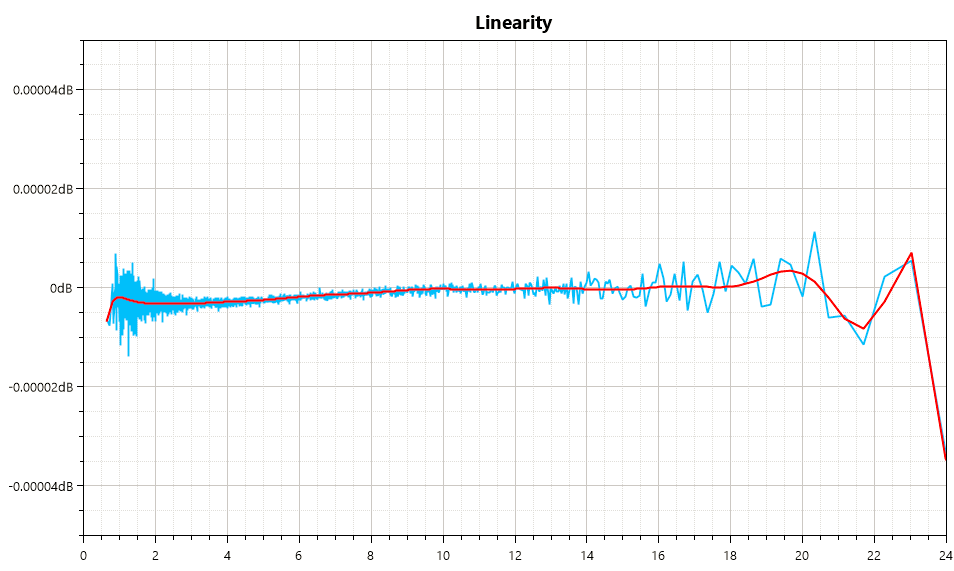

2. Let's move on to something more interesting: the comparison of a 6m long crappy guitar cable vs. a 20cm short jumper cable, file "LongCable_vs_ShortCable(+110dB).wav".

DeltaWave plots Reference vs. Original in the Linearity Plot, and from the shape of the curve we can infer that the long cable makes loud sounds louder than it should. This could be expansive nonlinear distortion of odd order, but it could also come from an expander-like effect somewhere.
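
One way to tell these two candidates apart (a sketch with a hypothetical cubic coefficient): a static odd-order nonlinearity y = x + a*x^3 raises the gain of a sine of amplitude A by a factor 1 + 3*a*A^2/4, which bends the linearity curve upward, but it also creates a third harmonic of amplitude a*A^3/4. An expander that applies the same level-dependent gain to the whole tone bends the curve identically without creating any harmonic, so the residual sounds different, which is why listening adds information here.

```python
import numpy as np

n, cycles = 48000, 1000          # 1 kHz at 48 kHz: exactly 1000 cycles, no spectral leakage
t = np.arange(n)
a = 1e-3                         # hypothetical cubic coefficient

def tone_levels(y):
    """Amplitudes of the fundamental and the 3rd harmonic (exact FFT bins)."""
    spec = np.abs(np.fft.rfft(y)) * 2 / len(y)
    return spec[cycles], spec[3 * cycles]

def analyze(amp):
    x = amp * np.sin(2 * np.pi * cycles * t / n)
    static = x + a * x ** 3                    # static odd-order nonlinearity
    expander = x * (1 + 0.75 * a * amp ** 2)   # same gain change as a pure level-dependent gain
    return tone_levels(static), tone_levels(expander)

for amp in (0.1, 0.5, 1.0):
    (f1, h3), (g1, g3) = analyze(amp)
    print(f"A={amp}: gain change {20 * np.log10(f1 / amp):.5f} dB (both), "
          f"H3 static {20 * np.log10(h3 / f1):.1f} dBc, H3 expander {g3:.1e}")
```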

Listening to the residual, though, we don't find any distortion sticking out compared to the Null-Test. The effective distortion (if any) is truly negligible, even at this brutal level of magnification.

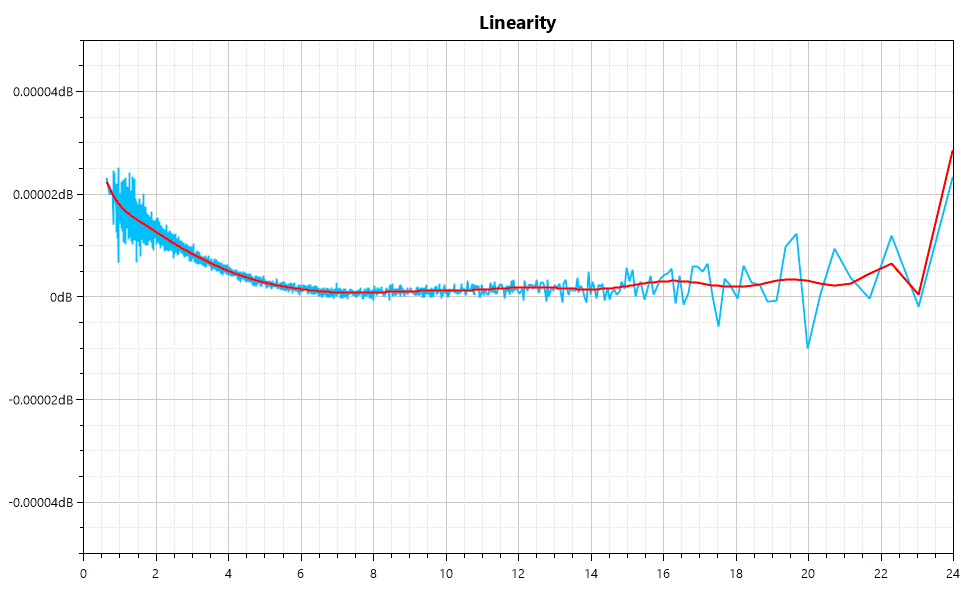

3. Now let's change something in the circuit and see whether that produces some distortion. For this, I switched the Reference Level setting of the ADI-2 Pro from +13dBu to +19dBu, meaning the analog level is now 6dB higher. This changes the gain settings of the internal circuits; we can actually hear relays clicking. It may even skip or insert a complete circuit stage (OpAmp). File is "+19dBu_vs_+13dBu(+110dB).wav".
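
For orientation, dBu is referenced to about 0.775 V rms (sqrt(0.6) V, i.e. 1 mW into 600 ohms). Assuming the Reference Level denotes the analog level that corresponds to 0 dBFS (my assumption about the ADI-2 Pro setting; check the manual), the two settings translate to:

```python
import math

V_REF = math.sqrt(0.6)   # 0 dBu = about 0.7746 V rms

def dbu_to_vrms(dbu):
    """RMS voltage for a level given in dBu."""
    return V_REF * 10 ** (dbu / 20)

for level in (13, 19):
    v = dbu_to_vrms(level)
    print(f"+{level}dBu = {v:.2f} V rms = {v * math.sqrt(2):.2f} V peak (sine)")
print(f"ratio: {dbu_to_vrms(19) / dbu_to_vrms(13):.3f}")
```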

OK, now we have something! Once the signal uses the top 5 bits or so, the +19dBu file shows some compression.
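
Translating "the top 5 bits" into level: each bit is a factor of 2, about 6.02 dB, so the top n bits cover roughly the highest 6.02*n dB below full scale. In plain Python:

```python
import math

def bits_to_db(bits):
    """Level range in dB spanned by the given number of bits (factor 2 per bit)."""
    return 20 * math.log10(2 ** bits)

print(f"top 5 bits: signal above about -{bits_to_db(5):.1f} dBFS")   # -30.1
print(f"top 4 bits: signal above about -{bits_to_db(4):.1f} dBFS")   # -24.1
```

So the compression appears roughly for signal levels above -30dBFS.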

Listening to the residual exposes the distortion very clearly and makes clear that this is mostly nonlinear distortion, not a "compressor effect".

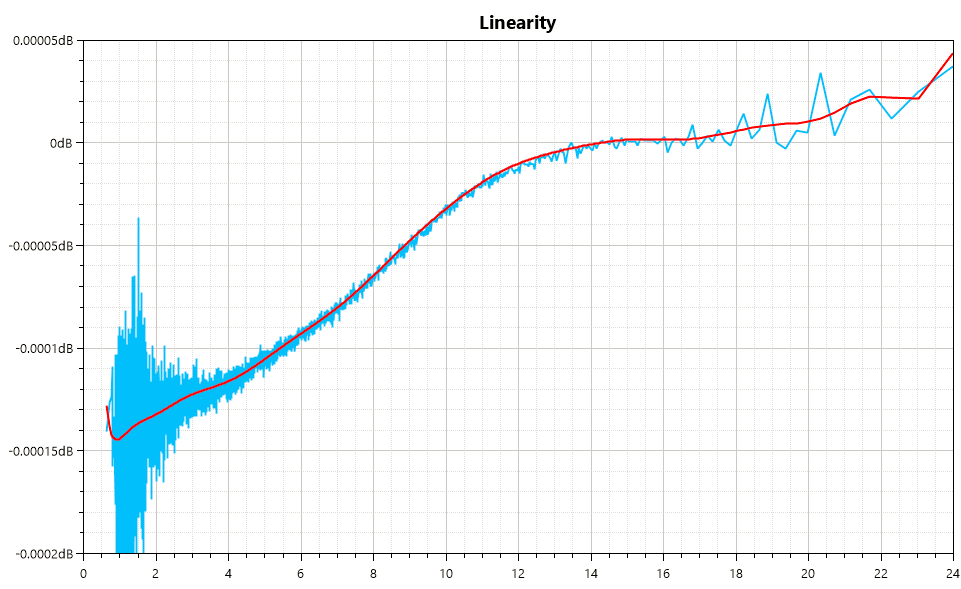

4. Next comes a harder test case for the algorithm: the comparison of the original file vs. the DAC-->ADC loopback recording, where a lot of linear transfer function difference has to be undone to allow direct subtraction. File is "Original_vs_+13dBu(+90dB).wav".

(Note the different, reduced scale on the Y-axis)

So here we see a lot of change when going from soft to loud levels: the loopback recording tends to be louder at high signal levels than it should be. At first we would assume that this again is simple static nonlinear distortion as in the previous example, just much more of it. But listening to the residual (now with only +90dB gain to make it fit) offers a different insight:

- at low signal levels the residual is readily identified as being distorted

- at high levels, though, we again have more of a linear-sounding amplitude modulation, and it is signal-dependent. This appears to be more modulation than expected from the (random) remaining gain modulation we saw in the Null-Test. This is an unexpected result: the DAC-->ADC chain shows an (extremely small, mind you) compression/expansion effect that dominates the nonlinear distortion.
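
One way to put a number on "linear-sounding amplitude modulation" versus distortion (my own sketch, not part of the procedure or of DeltaWave): per block, fit the residual with a scaled copy of the reference by least squares. The fitted part is pure gain modulation; what is left is distortion plus noise. The test signals, the block size and the helper `split_residual` are hypothetical. An odd-order term like x^3 is partly correlated with x and would also land in the fitted gain (which is exactly why a static nonlinearity bends the linearity plot), so the toy uses an even-order term, which is uncorrelated with a symmetric reference.

```python
import numpy as np

def split_residual(ref, res, block):
    """Split res into a per-block scaled copy of ref (gain modulation) and the remainder."""
    n = len(ref) // block * block
    r = ref[:n].reshape(-1, block)
    e = res[:n].reshape(-1, block)
    g = np.sum(r * e, axis=1) / np.sum(r * r, axis=1)   # least-squares gain error per block
    modulation = (g[:, None] * r).ravel()
    return g, modulation, res[:n] - modulation

rng = np.random.default_rng(2)
n, block = 4 * 48000, 4800
ref = 0.1 * rng.standard_normal(n)
gain_err = 1e-5 * np.sin(2 * np.pi * np.arange(n) / n)   # hypothetical slow gain wobble
res = gain_err * ref + 1e-5 * ref ** 2                    # modulation + even-order distortion

g, modulation, remainder = split_residual(ref, res, block)
share = np.sum(modulation ** 2) / np.sum(res ** 2)
print(f"share of residual energy explained by gain modulation: {share:.2f}")
```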

5. Finally, the same test using the higher Reference Level of +19dBu (6dB more), file "Original_vs_+19dBu(+90dB).wav".

Same picture, with the plot bending up slightly in the top 4 bits. Compare the difference between this plot and the previous one with the plot of #3, and you find that same bend, the same characteristic. So the overall measurement did capture the difference between +13dBu and +19dBu correctly.
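
This consistency check works because gains multiply, so their dB values add. Writing g_X(L) for the level-dependent gain of chain X relative to the original and G(L) for the corresponding linearity curve in dB, and assuming all curves are indexed by the level of the same source material:

$$
G_{+19/\mathrm{orig}}(L) - G_{+13/\mathrm{orig}}(L)
= 20\log_{10} g_{+19}(L) - 20\log_{10} g_{+13}(L)
= 20\log_{10}\frac{g_{+19}(L)}{g_{+13}(L)}
= G_{+19/+13}(L)
$$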

The residual is close to #4; I think I can hear a bit more nonlinear distortion in the loud part.

-- To be continued --
