This is definitely a problem with linear phase filters which require careful matching of the low and high pass sections in order to cancel out any pre-ringing which most likely won't be achieved off-axis. It's also a similar problem for normal high slope filters with lots of stored energy.Charlie, first of all it's good to see you posting here and still meddling with speakers. I've been following you for a long time now.

My expectation, which I suspect you share, is that listeners will probably not be able to detect, and certainly won't be able to articulate a preference between samples. It will be interesting if I am wrong.

However, won't real world speakers demonstrate different off axis behavior with differing crossovers? It seems as if this would be a much more significant difference than what we are scrutinizing here.

-

Welcome to ASR. There are many reviews of audio hardware and expert members to help answer your questions. Click here to have your audio equipment measured for free!

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

INPUT WANTED: music tracks and methodology for testing audibility of group delay

- Thread starter charlielaub

- Start date

OP

charlielaub

Senior Member

- Thread Starter

- #22

Actually, this is yet another filter that I developed since writing the article. In this branch of the DFE "family" I double up one or more zeroes in the stopband. The filter is 7th order but if you look at the response there are only two notches - each one is actually a double zero (two at the same frequency). The reason for doing that is that, at the zero, there is a phase rotation of 180 degrees. When the attenuation is not all that deep (e.g. 30 to 40dB) and the phase angle between LP and HP is near zero at Fc (an in phase crossover), at the zero there is a 180 degree phase rotation of the stopband. The result is a sudden, small cancellation within the LP+HP sum. I had the idea to try and double one or more zeroes so that the phase rotation going from one side of the zero to the other is 360 degrees, and remains in phase relatively speaking. This is really only a problem when stopband attenuation is less than about 45 to 50dB and the relative phase angle between LP and HP filters at the crossover point is near zero. But like the DFES crossover it is yet another subtype of the DFE family that is worth checking out. The 7th order crossover I show is not bad, and I have a couple others like it that I have worked up.So we are on the same page which filter is this from your AE article ??

OP

charlielaub

Senior Member

- Thread Starter

- #23

Thanks, 617. You are correct in that, when changing the crossover type and then listening with loudspeakers, there will likely be off-axis response changes and other changes that could create their own audible differences such that it might be difficult to claim that the audible differences are from group delay alone with reasonable certainty. Unfortunately I cannot do anything about that. Researchers have instead used headphones for testing and have synthesized various group delay profiles that probe for the audibility threshold in one way or another but do not necessarily correspond to a real-world allpass group delay response.Charlie, first of all it's good to see you posting here and still meddling with speakers. I've been following you for a long time now.

My expectation, which I suspect you share, is that listeners will probably not be able to detect, and certainly won't be able to articulate a preference between samples. It will be interesting if I am wrong.

However, won't real world speakers demonstrate different off axis behavior with differing crossovers? It seems as if this would be a much more significant difference than what we are scrutinizing here.

I could ask participants to try the "test" using first loudspeakers and then headphones (or vice versa), or ask them to indicate what playback device was used to peform the test. This would allow me to report the results individually for headphones and for loudspeakers.

I made a post about my efforts to set up group delay audibility testing on my Faccebook feed and I got this comment from Sean Olive:

This corresponds to what the literature reports regarding the difference between headphone and loudspeaker playback.Sean Olive

We did an experiment years ago on group delay as part of the Athena Project. It measured the detection thresholds using an Up Down Transform Method using different signals like impulses, noise and music. If you go from impulses: headphones to music in rooms the thresholds go way up as you might expect. We never published it.

These filters look more like Inverse Chebyshev filters since there is no ripple in the passbandActually, this is yet another filter that I developed since writing the article. In this branch of the DFE "family" I double up one or more zeroes in the stopband. The filter is 7th order but if you look at the response there are only two notches - each one is actually a double zero (two at the same frequency). The reason for doing that is that, at the zero, there is a phase rotation of 180 degrees. When the attenuation is not all that deep (e.g. 30 to 40dB) and the phase angle between LP and HP is near zero at Fc (an in phase crossover), at the zero there is a 180 degree phase rotation of the stopband. The result is a sudden, small cancellation within the LP+HP sum. I had the idea to try and double one or more zeroes so that the phase rotation going from one side of the zero to the other is 360 degrees, and remains in phase relatively speaking. This is really only a problem when stopband attenuation is less than about 45 to 50dB and the relative phase angle between LP and HP filters at the crossover point is near zero. But like the DFES crossover it is yet another subtype of the DFE family that is worth checking out. The 7th order crossover I show is not bad, and I have a couple others like it that I have worked up.

OP

charlielaub

Senior Member

- Thread Starter

- #25

Yes, they do at first glance look like Chebyshev filters, but they are not identical because they are designed to have a relatively flat LP+HP sum and there is some amount of ripple in the crossover sum (see below).These filters look more like Inverse Chebyshev filters since there is no ripple in the passband

There is an online document at audioXpress that has more complete details on the crossovers, including a plot of the LP+HP sum with a scale of +/- 1dB. You can download it here:

The plots of the crossover sum look like this (this time it's one of the DFE filters in the document):

The PDF document available on line at aX also has all of the info needed to implement the filters, presented in tables. But you need to be able to create LP-notch and HP-notch filters in order to do so, and those are not found on any DSP unit. I published another aX article the prior month (AUG 2024) on those filters, which are the building blocks of the DFE filters as well as elliptical filters. In the supplementary info for that article I provide a short C++ program to generate the IIR biquad coefficients, so if you have e.g. a MiniDSP with the advanced biquad feature you can at least implement these for a given sample rate. I do it in software DSP using my LADSPA plugins.

OP

charlielaub

Senior Member

- Thread Starter

- #26

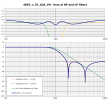

And here is an example of why I started to look into "zero doubling". The LP and HP filters are in phase at Fc but the stopband depth is not large. In between the stopband zero notches the phase is reversed. This causes the stopband to interact with the passband of the other filter and cancel it out a bit. If you look at the upper plot, the green and orange traces are the LP and HP filters and the bold blue line is the sum. You can see that the sum is less than the LP or HP filters in places, and this is due to the partial cancellation. It only amounts to around 0.1dB but I don't consider it to be "good". This is one reason why the lower orders or 4 or below of DFE filters do not really work all that well and you need order 5 or higher to avoid these effects.

Attachments

Tracks with bass and x-over in the bass region. Eg

www.audiosciencereview.com

www.audiosciencereview.com

Audibility of group delay at low frequencies

In the spectrogram tab You can change the appearance settings as well -- just change it to whatever method or resolution is needed to see things clearer. FDW doesn't affect the spectrogram at all, but it can clear up some of the reflection-induced rotations in seen in the phase traces. From...

www.audiosciencereview.com

www.audiosciencereview.com

Even though I am getting somewhat out of the scope and demands of the OP @charlielaub, but...

Just for your info and reference, I used/use not "music" tracks, but specially prepared multiple-Fq tone burst tracks/signals (including the time-shifted multiple-Fq rectangular sine tone burst signal sequences I used in #493 and #504) for my measurement and (perfect) tuning of "time alignment" among all the SP drivers in my DSP based multichannel multi-SP-driver multi-amplifier fully active audio system.

If you would be interested, please visit these posts on my project thread for the details;

- Precision measurement and adjustment of time alignment for speaker (SP) units: Part-1_ Precision pulse wave matching method: #493

- Precision measurement and adjustment of time alignment for speaker (SP) units: Part-2_ Energy peak matching method: #494

- Precision measurement and adjustment of time alignment for speaker (SP) units: Part-3_ Precision single sine wave matching method in 0.1 msec accuracy: #504, #507

- Measurement of transient characteristics of Yamaha 30 cm woofer JA-3058 in sealed cabinet and Yamaha active sub-woofer YST-SW1000: #495, #497, #503, #507

- Identification of sound reflecting plane/wall by strong excitation of SP unit and room acoustics: #498

- Perfect (0.1 msec precision) time alignment of all the SP drivers greatly contributes to amazing disappearance of SPs, tightness and cleanliness of the sound, and superior 3D sound stage: #520

- Not only the precision (0.1 msec level) time alignment over all the SP drivers but also SP facing directions and sound-deadening space behind the SPs plus behind our listening position would be critically important for effective (perfect?) disappearance of speakers: #687

And, you can find the details of my latest system setup here;

- The latest system setup of my DSP-based multichannel multi-SP-driver multi-amplifier fully active audio rig, including updated startup/ignition sequences and shutdown sequences: as of June 26, 2024: #931

If you would be seriously interested in using the test tone signal tracks I prepared and used in these measurements and tunings, and if you would be also interested in the contents and PDF booklet of "Sony Super Audio Check CD" (ref. #651, #750, #760), please simply PM me writing your wish.

Furthermore, many of the "music tracks" within my "Audio Reference/Sampler Music Playlist" consists of 60 tracks (summary ref. here as independent thread) would be very much suitable for your subjective listening confirmation after the delay-compensation or time-alignment tunings, I believe.

Again, if you would be seriously interested in using/listening such intact tracks of the "Reference Playlist", please simply PM me writing your wish.

Just for your info and reference, I used/use not "music" tracks, but specially prepared multiple-Fq tone burst tracks/signals (including the time-shifted multiple-Fq rectangular sine tone burst signal sequences I used in #493 and #504) for my measurement and (perfect) tuning of "time alignment" among all the SP drivers in my DSP based multichannel multi-SP-driver multi-amplifier fully active audio system.

If you would be interested, please visit these posts on my project thread for the details;

- Precision measurement and adjustment of time alignment for speaker (SP) units: Part-1_ Precision pulse wave matching method: #493

- Precision measurement and adjustment of time alignment for speaker (SP) units: Part-2_ Energy peak matching method: #494

- Precision measurement and adjustment of time alignment for speaker (SP) units: Part-3_ Precision single sine wave matching method in 0.1 msec accuracy: #504, #507

- Measurement of transient characteristics of Yamaha 30 cm woofer JA-3058 in sealed cabinet and Yamaha active sub-woofer YST-SW1000: #495, #497, #503, #507

- Identification of sound reflecting plane/wall by strong excitation of SP unit and room acoustics: #498

- Perfect (0.1 msec precision) time alignment of all the SP drivers greatly contributes to amazing disappearance of SPs, tightness and cleanliness of the sound, and superior 3D sound stage: #520

- Not only the precision (0.1 msec level) time alignment over all the SP drivers but also SP facing directions and sound-deadening space behind the SPs plus behind our listening position would be critically important for effective (perfect?) disappearance of speakers: #687

And, you can find the details of my latest system setup here;

- The latest system setup of my DSP-based multichannel multi-SP-driver multi-amplifier fully active audio rig, including updated startup/ignition sequences and shutdown sequences: as of June 26, 2024: #931

If you would be seriously interested in using the test tone signal tracks I prepared and used in these measurements and tunings, and if you would be also interested in the contents and PDF booklet of "Sony Super Audio Check CD" (ref. #651, #750, #760), please simply PM me writing your wish.

Furthermore, many of the "music tracks" within my "Audio Reference/Sampler Music Playlist" consists of 60 tracks (summary ref. here as independent thread) would be very much suitable for your subjective listening confirmation after the delay-compensation or time-alignment tunings, I believe.

Again, if you would be seriously interested in using/listening such intact tracks of the "Reference Playlist", please simply PM me writing your wish.

Last edited:

OP

charlielaub

Senior Member

- Thread Starter

- #29

So, getting back to testing methodology...

Here is my current idea:

The questions is how do I implement this test over the internet so that it is available to anyone to try!

I can generate all of the processed music tracks myself. Is there some sort of platform for hosting these sorts of tests online?

Here is my current idea:

- Th4e trials will consist of a series of ABX comparison tests.

- A or B will be the original audio, the other of A or B will be the audio processed through the crossover filters, and X will be a repeat of either A or B.

- The order of A,B could be random, e.g. the original audio will not always be presented first. The X track will always be presented last.

- The audio track used could be varied from among a couple of tracks, or all different tracks, of about 10 seconds each, instead of the same track over and over again.

- The processed audio uses a variety of different crossovers (my own and other types as well) and would be presented in random order. I could design a couple of crossovers to have what should be audible levels of group delay. Unfortunately, for my own DFE crossovers, there may be minor variations in the passband, e.g. ripple of up to +/- 0.2 dB that might make it possible for listeners to identify a difference from the unprocessed audio not caused by group delay. A flaw, but unfortunately that is how the cookie crumbles. In many cases the ripple amplitude will be less than 0.2dB and likely not audible.

- The listener will be asked to match X to either A or B. This is the test.

- Listeners could proceed with the testing until the end, or stop early if they get fatigued (or bored!). Crossovers would be presented randomly so even if most people exit early there would still be coverage of all the different crossovers under test.

The questions is how do I implement this test over the internet so that it is available to anyone to try!

I can generate all of the processed music tracks myself. Is there some sort of platform for hosting these sorts of tests online?

Last edited:

OP

charlielaub

Senior Member

- Thread Starter

- #30

OK, this looks encouraging as far as software goes. I just discovered it and need to read it through carefully. Has anyone used it for testing?

github.com

github.com

GitHub - jaakkopasanen/ABX: Web app for AB and ABX listening tests

Web app for AB and ABX listening tests. Contribute to jaakkopasanen/ABX development by creating an account on GitHub.

somebodyelse

Master Contributor

- Joined

- Dec 5, 2018

- Messages

- 5,355

- Likes

- 4,947

A couple of related threads:

https://www.audiosciencereview.com/...lace-spoiler-probably-yes.29353/#post-1028225

https://www.audiosciencereview.com/...sts-com-listening-tests-in-the-browser.22110/

Or you could ask @jaakkopasanen

https://www.audiosciencereview.com/...lace-spoiler-probably-yes.29353/#post-1028225

https://www.audiosciencereview.com/...sts-com-listening-tests-in-the-browser.22110/

Or you could ask @jaakkopasanen

I think the main issue with audio via the browser is that you have little to no control over what the playback system looks like. It always goes through the OS mixer, so resampling is almost unavoidable. There may also be differences in how the browsers handle audio themselves.

Given all that, I still think that most of this should not be too critical. The vast advantages that you get for conducting such an experiment at scale should way much heavier than the possible sound quality degradation.

Given all that, I still think that most of this should not be too critical. The vast advantages that you get for conducting such an experiment at scale should way much heavier than the possible sound quality degradation.

It might be nice if the interface allowed users to describe their playback units. Many transducers have crossovers in them after all, not sure what that looks like with BA IEMs, and headphones tend not to be very linear. But probably good enough for compartive measurements.

OP

charlielaub

Senior Member

- Thread Starter

- #34

I am rethinking about what I want to test, and I think ABX testing is not what I want to do.

I want to know the threshold of audibility of the group delay response (and any other effects like FR deviations) for a variety of crossover filters. I am able to put forward several choices that the listener can pick from or rank. For example, WRT the purported or assumed audibility threshold I can present N versions of a short audio track with N-1 different levels of group delay plus the original audio. The subject can play the audio corresponding to each one as many times as they would like. The subject is asked to choose ALL options that seem to be unaltered audio and leave any that seem altered unchecked. The options would be listed in a random order, however, I will of course know which is which.

After many trials by various subjects I should obtain a sort of histogram that represents the cumulative voting on the limit of audibility for the crossovers that were auditioned, and for the particular audio selection. Maybe something like this (0=unaltered audio, 10=audio with audible effects from group delay, 1 to 9 = increasing levels of group delay, the y-axis is the fraction of the test subjects who selected each audio track 0..10 as sounding "unaltered"):

This is in a way like having the user perform N AB tests in parallel.

The next and most important question is how to analyze (statistically) the historgram data? It seems to me that the histogram that I will obtain might be similar to a cumulative binomial probability distribution. If we assume those sorts of descriptive statistics apply, I can just convert it to the binomial form and then find the mean and calculate the confidence limits of the mean, or similar statistics. I would be able to provide the group delay corresponding to the mean. This would be reported as the GD audibility threshold for the particular audio track used in the test. Using a more statistical approach, given the number of times the test was taken I can calculate the fraction that should be required for 95% confidence and then see where the cumulative distribution crosses that value. This would be the audibility limit to 95% confidence.

This could be repeated for several audio tracks, since different sorts of sounds supposedly influence the audibility threshold. I would create one web page for each test, and then could easily add new tests (each test will have a new and different audio track) at any time. The user does not get any immediate feedback from their trial such as a score, but then this might also discourage users from trying to go back and get a better score, etc.

What I like about this approach is that the user is in control of how many times they must listen to the audio before making their selections.

Please share your thoughts on this approach and my assumptions about the statistics! Thanks

I want to know the threshold of audibility of the group delay response (and any other effects like FR deviations) for a variety of crossover filters. I am able to put forward several choices that the listener can pick from or rank. For example, WRT the purported or assumed audibility threshold I can present N versions of a short audio track with N-1 different levels of group delay plus the original audio. The subject can play the audio corresponding to each one as many times as they would like. The subject is asked to choose ALL options that seem to be unaltered audio and leave any that seem altered unchecked. The options would be listed in a random order, however, I will of course know which is which.

After many trials by various subjects I should obtain a sort of histogram that represents the cumulative voting on the limit of audibility for the crossovers that were auditioned, and for the particular audio selection. Maybe something like this (0=unaltered audio, 10=audio with audible effects from group delay, 1 to 9 = increasing levels of group delay, the y-axis is the fraction of the test subjects who selected each audio track 0..10 as sounding "unaltered"):

This is in a way like having the user perform N AB tests in parallel.

The next and most important question is how to analyze (statistically) the historgram data? It seems to me that the histogram that I will obtain might be similar to a cumulative binomial probability distribution. If we assume those sorts of descriptive statistics apply, I can just convert it to the binomial form and then find the mean and calculate the confidence limits of the mean, or similar statistics. I would be able to provide the group delay corresponding to the mean. This would be reported as the GD audibility threshold for the particular audio track used in the test. Using a more statistical approach, given the number of times the test was taken I can calculate the fraction that should be required for 95% confidence and then see where the cumulative distribution crosses that value. This would be the audibility limit to 95% confidence.

This could be repeated for several audio tracks, since different sorts of sounds supposedly influence the audibility threshold. I would create one web page for each test, and then could easily add new tests (each test will have a new and different audio track) at any time. The user does not get any immediate feedback from their trial such as a score, but then this might also discourage users from trying to go back and get a better score, etc.

What I like about this approach is that the user is in control of how many times they must listen to the audio before making their selections.

Please share your thoughts on this approach and my assumptions about the statistics! Thanks

Last edited:

OP

charlielaub

Senior Member

- Thread Starter

- #35

OK, I have managed to put together a test of a form that could be used to conduct the experiment as I described above. It's based on a contact form. The user can optionally provide some info about their system (headphones, loudspeakers, etc.). I could also use mandatory radio buttons to indicate playback via headphones or loudspeakers. The "business" part of the form contains N checkboxes and there will be N buttons that activate audio playback for each track (only two for now, with a couple of random 3 second audio clips just to test functionality).

The test subject clicks the buttons to listen to the processed audio tracks and then checks a checkbox to indicate whether they think that audio track contains audible artifacts or not. One button could be the "unprocessed" audio track as a reference and the others would be left to the user to judge as sounding the same as the reference, or with some artifacts.

Here is a link to the test form. You can play around with it if you would like.

TEST FORM TRIAL PAGE

Looks like this:

When the submit button is clicked my web host records the info from the form. I can later download that into Excel to process/analyze the results.

This was easy to put together and while it is a bit crude I think it will meet my needs.

The test subject clicks the buttons to listen to the processed audio tracks and then checks a checkbox to indicate whether they think that audio track contains audible artifacts or not. One button could be the "unprocessed" audio track as a reference and the others would be left to the user to judge as sounding the same as the reference, or with some artifacts.

Here is a link to the test form. You can play around with it if you would like.

TEST FORM TRIAL PAGE

Looks like this:

When the submit button is clicked my web host records the info from the form. I can later download that into Excel to process/analyze the results.

This was easy to put together and while it is a bit crude I think it will meet my needs.

Last edited:

How would the subject know without a reference? If I hear two pieces of music, and one of them is (obviously) altered in some way, how do I know if it’s A or B? My only option here would be to choose either the one that I like most, or the inverse: the one I dislike the most.The subject is asked to choose ALL options that seem to be unaltered audio and leave any that seem altered unchecked

For such a test, I would actually randomize every unique sample option, meaning that it’s possible to have multiple of the same sample in the set.For example, WRT the purported or assumed audibility threshold I can present N versions of a short audio track with N-1 different levels of group delay plus the original audio.

Another option that you may consider is to indeed have all unique options, and have the subjects rank them from least altered to most altered.

But again, without a reference, these options may be tricky to conclude anything from.

I have no buttons to play audio but looks good

OP

charlielaub

Senior Member

- Thread Starter

- #38

Oops My bad. I started with that form and then had to modify it in an online HTML editor to add the buttons, etc.

Let me up load it to my web host and then I will post the link.

Let me up load it to my web host and then I will post the link.

Last edited:

OP

charlielaub

Senior Member

- Thread Starter

- #39

Here ya go. Give it a try:

TEST FORM TRIAL PAGE

Please keep in mind this is just to figure out how to put the necessary elements on a page and get the data returned to me. This is NOT an actual test!

TEST FORM TRIAL PAGE

Please keep in mind this is just to figure out how to put the necessary elements on a page and get the data returned to me. This is NOT an actual test!

Works now.Here ya go. Give it a try:

TEST FORM TRIAL PAGE

Please keep in mind this is just to figure out how to put the necessary elements on a page and get the data returned to me. This is NOT an actual test!

Similar threads

- Replies

- 10

- Views

- 2K

- Replies

- 2

- Views

- 536

- Replies

- 7

- Views

- 1K

- Replies

- 32

- Views

- 3K