Hello again gang:

It has been a busy week, and since I don't generally hang out with the friendly, pitchfork-wielding crowd here, I was not aware of this thread until Thursday when someone sent me a link.

Needless to say, we were shocked by the measurements you posted and the conclusion you drew to condemn our product as not blocking the path of leakage currents and transmitting noise and harmonics from whatever PS is used to "energize"/charge the UltraCap LPS-1.

Happily I can report and prove that your measurements and conclusions are entirely incorrect.

The LPS-1 does not pass ANY AC leakage or noise from its charging supply.

What you are seeing is radiated--into the air--harmonics from the Mean Well (and iFi iPower as you showed even worse), from the brick itself, and very much from the DC cable from the SMPS to the LPS-1. The cables from your DAC to your analyzer are picking this up! (And we suspect that the output impedance of your bus-powered DAC may be a bit high, causing greater sensitivity to this--since our tests show that one cheap DAC, the Micca OriGen+, was sensitive while others on hand were not.)

Before posting our graphs and photos and giving you suggestions on proving this for yourself, allow me to address a couple of other points mentioned in your opening assessment of our product:

a) You mentioned the interval at which the banks switch as 3 seconds when powering the ISO REGEN. The bank change-over rate is entirely dependent on the load. Since the ISO REGEN is the sole source of power for your bus-powered DAC, the overall load is 100mA (the ISO REGEN itself) plus whatever the DAC is drawing (perhaps 400mA? easy to check with a USB ammeter).

b) You wrote:

"FPGAs are normally used when CPUs are not fast enough to do the job in software. I don't know of any design characteristics of this type of device as to require an FPGA. I am at a loss as to why this part is used other than maybe more familiarity of the designer with hardware than software. Or a way of making the design difficult to copy."

Without debating the quizzical nature of the first sentence (why would we use a full CPU for our s/w when a small FPGA is the perfect place to load and run all the code?), there are a great many reasons--having to do with charging, diagnostics, resets, over-current modes and recovery--supporting our choice to use an FPGA.

In fact, the entire design was prototyped originally with all discrete circuitry--using about 100 more parts. It became apparent that for reliability, flexibility (we can adapt the core tech to larger applications), and cost, the FPGA architecture was the only reasonable way to go.

As for "more familiarity of the designer with hardware than software," this again makes no sense, unless you are just tossing out another glib insult. There is a ton of code loaded into the board, and we used the right part for the job.

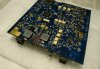

As for making the design difficult to copy, I'd like to see someone try. It is a 4-layer board with 4 power domains, a lot of fairly costly parts, and a lot of unique thinking went into it. It is also our lowest margin product as I was determined to price it for volume sales.

c) Your legend on the photo of the bottom of the board (the side with all the ultracaps) has the input and output jacks reversed.

d) Your measurements and any judgement of the merits of the LPS-1--aside from being incorrect about leakage blocking--are of course not assessing either of its other 2 key attributes.

- The LPS-1 offers extraordinarily low output impedance (across a wide bandwidth, and especially at low frequencies which we think is most important for its application). Plotted measurements of this are tricky to perform, but we plan to publish impedance plots in a few weeks.

- The cascaded TI TPS7A700 output regulators we use are the lowest noise (wideband) and highest PSRR integrated devices available in the 1A class. This is a VERY quiet supply. Again, environmental factors make it hard to produce clean plots, but we are getting there.
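As a rough illustration of point (a) above, here is a minimal sketch of how the bank change-over interval might scale with load. It assumes each bank hands over after delivering the same usable charge, so the interval is inversely proportional to load current. That model is an idealization for illustration, not a description of the actual LPS-1 firmware. The function name is hypothetical, and the 400mA DAC draw is the guess from point (a), not a measured figure.

```python
def scaled_interval_s(ref_interval_s: float, ref_load_a: float, new_load_a: float) -> float:
    """Estimate the bank change-over interval at a different load.

    Assumption (illustrative only): each bank switches out after delivering
    the same usable charge Q = I * t, so the interval scales as 1 / load.
    """
    if ref_load_a <= 0 or new_load_a <= 0:
        raise ValueError("load currents must be positive")
    return ref_interval_s * ref_load_a / new_load_a


# Figures taken from the post: roughly 3 s observed, 100 mA for the
# ISO REGEN itself, plus a guessed 400 mA for the bus-powered DAC.
ISO_REGEN_A = 0.100
DAC_GUESS_A = 0.400

if __name__ == "__main__":
    total_a = ISO_REGEN_A + DAC_GUESS_A
    alone_s = scaled_interval_s(3.0, total_a, ISO_REGEN_A)
    print(f"ISO REGEN alone: roughly {alone_s:.0f} s between change-overs")
```

Under those assumptions, removing the DAC load would stretch the interval by about a factor of five. A USB ammeter reading of the real DAC current would replace the guessed value.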

--------------------

Okay, on with the show!

LEAKAGE: Defined in the current discussion as AC harmonic current traveling over DC connections.

Your supposition, which you tried to support with the measurements you made, is that any leakage current from the PS "energizing"/charging the LPS-1, is going through the LPS-1, coming out on its DC cable, going into whatever it is connected to (in this case the downstream side of the ISO REGEN), then through the USB cable to the DAC, and out of the DAC into your analyzer. Such would be quite damning if true, and would render our entire bank-switching design superfluous. Might as well offer a traditional low noise LPS.

Except that what you measured was not leakage coming through the output of the LPS-1!

What I am going to show with the following photos and plots is that simply having the Mean Well (or other SMPS) plugged into the wall allows it to radiate (from the brick itself and from the cable) into your single-ended RCA cables from your DAC to the analyzer. As you have pointed out, your analyzer is very sensitive and the harmonics you are picking up are more than 115dBV down.

By the way, our tests lead us to believe that almost none of what you are seeing is making its way from the SMPS into the wall and to your analyzer via mains ground pins, but without knowing all of your setup we cannot be sure. In our own tests the wall connections did not make a lot of difference.

It will be easy for you to demonstrate any of this for yourself, and maybe later we can discuss a proper method for you to measure actual leakage currents from various power supplies directly (it is not hard, but we need to know about the input grounding of your analyzer to instruct you correctly).

Here we have the Mean Well SMPS energizing the LPS-1, which is powering an ISO REGEN, which is powering the Micca OriGen+ DAC you are familiar with. The 3.5mm>RCA cable in the foreground goes to our HP spectrum analyzer (12kHz signal on all graphs--just out of the displayed band):

View attachment 8233

Here is the plot for it.

Please note that for all our plots, the vertical axis is in dBm (versus dBV for Amir's graphs) and that's a 13 dB difference, so the marker at -98.6 dBm is equivalent to -111.6 dBV.

View attachment 8232
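The dBm-to-dBV offset quoted above can be checked with a short sketch. It assumes the dBm readings are referenced to a 50 ohm input, which is what a 13 dB offset implies. The post does not state the reference impedance, so treat the default as an assumption. The function names are illustrative.

```python
import math


def dbm_to_dbv(dbm: float, impedance_ohms: float = 50.0) -> float:
    """Convert a level in dBm to dBV for a given reference impedance.

    Assumption: the reading is referenced to `impedance_ohms` (50 ohms by
    default, inferred from the 13 dB offset rather than stated in the post).
    """
    # P = V^2 / R and 0 dBm = 1 mW, so dBV = dBm + 10*log10(R / 1000).
    return dbm + 10.0 * math.log10(impedance_ohms / 1000.0)


def dbv_to_volts(dbv: float) -> float:
    """Convert dBV (referenced to 1 V RMS) to volts RMS."""
    return 10.0 ** (dbv / 20.0)


if __name__ == "__main__":
    marker_dbv = dbm_to_dbv(-98.6)
    print(f"-98.6 dBm is {marker_dbv:.1f} dBV")
    print(f"which is {dbv_to_volts(marker_dbv) * 1e6:.2f} uV RMS")
```

With a 50 ohm reference the offset is 10*log10(0.05), about -13.0 dB, which reproduces the -111.6 dBV figure. A different reference impedance (600 ohms, for instance) would give a different offset, so the conversion only holds if the analyzer really is referenced to 50 ohms.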

Now here is the same setup, only this time the LPS-1 is being powered by a quiet linear supply (offscreen, our own choke-filtered, dual-rail, 5-7 amp JS-2). BUT NOTE THAT THE MEAN WELL IS STILL PLUGGED INTO THE WALL:

View attachment 8234

And the near identical plot for it:

View attachment 8235

Now we remove the Mean Well's power cord:

View attachment 8236

And ta-da, a much cleaner plot, proving that what was being measured was NOT leakage coming through the LPS-1's DC output. The active bank (supplying the load) has a pile of opto-isolators (those big white parts across power domain "moats") keeping it isolated from the side that is charging.

View attachment 8237

Now like the DACs you used, the Micca OriGen+ was pretty sensitive to the radiated harmonics of the Mean Well and other SMPS charging units (you already saw the even worse iFi iPower). But every DAC is different--likely owing to its output impedance and the analog cables used. So here is the same setup, with the Mean Well powering the LPS-1, but with an HRT MusicStreamer DAC:

View attachment 8238

And its much quieter results, with 120Hz noise down at -131dBV:

View attachment 8240

---------------------------------------------

John got called away today, but we will follow up soon with some direct measurements of power supply leakage--from the DC jacks that is. We will show plots of the Mean Well itself, and of our UltraCap LPS-1 being powered by it (or any other charger). The latter should pretty much show up as a flat line. We might also post the leakage of some other supplies we have measured (like the iFi iPower, though that is not a pretty sight and it would be impolite of us).

Lastly, I want to close by discussing and disclosing something interesting we found during all the kerfuffle here--mostly when we were looking into your ISO REGEN power supply measurements, which we now think were a combination of actual leakage plus the radiated harmonics issue. It concerns the Mean Well power supplies.

Aside from the obvious fact that the 22-watt unit we originally chose for inclusion with the original USB REGEN is oversized (we could easily have gotten away with a 5-8W model) and so puts out more leakage than a smaller wall-wart would, there is another factor at play that is less obvious.

The world-wide governing bodies that regulate the types, efficiencies, and emissions of all AC-mains-connected devices have been steadily changing the rules over the years. I won't bore you with the details--feel free to Google it--but in 2016 the law changed to require power adaptors to be certified to Level VI. Being as about 50% of our business is overseas, conforming to the current laws and standards is important--customs offices will and do reject importation of power products that are not fully certified. (Germany is the worst; must have your papers!)

The original Mean Well model we chose in 2015 (GS25A07-P1J), met the Level V certification for efficiency and emissions. You can see this model in our web photos as it has a 16AWG coaxial DC cable. Middle of 2016, after the laws requiring Level VI certification took effect, my Mean Well importer (actually I bring in hundreds straight from Taiwan as the USA distributors suck) informed me that GS25A07-P1J was being discontinued and replaced with the Level VI-compliant GST25A07-P1J. Here is a photo of the two versions (don't mind the M&Ms, I love dark chocolate M&Ms as they keep me from eating too much chocolate):

View attachment 8241

As you can see, the new version--aside from having a ferrite near the box (as well as near the DC plug end, as does the older version)--has 16AWG zip cord for its cable. I was not terribly happy about it at the time (mainly because the zip cord gives it a cheaper feel and is not as supple as the coax). But aside from a slight efficiency improvement, and likely lower emissions at ultra-high frequencies which is what the EU nannies are most paranoid about--I could find no differences on their detailed data sheets.

Well... it turns out that while the units have the same specs, with the newer Level VI version being quieter in the ultrasonic range, their leakage profiles are a little different, and the zip-cord DC cable radiates a bit more AC 50/60Hz harmonics. So while it does not matter much for the LPS-1 (usually the brick and the cord go away to the floor and are not near RCA cables) I will be looking into this with regards to the default supply for the ISO REGEN.

Right now, I am not finding any small, Class I, Level VI-compliant, low-leakage "medical" supplies that have IEC320-C14 3-prong AC inlets--just Class II units with two-wire C8 jacks. Those are problematic for export and force my hand with regards to providing international plugs. Might have to accept that headache at some point.

--------------------

Well that about wraps it up for my Saturday.

Amir, I suggest that you play about a bit with various SMPS units plugged in and with their cables local to your setup. We think you will find that the single-ended cables to your analyzer are what are picking up the mains harmonics. Different analog cables, different DACs--even a change of AP input impedance (does it have a 50 ohm input?)--will greatly alter what you see.

I know you love to measure noise and large jitter anomalies with your analyzer--at the output of DACs--and that's fine. But characterizing the performance of a low-noise power supply requires other methods.

As well, regarding the widespread reports of people using improved clocking, supplies, signal integrity, USB cables, and many server side improvements:

Perhaps the scientific curiosity in you can lead you towards deeper study of the mechanisms at play. I bet if you were not so quick to dismiss so much out of hand--because your current measures do not immediately reveal variations--you could join others in researching how the phase-noise fingerprints of upstream variables somehow are audible even when you don't see it on your screen. (Very close in PN, right around the shaft of the signal skirt may be one place to look. More dynamic tests as others are doing may also yield insights.)

John is developing an advanced test system (I just spent a couple grand this week on development boards for the project) with the hope and expectation that we will at some point be able to blow the doors off all this and come forth with some very clear results, providing vindication for some of the firms whose legitimate products are regularly derided by a few as "snake oil." It just may take a while. Designing and producing products that people enjoy is our first priority, but for more than one project we are working on (an alternative to USB interface) we really need these more advanced measurement techniques in place to finalize and prove the efficacy (this in part because the proprietary nature of the tech we will use, unless patented, will require us to do more "show" (results) than "tell" (about the how)). And sanding chips may even be in order.

I am 100% certain that most of the ASR regulars are going to quickly take pot shots at nearly everything in this long post. I'll tell you upfront that I am not going to dignify snarky, derisive comments with any reply. Nor will I indulge in taunts and BS challenges regarding blind tests. Our happy customers prove our products every day. I'm a small operation working 60+ hours a week to keep everything on track. There are LOTS of other firms--a number of them with similar products to ours--who you could as well challenge to blind-test their products that you don't believe in. I'm the easy target, but I will choose to walk away from people who don't show a modicum of civility and respect.

Thank you and good night,

Alex Crespi

UpTone Audio LLC