Hello,

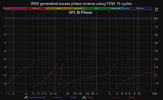

I had already corrected my bumps and minor dips with REW and APO. I have a pair of Genelec 8030s on a table and an SVS 1000 Pro. The crossover is digital at 65 Hz, done by the sound card. I learned of rePhase recently.

I only did the mids and highs, as I read this was best, and unwrapping the phase at low frequencies seems complicated.

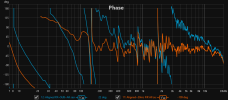

The 3 pictures : is first the correction estimate of the speaker (i took a close range reading (40 cm) and used paragraphic phase eq,

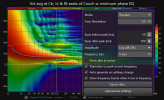

2nd is the result at my seating position and the

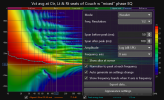

3rd was before correction.

I tried switching the convolver in APO on and off and can't notice any difference at 3 listening positions. I asked a friend, who couldn't hear a difference either. Is it because the Genelecs have an active crossover, or should rePhase be used to fine-tune the phase between the sub and the speakers? I've read in places that phase cannot be heard (other than sound being cancelled and less loud).

thx for any insight
