Haint

Senior Member

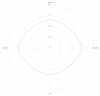

It doesn't really work like that. The correlation between the formula's predicted preference ratings and the actual average preference ratings listeners gave the speakers in the formal blind tests was 0.86. That doesn't mean the ratings matched 86% of the time and didn't match the other 14% of the time. The 0.86 figure is the (sample) Pearson correlation coefficient, a measure of the linear correlation between the predicted and actual preference ratings: 1 means perfect positive linear correlation, 0 means no linear correlation at all (and -1 would mean perfect negative correlation). Roughly speaking, it reflects how tightly the data points cluster around the linear regression line (line of best fit) drawn through them, relative to their overall spread. In Sean Olive's paper, A Multiple Regression Model for Predicting Loudspeaker Preference Using Objective Measurements: Part II - Development of the Model (scroll down in that link for the correct paper), the correlation between predicted and actual preference ratings can be seen in this graph:

[Graph: predicted vs. measured preference ratings, with line of best fit]
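To make the "how tightly the points cluster around the line" idea concrete, here is a minimal Python sketch using made-up ratings (the numbers are hypothetical, not Olive's data, and the function names are illustrative only). It computes the sample Pearson r from its definition and checks that r² equals 1 - SS_res/SS_tot for the least-squares line, i.e. the share of the variance in the measured ratings that the line accounts for. For r = 0.86 that share is 0.86² ≈ 0.74, which is still not a percentage of "matches".

```python
import math

def pearson_r(x, y):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def fit_line(x, y):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx

def r_squared_from_residuals(x, y):
    """1 - SS_res / SS_tot for the least-squares line; equals pearson_r(x, y) ** 2."""
    slope, intercept = fit_line(x, y)
    my = sum(y) / len(y)
    ss_res = sum((b - (slope * a + intercept)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - my) ** 2 for b in y)
    return 1 - ss_res / ss_tot

# Hypothetical (predicted, measured) preference ratings, NOT from the paper.
predicted = [2.0, 3.5, 4.0, 5.0, 6.0, 6.5]
measured = [2.5, 3.0, 4.5, 4.5, 6.5, 6.0]

r = pearson_r(predicted, measured)
print(f"r = {r:.3f}, r^2 = {r * r:.3f}, "
      f"1 - SS_res/SS_tot = {r_squared_from_residuals(predicted, measured):.3f}")
```

The more the points scatter away from the fitted line, the larger SS_res becomes and the closer r² (and so |r|) falls toward 0.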

The fact that the line of best fit is also the y = x line shows that, on average, the predicted and actual preference ratings have a one-to-one relationship (a slope of 1 and no offset), and the fact that most of the data points lie close to this line shows that the correlation is high (0.86).

I see, thanks for the explanation. So it looks like some of the outliers are way off from the prediction. Is the "Measured Preference Rating" the score the test subjects actually awarded? So for example a predicted 4 was actually a 1.5, or a predicted 5 was actually a 7?