Pardon the interruption, but...

Long ago it bothered me that software could take a sine sweep (no edges) and create a measurement (impulse or step) that does have "edges".

So I set out to find the difference between "real" and "calculated" values.

Real:

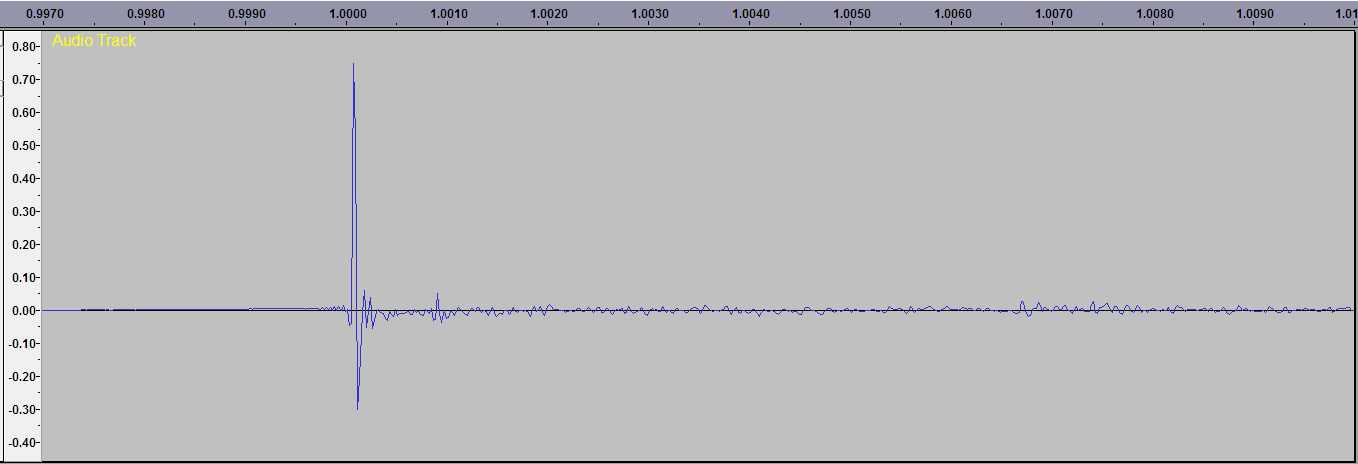

A single full-scale bit sent through the speakers, with the playback recorded in Audacity via a UMIK-1:

Ok, that looks good. A recording of an "impulse".
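For anyone who wants to repeat the "real" test, here is a minimal sketch of building such a one-sample test file. It assumes a 48 kHz, 16-bit mono WAV with the single full-scale sample at 0.5 s; the function names and file name are hypothetical, not how the original test file was made.

```python
import struct
import wave

def one_sample_impulse(sample_rate=48000, seconds=1.0, position_s=0.5):
    """16-bit PCM sample list: silence except one full-scale positive sample."""
    samples = [0] * int(sample_rate * seconds)
    samples[int(sample_rate * position_s)] = 32767  # largest positive 16-bit value
    return samples

def write_wav(path, samples, sample_rate=48000):
    """Write mono 16-bit little-endian PCM samples to a WAV file."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16 bits per sample
        w.setframerate(sample_rate)
        w.writeframes(struct.pack("<%dh" % len(samples), *samples))
```

Calling `write_wav("impulse.wav", one_sample_impulse())` writes the file; play it through the speakers while recording the mic.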

Now let's see what a sine sweep reveals after the mathematicians have had a whack at it:

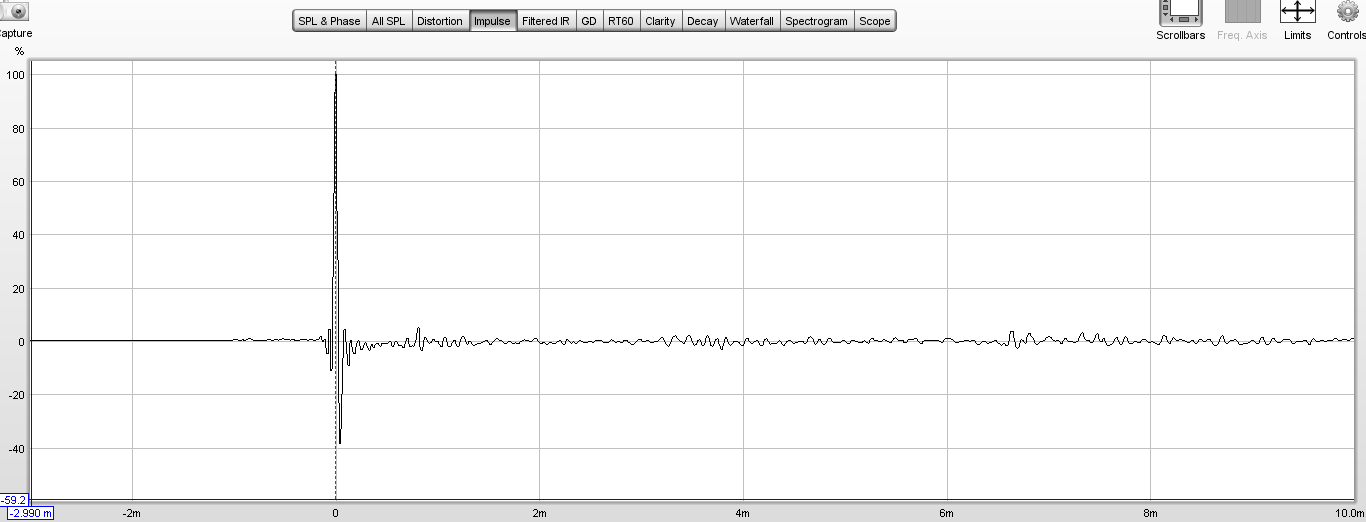

Impulse response calculated from a ten-second, 10 Hz to 24 kHz sweep tone played through the speakers and analyzed in REW:

Test repeated for the step response:

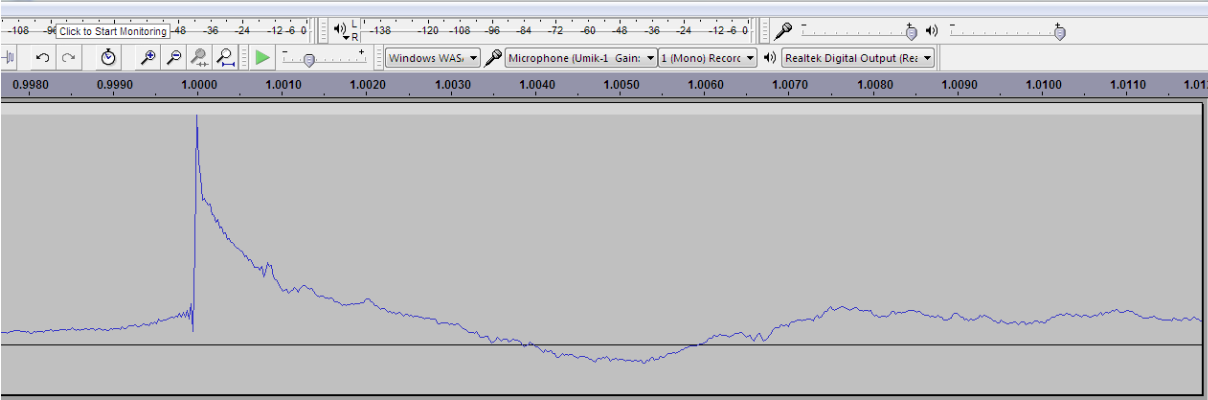

In-room recording of a step, using a 10 Hz square wave played through the speakers as the excitation:
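Every edge of a square wave is a step, and at 10 Hz the edges are half a period (50 ms) apart, so each recorded edge shows 50 ms of step response before the opposite-polarity edge arrives. A minimal sketch of such an excitation, assuming 48 kHz sampling and a hypothetical amplitude of 0.5 of full scale for headroom:

```python
import numpy as np

def square_wave(freq_hz=10.0, sample_rate=48000, seconds=1.0, amplitude=0.5):
    """Bipolar square wave; each polarity flip is one step edge."""
    half_period = int(round(sample_rate / (2 * freq_hz)))  # samples between edges
    n = np.arange(int(sample_rate * seconds))
    # Even-numbered half-periods are positive, odd-numbered ones negative.
    return np.where((n // half_period) % 2 == 0, amplitude, -amplitude)

fs = 48000
x = square_wave(sample_rate=fs)
edges = np.flatnonzero(np.diff(x)) + 1  # sample indices where the level flips
```

With these settings the edges fall every 2400 samples, i.e. every 50 ms.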

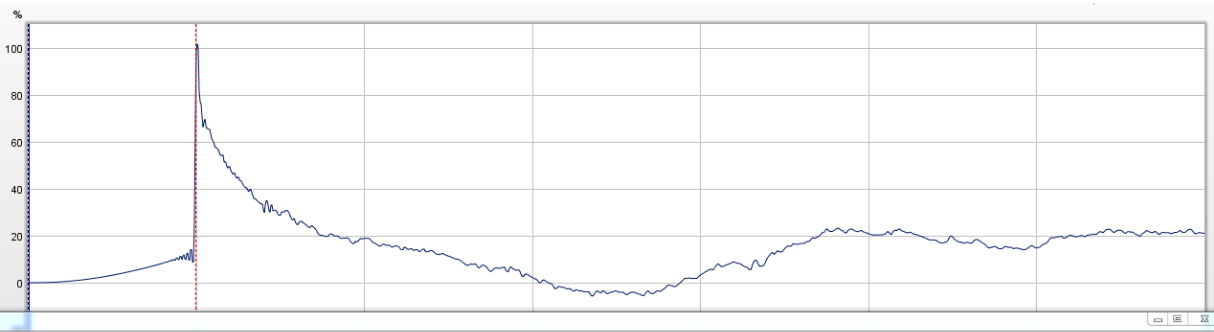

And the step response calculated from a sine sweep played through the speakers:
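Getting from the calculated impulse to the calculated step needs no new measurement: a unit step is the running sum of unit impulses, so for a linear, time-invariant system the step response is the running sum of the impulse response. A minimal sketch of that relationship on a hypothetical first-order low-pass; that REW does something equivalent internally is an assumption, not its documented implementation.

```python
import numpy as np

def step_from_impulse(h):
    """Discrete step response: running sum (discrete integral) of the impulse response."""
    return np.cumsum(h)

# Hypothetical system: first-order low-pass, h[n] = (1 - a) * a**n
a = 0.9
n = np.arange(200)
h = (1 - a) * a ** n
s = step_from_impulse(h)

# Cross-check: driving the same system with a unit step gives the same result.
s_direct = np.convolve(np.ones(len(h)), h)[: len(h)]
```

The step response here rises from 0.1 toward 1.0, and `s` matches `s_direct`.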

At that point I decided that the tone sweep was sufficient to characterize what the speakers were doing in the room.

No need to examine real impulses or steps - the math could accurately (somehow) pull that data out of a tone sweep.
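The "somehow" is deconvolution. If the speakers and room behave (approximately) as a linear system, the recording is the sweep convolved with the impulse response, so dividing the recording's spectrum by the sweep's spectrum recovers the impulse response; the sweep has no edges, but it visits every frequency in its band, and an impulse is just all of those frequencies at once. A minimal sketch, assuming an exponential sweep and regularized spectral division; REW's actual processing may differ (Farina's inverse-filter convolution is another common approach). The sweep is shortened to 1 s, the "room" is a hypothetical direct sound plus one reflection, and there is no noise.

```python
import numpy as np

def log_sweep(f1, f2, seconds, fs):
    """Exponential (log) sine sweep from f1 to f2 Hz."""
    t = np.arange(int(seconds * fs)) / fs
    k = np.log(f2 / f1)
    # Phase whose derivative gives an instantaneous frequency of f1 * exp(k * t / seconds)
    return np.sin(2 * np.pi * f1 * seconds / k * (np.exp(k * t / seconds) - 1.0))

def deconvolve(recorded, sweep, n_out, eps=1e-8):
    """Estimate an impulse response by regularized spectral division."""
    # FFT length long enough that neither convolution nor correlation wraps around
    n = len(recorded) + len(sweep)
    R = np.fft.rfft(recorded, n)
    S = np.fft.rfft(sweep, n)
    power = np.abs(S) ** 2
    # R * conj(S) / |S|^2 equals R / S; eps keeps bins with almost no sweep energy from blowing up
    H = R * np.conj(S) / (power + eps * power.max())
    return np.fft.irfft(H, n)[:n_out]

# Hypothetical "room": direct sound at sample 100, a half-level reflection at sample 600.
fs = 48000
sweep = log_sweep(10.0, 24000.0, 1.0, fs)  # 1 s instead of 10 s to keep the demo fast
h_true = np.zeros(1000)
h_true[100] = 1.0
h_true[600] = 0.5
recorded = np.convolve(sweep, h_true)  # what the microphone would capture, noise-free
h_est = deconvolve(recorded, sweep, n_out=len(h_true))
```

In this noise-free case `h_est` shows a sharp peak at sample 100 and a half-height peak at sample 600, i.e. "edges" recovered from an edgeless excitation. Real measurements add noise, speaker distortion, and playback latency, which the sketch ignores.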