Single-sample peaks are of no interest in the quest for realistic sound. The intervals over which sampling is averaged would need to match human perception, and no faster.

Agreed in general, and the 100 ms window in the paper I cited is perhaps the shortest tone burst that a person can hear at full loudness, without needing to be amplified above its current level. But the histogram I cited isn't an observation of single-sample peaks; it shows amplitudes aggregated into 100 ms bins from continuous music.

The usual graph I recall from Alton Everest's *Master Handbook of Acoustics* reported the shortest tone burst (presumably in a field of relative silence, i.e., a single isolated burst) that can be heard at full loudness without needing to be amplified, meaning that shorter standalone tone bursts will not be perceived as being as loud. I'm sure there are all sorts of qualifications and limitations on that measure, but I don't recall them and I don't have the book handy.
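One rough way to put numbers on "not perceived as being as loud" is the textbook equal-energy (temporal integration) approximation: below some integration time, the ear roughly sums energy, so each halving of a burst's duration costs about 3 dB (10 dB per decade). The sketch below assumes a 100 ms integration window (the figure discussed above) and perfect energy integration. Both are simplifying assumptions, this is not a reproduction of Everest's curve, and the function name `short_burst_boost_db` is hypothetical.

```python
import math

def short_burst_boost_db(burst_ms, integration_ms=100.0):
    """Extra level (dB) a burst shorter than the integration window would need
    to sound as loud as a burst at least `integration_ms` long.
    ASSUMPTION: perfect energy integration over `integration_ms`; measured
    loudness curves (including the one in Everest's handbook) deviate from this."""
    if burst_ms >= integration_ms:
        return 0.0  # long enough to be heard at full loudness under this model
    return 10 * math.log10(integration_ms / burst_ms)

for ms in (10, 20, 30, 100):
    print(f"{ms} ms burst: +{short_burst_boost_db(ms):.1f} dB")
```

Under these assumptions a 20 to 30 ms burst, like the snare impulses in the picture below, would need roughly 5 to 7 dB more level than a 100 ms burst to sound equally loud.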

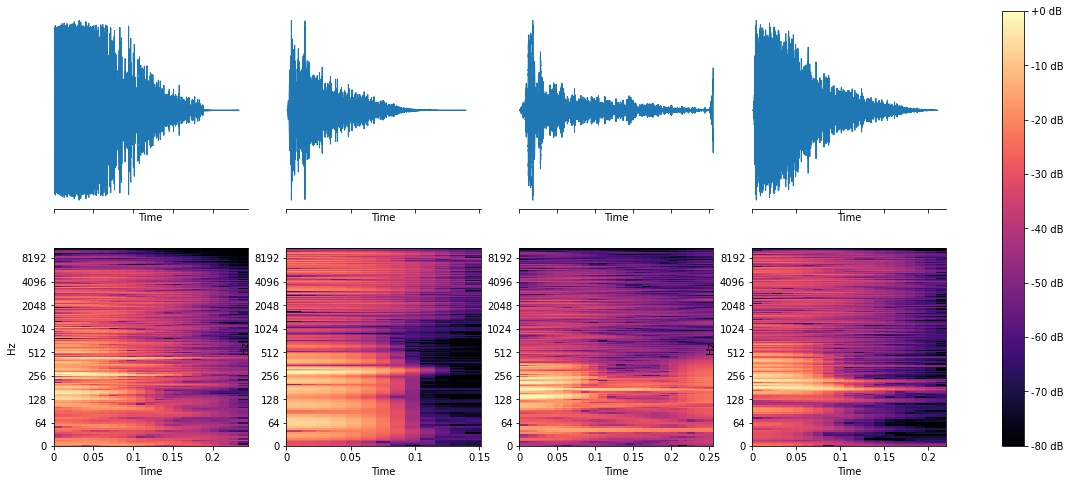

In this picture of snare-drum waveforms...

(source: https://www.soundsandwords.io/drum-sound-classification/)

...initial impulses seem to be quite short (as little as 20 or 30 ms), followed by a much longer reverberant tail. If that impulse clips, the waveform for the hit won't stand as tall relative to the reverberant tail, and that seems to me to affect the sound significantly, even though the peak lasts much less than 100 ms. The difference between the hit and the reverberant tail in the third column, for example, is greater than the amount by which the hit would need to be amplified to be perceived as loud as a longer burst.
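To illustrate the clipping argument, here is a minimal sketch using a synthetic "snare hit" rather than the recordings in the picture: a 25 ms decaying burst followed by a tail about 20 dB quieter. The signal shape, the 0.5 clip ceiling, and the helper names (`toy_snare`, `hit_to_tail_db`, `hard_clip`) are all hypothetical choices for illustration. It measures the hit's peak relative to the tail's peak before and after hard clipping.

```python
import math

SR = 48_000  # sample rate in Hz (assumed)

def toy_snare(sr=SR, hit_ms=25, tail_ms=400, tail_level=0.1):
    """HYPOTHETICAL toy snare hit, not real drum data: a short, loud, fast-decaying
    200 Hz burst followed by a quieter, slowly decaying 180 Hz tail."""
    n_hit = sr * hit_ms // 1000
    n_tail = sr * tail_ms // 1000
    hit = [math.exp(-n / (0.01 * sr)) * math.sin(2 * math.pi * 200 * n / sr)
           for n in range(n_hit)]
    tail = [tail_level * math.exp(-n / (0.15 * sr)) * math.sin(2 * math.pi * 180 * n / sr)
            for n in range(n_tail)]
    return hit + tail

def peak(xs):
    """Largest absolute sample value."""
    return max(abs(x) for x in xs)

def hit_to_tail_db(xs, sr=SR, hit_ms=25):
    """Peak of the first `hit_ms` milliseconds relative to the peak of the rest, in dB."""
    n_hit = sr * hit_ms // 1000
    return 20 * math.log10(peak(xs[:n_hit]) / peak(xs[n_hit:]))

def hard_clip(xs, ceiling):
    """Symmetric hard clipping at +/- ceiling."""
    return [max(-ceiling, min(ceiling, x)) for x in xs]

x = toy_snare()
clean = hit_to_tail_db(x)
clipped = hit_to_tail_db(hard_clip(x, 0.5))
print(f"hit-to-tail: {clean:.1f} dB clean, {clipped:.1f} dB clipped")
```

Because the tail never reaches the clip ceiling, clipping only shaves the hit, here by about 5 dB, which is in the same range as the short-burst loudness penalty estimated under an equal-energy assumption.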

Note that snare-drum hits are just one example of short peaks that stand out from the wall of sound (potentially a single, isolated burst that does stand alone). Such peaks are especially common in orchestral music, but also in quite a lot of jazz and older, minimally processed rock.

(The spectral waterfalls don't seem to show the difference in amplitude very well, and sometimes I wonder if we think spectrally at the expense of looking at amplitude variations. The color variations that are supposed to reveal dynamics are not nearly as precise an indicator of amplitude as the waveform itself.)

In any case, any difference between the original signal and what comes out of the recording or playback chain is a distortion, and the question is how much of that dynamic distortion it takes to affect the perceived sound. So the required dynamic range may be greater than the 75 dB range measured in the first paper I cited, because the peaks that matter may be shorter than the 100 ms bins into which the authors aggregated their samples. I think of that 75 dB as a floor, because the time period of interest is clearly no longer than 100 ms. And, again, this is borne out by the minimum dynamic range (measured broadband, as audio dynamic range usually is) that I perceive as sounding "realistic".
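To see how 100 ms bins can understate short peaks, here is a small sketch that measures each bin two ways. It assumes RMS averaging within each bin (the post doesn't say exactly how the paper aggregated its samples), and `bin_levels` and the test burst are hypothetical. A 25 ms full-scale burst in an otherwise silent 100 ms bin reads 0 dB on a peak meter but only about -6 dB as a bin RMS, i.e. 10*log10(100/25) ≈ 6.02 dB lower.

```python
import math

def db(x):
    """Amplitude relative to full scale (1.0) in dB; silence maps to -inf."""
    return 20 * math.log10(x) if x > 0 else float("-inf")

def bin_levels(xs, sr, bin_ms=100.0):
    """Split a signal into fixed-length bins and return (rms_db, peak_db) per bin.
    ASSUMPTION: RMS averaging stands in for however the cited paper aggregated
    its 100 ms bins; the paper's exact method is not stated here."""
    n = int(sr * bin_ms / 1000)
    levels = []
    for start in range(0, len(xs), n):
        chunk = xs[start:start + n]
        rms = math.sqrt(sum(x * x for x in chunk) / len(chunk))
        levels.append((db(rms), db(max(abs(x) for x in chunk))))
    return levels

sr = 48_000
n_burst = sr * 25 // 1000  # 25 ms full-scale burst (alternating +/-1.0)
burst = [1.0 if i % 2 == 0 else -1.0 for i in range(n_burst)]
signal = burst + [0.0] * (sr * 100 // 1000 - n_burst)  # pad to one 100 ms bin
rms_db, peak_db = bin_levels(signal, sr)[0]
print(f"bin RMS {rms_db:.2f} dB, bin peak {peak_db:.2f} dB")
```

The gap grows as the burst gets shorter relative to the bin, which is why a histogram of binned levels can report a smaller range than the peaks a listener might still notice.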

Rick "thinking that clipping/compression happens somewhere in the recording/mastering/distribution/playback process much more often than we suspect" Denney