In a recent review of this phono stage, there was some discussion of the approach taken for measurement and interpretation of phono stages. This post is a follow-up with additional measurements which I (and other designers and users of phono equipment) find necessary for evaluation. This is an "in addition to" rather than an "instead of" for Amir's review, but I'd like not only to present measurements but also to go into the whys and hows of things which are peculiar to the genre.

And I'll start things out by thanking Amir for sending the unit to me, as well as @dinglehoser who volunteered his unit to let me dissect its performance a bit.

As usual, my test setup is centered on an APx525 analyzer, but I also pressed into service a Hewlett-Packard 3466A volt-ohm meter and a Kikusui COS6100M 100 MHz scope.

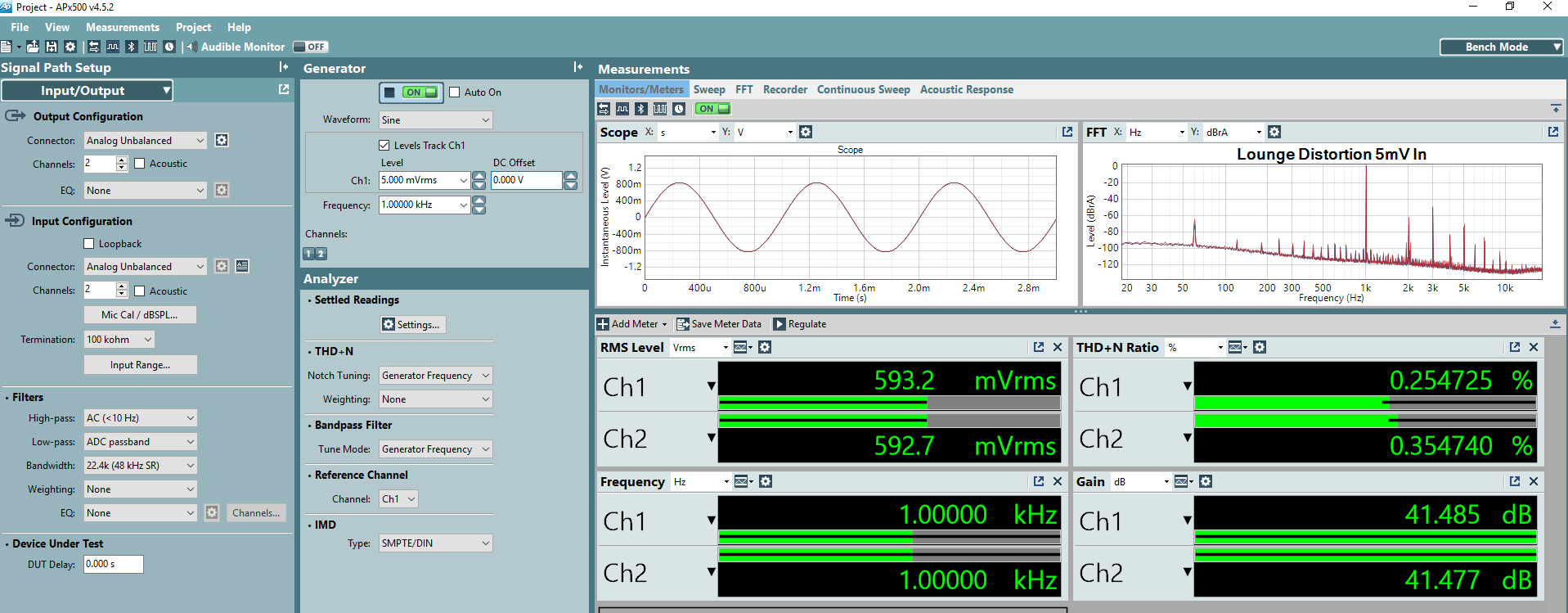

Let's start with the basics. I first measure gain so that I can freely switch between input-referred and output-referred numbers:

Gain at 1 kHz is pretty well matched between channels at about 41.5 dB, which corresponds to x118.6. We'll be using these numbers again. I used 5 mV as the drive level because that's a very typical MM cartridge output. This indicates that a line amp of 20 dB gain will be more than sufficient to use this unit with any standard power amp and normal MM cartridges, but that the gain may be a bit sparse if you're using a passive control or unity gain line amp.
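For anyone who wants to follow the input-referred versus output-referred bookkeeping, here's a minimal Python sketch of the conversion. The function names are my own for illustration; the only numbers taken from the measurements are the x118.6 gain and the 5 mV and 8.8 mV drive levels.

```python
import math

GAIN_RATIO = 118.6  # measured voltage gain at 1 kHz (about 41.5 dB)


def ratio_to_db(ratio):
    """Convert a voltage ratio to decibels."""
    return 20.0 * math.log10(ratio)


def db_to_ratio(db):
    """Convert decibels to a voltage ratio."""
    return 10.0 ** (db / 20.0)


def output_for_input(v_in, gain=GAIN_RATIO):
    """Output voltage for a given input voltage (1 kHz gain only)."""
    return v_in * gain


def input_for_output(v_out, gain=GAIN_RATIO):
    """Input-referred voltage for a given output voltage (1 kHz gain only)."""
    return v_out / gain


for v_in in (5e-3, 8.8e-3):
    print(f"{v_in * 1e3:.1f} mV in -> {output_for_input(v_in):.3f} V out")
print(f"gain = {ratio_to_db(GAIN_RATIO):.2f} dB")
```

Note that this only holds at 1 kHz; at other frequencies the RIAA equalization changes the gain, so input-referred numbers have to be converted frequency by frequency.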

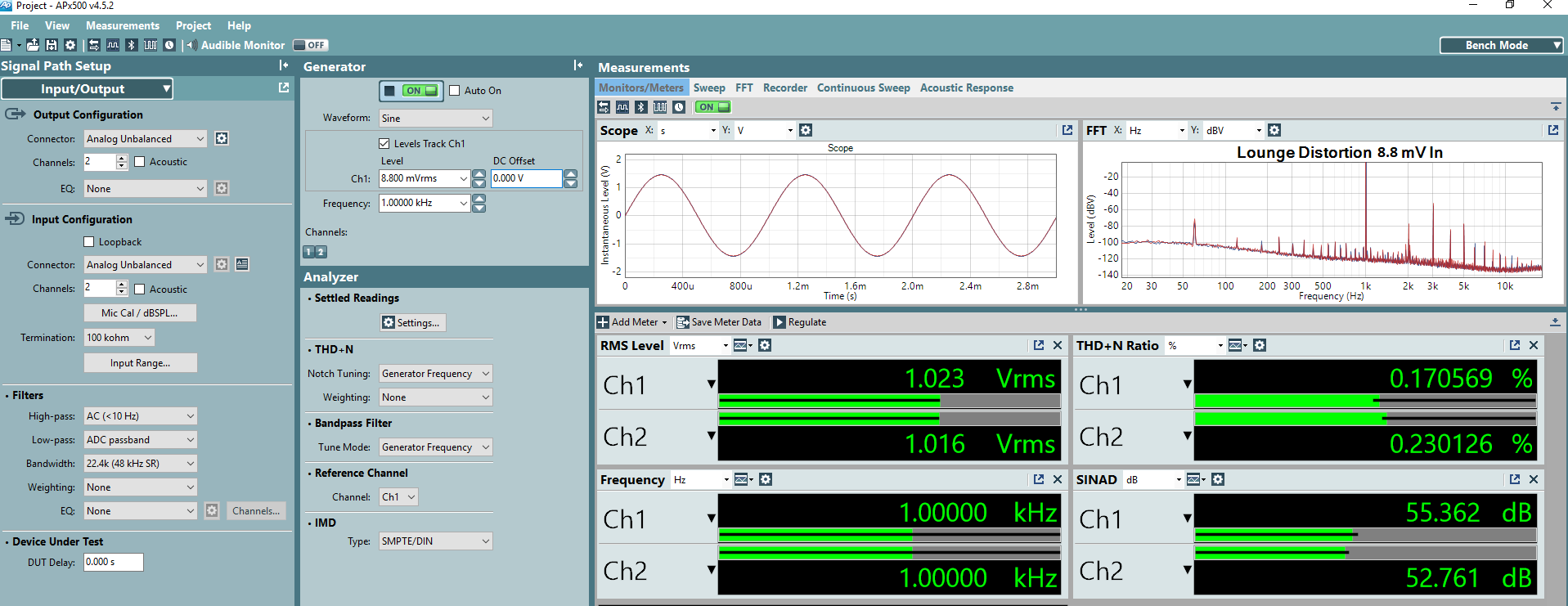

Just to see if my measurements and Amir's are consistent (he focuses on output levels rather than input levels, which may not be appropriate for phono stages- I will be referencing things to the input levels), I brought up the input level to achieve an output close to what he used. This required an input of 8.8 mV.

As you can see by comparison, our measurements correlate quite closely (though we use opposite numbering for our channels, which I'll blame on my Hebrew education, learning to write from right to left). So I can proceed without worrying about major inconsistencies.

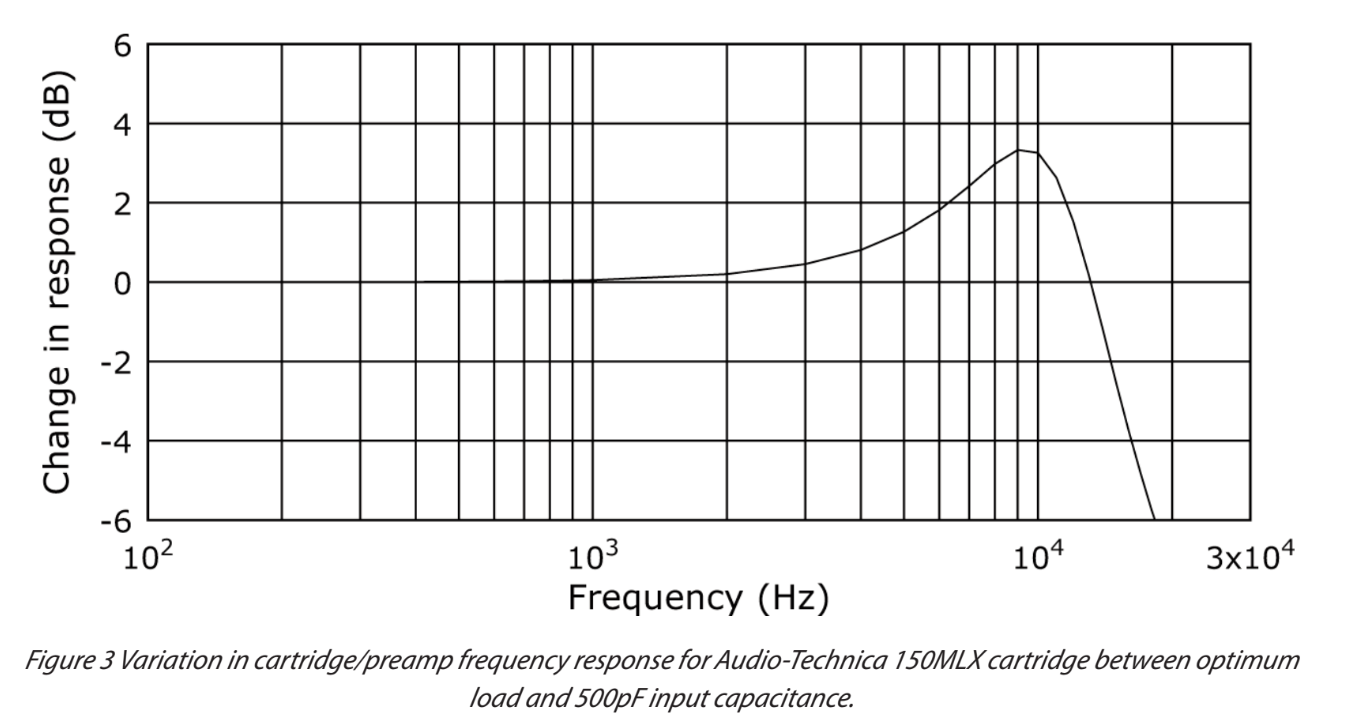

Now the first thing that needs to be measured is input impedance- and that goes beyond resistance. It is VITAL that input capacitance be measured- the frequency response of MM cartridges is heavily dependent on the load. Here is a figure from a phono stage design article I published in Linear Audio a few years back, showing the change in frequency response for the cartridge I use (an Audio-Technica 150MLX) between the recommended load and a 500 pF load.

Note that 500 pF is not that unusual- I have measured lots of preamps, especially discrete FET and tube preamps that have massively more input capacitance than spec. This is because manufacturers tend to pretend that the input capacitance is whatever capacitor they hang across the input, and they like to forget about the Miller Effect contribution of the input stage. So when you add those together and throw in the capacitance of the cabling between cartridge and preamp, you can get a significantly higher number than planned.

One other thing that I find amusing- it is often difficult to get capacitance specs for the cables. A quick look through a few sites showed specs for break-in time, adherence to the Golden Ratio, cryotreatment times, purity of silver, and similar irrelevant measures, but they leave out the one spec that affects what you can actually hear.

In any case, the input resistance and capacitance can't usually be measured with a normal meter because the signals sent out by the meter can cause the input stages to overload, which will significantly throw off the measurement. A much better way to determine this is to put a large (say 100k) resistor in series with the phono stage input, hook a scope across the input, then inject a square wave. Input resistance can be calculated by the voltage divider equation, and input capacitance can be determined by rise time (C = tr/(2.2R), where tr is the 10-90% rise time and R is the Thevenin source resistance, i.e., the series resistor in parallel with the input resistance). It is important that you use a 10x probe on the scope, otherwise the probe capacitance can swamp what you're trying to measure. With a 10x probe, you're only throwing the measurement off by 10pF or so, which can easily be corrected for.
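Here's a rough Python sketch of the arithmetic behind that method, assuming a simple single-pole RC response (10-90% rise time tr = 2.2RC). The function names and the scope readings in the example are hypothetical, made up only to show the calculation; they are not my actual readings.

```python
def input_resistance(v_source, v_input, r_series):
    """Solve the voltage divider v_input = v_source * R_in / (R_series + R_in)."""
    return r_series * v_input / (v_source - v_input)


def thevenin_resistance(r_series, r_in):
    """Resistance seen by the input capacitance: R_series in parallel with R_in."""
    return r_series * r_in / (r_series + r_in)


def input_capacitance(t_rise, r_thevenin, c_probe=10e-12):
    """Single-pole RC: t_rise (10-90%) = 2.2 * R * C, minus the probe's share."""
    return t_rise / (2.2 * r_thevenin) - c_probe


# Hypothetical readings, for illustration only.
R_SERIES = 100e3    # series resistor, ohms
V_SOURCE = 10e-3    # square wave amplitude at the generator, volts
V_SETTLED = 3.36e-3 # settled amplitude at the phono input, volts
T_RISE = 8.9e-6     # 10-90% rise time read off the scope, seconds

r_in = input_resistance(V_SOURCE, V_SETTLED, R_SERIES)
r_th = thevenin_resistance(R_SERIES, r_in)
c_in = input_capacitance(T_RISE, r_th)

print(f"R_in  = {r_in / 1e3:.1f} kohm")
print(f"R_th  = {r_th / 1e3:.1f} kohm")
print(f"C_in  = {c_in * 1e12:.0f} pF")
```

The 10 pF default for the probe is the ballpark figure for a 10x probe mentioned above; use the number printed on your own probe.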

Using this method and a 10 mV 1kHz square wave generated by the APx525, I determined that the input resistance and capacitance here were close to spec at slightly over 50k ohm and 110 pF, respectively. If we take into account the 100-150 pF or so from cabling, the input C is a bit high to use for the 150MLX, but will suit many other cartridges. I'd like to see it lower, since more capacitance can be added but cannot be subtracted. Nonetheless, it's not an unreasonable number if you're using a Shure or Grado or something similar. One peculiarity was that the square wave at the input showed some overshoot as well as the usual capacitive rounding of the edges- this suggests an inductive component is present at the input or that there are some issues in the feedback compensation of the input stage gain block.

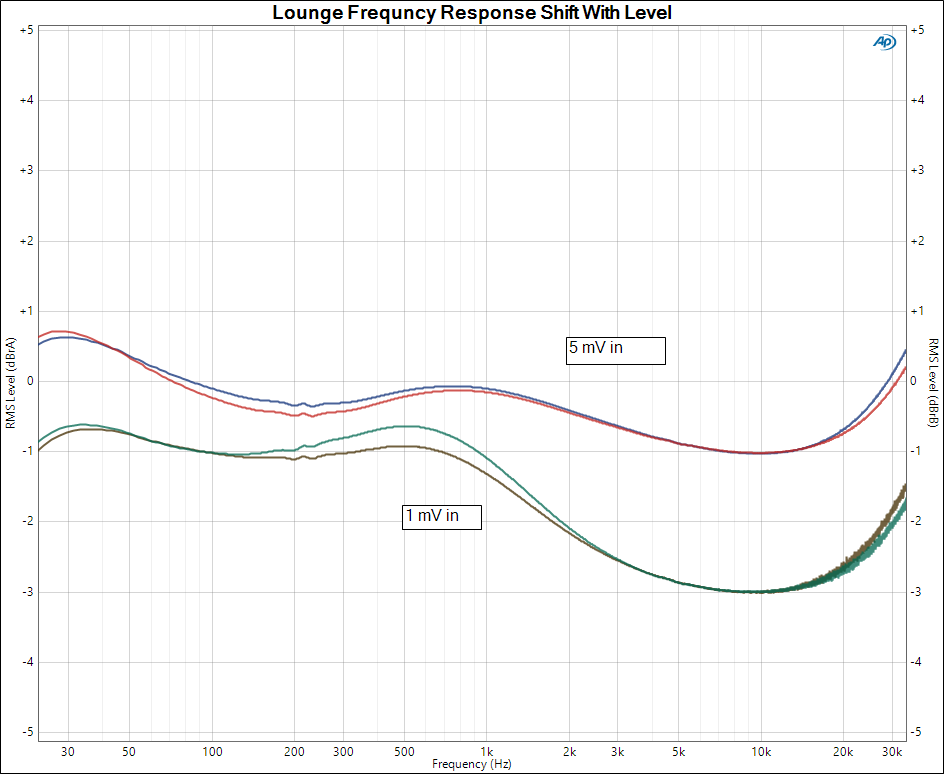

Next up, the frequency response. Amir showed that it was not particularly adherent to the RIAA standard. I was able to confirm this, but knowing something about the peculiarities of the inductors commonly used in these sorts of circuits, I took things a bit further. Here's the frequency response taken at two different levels, 1mV and 5 mV in, both pretty moderate numbers.

WOW! That's quite a change with level and although I'm not showing the graphs here, this tendency continued at higher and lower levels and is a VERY significant amount, easily to the point of audibility. This is not strictly an amplifier, it is very much an effects box. At least one top engineer I know bought one of these units and tried measuring the inductance of the equalization coils and failed for exactly this reason- the inductance was a strong function of applied voltage. Conventional RC equalization networks don't generally show this behavior, so the design "philosophy" here clearly has no connection to high fidelity and accurate reproduction.
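For reference, the target these curves are being judged against is easy to compute. Here's a minimal Python sketch of the ideal RIAA playback curve, normalized to 0 dB at 1 kHz, using the standard 3180 µs, 318 µs, and 75 µs time constants. The function names are mine, and this is just the textbook curve, not a model of the LRC network in this unit.

```python
import math

# Standard RIAA playback time constants, in seconds.
T1, T2, T3 = 3180e-6, 318e-6, 75e-6


def riaa_playback_gain(freq_hz):
    """Magnitude of the ideal RIAA playback response (unnormalized)."""
    w = 2.0 * math.pi * freq_hz
    numerator = complex(1.0, w * T2)
    denominator = complex(1.0, w * T1) * complex(1.0, w * T3)
    return abs(numerator / denominator)


def riaa_playback_db(freq_hz, ref_hz=1000.0):
    """Ideal RIAA playback response in dB relative to the reference frequency."""
    return 20.0 * math.log10(riaa_playback_gain(freq_hz) / riaa_playback_gain(ref_hz))


for f in (20, 100, 1000, 10000, 20000):
    print(f"{f:>6} Hz: {riaa_playback_db(f):+6.2f} dB")
```

Subtracting this curve from a measured response at each drive level gives the RIAA error. A stage whose equalization doesn't depend on level would give the same error curve at 1 mV and at 5 mV.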

Next, we consider overload. IMO, this is a very critical aspect of phono stage design (though disclosure: I correspond regularly with two VERY smart engineers, one of whom strongly agrees, the other of whom strongly disagrees). The guy who agrees has done measurements of cartridge outputs while playing records and has seen signals 25 dB or more above the rated output voltage, mostly from pops and ticks exciting the cartridge's mechanical resonance, and abetted by the velocity characteristic of magnetic cartridges (i.e., response rising proportional to frequency for a given displacement). Low frequency overload is less important for that reason, but given the reality of warps, should not be overlooked.

The manner in which overload voltage varies with frequency is a function of phono stage topology and output swing limits. Usually, the supply limit will be the determinant at low frequencies where the gain is higher, and input stage overload is the limit at high frequencies. With feedback RIAA (not as much the case here), there's an additional complication that at higher frequencies and levels, there's a strong common mode voltage which can cause unexpected distortion. Likewise, the feedback network's impedance drops, which can challenge both the gain block's drive capability and unity gain stability.

In the case of the Lounge, the basic topology is a passive LRC network sandwiched between two gain blocks (there are a few tweaks to this mentioned by the designer in the discussion in the comments on Amir's review). So unsurprisingly, we see a reduction in overload as we go down in frequency due to output swing limitations. At 1 kHz, we have an 80mV input overload (translating to output, that's about 9.4V out, consistent with Amir's measurement). Going down in frequency, the overload point not unexpectedly also decreases- I'm not sure how to insert a table in this forum to show the full list, so I'll just summarize by saying it's 67mV at 500 Hz and 45 mV by 200 Hz. These aren't spectacular numbers, but aren't terrible either.

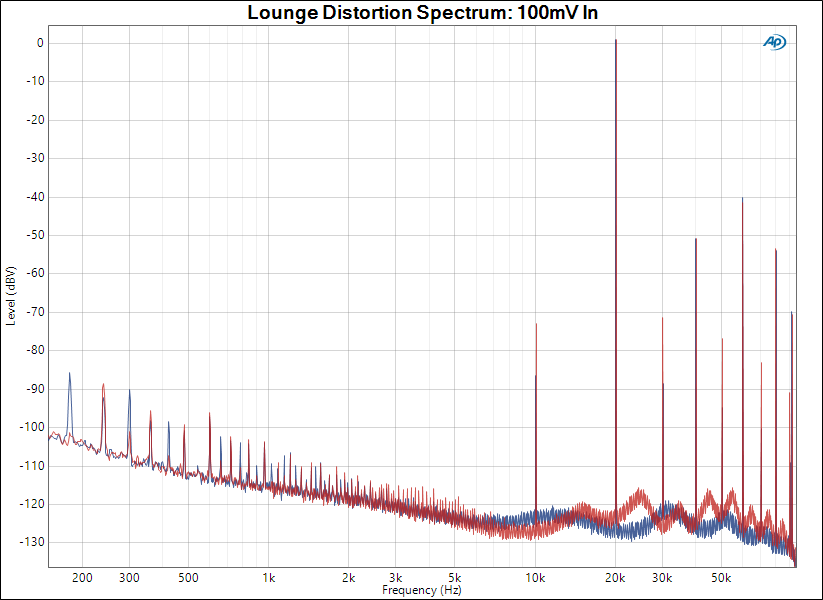

Going up in frequency, the input overload at 2kHz and above is even at about 100 mV, so clearly that's the limit of the input (pre-EQ) stage. Again, not great but probably adequate. As we hit the 1% distortion point, the 20 kHz distortion spectrum (already dominated by odd-order products, the seller's claims to the contrary notwithstanding) shows a large splattering of odd and high order harmonics. It also shows a very unusual subharmonic, likely from inductor saturation, which will be very audible on ticks and pops with hotter cartridges.
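To put the overload figures quoted so far in perspective, here's a small Python sketch that turns them into headroom above a nominal 5 mV cartridge output. The dictionary holds only the four input overload points I quoted; the function name and layout are just for illustration.

```python
import math

RATED_OUTPUT = 5e-3  # nominal MM cartridge output, volts

# Input overload points quoted in the text: frequency (Hz) -> volts.
OVERLOAD_INPUT = {
    200: 45e-3,
    500: 67e-3,
    1000: 80e-3,
    2000: 100e-3,
}


def headroom_db(v_overload, v_ref=RATED_OUTPUT):
    """Overload margin in dB above the reference input level."""
    return 20.0 * math.log10(v_overload / v_ref)


for freq, v_max in sorted(OVERLOAD_INPUT.items()):
    print(f"{freq:>5} Hz: {v_max * 1e3:5.0f} mV -> {headroom_db(v_max):4.1f} dB headroom")
```

This works out to roughly 19 dB of margin at 200 Hz rising to about 26 dB at 2 kHz and above, which is why the 25 dB peaks mentioned earlier are cutting it close with hotter cartridges.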

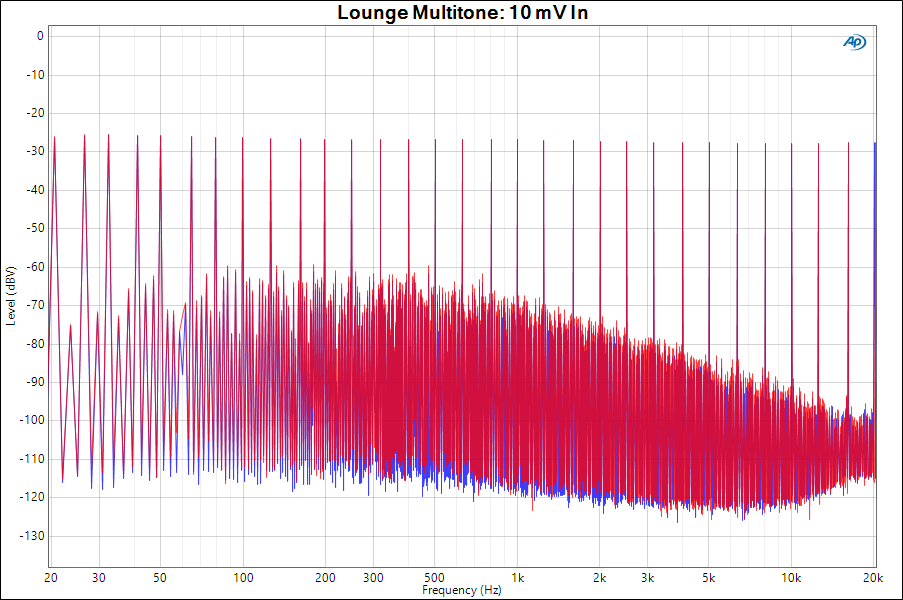

One test of distortion that I've long advocated and am delighted to see gain broader use is the multitone test- rarely does one see anything actually new come out of it that isn't suggested in single tone and intermodulation tests, but it usefully answers the objections of people who don't understand Fourier's theorem and does indeed present a rather nice visualization of what a DUT does handling complex material. There are a variety of multitone signals out in the wild (I most often use the one bundled with ARTA, but have also used Virtins MI to generate custom signals), but for consistency with Amir here, I used the AP 32 tone signal at 10 mV with an inverse RIAA pre-emphasis. Now given the rather poor distortion performance as well as the prevalence of power supply noise (likely an issue of layout and ground routing), one might expect the multitone to be a mess. And indeed it is.

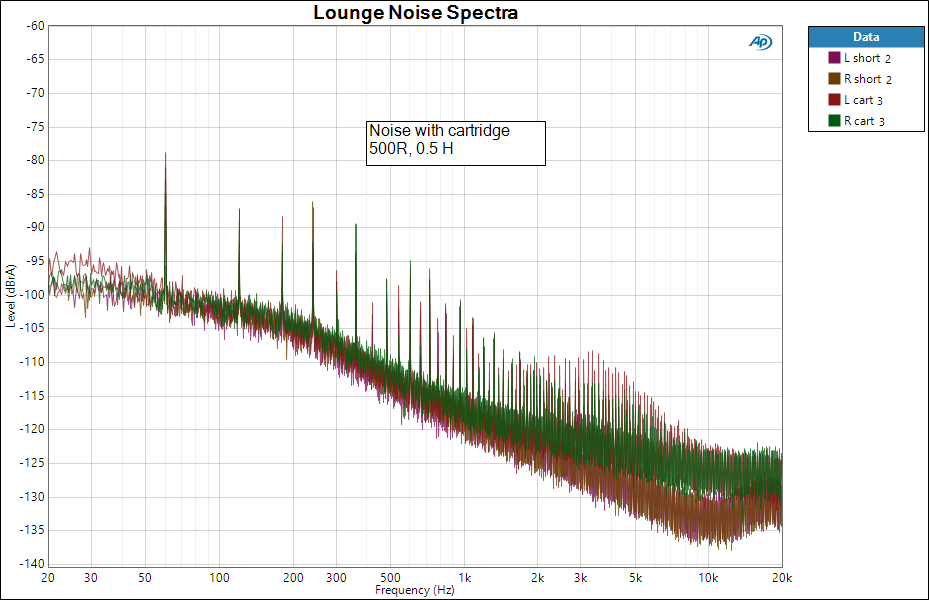

The other principal job of a phono preamp is to not add noise. Now unlike digital systems, phono is inherently noisy- a typical cartridge will have a DC resistance of 500-2000 ohms and an inductance of 0.5-1 Henry. What this means is that at low frequencies, the phono preamp sees a source impedance of the cartridge's DCR (say 1k) in parallel with the input resistance of the phono stage (say 47k). As the frequency goes up, the cartridge's inductance comes into play, so the input resistance of the phono stage is shunted less by the cartridge's impedance and the noise rises because of its higher resistance. Of course, the input capacitance still shunts the input resistance, so that drops the noise somewhat. A calculator which you can use to see what your cartridge and preamp's irreducible thermal noise floor is can be found at my website. The takeaways are that the laws of nature will set the noise floor between 60-75dB (typical) below the cartridge's rated output, so that reducing the preamp's noise much below that will not have an appreciable effect on the overall noise. It does give the designer a noise level to shoot for so that the limitation is the transducer and not the electronics.
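As a rough illustration of that thermal floor (and not a substitute for the calculator), here's a Python sketch that models the cartridge as DCR in series with its inductance, loaded by the preamp's input resistance and capacitance, and uses the real part of that impedance to get the thermal noise. The default component values, the 300 K temperature, and the function names are all assumptions for illustration. The result is flat and unweighted over 20 Hz to 20 kHz with no RIAA equalization applied, so it won't match an equalized or weighted figure.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K
TEMP_K = 300.0      # assumed temperature, kelvin


def source_impedance(freq_hz, r_dc, l_cart, r_load, c_load):
    """Impedance at the preamp input: (R_dc + jwL) || R_load || C_load."""
    w = 2.0 * math.pi * freq_hz
    z_cart = complex(r_dc, w * l_cart)
    admittance = 1.0 / z_cart + 1.0 / r_load + 1j * w * c_load
    return 1.0 / admittance


def thermal_noise_density(freq_hz, r_dc=1000.0, l_cart=0.5,
                          r_load=47e3, c_load=250e-12):
    """Thermal noise voltage density (V/rtHz) from the real part of Z."""
    z = source_impedance(freq_hz, r_dc, l_cart, r_load, c_load)
    return math.sqrt(4.0 * K_B * TEMP_K * z.real)


def integrated_noise(f_lo=20.0, f_hi=20e3, steps=20000, **parts):
    """RMS noise over the band, by midpoint integration of density squared."""
    df = (f_hi - f_lo) / steps
    total = 0.0
    for i in range(steps):
        f = f_lo + (i + 0.5) * df
        total += thermal_noise_density(f, **parts) ** 2 * df
    return math.sqrt(total)


def snr_db(rated_output=5e-3, **parts):
    """Unweighted, unequalized SNR relative to the cartridge's rated output."""
    return 20.0 * math.log10(rated_output / integrated_noise(**parts))


print(f"density at 20 Hz : {thermal_noise_density(20.0) * 1e9:.1f} nV/rtHz")
print(f"density at 10 kHz: {thermal_noise_density(10e3) * 1e9:.1f} nV/rtHz")
print(f"thermal-limited SNR re 5 mV: {snr_db():.1f} dB")
```

The point of the exercise is the shape: the density at low frequencies is set by the DCR in parallel with the load, and it climbs several-fold as the inductance takes over.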

Now, the electronics noise can be separated into two components- voltage and current noise. Although people commonly measure preamp noise using a shorted (or nearly shorted) input, this method basically ignores the current noise. If you measure with the input open, the current noise flowing through the input resistor will dominate. One should always measure these stages with a cartridge as a source. I keep a test cartridge body handy for such things, in this case a Stanton 881S, with a 500 ohm DCR and 0.5H inductance (both on the low side for a moving magnet). Current noise is usually less of an issue with FETs and tubes, but can be more dominant with bipolar transistor inputs. This is why opamps like the wonderful AD797 perform beautifully well with low DCR and inductance moving coil cartridges, but poorly with higher DCR and inductance moving magnets.
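Here's a minimal Python sketch of why the shorted-input number misses the current noise. It combines voltage noise, current noise times the source impedance magnitude, and the source's thermal noise as uncorrelated terms. The cartridge values are the 881S figures above and the load is the 50k and 110 pF I measured on this unit. The voltage and current noise densities are illustrative values for a low-voltage-noise bipolar input, not measurements of this preamp's opamp, and the function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K
TEMP_K = 300.0      # assumed temperature, kelvin

E_N = 1e-9          # assumed voltage noise density, V/rtHz (illustrative)
I_N = 2e-12         # assumed current noise density, A/rtHz (illustrative)


def source_impedance(freq_hz, r_dc=500.0, l_cart=0.5,
                     r_load=50e3, c_load=110e-12):
    """Impedance at the preamp input: (R_dc + jwL) || R_load || C_load."""
    w = 2.0 * math.pi * freq_hz
    z_cart = complex(r_dc, w * l_cart)
    admittance = 1.0 / z_cart + 1.0 / r_load + 1j * w * c_load
    return 1.0 / admittance


def input_noise_density(z_source, e_n=E_N, i_n=I_N):
    """Total input-referred noise density (V/rtHz), uncorrelated sources."""
    thermal_sq = 4.0 * K_B * TEMP_K * z_source.real
    current_sq = (i_n * abs(z_source)) ** 2
    return math.sqrt(e_n ** 2 + current_sq + thermal_sq)


shorted = input_noise_density(0j)
print(f"shorted input      : {shorted * 1e9:6.1f} nV/rtHz")
for f in (100.0, 1e3, 10e3):
    total = input_noise_density(source_impedance(f))
    print(f"cartridge, {f:7.0f} Hz: {total * 1e9:6.1f} nV/rtHz")
```

With a shorted input only the voltage noise term survives. With the cartridge connected, the source impedance climbs into the tens of kilohms in the upper audio band, so even a couple of pA/rtHz of current noise swamps everything else.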

Shown here is the noise spectrum of the Lounge with a shorted input and with the cartridge attached, relative to the output with a 5 mV input. The rise in noise above 1 kHz is totally missed by the shorted-input measurement (or even with the almost-shorted 20 ohm source impedance of the AP!) and is caused by the current noise of the opamp, which was not an optimum choice for this application.

One other testing note: noise should always be measured with NO windowing of the FFT. Using a window is appropriate for things like distortion, but for random (thermal and shot) noise, it will give a number that's a few dB too optimistic.

To wind up this overly-long exposition, there's a lot of extra measurement needed to fully characterize a phono stage. When we do these extra steps, we very clearly expose the severe flaws in preamps like this one.

And no, I did not listen to it- unfortunately, it's not compatible with my phono system, but I suspect that after years of using accurate phono stages, the sound of this effects box would likely not appeal to me.
