Dr. Griesinger mentions extracting ITD from the positive-going zero crossings. Is that what you mean here, or something else?

Go watch the hearing tutorial at the PNW site while it's still up.

At low frequencies, yes: positive-going on the basilar membrane. Above 800 Hz that starts to lose importance, and by about 2 kHz, for all practical purposes, what matters is the leading edge of the filtered envelope. (There is some remaining waveform sensitivity up to 4 kHz, but it's overwhelmed for the most part.)

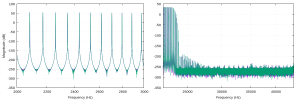
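To make the low-frequency case concrete, here is a minimal sketch of pulling an ITD out of positive-going zero crossings. It is only an illustration, not anyone's published method: the function names, the nearest-crossing pairing, the median, and the 200 Hz test tone with a 300 µs delay are all assumptions of mine, and it presumes the two signals have already been band-filtered.

```python
import math

def positive_zero_crossings(x, fs):
    """Times (s) where x goes from negative to non-negative,
    refined by linear interpolation between the two samples."""
    times = []
    for n in range(1, len(x)):
        a, b = x[n - 1], x[n]
        if a < 0.0 <= b:
            frac = -a / (b - a)          # fractional position of the zero
            times.append((n - 1 + frac) / fs)
    return times

def itd_from_crossings(left, right, fs):
    """Hypothetical helper: pair each left-ear crossing with the nearest
    right-ear crossing and return the median difference in seconds.
    Positive means the right channel lags the left."""
    tl = positive_zero_crossings(left, fs)
    tr = positive_zero_crossings(right, fs)
    if not tl or not tr:
        return None
    diffs = sorted(min((r - l for r in tr), key=abs) for l in tl)
    return diffs[len(diffs) // 2]

# Assumed test signal: 200 Hz tone, right ear delayed by 300 microseconds.
fs = 48000
f0 = 200.0
delay = 300e-6
left = [math.sin(2 * math.pi * f0 * n / fs) for n in range(4800)]
right = [math.sin(2 * math.pi * f0 * (n / fs - delay)) for n in range(4800)]
itd = itd_from_crossings(left, right, fs)
```

The nearest-crossing pairing only makes sense while the true delay is well under half a period of the band's centre frequency, which is one way to see why this cue fades as frequency goes up.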

For your use in the bass, I'd make an 8th-order fit to the cochlear filter as an IIR minimum-phase filter (match both skirts, which is moderately tricky), or as an asymmetric FIR filter that is a better fit (it will be long, but computers are fast). Make sure you convert that filter to pure minimum phase, and then look at the positive-going waveform in each 1/3 ERB.
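As a rough sketch of what "each 1/3 ERB" could look like in practice, the following lays out band centre frequencies at 1/3-ERB spacing. It assumes the Glasberg and Moore (1990) ERB formulas, which the post does not name, and the 30 Hz lower limit and the function names are placeholders of mine. It only builds the frequency grid; the cochlear filter fit itself is not attempted here.

```python
import math

def hz_to_erb_number(f_hz):
    """ERB-number scale (assumed: Glasberg & Moore 1990)."""
    return 21.4 * math.log10(4.37 * f_hz / 1000.0 + 1.0)

def erb_number_to_hz(e):
    """Inverse of hz_to_erb_number."""
    return (10.0 ** (e / 21.4) - 1.0) * 1000.0 / 4.37

def erb_bandwidth_hz(f_hz):
    """Equivalent rectangular bandwidth in Hz at centre frequency f_hz."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)

def third_erb_centres(f_lo, f_hi, step=1.0 / 3.0):
    """Centre frequencies from f_lo up to f_hi, spaced `step` ERB apart."""
    e_lo = hz_to_erb_number(f_lo)
    e_hi = hz_to_erb_number(f_hi)
    centres = []
    k = 0
    while e_lo + k * step <= e_hi + 1e-9:
        centres.append(erb_number_to_hz(e_lo + k * step))
        k += 1
    return centres

# Assumed range: 30 Hz (placeholder) up to 800 Hz, where the waveform
# cue starts to lose importance according to the post above.
centres = third_erb_centres(30.0, 800.0)
for fc in centres[:5]:
    print(f"{fc:7.1f} Hz  ERB = {erb_bandwidth_hz(fc):5.1f} Hz")
```

Each centre would then get its own minimum-phase cochlear-filter fit, with the positive-going crossings read off that band's output.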