audiofooled

Addicted to Fun and Learning


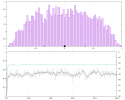

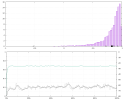

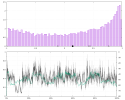

I just ran about 30 tracks through MATLAB. Clunky, slow. I low-pass filtered the L and R channels at 60 Hz, with a filter that is 80 dB down by 120 Hz (a long, constant-delay FIR filter), and then crudely calculated the normalized cross-correlation between the two channels (this means I ignored level differences, but captured all differences due to phase, frequency, etc.).
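The post doesn't give the filter design, so here is one way to build a comparable linear-phase ("constant delay") FIR low-pass: a Kaiser-windowed sinc sized with Kaiser's empirical formulas. This is a sketch under stated assumptions: 44.1 kHz audio, 60 Hz treated as the passband edge and 120 Hz as the 80 dB stopband edge (so the cutoff sits at 90 Hz). The helper names are hypothetical.

```python
import math

# Hypothetical helpers; assumes audio as lists of floats and the spec above
# (passband edge 60 Hz, 80 dB down at 120 Hz).


def bessel_i0(x):
    """Zeroth-order modified Bessel function of the first kind (power series)."""
    total, term, k = 1.0, 1.0, 1
    while term > 1e-15 * total:
        term *= (x / (2.0 * k)) ** 2
        total += term
        k += 1
    return total


def kaiser_lowpass(fs, f_pass, f_stop, atten_db):
    """Linear-phase FIR low-pass via a Kaiser-windowed sinc."""
    if atten_db > 50:
        beta = 0.1102 * (atten_db - 8.7)
    elif atten_db >= 21:
        beta = 0.5842 * (atten_db - 21) ** 0.4 + 0.07886 * (atten_db - 21)
    else:
        beta = 0.0
    width = 2 * math.pi * (f_stop - f_pass) / fs   # transition width, rad/sample
    order = math.ceil((atten_db - 8) / (2.285 * width))
    order += order % 2                             # even order -> odd length, integer delay
    fc = 0.5 * (f_pass + f_stop) / fs              # cutoff mid-transition, cycles/sample
    mid = order / 2
    taps = []
    for n in range(order + 1):
        t = n - mid
        ideal = 2 * fc if t == 0 else math.sin(2 * math.pi * fc * t) / (math.pi * t)
        r = t / mid                                # runs from -1 to 1 across the window
        window = bessel_i0(beta * math.sqrt(1 - r * r)) / bessel_i0(beta)
        taps.append(ideal * window)
    dc_gain = sum(taps)
    return [h / dc_gain for h in taps]             # normalize to 0 dB at DC


def response_db(taps, f, fs):
    """Magnitude response of the FIR filter at frequency f (Hz), in dB."""
    w = 2 * math.pi * f / fs
    re = sum(h * math.cos(w * n) for n, h in enumerate(taps))
    im = sum(h * math.sin(w * n) for n, h in enumerate(taps))
    return 20 * math.log10(math.hypot(re, im))


def apply_fir(x, taps):
    """Direct-form FIR filtering; output has the same length as x.

    Pure Python is far too slow for whole tracks; this is for illustration.
    """
    y = []
    for i in range(len(x)):
        acc = 0.0
        for k, h in enumerate(taps):
            if k > i:
                break
            acc += h * x[i - k]
        y.append(acc)
    return y
```

At 44.1 kHz, `kaiser_lowpass(44100, 60.0, 120.0, 80.0)` comes out at roughly 3,700 taps, which is why such a filter is "long". Because both channels pass through the same symmetric filter, both are delayed by `(len(taps) - 1) / 2` samples, so the L/R comparison is unaffected.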

This is a number that can vary between -1 and 1. A value of 1 means the two signals are identical (apart from level). -1 means they are identical (apart from level) but precisely out of phase. 0 means they are uncorrelated.
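A minimal sketch of the measure described above, assuming it is the zero-lag coefficient rho = sum(L[n]·R[n]) / sqrt(sum(L[n]²) · sum(R[n]²)) with no mean removal; the post doesn't specify the lag or normalization details, and the function name is hypothetical.

```python
import math


# Hypothetical helper; assumes both channels are equal-length lists of floats
# that have already been low-pass filtered the same way.
def normalized_xcorr(left, right):
    """Zero-lag normalized cross-correlation of two channels.

    Dividing by the geometric mean of the channel energies removes overall
    level differences, so the result lies in [-1, 1].
    """
    if len(left) != len(right):
        raise ValueError("channels must have the same length")
    cross = sum(l * r for l, r in zip(left, right))
    energy = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    if energy == 0:
        raise ValueError("correlation is undefined for a silent channel")
    return cross / energy
```

Scaling one channel by any positive gain leaves the value unchanged, which is why level differences are invisible to this measure, while inverting the polarity of one channel flips the sign.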

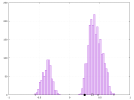

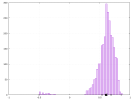

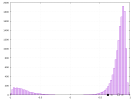

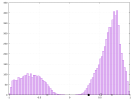

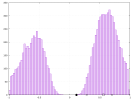

The lowest number I saw was 0.2, on an acoustic recording made with a multi-mic, pan-potted arrangement of widely spaced mics. That one unquestionably did not do any "mix to mono". A bunch of pop recordings came in very precisely at 0.95 ± 0.02. A LOT of them. That's actually kind of weird, because it means they are "almost mono bass". I'd say 50% were above 0.9 and about 10% under 0.5. I didn't find any track that was under 0 overall. Out of about 30 tracks, only ONE hit 1. No, it wasn't a mono recording.

Now, this is a very, very rough, broad approximation: it's 2 AM, I was bored, and I did the fastest (in code-writing time) calculation I could imagine. I will go back and do more, as I did long ago: calculate both level and phase mismatch, zero out the quiet parts, and get a histogram of the actual "sameness" (or lack thereof). But not tonight. Since this very broad measure hides level differences, the real L/R differences can only be larger than these numbers suggest, so I'd have to say that there is much less mono bass in modern recordings.
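The refined analysis mentioned here isn't shown in the post. One possible way to do it is sketched below, under several assumptions: non-overlapping blocks, an energy gate relative to the loudest block standing in for "zero out the quiet parts", the L/R level difference in dB as the level mismatch, and per-block correlation as a proxy for phase mismatch (a true phase estimate would need per-frequency analysis). The function name and defaults are hypothetical.

```python
import math


# Hypothetical helper: block length, gate and bin count are illustrative
# defaults (0.1 s blocks at 44.1 kHz, -40 dB gate, 20 bins of width 0.1).
def blockwise_sameness(left, right, block=4410, gate_db=-40.0, bins=20):
    """Per-block L/R level mismatch (dB) and correlation, skipping quiet blocks.

    Returns (results, hist): results is a list of (level_db, rho) per kept
    block; hist counts rho values in `bins` equal bins spanning [-1, 1].
    """
    blocks = []
    for start in range(0, len(left) - block + 1, block):
        l = left[start:start + block]
        r = right[start:start + block]
        blocks.append((sum(x * x for x in l),
                       sum(x * x for x in r),
                       sum(a * b for a, b in zip(l, r))))
    hist = [0] * bins
    if not blocks:
        return [], hist
    loudest = max(el + er for el, er, _ in blocks)
    floor = loudest * 10 ** (gate_db / 10)   # energy gate relative to loudest block
    results = []
    for el, er, cross in blocks:
        # Skip quiet blocks, and blocks where one channel is silent
        # (level ratio and correlation are undefined there).
        if el + er < floor or el == 0 or er == 0:
            continue
        level_db = 10 * math.log10(el / er)   # > 0 means L is louder than R
        rho = cross / math.sqrt(el * er)
        results.append((level_db, rho))
        hist[min(int((rho + 1) / 2 * bins), bins - 1)] += 1
    return results, hist
```

Keeping level and correlation as separate per-block numbers means a block can be flagged as "not mono" for either reason, which the single broadband coefficient cannot do.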

Is there a way not to exclude tracks where most of the bass is mono (usually in the 40-80 Hz band), but the sense of space/decorrelation is latent in low-level signals below 30 Hz, sometimes even below 20 Hz?
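In a single broadband coefficient, each frequency band contributes in proportion to its energy, so a quiet but decorrelated sub-30 Hz band barely moves the result. One way to check for this, sketched under assumptions, is to normalize each band separately. The sketch below swaps in a brick-wall band split via a direct DFT evaluated only at the bins inside the band; the function name and band edges are hypothetical, and for real tracks an FFT library and shorter segments would be needed.

```python
import cmath
import math


# Hypothetical helper: normalized L/R correlation restricted to lo <= f < hi (Hz).
# Requires lo > 0 so DC is excluded; a brick-wall split is assumed for simplicity.
def band_correlation(left, right, fs, lo, hi):
    n = len(left)
    if n != len(right) or not 0 < lo < hi <= fs / 2:
        raise ValueError("channels must match and 0 < lo < hi <= fs/2")
    k_lo = math.ceil(lo * n / fs)   # first DFT bin at or above lo
    k_hi = math.ceil(hi * n / fs)   # first DFT bin at or above hi (exclusive)
    cross = e_l = e_r = 0.0
    for k in range(k_lo, k_hi):
        xl = xr = 0j
        for i in range(n):
            w = cmath.exp(-2j * math.pi * k * i / n)
            xl += left[i] * w
            xr += right[i] * w
        # Positive-frequency bins only: the mirrored bins add the same
        # factor to numerator and denominator, so it cancels.
        cross += (xl * xr.conjugate()).real
        e_l += abs(xl) ** 2
        e_r += abs(xr) ** 2
    if e_l == 0 or e_r == 0:
        raise ValueError("no energy in this band")
    return cross / math.sqrt(e_l * e_r)
```

For a synthetic pair where a 60 Hz component is identical in both channels and a 20 Hz component is shifted 90° between them, the 40-80 Hz band gives 1, the 1-30 Hz band gives 0, and a single broadband coefficient gives 0.5, blending the two.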