Nice OP

@flipflop @sergeauckland

@sergeauckland and others, I tend to agree in principle that audiophile land takes distortion (especially the nebulous THD metric) far too seriously, but I do think there is pretty strong evidence that distortion below 0.01% can be audible. By "audible" I mean that quite specific kinds of nonlinear distortion can be discerned from an undistorted signal, as opposed to the signal "sounding distorted". (It's a whole other can of worms, but low levels of distortion also seem to be preferred by a large number of test subjects across various studies.)

The main study I have in mind is "Psychoacoustic Detection Threshold of Transient Intermodulation Distortion" by Petri-Larmi et al. (1980). After much training and screening, two subjects in that study were able to reliably differentiate between a signal with no added distortion and one with an average of 0.003% (averaged over 250 ms) of added transient intermodulation distortion (TIM) on a particular piano track.

This is the table showing the study's results; subjects two and five were the "golden ears" in this case:

By the way, neither subject preferred the undistorted signal, nor could either of them say which signal was distorted. They were simply able to discern the difference to a statistically significant extent.

It's a bit complicated here, since the distortion was averaged over a 250 ms window of a complex signal and, being TIM, was no doubt very nasty. In other words, it's impossible to translate this finding into a THD or IMD figure for a sine wave.

And then there's the whole problem of THD being a more or less meaningless figure in itself, as the spectrum of the distortion and its relationship to the signal envelope (e.g. peaks vs zero crossings) are so much more important (hence the far greater audibility of, e.g., crossover distortion compared with tube saturation).
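To illustrate why a THD figure on its own says so little, here is a rough Python sketch that passes a full-scale sine through two hypothetical transfer curves with similar THD (roughly 1%) and compares where the distortion lands. The dead zone is a crude stand-in for crossover distortion and the tanh curve a stand-in for gentle saturation; both curves and their parameter values are my own assumptions, and real tube stages are often asymmetric with strong even harmonics, which this symmetric curve does not produce.

```python
import math

N = 4096  # samples in one period of the test sine

def harmonic_amplitudes(shaper, max_harmonic=9):
    """Amplitudes of harmonics 1..max_harmonic of shaper(sin), via a direct DFT."""
    y = [shaper(math.sin(2 * math.pi * n / N)) for n in range(N)]
    amps = []
    for k in range(1, max_harmonic + 1):
        re = sum(y[n] * math.cos(2 * math.pi * k * n / N) for n in range(N))
        im = sum(y[n] * math.sin(2 * math.pi * k * n / N) for n in range(N))
        amps.append(2 / N * math.hypot(re, im))
    return amps

def crossover(x, dead_zone=0.02):
    # Hypothetical class-B-style dead zone: output stays at zero near zero crossings.
    return math.copysign(max(abs(x) - dead_zone, 0.0), x)

def soft_saturation(x, drive=0.35):
    # Hypothetical tanh curve, normalised to unity small-signal gain.
    return math.tanh(drive * x) / drive

def summarise(amps):
    """Return (THD up to the 9th harmonic, RMS of 5th..9th relative to fundamental)."""
    fund = amps[0]
    thd = math.sqrt(sum(a * a for a in amps[1:])) / fund
    high = math.sqrt(sum(a * a for a in amps[4:])) / fund
    return thd, high

for name, curve in (("crossover", crossover), ("soft saturation", soft_saturation)):
    thd, high = summarise(harmonic_amplitudes(curve))
    print(f"{name}: THD {100 * thd:.2f}%, 5th-9th share of distortion RMS {high / thd:.2f}")
```

With these assumed parameters the dead zone spreads its distortion over the 3rd, 5th, 7th and 9th harmonics with slowly falling amplitudes (and concentrates it at the zero crossings, where the signal is quietest), while the tanh curve puts almost all of it in the 3rd harmonic, even though the two THD figures are close.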

But I think it would be fair to say that some types of nonlinear distortion below 0.01% will be audible to some people on some systems.

How do you expect to hear THD of 0.05% or even 0.005% when loudspeakers have THD of approx. 0.5% (above approx. 200 Hz)?

I tend to agree, but again I'm hesitant to be so certain. A decent single speaker might generate 0.5% THD at 90 dB RMS, but most of that distortion will be very well-masked 2nd-order distortion, and most music in most homes isn't listened to as loud as 90 dB RMS.

Transducer distortion also tends to drop to relatively low levels as signal level decreases. 0.5% at 90 dB may translate to 0.1% or 0.2% at 80 dB, which is still moderately loud in most living rooms (if we assume two speakers, a listening distance of about 3 metres and a reverberation time (RT60) of about 0.5 s, this will likely produce about 80 dB RMS at the listening position).
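The 0.5% to 0.1-0.2% step can be roughed out with a simple model. The sketch below assumes the speaker's distortion comes from a single memoryless power-law term: an order-n term produces an n-th harmonic whose ratio to the fundamental grows as amplitude^(n-1), so each dB of level change moves the distortion ratio by (n-1) dB. Real drivers mix several orders and have suspension and motor nonlinearities that don't follow one power law, so treat this as a back-of-envelope check only; the function name is hypothetical.

```python
def scale_distortion(pct_ref, level_ref_db, level_db, order=2):
    """Harmonic distortion (%) at level_db, assuming a single order-n
    power-law nonlinearity referenced to pct_ref at level_ref_db."""
    return pct_ref * 10 ** ((order - 1) * (level_db - level_ref_db) / 20)

# 0.5% at 90 dB, listened to 10 dB quieter:
print(round(scale_distortion(0.5, 90, 80, order=2), 3))  # 0.158 (pure 2nd order)
print(round(scale_distortion(0.5, 90, 80, order=3), 3))  # 0.05  (pure 3rd order)
```

A pure 2nd-order mechanism lands at about 0.16%, in line with the 0.1-0.2% estimate, while higher-order mechanisms fall away even faster with level.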

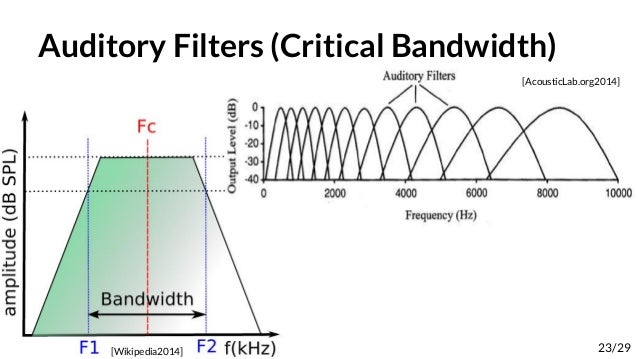

Moreover, as signal level decreases, the masking threshold for components higher in frequency than the masker (i.e. higher-order harmonic distortion or high-frequency IMD) falls faster than the masker itself, because the upward spread of masking narrows at lower levels.
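A simplified way to see the level dependence: in Terhardt's spreading model (the form also used in ITU-R BS.1387 / PEAQ), the upper slope of the masker's excitation is roughly 24 + 230/f - 0.2·L dB/Bark, so it gets steeper as the masker level L drops. The Python sketch below uses that slope and the Zwicker-Terhardt Hz-to-Bark approximation to compare a 3rd harmonic at 0.1% (-60 dB) of a 1 kHz tone played at 90 dB and at 70 dB. It ignores the absolute threshold, the offset between excitation and masked threshold, and multiple maskers, so the "margin" is only a relative indicator, not an audibility prediction; the function names are hypothetical.

```python
import math

def hz_to_bark(f_hz):
    # Zwicker & Terhardt approximation of critical-band rate.
    return 13 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500) ** 2)

def upper_slope_db_per_bark(masker_hz, masker_db):
    # Level-dependent upper slope: a shallower slope means more upward masking.
    return 24 + 230 / masker_hz - 0.2 * masker_db

def spread_level_db(masker_hz, masker_db, probe_hz):
    """Masker excitation spread upward to probe_hz (single masker, simplified)."""
    dz = hz_to_bark(probe_hz) - hz_to_bark(masker_hz)
    return masker_db - upper_slope_db_per_bark(masker_hz, masker_db) * dz

for level in (90, 70):
    harmonic_db = level - 60  # 3rd harmonic at 0.1% of the fundamental
    margin = harmonic_db - spread_level_db(1000, level, 3000)
    print(f"masker {level} dB: harmonic sits {margin:+.1f} dB relative to spread excitation")
```

Under these assumptions the same 0.1% harmonic sits well below the masker's spread excitation at 90 dB but above it at 70 dB, which is the direction of the effect described above.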

So if a DAC or amp is putting out 0.1% or even 0.01% distortion regardless of SPL at the listening position, and particularly if that distortion has a nastier spectrum (e.g. higher order) than the speakers' distortion (which tends to be lower order and therefore much better masked), I can at least see an argument that it might be audible.
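One rough way to frame this: find the listening level below which a level-independent electronics distortion exceeds the speaker's own. The sketch assumes, as a simplification, that the speaker's distortion follows a single order-n power law referenced to 0.5% at 90 dB, so its ratio changes by (n-1) dB per dB of level, while the DAC or amp ratio stays fixed. It compares percentages only and says nothing about the different spectra, which is the more important point; the function name and defaults are hypothetical.

```python
import math

def crossover_level_db(electronics_pct, speaker_pct_ref=0.5, level_ref_db=90.0, order=2):
    """Level (dB) below which a fixed electronics distortion exceeds the speaker's,
    assuming speaker distortion = speaker_pct_ref * 10**((order-1)*(L-level_ref_db)/20)."""
    return level_ref_db + 20 * math.log10(electronics_pct / speaker_pct_ref) / (order - 1)

for pct in (0.1, 0.01):
    print(f"{pct}% electronics distortion exceeds the speaker's below "
          f"~{crossover_level_db(pct):.0f} dB (2nd-order speaker model)")
```

With a purely 2nd-order speaker, a 0.1% DAC or amp would out-distort the speaker below roughly 76 dB and a 0.01% one below roughly 56 dB; a steeper (higher-order) speaker mechanism would push those levels up.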

Anyway, I still think -120 dB is absurdly low, even for the strict requirement. @flipflop, I'm not sure I understand where this figure comes from. It doesn't seem to be mentioned in the NwAvGuy article that you link; perhaps you could explain more?
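For reference when comparing the percentage and dB figures in this thread, a distortion ratio converts to dB relative to the signal as 20·log10(ratio). A minimal Python sketch (function names are my own):

```python
import math

def pct_to_db(pct):
    """Distortion ratio in percent -> level relative to the signal in dB."""
    return 20 * math.log10(pct / 100)

def db_to_pct(db):
    """Level relative to the signal in dB -> distortion ratio in percent."""
    return 100 * 10 ** (db / 20)

for pct in (0.5, 0.01, 0.003):
    print(f"{pct}% = {pct_to_db(pct):.1f} dB")
print(f"-120 dB = {db_to_pct(-120):.4f}%")
```

So -120 dB corresponds to 0.0001%, about 30 dB below the 0.003% TIM figure from the Petri-Larmi study and 40 dB below 0.01%.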

Also, I'm not sure what the "lenient" requirement is supposed to represent exactly, but I think it's quite reasonable if the criterion is "never sounding distorted" (even if certain types of distortion at that level can still be discerned from an undistorted signal with certain types of music and at certain listening levels).