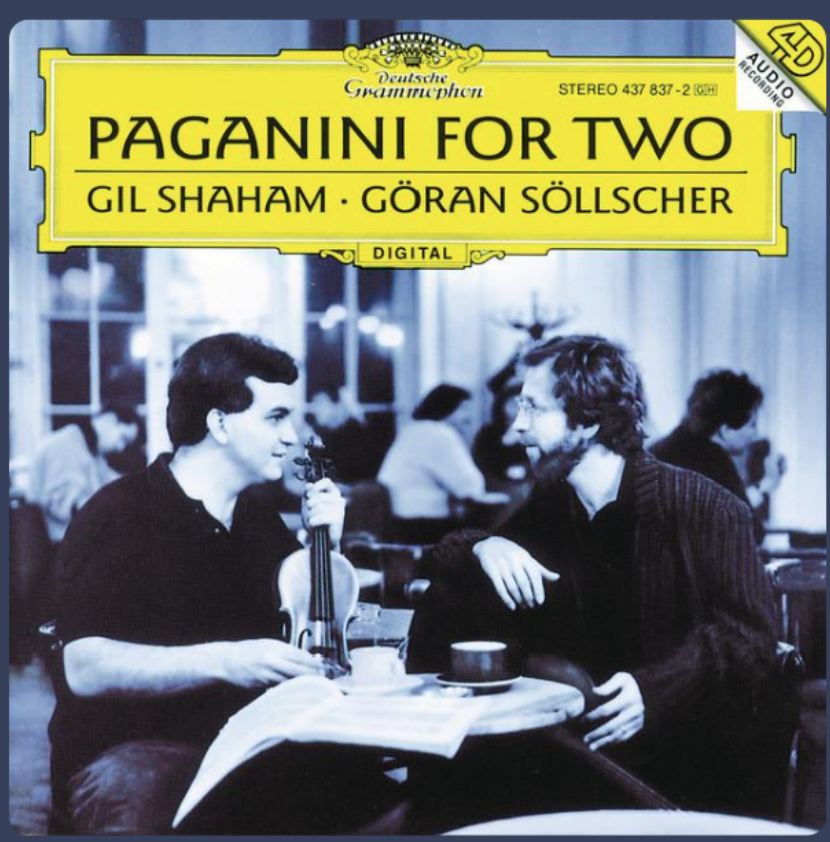

Here's a good album to test with:

The same album is on Spotify, Qobuz, Tidal and Amazon.

Web links:

open.qobuz.com

https://music.amazon.com/albums/B000V8E5SE?ref=dm_sh_WflChC98b13P2clLld3uXBI2U

I don't hear any differences (not blind testing), except that the Tidal version seemed slightly louder.

Great album anyway if you like Paganini's music.