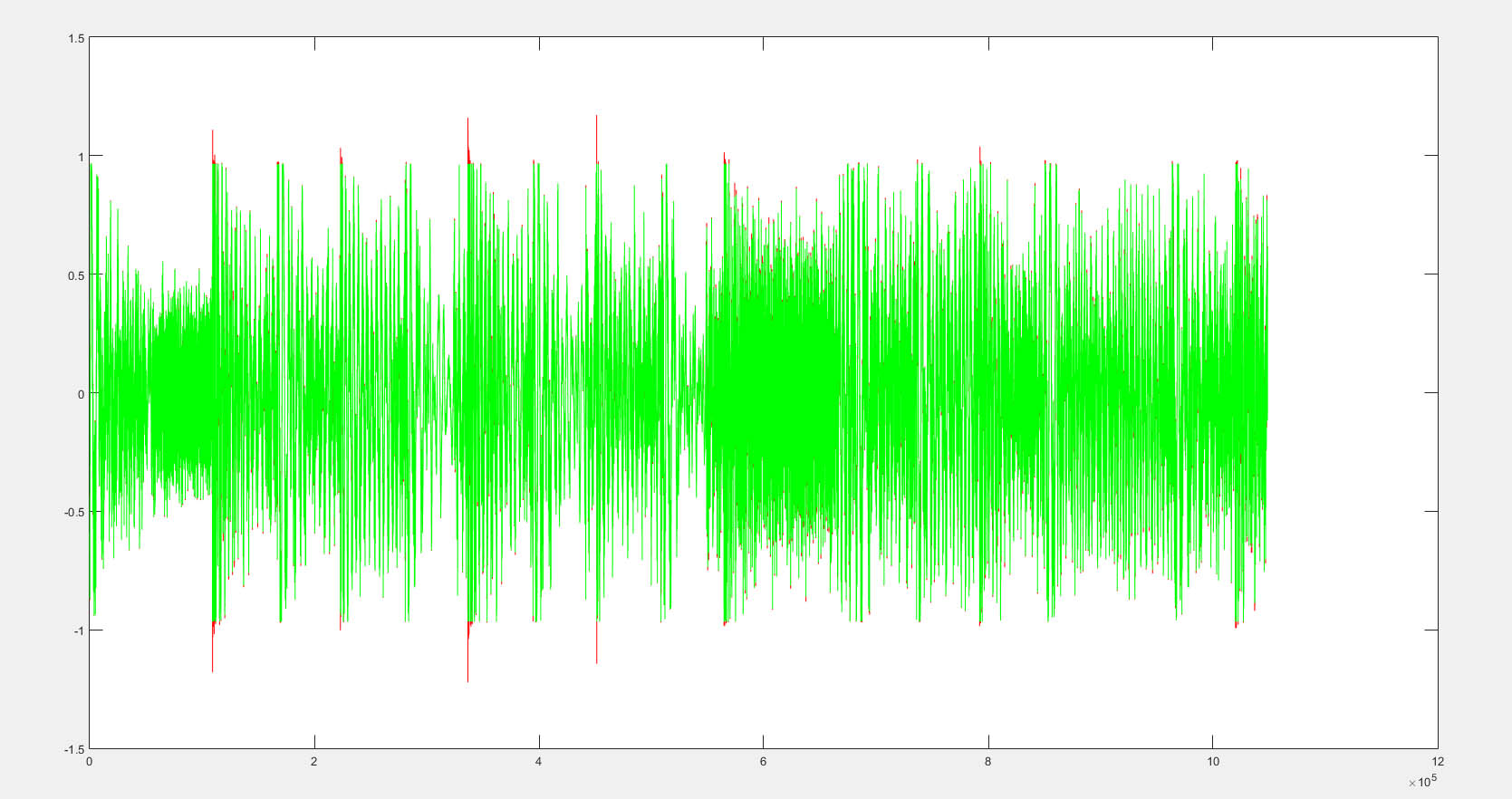

Here, let me help. I took a very popular CD track from the 2000s and interpolated it accurately by a factor of 8.

First, I plotted the interpolated values in red. Then I plotted the ORIGINAL values over that in green, with each sample repeated so the green covers the red wherever the interpolated data isn't bigger than the original.

Now, any red that's above 1 or below -1 is an actual ISP (inter-sample peak). No error, no muss, no fuss, just an ISP that many DACs simply cannot handle. Those who persist in arguing that they shouldn't exist don't matter, because here is concrete proof on a popular, successful track (that I happen to like, even), even across the alien nation!

As you can plainly see, there are some pretty big ISPs. Some DACs, including those in some computers, simply will not reproduce that. Yes, you can mistakenly use a digital volume control and raise your overall noise floor, but I'm not a big fan of that, either. The answer is to produce responsibly, and not do that. Anything else is being mean to the client, whose material will not sound the same on all machines.

Note: The red spots that do not exceed an absolute value of 1 are not an error. You expect that kind of result from an interpolation, which is, of course, how ISPs come to exist.
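For anyone who wants to try this at home, here is a minimal sketch of the same idea in plain Python. It is not the code used for the plot above. The Hann-windowed sinc interpolator, its `half_width` of 32 samples, and the test signal (a sine at a quarter of the sample rate, phased so every sample lands at exactly +1 or -1) are all assumptions chosen for illustration, and the helper names are made up. Swap in samples from a real track, scaled to the range -1 to 1, to look for ISPs there.

```python
import math

def windowed_sinc(d, half_width):
    """Hann-windowed sinc kernel at offset d (measured in input samples)."""
    if abs(d) >= half_width:
        return 0.0
    if d == 0.0:
        return 1.0
    window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))
    return window * math.sin(math.pi * d) / (math.pi * d)

def upsample(samples, factor=8, half_width=32):
    """Interpolate `samples` by `factor` using a windowed-sinc kernel."""
    n = len(samples)
    out = []
    for m in range(n * factor):
        t = m / factor                      # output time in input-sample units
        centre = int(math.floor(t))
        lo = max(0, centre - half_width + 1)
        hi = min(n - 1, centre + half_width)
        acc = 0.0
        for k in range(lo, hi + 1):
            acc += samples[k] * windowed_sinc(t - k, half_width)
        out.append(acc)
    return out

FACTOR = 8
HALF_WIDTH = 32

# Illustrative test signal: every sample sits at exactly +1 or -1 (full scale),
# but the underlying sine peaks halfway between samples.
samples = [1.0, 1.0, -1.0, -1.0] * 64

upsampled = upsample(samples, FACTOR, HALF_WIDTH)       # the "red" trace
held = [s for s in samples for _ in range(FACTOR)]      # the "green" trace

# Ignore the ends, where the kernel runs off the edge of the data.
edge = HALF_WIDTH * FACTOR
middle = range(edge, len(upsampled) - edge)

# An ISP is any interpolated value whose magnitude exceeds full scale.
isp_positions = [m for m in middle if abs(upsampled[m]) > 1.0]
peak = max(abs(upsampled[m]) for m in middle)

print("largest original sample:", max(abs(s) for s in samples))
print("largest interpolated value:", round(peak, 3))
print("headroom needed (dB):", round(20 * math.log10(peak), 2))
```

With this test signal the interpolated peak should come out close to 1.41, about 3 dB over full scale, even though no original sample exceeds 1. Plotting `upsampled` in red with `held` drawn over it in green gives the same kind of picture as above.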
