
I won't get it for another week. Can someone tag me when the Zero 2 review is out, in case I miss it?


Do you know how exactly the preference rating scores are calculated by any chance?

It turns out, I do. It's in the Harman articles, which I believe you have access to.

"A Statistical Model That Predicts Listeners’ Preference Ratings of In-Ear Headphones: Part 2 – Development and Validation of the Model"

"A Statistical Model that Predicts Listeners’ Preference Ratings of Around-Ear and On-Ear Headphones"

It's a bit different between in-ears and around-ears.

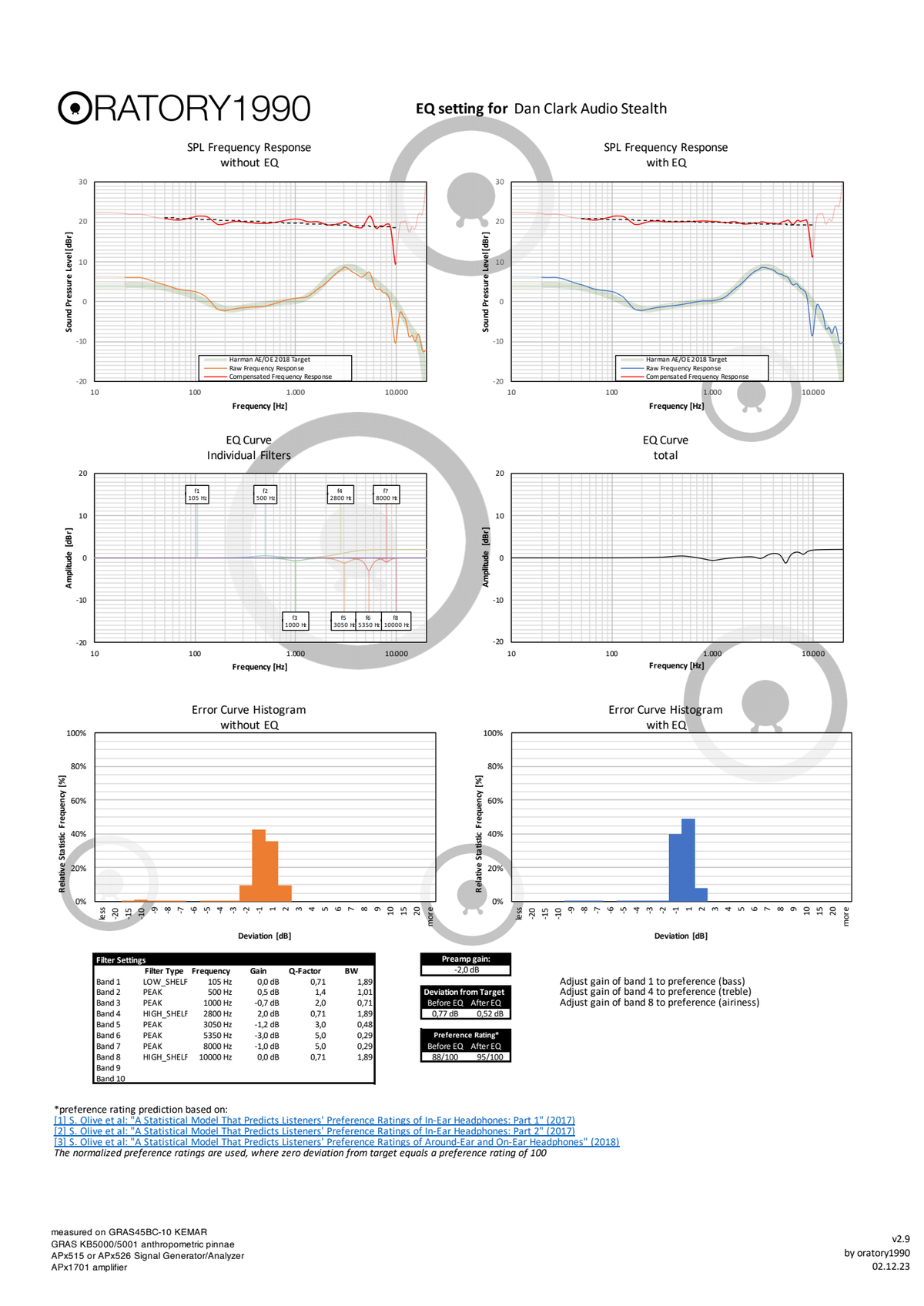

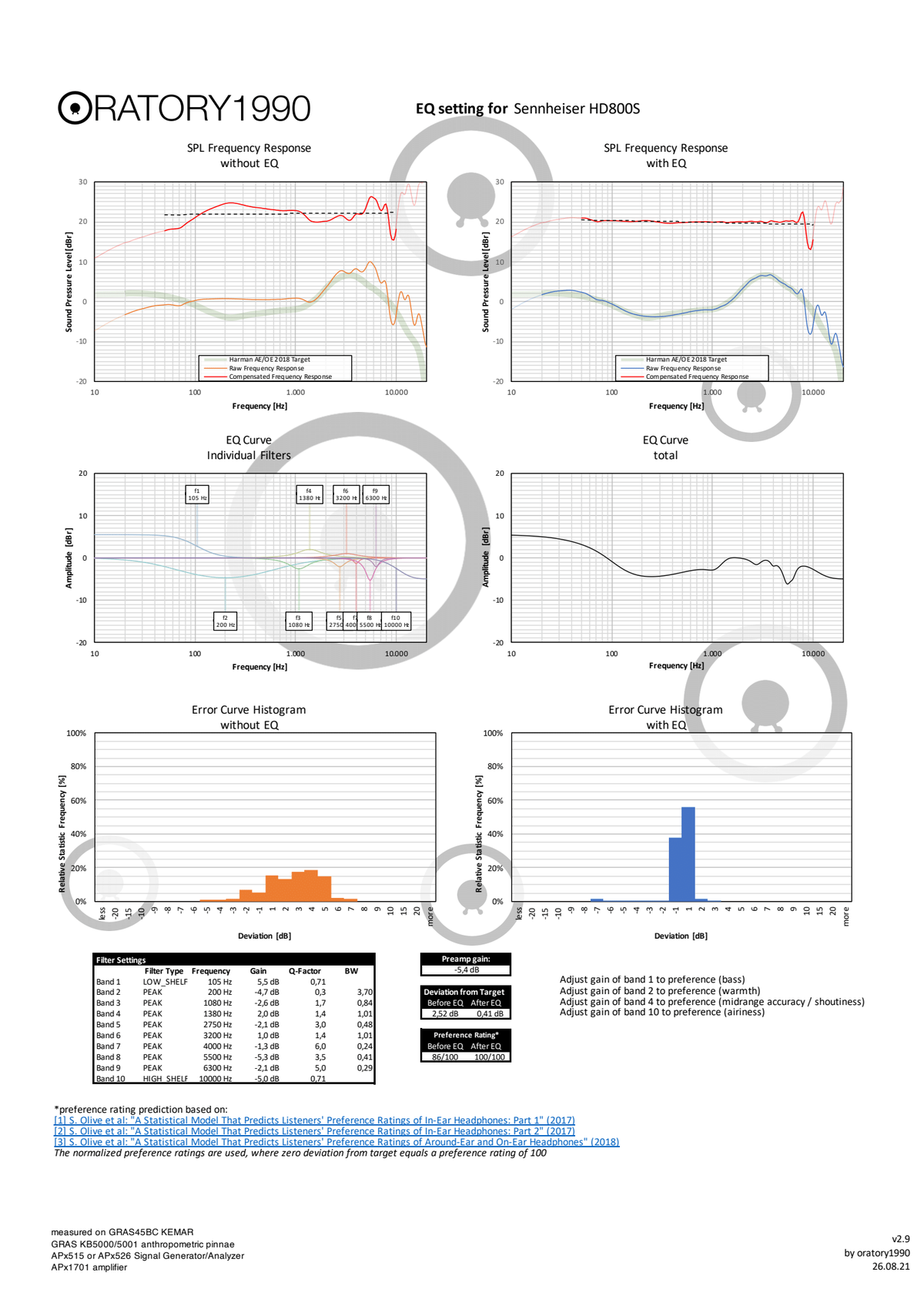

I looked at it in detail. There are 2 independent variables that affect the preference rating: the std-dev of the error curve, and the absolute value of the slope of the regression line of the error curve. Both contribute negatively to the preference score. The scores for the Stealth and HD800S come directly from oratory1990:

Ah, so I was somewhat right. So the slope is much more important than we think.

So maybe the preference is not so much about matching the target at every turn. Maybe then it might not be a good idea to look at deviations from the target curve and claim those deviations point to an objectively lower preference? Because, if my understanding is not wrong, it looks like even if a headphone is deviating from the target curve, if it is doing that in a balanced way that keeps the slope of the regression line flat, the predicted preference of that headphone might not be very low after all.

Interesting. The target thinks the extra 3 dB bass boost is objectively incorrect and scores accordingly.

You are sure you are getting a good seal, though?

Interesting. After reading this review I actually ordered the Zero 2 because of the extra bass they are supposed to have compared to the first version, which seems a bit lacking in the bass area. Just received them today, and my first impression is that they still don't have enough bass.

Apparently not. Changed the default red eartips to yellow (the biggest available) and the sound changed completely.

Same experience: I had to change to foam ear tips, and then the bass became excellent.

Apparently not. Changed the default red eartips to yellow (the biggest available) and the sound changed completely. IMHO they sound pretty balanced now, and I don't find the bass excessive (but at this point I have used them for less than an hour). Also, the bass extends pretty deep; it goes much lower compared to the TRUTHEAR x Crinacle Zero. Note that this is about the Zero: 2, not the first version of the Zero (which this topic is supposed to be about, I admit).

Anyone have experience with the fit of these compared to the Tangzu Wan'er, which has a tiny shell and shallow fit which won't stay in my ears?

I personally like the Wan'er better, as it fits me fine while I struggle with this Zero.

Triggered a question…

So we have the Harman target to maximize user FR preference.

For IEMs, is there a similar “shape target” to maximize user fit preference (tradeoff between comfort and “audio-friendly” fit)?

So then the question becomes: what is the actual user preference for either a lower s.d. of the error curve OR a flatter error regression line? That has probably not been tested.

I looked at it in detail. There are 2 independent variables that affect the preference rating: the std-dev of the error curve, and the absolute value of the slope of the regression line of the error curve. Both contribute negatively to the preference score.

Looking at the error histograms of both headphones, the Stealth clearly has a much smaller std-dev, so it is the clear winner in that metric. For the absolute slope, the HD800S is almost flat while the Stealth has a slightly larger slope, which seems to close the gap left by the std-dev score.

When you are training a neural network, it produces these weights, and sometimes, if the model is small, you expect these weights to make sense, to have a meaning of sorts. They usually don't, yet the model works anyway. This feels a bit like that to me: the deviations from the curve are wildly different, but the predicted preferences are very similar.

So maybe the preference is not so much about matching the target at every turn. Maybe then it might not be a good idea to look at deviations from the target curve and claim those deviations point to an objectively lower preference? Because, if my understanding is not wrong, it looks like even if a headphone is deviating from the target curve, as long as it does so in a balanced way that keeps the slope of the regression line flat, the predicted preference of that headphone might not be very low after all.
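To make the two variables concrete, here is a rough Python sketch of how they could be computed from an error curve (measured response minus target, in dB). Everything in it is an assumption for illustration: the function names, the 50 Hz to 10 kHz range, the two made-up error curves, and especially the intercept and weights, which are equal placeholders and not the coefficients from the Harman papers.

```python
import numpy as np

def error_metrics(freq_hz, error_db):
    """Return (std-dev of the error curve, absolute slope of its regression line).

    The slope is fitted against log10(frequency), so its unit is dB per decade.
    """
    freq_hz = np.asarray(freq_hz, dtype=float)
    error_db = np.asarray(error_db, dtype=float)
    sd = float(np.std(error_db, ddof=1))
    slope, _intercept = np.polyfit(np.log10(freq_hz), error_db, 1)
    return sd, abs(float(slope))

def predicted_preference(sd, abs_slope, intercept=100.0, w_sd=10.0, w_slope=10.0):
    """Linear score in which both variables count against the headphone.

    intercept, w_sd and w_slope are PLACEHOLDERS, not the published values.
    """
    return intercept - w_sd * sd - w_slope * abs_slope

# Log-spaced frequency grid (assumed range, for illustration only).
freq = np.logspace(np.log10(50), np.log10(10_000), 200)
x = np.log10(freq)
t = (x - x.min()) / (x.max() - x.min())  # 0..1 across the grid

# Made-up headphone A: smooth error curve, but tilted by 1.5 dB per decade.
error_a = 1.5 * (x - x.mean())
# Made-up headphone B: wigglier error curve (+/- 2 dB), but balanced around the target.
error_b = 2.0 * np.cos(2 * np.pi * 4 * t)

sd_a, slope_a = error_metrics(freq, error_a)
sd_b, slope_b = error_metrics(freq, error_b)
score_a = predicted_preference(sd_a, slope_a)
score_b = predicted_preference(sd_b, slope_b)

print(f"A: sd={sd_a:.2f} dB, |slope|={slope_a:.2f} dB/decade, score={score_a:.1f}")
print(f"B: sd={sd_b:.2f} dB, |slope|={slope_b:.2f} dB/decade, score={score_b:.1f}")
```

With these placeholder weights, curve B deviates more from the target point by point (larger std-dev) but still ends up with the higher score because its regression line is flat. That is the trade-off described above; the real numbers depend on the coefficients in the articles.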

The preference rating equation is the result of a regression analysis done on the preference data. The weighting of the std-dev and the absolute slope is about equal in the equation that calculates the score.

I would guess a thinner nozzle and a smaller body would maximize compatibility, as this would fit people with small ear canals, and people with bigger canals can just use bigger tips. Most of the fit complaints I've seen recently on this forum have to do with nozzles being too wide.