Statistics of ABX Testing

By Amir Majidimehr

ABX is by far the most talked about type of listening test on the Internet for demonstrating an audible difference between two devices or two audio files. As the name implies, the test involves two inputs, "A" and "B," and the listener is asked to vote whether X (randomly chosen to be either "A" or "B") is closer to "A" or "B." People tend to think this is a rather new type of test, but its history dates back to a paper published in the Journal of the Acoustical Society of America circa 1950 (yes, 1950!) by two Bell Labs researchers, Munson and Gardner. Here is part of the abstract (http://scitation.aip.org/content/asa/journal/jasa/22/5/10.1121/1.1917190):

"The procedure, which we have called the 'ABX' test, is a modification of the method of paired comparisons. An observer is presented with a time sequence of three signals for each judgment he is asked to make. During the first time interval he hears signal A, during the second, signal B, and finally signal X. His task is to indicate whether the sound heard during the X interval was more like that during the A interval or more like that during the B interval."

The modern-day instantiation is a bit different in that the user has control of the switching, but its main characteristic remains that of a "forced choice" listening test. Much like a multiple choice exam in school, the listener can get the right answer in one of two ways: actually matching X to the right input ("A" or "B"), or guessing randomly and getting lucky. Unlike a school exam, however, we don't want to give credit for lucky guesses. We are interested in knowing whether the listener actually determined through listening that X matched one of the two inputs. If so, we know that the two inputs differed audibly.

Taken to the extreme, this is an impossible problem to solve. Take the scenario of a listener voting correctly 6 out of 10 times. Did he guess randomly and get lucky, or did he really hear the difference between "A" and "B" 6 out of 10 times? Either outcome is possible.
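To get a feel for how often luck alone produces such a score, here is a minimal simulation sketch in Python. The function name `simulate_guessers`, the number of simulated sessions, and the seed are illustrative assumptions and are not taken from any ABX tool.

```python
import random

def simulate_guessers(sessions: int, trials: int = 10, needed: int = 6, seed: int = 1) -> float:
    """Fraction of guess-only sessions that score at least `needed` correct."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    lucky = 0
    for _ in range(sessions):
        # Each trial is a coin flip: a pure guesser is right half the time.
        correct = sum(rng.random() < 0.5 for _ in range(trials))
        if correct >= needed:
            lucky += 1
    return lucky / sessions

fraction = simulate_guessers(100_000)
print(f"{fraction:.1%} of guess-only sessions scored at least 6 out of 10")
```

With this many simulated sessions the fraction settles near 38%, which is why a 6 out of 10 score on its own tells us very little.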

Let's note the other complexity, which is that a human tester is fallible when it comes to audio testing. What if, for example, the tester correctly matched X to "A" but clicked on the wrong button and voted it as being the same as "B"? Having taken many such tests, I can tell you that this is a common occurrence. Blind tests rely on short-term memory recall and carefulness, neither of which can be guaranteed to be there during the entire test. This and other sources of imperfection in the test fixture and methodology mean that some number of wrong answers needs to be allowed.

How much error is tolerable then? The convention in industry and research is to accept a result that has less than 5% probability of being due to chance. Inverted, we are 95% sure the outcome was intentional and not the product of lucky guesses. Why 5%? You may be surprised, but it is an arbitrary number that dates back to Fisher's 1925 book, Statistical Methods for Research Workers, where he proposed a one in 20 probability of error (5%). Is there some magic there? How about one in 19 or 21? Common sense would say those results should be just as good, but again, people stick to the 95% as if it were a commandment from above.

Adding to the confusion, people don't realize that this probability of chance is computed from discrete values and hence jumps in value. One fewer right answer may cause a jump from 3% probability of chance to 8%. There are no intermediate values between them, as there is no way to get half a right answer or some other fraction. This makes it silly to target a value like 5%, which may not even be an achievable value, or to insist that 6% is not good when the next achievable value would be 4%.
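The jumps are easy to see by listing every achievable value for a short test. The sketch below assumes pure guessing with a 50% chance per trial; the helper name `tail_probability` is introduced here only for illustration.

```python
from math import comb

def tail_probability(correct: int, trials: int) -> float:
    """Chance of scoring at least `correct` out of `trials` by pure guessing."""
    # Count the equally likely answer sequences with `correct` or more hits.
    favorable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favorable / 2**trials

# Every score from 5 to 10 in a 10-trial test and its probability of chance.
for correct in range(5, 11):
    print(f"{correct}/10 correct -> {tail_probability(correct, 10):.1%}")
```

For 10 trials the achievable values around the usual threshold are 17.2% (7 right), 5.5% (8 right) and 1.1% (9 right). Nothing lands on 5% itself.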

These issues have been the subject of much discussion in fields far wider than audio. Fisher himself opined in his 1956 book, Statistical Methods and Scientific Inference, that people should not dogmatically stick to the 5% value. His recommendation, which I agree with, is to look at the application at hand and let that determine the right threshold. In matters of life and death, for example, we may want a lower probability of chance than in something as mundane as audio. So don't be hung up on the 5% number like many people seem to be online, or even in published studies.

Computing Significance of Results

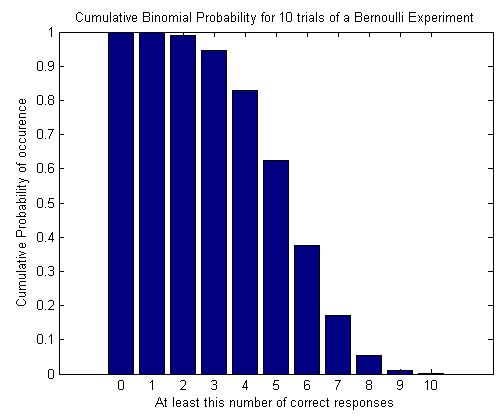

Moving beyond the threshold, let’s discuss how we compute the probability of chance itself. We take advantage of the fact that ABX testing has a distribution that is "binomial." The listener either gets the results right or wrong (hence the starting letters "bi" or two outcomes). As you have seen so far, the more right answers the listener gets, the less chances that the outcome is due to chance alone. This picture from the super paper, "Statistical Analysis of ABX Results Using Signal Detection Theory," shows this pictorially:

And here is the example the paper gives for that data:

"Fig. 2 shows that there is a particular chance involved in randomly getting s responses correct out of n trials. To be more confident that the result is not due to chance, more correct responses are needed. As an example, if there were 8 out of 10 correct responses from a Bernoulli experiment, there is a 5.5% chance that it was due to random guesses. The reader should also recognize that given random responses for 100 tests, 5 test results would show at least 8 out of 10 correct responses."

"Cumulative binomial distribution" tables (countless ones are online) and calculators can be used to find the number of right answers needed to achieve 95% confidence for a given number of trials. A much easier method, though, is to use the Microsoft Excel function BINOM.INV. It takes the same three parameters we would use in those tables: the probability of each outcome, which is 0.5; the criterion, which is 0.95 (95% confidence); and the number of trials. The returned value is the number of right answers needed to reach that criterion, that is, roughly 5% probability of chance.

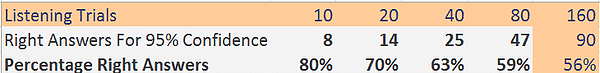

In the table below, I have computed the answer for 10, 20, 40, 80 and 160 trials:
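For readers without Excel, the same kind of lookup can be sketched in a few lines of Python. The function `binom_inv` below is a hypothetical stand-in written for this illustration: it returns the smallest count whose cumulative binomial probability reaches the criterion, which is my understanding of what the spreadsheet function reports.

```python
from math import comb

def binom_inv(trials: int, p: float, criterion: float) -> int:
    """Smallest count k whose cumulative probability P(X <= k) reaches `criterion`."""
    cumulative = 0.0
    for k in range(trials + 1):
        # Probability of exactly k right answers out of `trials`.
        cumulative += comb(trials, k) * p**k * (1 - p) ** (trials - k)
        if cumulative >= criterion:
            return k
    return trials  # only reached if rounding keeps the sum just under the criterion

for trials in (10, 20, 40, 80, 160):
    needed = binom_inv(trials, 0.5, 0.95)
    print(f"{trials:>3} trials: {needed:>2} right answers ({needed / trials:.1%})")
```

Because the values are discrete, the probability of chance at exactly the returned score can sit slightly above 5%, as with 8 out of 10 at 5.5%.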

Notice something fascinating: as the number of trials increases, the percentage of right answers needed to achieve less than 5% probability of chance shrinks. So much so that at 160 trials, you only need 56% of the answers to be right! Let me repeat: you only need 56% right answers to have high confidence that the results are not due to chance. At the risk of really blowing your mind, we only need 95 right answers out of 160 to achieve 99% confidence. This represents only 59% right answers!
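A rough way to see why the required percentage shrinks, assuming the usual normal approximation to the binomial is acceptable (it is only approximate at small trial counts): with n trials of pure guessing, the number of right answers has a mean of n/2 and a standard deviation of sqrt(n)/2. The fraction of right answers needed for one-sided 95% confidence is then approximately

$$
\frac{k_{95}}{n} \approx \frac{1}{2} + \frac{1.645}{2\sqrt{n}}
$$

where k_95 is just a label introduced here for the required number of right answers and 1.645 is the one-sided 95% point of the normal distribution. For n = 160 this gives about 0.565, in line with the 56% figure. Replacing 1.645 with 2.326 for 99% confidence gives about 0.592. The margin above 50% shrinks with the square root of the number of trials.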

The non-intuitive nature of this statistical measure is what gave me the motivation for this article. In 2014, the Audio Engineering Society paper "The Audibility of Typical Digital Audio Filters in a High-Fidelity Playback System" was published, in which the authors tested whether listeners could tell the difference between 24-bit/192 kHz music and versions converted to 44.1/48 kHz sampling at 24 and 16 bits. The paper stated that the threshold for achieving 95% statistical significance was 56% right answers. This set off an immediate reaction among online skeptics, who cried foul by saying 56% is not much better than the "50% probability of a lucky coin toss." Never mind that the paper in question was peer reviewed and won the award for best paper at the AES conference. That alone should have told these individuals they were barking up the wrong tree, but online arguments being what they are, folks started to rally behind this mistaken notion.

The study used 160 trials, which is the reason I also included that number in the above table. As we have already discussed, we indeed only need 56% right answers to reduce the probability of chance to about 5%. So the authors were quite correct and it was the online pundits who were mistaken.

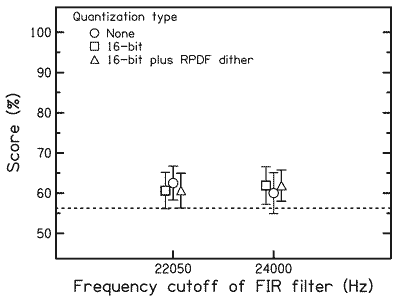

Another source of confusion was that people thought 56% right answers is what the test achieved. That was also wrong and indicated people had not understood the test results as outlined in the paper:

The dashed line is the 95% confidence line (at 56% right answers). The vertical bars show the percentage of right answers in each of the 6 tests (22050 corresponds to 44.1 kHz sampling and 24000 to 48 kHz). With the exception of one test, the mean in the others easily cleared the 95% confidence threshold. The authors justifiably state the same:

“The dotted line shows performance that is significantly different from chance at the p<0.05 level [5% probability of chance] calculated using the binomial distribution (56.25% correct comprising 160 trials combined across listeners for each condition).”

Now let's look at another report, one that online pundits have not questioned: the AES Journal engineering report "Audibility of a CD-Standard A/D/A Loop Inserted into High-Resolution Audio Playback" by Meyer and Moran. Here is a breakdown of some of the results:

"...audiophiles and/or working recording-studio engineers got 246 correct answers in 467 trials, for 52.7% correct.

Females got 18 in 48, for 37.5% correct.

...The “best” listener score, achieved one single time, was 8 for 10, still short of the desired 95% confidence level."

We notice the first mistake right away: only the percentage of right answers is given, not the statistical significance. Rightly or wrongly, the authors appear to want to sway the reader into thinking that these are small percentages near the 50% "probability of chance," and hence that listeners failed to tell the difference between DVD-A/SACD and the 16-bit/44.1 kHz converted version. As we have been discussing, the percentage of right answers does not provide an intuitive feel for how confident we can be in the results. Statistical analysis should have been used, not percentages.

Now let's look at how the audiophiles and recording engineers did: 246 correct out of 467 trials. Converting this to statistical significance gives roughly 90% confidence that the results were not due to chance. That is a very different animal than "52.7%" right answers. Just five more right answers would have given us 95% confidence! Is a roughly 10% probability that this outcome was due to chance too high? You can be the judge of that, but it is a judgment you can only make when the statistical analysis is presented, not just the percentage of right answers.
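The conversion can be checked with a short sketch. It assumes a one-sided test against pure guessing and counts the observed score itself as part of the tail; other conventions shift the figure by a couple of percentage points, so treat the output as a sanity check on the rounded numbers rather than a definitive value. The helper name `tail_probability` is illustrative.

```python
from math import comb

def tail_probability(correct: int, trials: int) -> float:
    """Chance of scoring at least `correct` out of `trials` by pure guessing."""
    # Exact integer count of qualifying outcomes, divided by all 2**trials outcomes.
    favorable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favorable / 2**trials

p_chance = tail_probability(246, 467)
print(f"246/467 correct: {p_chance:.1%} probability of chance, "
      f"{1 - p_chance:.1%} confidence")
```

Under this strict "at least 246" convention the probability of chance works out to roughly 13%, a little above the rounded 10%. Either way, it is far more informative than "52.7% correct."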

The next bit is the statistics for the female testers: 37.5% correct. To a layperson that may seem to be even more random than random. But this couldn't be further from the truth. Imagine the extreme case of getting zero right answers. Does that mean the listeners could not distinguish the files? The answer is most definitely no. Indeed, over any reasonable number of trials it means practically 0% probability of chance! Why did the tester get zero right? A simple explanation is that he may have read the voting instructions wrong and, while he heard the differences correctly, voted the wrong way. The authors of the previously mentioned paper, "Statistical Analysis of ABX Results Using Signal Detection Theory," give credence to the same factor:

"It is also important to consider the reversal effect. For example, consider a subject getting 1 out of 10 responses correct. According to the cumulative probability, it may be tempting to say that this outcome is very likely: 99.9% in this case. However, consider that your test subject has been able to incorrectly identify 'X' 9 out of 10 times. The cumulative probability shows the likelihood of getting at least 1 out of 10 correct responses. The binomial probability graph shows that to get exactly 1 out of 10 correct responses has a probability of 0.97%. It is possible that the subject has reversed his/her decision criteria. Rather than identifying X=A as X=A, the subject has consistently identified X=A as X=B. The difference in the stimuli was audible regardless of the subjects possible misunderstanding of the ABX directions or labeling."

In that regard, getting 37.5% right is itself notable and worth investigating.
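One simple way to account for the reversal effect is to look at the other end of the distribution: how likely is a score this low if the listener were purely guessing? The sketch below is a hedged illustration of that idea, not the method used in either paper, and the helper name `lower_tail_probability` is made up for this example.

```python
from math import comb

def lower_tail_probability(correct: int, trials: int) -> float:
    """Chance of scoring `correct` or fewer out of `trials` by pure guessing."""
    favorable = sum(comb(trials, k) for k in range(0, correct + 1))
    return favorable / 2**trials

# The quoted 1-out-of-10 example and the 18-out-of-48 score reported for female listeners.
for correct, trials in ((1, 10), (18, 48)):
    p_low = lower_tail_probability(correct, trials)
    print(f"{correct}/{trials} correct or worse: {p_low:.1%} probability by guessing")
```

For 1 out of 10 the lower tail is about 1.1%, and for 18 out of 48 it comes out at about 5 to 6%, close to the conventional threshold.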

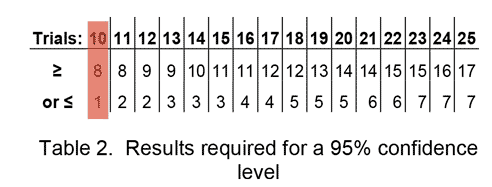

The last bit in Meyer and Moran data summary is also puzzling. That the one person who achieved 8 out of 10 answers also did not meet the confidence bar. As I noted in the quote in above paper, and shown in this table from it:

the probability of chance is 5.5% as opposed to 5%. How could that small difference be enough to dismiss the outcome as not significant? Let's remember again that there is no magic in the 5% number. As the table shows, 8 out of 10 does give us high confidence that the results were not due to chance.

Summary

Audio listening tests may seem simple, but there is much complexity in conducting them and analyzing their results. That we have a pair of ears does not qualify us to run them or to interpret their results. The statistical analysis of ABX test results is one such aspect. It seems that authors of tests like Meyer and Moran have farmed out this work instead of owning it and explaining it in the paper. The result is a source of confusion for people who run with the talking points of these reports as opposed to really digging deep and understanding the results.

I wrote this article when I realized how little of this information exists and how much confusion abounds as a result. While many run with "95% confidence" or "p<.05," their understanding rarely goes below this superficial level. Hopefully with this article I have filled the hole in our collective knowledge in this area.

Disclaimer

I have attempted to heavily simplify this topic in order to get the main points across. More rigorous analysis may be called for before confidence ratings are trusted. For example, you need to make sure the inputs were truly randomized. The paper "Statistical Analysis of ABX Results Using Signal Detection Theory" is a super review of ABX testing in general and of this point in particular.

References

"Audibility of a CD-Standard A/D/A Loop Inserted into High-Resolution Audio Playback," E. Brad Meyer and David R. Moran, Engineering Report, J. Audio Eng. Soc., Vol. 55, No. 9, 2007 September

"The Audibility of Typical Digital Audio Filters in a High-Fidelity Playback System," Helen M. Jackson, Michael D. Capp, and J. Robert Stuart, Convention Paper, Presented at the 137th AES Convention, 2014

"Statistical Analysis of ABX Results Using Signal Detection Theory," Jon Boley and Michael Lester, Presented at the 127th Convention 2009 October 9–12

"High Resolution Audio: Does It Matter?," Amir Majidimehr, Widescreen Review Magazine, January 2015
