This is a technical post aimed at people who like to tinker with electronics and software. Nothing really useful has come out of it (so far).

Like many others before me, I thought it would be neat to get multichannel audio working on the BeagleBone Black. It could serve as the basis for a custom USB DAC (I'm envisioning a desktop DAC with 2 balanced main channels, 2 SE sub channels and 2 headphone channels, all with different filters, and with a volume knob controlling only the main and sub outputs) or for DIY active speakers fed via AES67, for example.

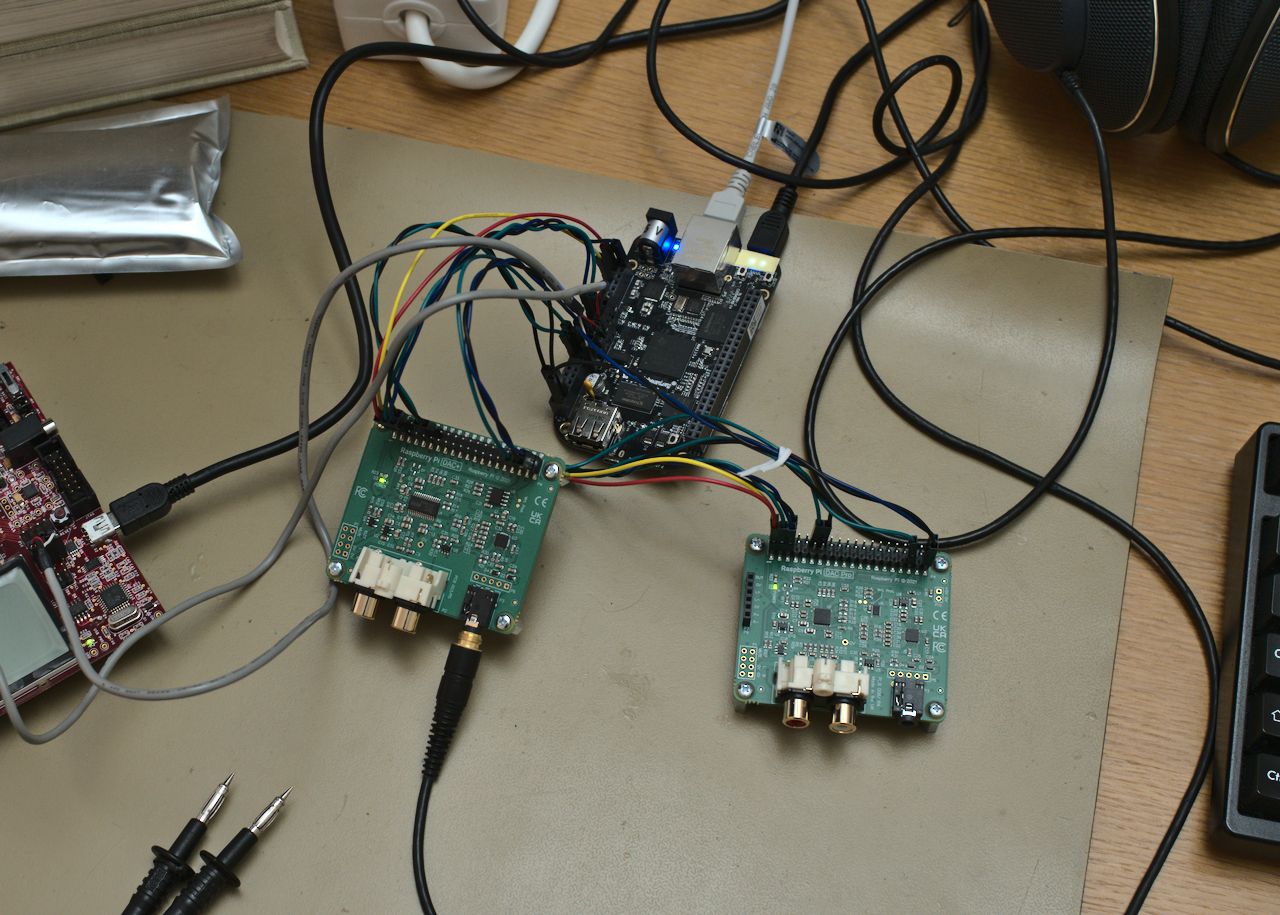

So I tried it. Here's my experimental setup:

(The larger board on the left with the two gray cables going to it I'm just using as a USB<->3.3V serial converter.)

Connected to the BeagleBone Black are a Raspberry Pi DAC+ (TI PCM5122 codec) and a Raspberry Pi DAC Pro (TI PCM5242 codec, balanced output), tested by Amir here. Both boards also have a headphone amp, which came in handy during testing.

The BeagleBone Black's (BBB's) audio peripheral is what TI calls a "DaVinci McASP", short for Multichannel Audio Serial Port. The SoC actually has two of them. Each supports four I2S audio streams, and each of those streams can also be time-division multiplexed (TDM) to carry up to 32 channels (on paper, at least).
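To put those on-paper numbers in perspective, here's a small Python calculation of the nominal channel counts, and of how many stereo I2S serializers the six-channel desktop DAC from the introduction would need. It only multiplies the figures quoted above; bit-clock limits and driver support are not considered, and the names are purely illustrative.

```python
# Nominal McASP figures as quoted above (on paper only; not measured).
MCASP_INSTANCES = 2        # the SoC has two McASP peripherals
SERIALIZERS_PER_MCASP = 4  # four I2S data pins (serializers) per McASP
I2S_SLOTS = 2              # plain I2S: left + right per serializer
TDM_MAX_SLOTS = 32         # nominal TDM upper limit per serializer


def total_channels(slots_per_serializer: int, instances: int = 1) -> int:
    """Channels available when every serializer carries the given number of slots."""
    return slots_per_serializer * SERIALIZERS_PER_MCASP * instances


def serializers_needed(n_channels: int, slots_per_serializer: int = I2S_SLOTS) -> int:
    """Serializers required for n_channels (ceiling division)."""
    return -(-n_channels // slots_per_serializer)


i2s_one_mcasp = total_channels(I2S_SLOTS)
i2s_both_mcasps = total_channels(I2S_SLOTS, instances=MCASP_INSTANCES)
tdm_one_mcasp = total_channels(TDM_MAX_SLOTS)

# Desktop DAC idea from the intro: 2 main + 2 sub + 2 headphone channels.
desktop_dac_serializers = serializers_needed(2 + 2 + 2)

print(f"I2S: {i2s_one_mcasp} channels per McASP, {i2s_both_mcasps} in total")
print(f"TDM (on paper): {tdm_one_mcasp} channels per McASP")
print(f"Desktop DAC: {desktop_dac_serializers} of {SERIALIZERS_PER_MCASP} serializers on one McASP")
```

In other words, if plain I2S works with several serializers active, the six-channel DAC would fit on a single McASP using three of its four serializers, without needing TDM at all.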

The BBB also has a dedicated 24.576 MHz audio clock oscillator connected to mcasp0. This frequency is an integer multiple of 48 kHz (512 × 48 kHz) but not of 44.1 kHz, so the 44.1 kHz family of sample rates is out and only the 48 kHz family is available (and, I think, related rates such as 16 and 32 kHz, which also divide 24.576 MHz evenly). On the other hand, it should give a very nice and stable clock at the supported rates.
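The rate arithmetic can be checked with a few lines of Python. This sketch assumes a 64 × fs bit clock (two 32-bit slots per frame) that must be derived from the 24.576 MHz oscillator by integer division. The McASP really has its own divider chain whose settings the kernel driver chooses, so this only checks the overall ratio.

```python
from fractions import Fraction

OSC_HZ = 24_576_000  # BBB audio oscillator feeding mcasp0

# Common rates from both the 48 kHz and the 44.1 kHz families.
RATES_HZ = [8_000, 11_025, 16_000, 22_050, 32_000, 44_100,
            48_000, 88_200, 96_000, 176_400, 192_000]


def divider(rate_hz: int, bits_per_frame: int = 64) -> Fraction:
    """Exact ratio between the oscillator and the bit clock (rate * bits_per_frame)."""
    return Fraction(OSC_HZ, rate_hz * bits_per_frame)


def reachable(rate_hz: int, bits_per_frame: int = 64) -> bool:
    """True if an integer divider turns the oscillator into the needed bit clock."""
    return divider(rate_hz, bits_per_frame).denominator == 1


for rate in RATES_HZ:
    ratio = divider(rate)
    verdict = "ok" if reachable(rate) else "not reachable"
    print(f"{rate:>7} Hz: oscillator / bit clock = {float(ratio):7.3f} ({verdict})")
```

Under this assumption 8, 16, 32, 48, 96 and 192 kHz give integer ratios, while none of the 44.1 kHz family does. Note that 80 kHz, a multiple of 16 kHz, gives 4.8, so the actual condition is that 24.576 MHz divided by the bit clock is an integer, rather than "multiples of 16 kHz".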

In my experiments I used mcasp0 and connected the codecs to separate serializers (I2S data pins): the DAC+ to axr2 and the DAC Pro to axr0. They share the bit clock and frame clock, which the McASP supplies, derived from the 24.576 MHz oscillator. Both codecs generate their own master clock internally from the bit clock via a PLL, which makes things easier.

Besides the I2S data streams, the codecs also need an I2C connection for configuring the audio data format and for controlling things like digital volume and analog gain. Among other settings, the codecs have what TI calls a "basic digital volume control" (+24 dB to -103 dB in 0.5 dB steps) and a selectable -6 dB analog gain control (i.e. one can select 2 Vrms or 1 Vrms full scale). By default both boards use I2C address 0x4c, but the Pro board lets you change the codec address to 0x4e with a small solder bridge, which I did so that both codecs could share the same I2C bus. (Alternatively, the BBB has two I2C buses available on its pin headers.)
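As a concrete illustration of that volume range, the snippet below converts a gain in dB to an 8-bit register code. The encoding is an assumption derived from the range quoted above (code 0 = +24 dB, each step = -0.5 dB, so code 254 = -103 dB), and treating the one leftover code, 255, as mute is likewise assumed; `volume_code` is a hypothetical helper, not part of any driver.

```python
from typing import Optional

MAX_GAIN_DB = 24.0    # assumed code 0
MIN_GAIN_DB = -103.0  # assumed code 254
STEP_DB = 0.5
MUTE_CODE = 0xFF      # assumption: the leftover code means mute


def volume_code(gain_db: Optional[float]) -> int:
    """Map a digital volume in dB to an assumed 8-bit register code (None = mute)."""
    if gain_db is None:
        return MUTE_CODE
    if not MIN_GAIN_DB <= gain_db <= MAX_GAIN_DB:
        raise ValueError(f"gain must be within {MIN_GAIN_DB} .. {MAX_GAIN_DB} dB")
    steps = (MAX_GAIN_DB - gain_db) / STEP_DB
    if not steps.is_integer():
        raise ValueError("gain must be a multiple of 0.5 dB")
    return int(steps)


for gain in (24.0, 0.0, -6.0, -103.0):
    print(f"{gain:+7.1f} dB -> 0x{volume_code(gain):02x}")
```

On Linux one would normally not poke these values by hand; the pcm512x codec driver exposes the digital volume as an ALSA mixer control.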

Both the McASP peripheral and the PCM5xxx codecs are pretty complicated beasts. Luckily, both already have drivers in the Linux kernel, so "all" that remained was to make them talk to each other! This is generally done with a device tree, which describes to the Linux kernel what hardware is connected and how.

Getting either codec on its own to talk to ALSA and output sound was actually not too difficult. My device tree source for using either codec can be found here.

However, try as I might, I can't get both codecs working at the same time. Modifying the device tree to use both codecs, by enabling both McASP serializers and "commenting in" the pcm5142 port, just gives:

```
kernel: asoc-audio-graph-card sound: parse error -22
kernel: asoc-audio-graph-card: probe of sound failed with error -22
```

...as do all the other things I've tried (well, some have failed more spectacularly). I've really tried to make this work, but without a solid understanding of ALSA drivers and device trees I think I've reached the end of the road, at least for now. I have asked for help on LKML, but that's not really the right forum for "please tell me how to do this" questions; it's more of an "I found a kernel bug and here's a patch to fix it" kind of place. (Obviously, if there's a Linux kernel/ALSA developer here who wants to chime in, I'm all ears!)
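Kernel functions report failures as negative errno values, so the `-22` in the log is `-EINVAL` ("Invalid argument"): the audio graph card's device tree parser found something it considered invalid, and the probe then failed with the same code. The message doesn't say which node or property was the culprit. Such numbers can be decoded on any Linux machine with Python's standard `errno` module; `describe_kernel_error` is just an illustrative helper name.

```python
import errno
import os


def describe_kernel_error(code: int) -> str:
    """Turn a negative kernel return code such as -22 into 'NAME: description'."""
    num = abs(code)
    name = errno.errorcode.get(num, "unknown errno")
    return f"{name}: {os.strerror(num)}"


print(describe_kernel_error(-22))
```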

Well, I guess a negative result is also a result, so there you have it! Perhaps it can be of use to someone.