absolutely agree. I was a little unclear, the bit quoted was referring to linearising phase independently of magnitude (because a driver is minimum phase then linearising its magnitude response is all you need)

Thanks, and yes, totally agree. I think it's a mistake to linearize phase independently of mag, and that's a big part of my reservations about FIR based global room corrections.

Keith_W DSP system

- Thread starter Keith_W

- Start date

That is what I have been doing, I flatten the mag and the phase with a reverse pass IIR filter, and then apply the XO. The results were posted above. About the only thing I am doing differently is that the initial measurement is not quasi-anechoic, it is an extreme near field (mic almost touching the drivers) with tight windowing.

Yes, I followed your impressions with interest. My experience is the distance we use for making those flattening corrections is critical, to say the least.

I'm told phase does not settle down until we are far enough away from a driver for inverse square law fall off of SPL to occur. Supposedly, 2-3x longest speaker dimension is a good ROT for the onset of the acoustic far field.

My indoor 1m raw measurements can vary substantially from outdoor 3m quasi-anechoic measurements (and not just due to room reflections).

Even the relative SPL between drivers varies, not just mag and phase.

I guess it's because the synergy/unity horns place drivers at different distances from the mic, and relative distance vs. SPL becomes very acute at close range.

If you have z-axis offsets with your speakers, might be something to look at when doing near field meas.
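To put rough numbers on the z-axis point, here is a minimal sketch. It assumes each driver behaves like a point source that follows the inverse square law, which real horns only approximate, and the 0.3 m offset and 1.5 m speaker size are made-up values for illustration. The function names are hypothetical too.

```python
import math


def level_difference_db(mic_distance_m: float, z_offset_m: float) -> float:
    """Level of the recessed driver relative to the front driver, in dB.

    Assumes both drivers are point sources obeying the inverse square law,
    with the recessed driver z_offset_m further from the mic.
    """
    near = mic_distance_m
    far = mic_distance_m + z_offset_m
    return 20.0 * math.log10(near / far)


def far_field_onset_m(longest_dimension_m: float, factor: float = 3.0) -> float:
    """Rule-of-thumb distance for the onset of the acoustic far field."""
    return factor * longest_dimension_m


if __name__ == "__main__":
    offset = 0.3  # hypothetical z-axis offset between two drivers, in metres
    for distance in (0.05, 1.0, 3.0):
        diff = level_difference_db(distance, offset)
        print(f"mic at {distance:4.2f} m: recessed driver at {diff:6.2f} dB")
    print(f"far field starts near {far_field_onset_m(1.5):.1f} m for a 1.5 m speaker")
```

Under these assumptions the recessed driver reads more than 2 dB low at 1 m but under 1 dB low at 3 m, and the gap grows quickly as the mic gets closer. That is the kind of shift in relative driver level described above.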

3ll3d00d

Senior Member

- Joined

- Aug 31, 2019

- Messages

- 308

- Likes

- 245

I have to take a quick aside to confess my ignorance here. I understand what the words mean, but I don't understand it on any deeper level. In particular, there is an audible difference between (what I am assuming) is the driver's natural min phase response and the corrected response which makes it lin phase. Is this a known phenomenon? Or is phase really inaudible?

in audible terms, I think we're basically talking about pre echo

How do you suggest I take a quasi-anechoic measurement? You mean take the drivers outside and run sweeps?

outside or in the middle of the room should do

Interesting journey. If you compare your results between hardware upgrades and a DSP upgrade like Acourate, what is the difference in percentage gain? As for you, my upgrade to DSP was also a life-changing experience, using Mathaudio Room EQ. I got more or less the same results with Lyngdorf and Dirac. For me, DSP is close to a 70% improvement, compared to at most a 10 to 15% improvement from hardware upgrades alone.

I have resisted making a system thread on ASR for quite some time for many reasons. (1) ASR members seem to be anti-high end audio. (2) I do some things in my system that many in ASR might deem controversial.

I will start off the thread with a few posts describing the architecture and hardware of the system, then I will post measurements. I have considered in detail every aspect of this system.

The system is a mish-mash of different philosophies for a simple reason - I changed my mind. I am open-minded about learning, and I can and do abandon beliefs if I am persuaded. I might seem stubborn to some of you, but that is because my brain is a little bit slow, but eventually I do come around. The evidence that I can and do change beliefs can be seen in the hardware you see before you.

When I first bought these speakers 15 years ago, I was a subjectivist. I loved the way the top end sounded, but I immediately noticed a bass problem with the speaker. I went through the usual recommendations which left me unsatisfied - high end speaker cable (I still have them), then more powerful valve amps. Then I moved on to some real solutions - first, I bi-amp'ed the speaker (SS amp for the woofers, valve amp for the top). This brought improvement, but not enough. So in came a pair of subwoofers. It was better, but not good enough. Then I bought a DEQX and started my DSP journey. I never obtained satisfactory results - in hindsight this was because I could not operate the thing properly, and did not know how to interpret the measurements. I got rid of it and bought a Marchand passive crossover. That worked better than the DEQX, but I was still not satisfied.

Around 2015, I heard about DSP with Acourate. I saw a post on WhatsBestForum here by "Blizzard" (aka @dallasjustice on ASR). To me it sounded incredibly advanced, and the more I read about it, the more excited I became at the possibilities. @dallasjustice and I exchanged a few PM's, and he rang me from the USA to sell me on the idea of using Acourate. This was truly a life-changing moment for me in this hobby. Gradually I started to shed subjectivist beliefs as I learnt more, could hear the improvements being made to my system, and marvelled how they were REAL improvements with measurable results instead of imagined ones where I wasn't sure of what I was hearing.

What you see in these pictures are remnants of a long abandoned approach to audio, a time when I believed in high end DAC's, amplifiers, cables, and the like. Mixed in with this older equipment is more modern stuff. For example, this fully active system requires 8 interconnects. Two pairs of these interconnects are high end audio cables, which I have owned for years. The rest of the cabling is ultra cheap stuff from pro audio shops.

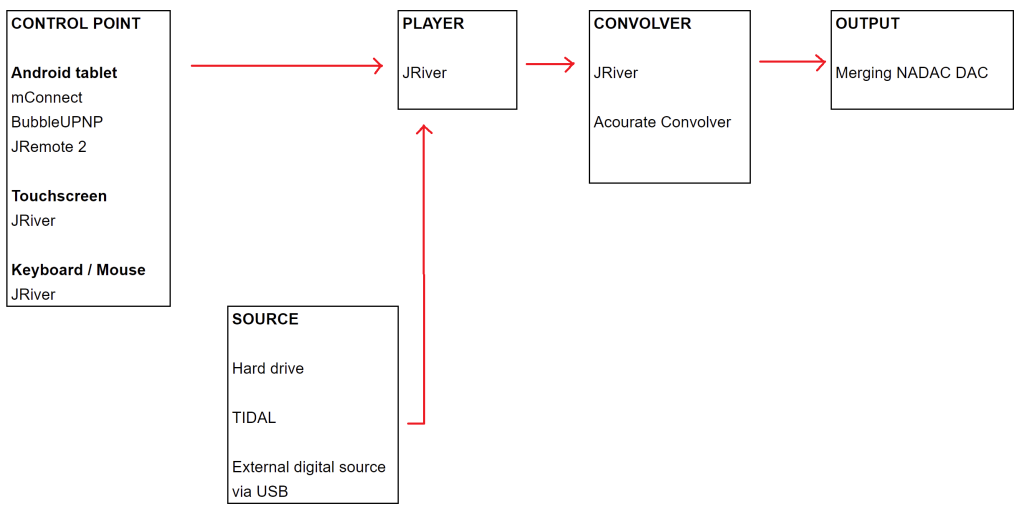

Hardware Architecture

The speakers are Acapella High Violons. These are delivered as a 3-way speaker with a horn loaded plasma tweeter, a midrange horn, and a conventional woofer. The speaker has been dissected and modified. The woofers that came with the speaker were replaced with a pair of prototypes from SGR Audio in Melbourne, manufactured by Lorantz (also in Melbourne). The passive crossovers have been bypassed. Each driver has its own amplifier channel and DAC channel and individual filter made in Acourate.

Not shown in the diagram is some room treatment. Normally they are stacked behind the TV, but I can deploy them around the room if needed.

Also not shown is the measurement setup - I own two Behringer ECM8000's, an Earthworks M30 mic, and a RME Fireface UC.

Software Architecture

The software setup is pretty simple, the above diagram explains it all.

Although the diagram shows two convolvers, I actually have licenses for 3 - JRiver, Acourate Convolver, and HQPlayer. I have uninstalled HQP from the system because it is CPU intensive and inconvenient to use. I use Acourate Convolver daily because I like the filter bank feature, I can switch between different filters and do A-B comparisons. I can also control it from my tablet, so no need to get off the sofa to change filters! Not shown in the diagram is the VST pipeline. I use a Pultec VST, pKane's PKHarmonic, and uBACCH.


Keith_W

Major Contributor

- Thread Starter

- #285

in audible terms, I think we're basically talking about pre echo

Yeah, I don't have any pre-echo. I can get rid of it with careful adjustment of the excess phase window (Acourate Macro 4). If that doesn't get rid of it, there is also pre-echo compensation.

outside or in the middle of the room should do

It's not as if I have only measured my tweeters once

About the only thing I haven't done is take them outside.

Keith_W

Major Contributor

- Thread Starter

- #286

Interesting journey. If you compare your results between hardware upgrades and a DSP upgrade like Acourate, what is the difference in percentage gain? As for you, my upgrade to DSP was also a life-changing experience, using Mathaudio Room EQ. I got more or less the same results with Lyngdorf and Dirac. For me, DSP is close to a 70% improvement, compared to at most a 10 to 15% improvement from hardware upgrades alone.

IN MY OPINION, there are 3 factors when it comes to what gains you get from DSP. In descending order of importance, these are:

1. The user

2. Software

3. Hardware

The user makes the biggest difference. You are the one taking the measurements, interpreting the results, making decisions, and doing the corrections. If you start doing stupid things (as I have done on multiple occasions in my journey), you will get bad results. I am sure that everyone has done stupid things including some of the other guys in this thread. Or maybe even especially them because I can't see how you can accumulate so much knowledge without making mistakes along the way. ASR can help you somewhat, but these guys can only see my measurements and can't hear what I hear, so in that sense I am alone. You have to learn a bit of everything ... speaker engineering, room acoustics, psychoacoustics, DSP, not to mention get to know your software. This is what makes the journey so fascinating for me.

Software is next most important, and the most important features of software are ease of use with automation (for beginners) and manual control and flexibility (for advanced users). Some DSP software are like "DSP black boxes" where measurement goes in, and result comes out. What manipulation the software has done to the signal is a mystery. Some of these software packages give you some control over the corrections, but ultimately software like Dirac removes control from you in exchange for ease of use. This type of software is more likely to give you good results if you do not know what you are doing, but ultimately you are limited by decisions made by software. If you want to make your own decisions, then it's Acourate or REW/RePhase. I think also FIR Designer and MATLAB (with audio tools) but really MATLAB is a tool for extreme nerds and masochists.

Hardware only unlocks the potential for better sound, by itself it won't make a difference. Trinnov, MiniDSP, and any AVR with Dirac in it are severely limited in processing power. They typically have 1024 taps which will limit how much correction you can do. In contrast, PC based convolution is not limited by processing power. I have a friend who is convolving 30 channels of audio at 65536 taps each on his Mac Mini. But I am of the opinion that it's not the number of taps that makes the difference, it's what you do with them. Doing 1024 clever things is better than 65536 stupid things.
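As a rough illustration of why tap count matters: a common rule of thumb is that an FIR filter of N taps can resolve frequency detail down to about the sample rate divided by N. The sketch below assumes a 48 kHz sample rate, which is not stated in the post, and the function names are just for illustration.

```python
def fir_resolution_hz(taps: int, sample_rate_hz: float) -> float:
    """Approximate frequency spacing an FIR of this length can resolve."""
    return sample_rate_hz / taps


def fir_length_ms(taps: int, sample_rate_hz: float) -> float:
    """Time span covered by the filter, in milliseconds."""
    return 1000.0 * taps / sample_rate_hz


if __name__ == "__main__":
    sample_rate = 48_000.0  # assumed sample rate, not stated in the post
    for taps in (1024, 65536):
        res = fir_resolution_hz(taps, sample_rate)
        length = fir_length_ms(taps, sample_rate)
        print(f"{taps:6d} taps: ~{res:6.2f} Hz resolution, {length:8.2f} ms long")
```

At 48 kHz, 1024 taps works out to roughly 47 Hz of resolution, which is coarse for anything happening in the bass, while 65536 taps gets below 1 Hz. The longer filter only gives you the option of finer correction, it does not make the correction a good one.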

I should point out that there are other considerations as well, for example if you want to perform DSP on an external audio source (say, a HDMI feed) or you own a turntable. To obtain this functionality with PC based convolution is a massive pain. It theoretically can be done, but it involves audio routing and maybe purchase of extra hardware. In contrast, a MiniDSP has built-in analog inputs and multiple digital inputs, and it is as simple as plugging it in.

Keith_W

Major Contributor

- Thread Starter

- #287

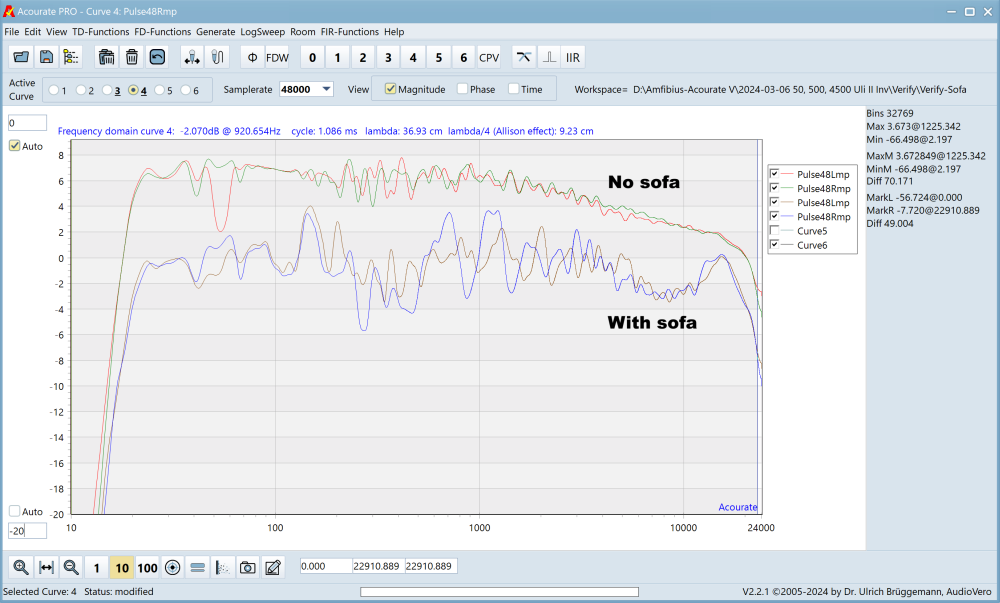

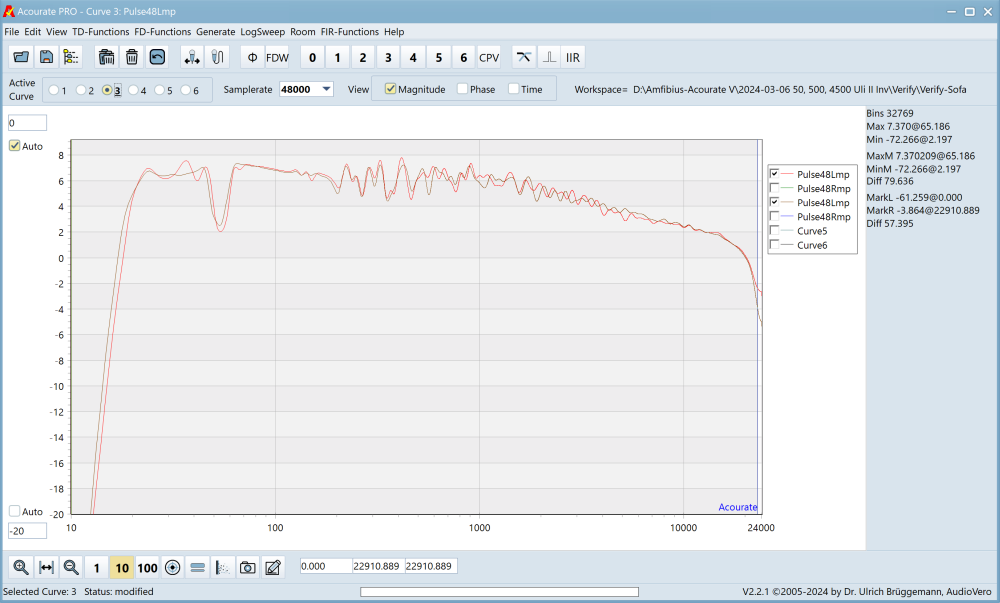

I continue to be perplexed by why the filters with the linearized phase sound so different. It occurred to me that I have been measuring the speakers independently (left vs. right). But if the phase of the left and right speaker were out of whack, then maybe a mono recording (i.e. both speakers playing together) might show something? TLDR: now there is even more mystery.

It is a stinking hot day here in Australia so I was too lazy to move the sofa out of the way for measurements. Take a look at the difference the sofa makes to the measurement, the nicer looking pair is the verification measurement made a few days ago with the sofa pushed out of the way. The other measurement is with the sofa in place. I have posted similar curves to this on ASR before, and I started a thread on it, but this time the effect of the sofa seems MUCH more dramatic.

It's not as if I am not aware of the differences in outcomes if correction is done with vs. without sofa, but previous experiments producing corrections with initial measurements taken with vs. without sofa have led to nicer looking measurements if the initial measurement was taken with the sofa in place, but subjectively nicer sound if the sofa is removed. Please don't ask me why, all I can tell you is that when the filters are compared back to back there is no contest. One sounds flat and lifeless, the other sounds "alive". If you look at the other thread, you will see that some people go as far as constructing dummies by stuffing pillows and cushions into clothes and taking measurements with those dummies in place.

Interestingly, that 50Hz suckout in the left channel (red curve) that we discussed before, which I tried to ameliorate via phase alignment of the subwoofer vs. woofer, is now gone with the sofa in situ. This is obviously due to reflections from the sofa fortuitously getting rid of it. Of course there are now a lot more horrible looking anomalies, particularly that treble lift which is probably responsible for making this filter sound "alive".

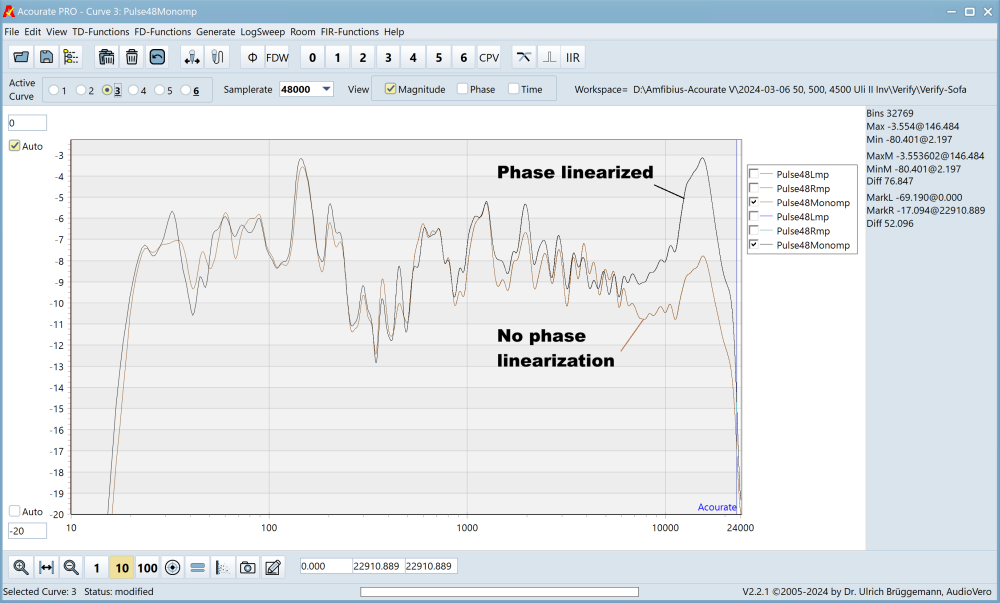

ANYWAY, that aside, my intention was to compare mono recordings of filters with individual driver phase linearization, vs. without. So here we go:

Mono recording of two different sets of filters. I have matched the gain so the measurements overlap. Look at how hot the treble is in the phase linearized version. OK, so now we are on to something. Is this an artefact of the phase corrections from each filter causing a boosted treble? For clarity, I am only showing the left channel (the right channel is the same):

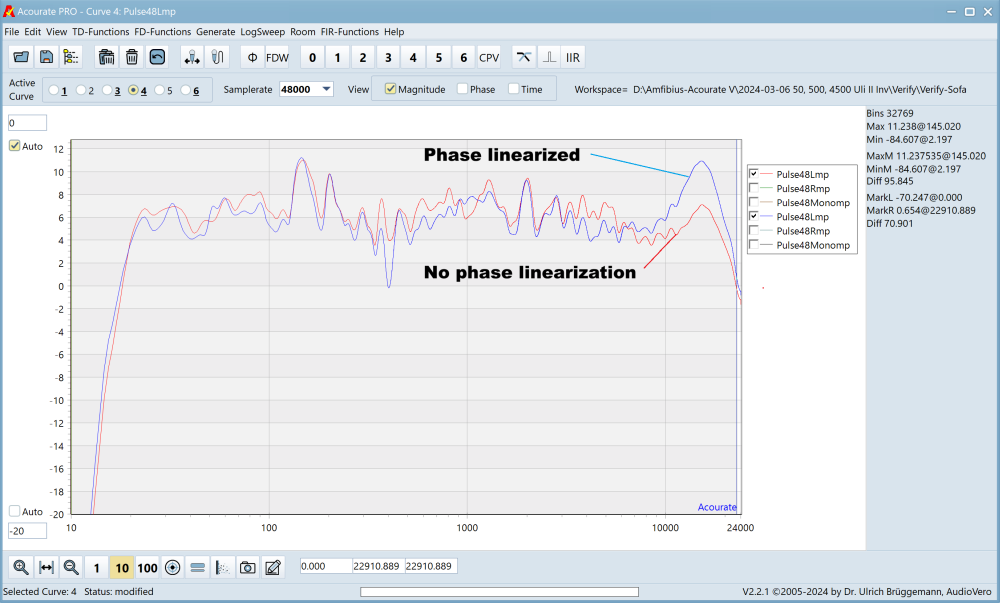

I mean, WTF. Why is the tweeter so hot in the phase linearized version!!! Bear in mind I am showing you a vertically stretched out scale, but we can see that it is about 4dB hotter. So, the hotter treble is coming from the individual tweeters themselves, and not from interaction between tweeters. And before you ask, these measurements were made back to back with the mic in the same position and the volume settings untouched.

How can this be, when the verification measurements taken a few days ago showed them looking exactly the same? Here is the left channel only with the curves overlaid for clarity:

I won't bother labelling which is which because the two filters measure the same.

More head scratching. This one I really can't explain.

Hardware only unlocks the potential for better sound, by itself it won't make a difference. Trinnov, MiniDSP, and any AVR with Dirac in it are severely limited in processing power. They typically have 1024 taps which will limit how much correction you can do. In contrast, PC based convolution is not limited by processing power. I have a friend who is convolving 30 channels of audio at 65536 taps each on his Mac Mini. But I am of the opinion that it's not the number of taps that makes the difference, it's what you do with them. Doing 1024 clever things is better than 65536 stupid things.

Got your point about hardware. By hardware I meant things like buying a new amp, DAC, cables, power conditioners, etc. In my opinion none of that comes close to using DSP. It strikes me that people are still considering it. If you know that the investment ratio when building a pro control room is roughly 70/30 between room treatment and gear (in that order), to get reverb time under control and a linear frequency response, it's obvious that the average customer's audio room is, to say the least, far from optimal. DSP is not perfect, but it is an obvious solution that avoids breaking the bank rebuilding your room.

Keith_W

Major Contributor

- Thread Starter

- #289

Got your point about hardware. By hardware I meant things like buying a new amp, DAC, cables, power conditioners, etc. In my opinion none of that comes close to using DSP. It strikes me that people are still considering it. If you know that the investment ratio when building a pro control room is roughly 70/30 between room treatment and gear (in that order), to get reverb time under control and a linear frequency response, it's obvious that the average customer's audio room is, to say the least, far from optimal. DSP is not perfect, but it is an obvious solution that avoids breaking the bank rebuilding your room.

Ah, I may have misunderstood your question. Well obviously, even before you get started you need amps, DAC's, etc that behave properly and don't clip, don't inject noise, etc. and that your speakers are adequately amplified. All DSP products sacrifice volume for linearity, and it is your choice how much volume you wish to sacrifice. So even before you start, make sure that you have enough volume, because you will be losing some of it.
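One way to see how much volume a filter costs: for any FIR filter, the sum of the absolute tap values is a guaranteed upper bound on how much it can amplify a full-scale signal, so attenuating by that amount rules out digital clipping. This is a conservative sketch with made-up taps, not how Acourate or any other package necessarily sets its gain.

```python
import math


def worst_case_gain_db(taps):
    """Upper bound on peak gain: 20*log10 of the sum of absolute tap values."""
    return 20.0 * math.log10(sum(abs(t) for t in taps))


def required_attenuation_db(taps):
    """Attenuation that guarantees a full-scale input cannot clip."""
    return max(0.0, worst_case_gain_db(taps))


if __name__ == "__main__":
    boosting_filter = [0.5, 1.0, 0.5]  # hypothetical taps, for illustration only
    print(f"attenuate by {required_attenuation_db(boosting_filter):.2f} dB")
```

In practice people often accept less attenuation than this worst case, because real music rarely hits the exact signal that triggers it. How much to give up is the choice described above.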

Assuming that your hardware choices are adequate, and you are asking what % gain you get from using DSP vs. going from a DAC with 100 dB SINAD to one with 120 dB SINAD, the answer is: orders of magnitude difference in either direction (it can be good or bad). You will struggle to hear differences in DAC's. Whereas a good DSP filter can transform your system. For me, DSP is the third most important component in my system - behind only the room and the speakers. It will cost me a few hundred thousand $$$ to change the room, if not a few million given stupid Australian property prices. It will cost a few thousand for new speakers. But DSP? A few hundred bucks + many nights reading books. It really is the most cost effective upgrade.

ernestcarl

Major Contributor

I would import into REW just the filters created by Acourate (e.g. HF horn section) for one driver (linear phase vs. min phase vs. mixed phase versions) and compare the differences side-by-side: magnitude and phase, step, envelope etc.

As well as convolve (A x B trace arithmetic) each filter set with the raw unequalized response itself measured at the MLP and observe the differences.
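For anyone wondering what the A x B step amounts to: convolving a filter with a measured impulse response is the same as multiplying their frequency responses, so the magnitudes in dB simply add. The sketch below shows that with two tiny made-up impulse responses in plain Python. It is not REW's implementation, and the names and values are purely illustrative.

```python
import cmath
import math


def convolve(a, b):
    """Direct (time domain) convolution of two impulse responses."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def response_at(h, freq_hz, sample_rate_hz):
    """Complex frequency response of impulse response h at one frequency."""
    w = 2.0 * math.pi * freq_hz / sample_rate_hz
    return sum(c * cmath.exp(-1j * w * n) for n, c in enumerate(h))


def magnitude_db(h, freq_hz, sample_rate_hz):
    """Magnitude of the response at one frequency, in dB."""
    return 20.0 * math.log10(abs(response_at(h, freq_hz, sample_rate_hz)))


if __name__ == "__main__":
    fs = 48_000.0             # assumed sample rate
    raw = [1.0, 0.5]          # hypothetical raw driver response
    correction = [1.0, -0.4]  # hypothetical correction filter
    combined = convolve(raw, correction)
    for f in (100.0, 1_000.0, 10_000.0):
        summed = magnitude_db(raw, f, fs) + magnitude_db(correction, f, fs)
        print(f"{f:7.0f} Hz: combined {magnitude_db(combined, f, fs):6.2f} dB, "
              f"sum of parts {summed:6.2f} dB")
```

Doing this for each filter version against the same raw measurement should show whether a treble difference comes from the filters themselves or from something else in the chain.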

I should point out that there are other considerations as well, for example if you want to perform DSP on an external audio source (say, a HDMI feed) or you own a turntable. To obtain this functionality with PC based convolution is a massive pain. It theoretically can be done, but it involves audio routing and maybe purchase of extra hardware.

Just let me briefly touch on this point for your possible reference and interest.

We can easily use VB-Audio Matrix (donationware) as a stable, robust, system-wide one-stop ASIO/VASIO/VAIO routing center. It can receive audio from any software audio(-visual) player, from web browsers (set VB-Audio Matrix VAIO as the system's default audio device), from PC TV-tuner software (while feeding only the video signal over HDMI to a large OLED TV used as a second PC monitor), and from turntables (via a phono preamp + ADC unit). VB-Audio Matrix can then route all of these signals on the fly (with negligible lip-sync issues) into a system-wide one-stop DSP center (in my case "EKIO") for multichannel processing, feeding a multichannel DAC unit (in my case the 8-channel OKTO DAC8PRO).

You can find here my use-case of VB-Audio Matrix as system-wide onestop ASIO/VASIO/VAIO routing center.

In VB-AUDIO Matrix's excellent/beautiful Matrix Routing Grid representation, each of the "routing grid cells" can have flexible plus/minus gain adjustment in 1.0 dB granularity. You can easily level match between e.g. web browser's audio (into Windows default audio device = Matrix VAIO) and your other ASIO audio player like JRiver, Roon, etc.


GaryY

Senior Member

- Joined

- Nov 25, 2023

- Messages

- 380

- Likes

- 383

Thanks Keith, I need to read this carefully again. I have searched a lot, but there was no clear explanation of time alignment. I'm still confused about what to read, how to do the frequency sweep, and which plot in REW is important for alignment.

I have managed to time align the subwoofers now. I will say a few things about subwoofer time alignment which will hopefully help someone out there. I took a leaf out of Dr. Uli's book, and decided that I will use multiple methods to cross-check subwoofer time alignment. I have gone over the procedure so many times and spent so much time staring at the curves that I could write a treatise about it, but I suspect that even the eyes of DSP nerds on ASR will glaze over in utter boredom. So here is the abbreviated version.

Keith's rules of subwoofer time alignment:

1. You can align for the initial impulse OR the steady state, but not for both. You have to choose. This law is immutable, it is due to physical behaviour of your drivers AND the behaviour of bass in the room (it may appear to change phase due to reflections before it arrives at your mic). There is no way to DSP around it.

2. If you align for the steady state, only one frequency can ever be time aligned, and all other frequencies will diverge from perfect time alignment. You may need to employ additional strategies to achieve better time alignment.

3. Every time you do a sweep, the result will be different. I have measured variances between 0.02 - 0.1ms. This is normal, don't get too hung up about it. You are unlikely to hear a subwoofer misalignment of 0.1ms.

4. Different filters will produce different delays, so it is important to repeat the time alignment procedure every time you design a new filter for the sub, or for the woofer.

5. The importance of a good cup of coffee can not be overstated.

So why should you time align for the initial impulse? Answer: if you are high passing the main speakers. In this case, the alignment of lower frequencies of the main speaker does not matter because the sub will be handling those frequencies. Why should you time align for the steady state? Answer: if you are using both your mains and subs to produce bass.

As proof of my first statement, that it is impossible to achieve time alignment for both the initial impulse and the steady state, I offer this graph:

View attachment 354567

This is a sweep of the subwoofer and the woofer, convolved with a 50Hz sinewave to better show the time behaviour of the drivers. You can see that I have rotated the subwoofer to align with the woofer at the initial impulse, but doing this messes up the steady state. Almost as if the subwoofer has inverted polarity! But not so, look closely at the initial impulse and you will see the shape of the deflection of both drivers is the same.

If we align for the steady state at 50Hz, the initial impulse is no longer aligned, as we can see here:

View attachment 354568

However, there is not much good in doing this. This is because the phase rotates across the frequency range of both the subwoofer and the woofer. What this means is that only one frequency can ever be time aligned, and all other frequencies will diverge from perfect time alignment. I will show this graph as proof:

View attachment 354566

This is the unwrapped measured phase of both the sub and the woofer, zoomed in the frequency band of interest (20Hz - 100Hz) and the vertical scale adjusted. Using the above method (sinewave convolution), I rotated the sub and woofer by the values I derived. As you can see, perfect alignment at 50Hz. But nowhere else. Some of those delays are almost 10 radians apart. I posted the calculation to convert radians to time here - we are talking delays of up to 30ms! Now 30ms may not matter if it was in isolation, but if you have two drivers playing the same frequency 30ms apart it is bound to cause problems.
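The linked calculation is not reproduced here, but the standard relation between a phase difference and a time delay at a given frequency is t = phase / (2 * pi * f). A minimal sketch, with a made-up 5 ms delay to show why a single fixed delay can only line up one frequency:

```python
import math


def phase_to_delay_ms(phase_rad: float, freq_hz: float) -> float:
    """Time delay equivalent to a phase difference at one frequency."""
    return 1000.0 * phase_rad / (2.0 * math.pi * freq_hz)


def delay_to_phase_rad(delay_ms: float, freq_hz: float) -> float:
    """Phase shift that a fixed time delay produces at one frequency."""
    return 2.0 * math.pi * freq_hz * delay_ms / 1000.0


if __name__ == "__main__":
    print(f"10 rad at 50 Hz = {phase_to_delay_ms(10.0, 50.0):.1f} ms")
    delay_ms = 5.0  # hypothetical fixed delay applied to the sub
    for freq in (20.0, 50.0, 100.0):
        phase = delay_to_phase_rad(delay_ms, freq)
        print(f"{delay_ms} ms at {freq:5.1f} Hz = {phase:.2f} rad")
```

Ten radians at 50 Hz comes out at just under 32 ms, in line with the "up to 30ms" figure. The same fixed delay gives a different phase shift at every frequency, which is why a delay chosen to line things up at 50 Hz cannot also line them up at 20 Hz or 100 Hz.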

It MAY be possible to extract excess phase and use the min phase version to design some kind of all pass filter that will straighten the phase of both the sub and the woofer to get them to align a bit better. But since I don't need to do it because I am high passing the main speakers, I did not try this experiment.

This is why I like Acourate - it is not a "DSP black box" where measurement goes in, and result comes out. You have no idea what the "black box" did and whether the result is correct. I know that some software packages don't even let you change what the software has decided, nor do you even get the tools to check yourself. Acourate forces you to think and make decisions, and you learn so much in the process.

(EDIT) after all that I forgot to post a step response to show proper driver time alignment. So here it is:

View attachment 354570

What does a time aligned system sound like? Well, it is easier to describe what a non-time aligned system sounds like. If you have the ability, you should deliberately mess up the time alignment of your system. You will hear smearing and a loss of clarity. The image is unstable and seems to shift depending on pitch (the worst offenders are instruments with a wide frequency range like a grand piano). Bass sounds "slow" and laggy. Dynamics are affected, and the system sounds lazy and "constricted". With time alignment, there is incredible transparency, slam, and punch.

Sorry again to be a little bit out of the scope of this thread, but...

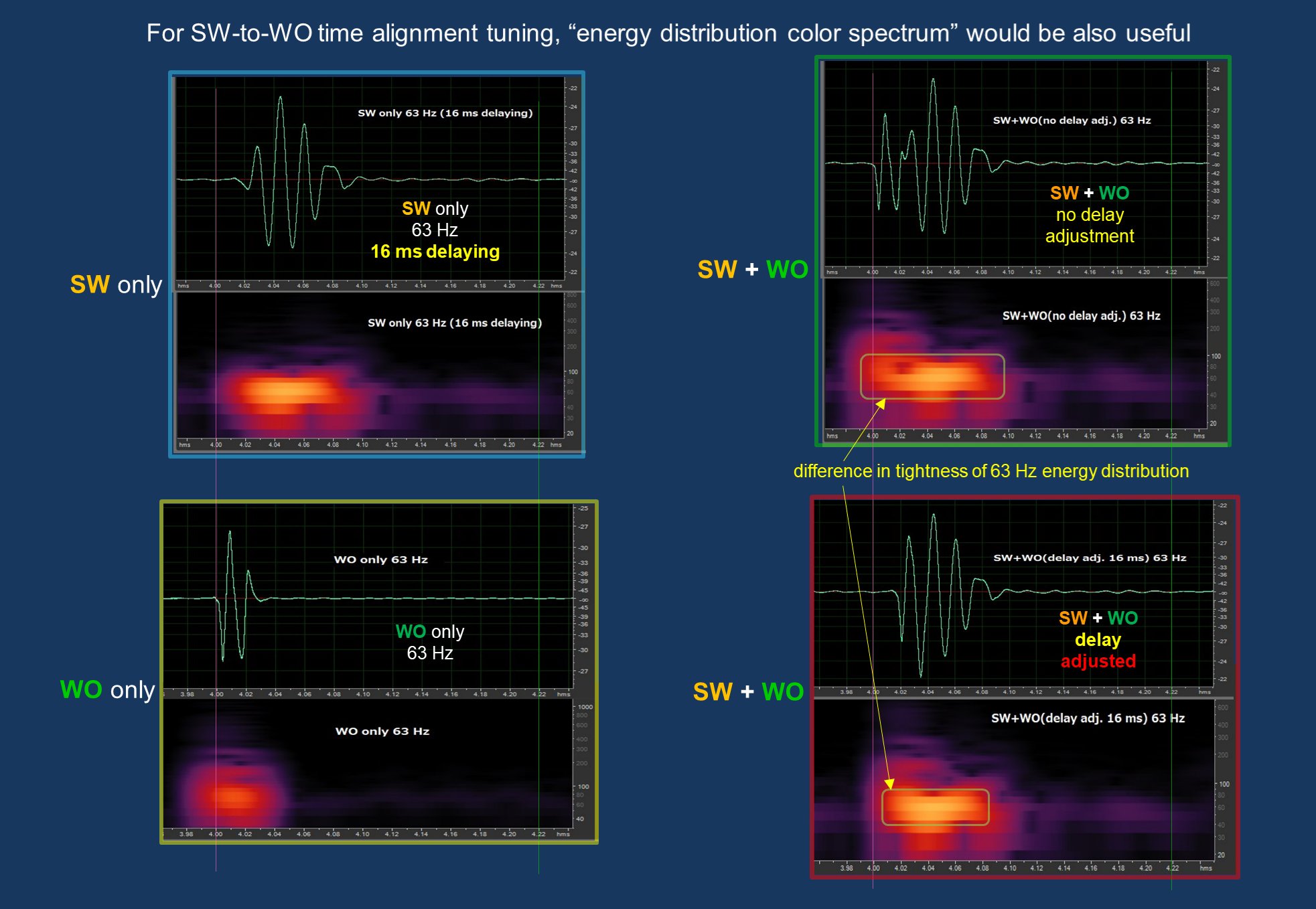

Just for possible interest and reference, let me suggest one more tip (a different perspective) for semi-quantitative "observation" of the transient behavior of bass reproduced by subwoofer only, woofer only, and subwoofer plus woofer around the XO Fq, with or without time-alignment tuning.

The 3D sound color spectrum (gain-Fq-time) of Adobe Audition (I use ver.3.0.1) would represent a kind of sound energy distribution (or a kind of compactness/tightness of the sound) which I found useful and worthwhile for semi-quantitative observation of transient sound reproduction around the XO Fq measured by sine tone burst stimulation given to the drivers; please refer to my post #507 on my project thread.

Keith_W

Major Contributor

- Thread Starter

- #295

Thanks Keith, I need to read this carefully again. I have searched a lot, but found no clear explanation of time alignment. I'm still confused about what to read, how I should do the frequency sweep, and which plot in REW is important for alignment.

There are a number of "time" related plots in all measurements - phase, impulse, step, group delay, etc. The first step is to understand what all the measurements mean - read the REW Help Guide (if you are like me, you have to read it several times). Then watch this @OCA video (if you are like me, you have to watch it several times as well):

IMO the hardest part about any DSP is subwoofer time alignment. As my above posts have shown, the initial subwoofer impulse and the phase of the sub in relation to the woofer both need to be aligned if possible. And - even if the phase is aligned at one frequency, it may not be aligned at another frequency. You will be staring at graphs a lot in utter confusion. Don't be discouraged, it is all part of the learning process.
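To illustrate why phase that is aligned at one frequency may not be aligned at another, here is a minimal sketch of the phase lag that a pure time delay produces at different frequencies. The 5 ms residual delay and the list of frequencies are hypothetical values chosen for illustration, not measurements from this system.

```python
def phase_shift_deg(delay_ms: float, freq_hz: float) -> float:
    """Phase lag in degrees that a pure time delay produces at one frequency."""
    return 360.0 * freq_hz * delay_ms / 1000.0


def wrapped(deg: float) -> float:
    """Wrap an angle in degrees to the range (-180, 180]."""
    w = deg % 360.0
    return w - 360.0 if w > 180.0 else w


# Hypothetical residual misalignment between sub and woofer (assumption).
delay_ms = 5.0

for f in (40, 50, 60, 80, 100):
    lag = phase_shift_deg(delay_ms, f)
    print(f"{f:>4} Hz: {lag:6.1f} deg lag, wrapped {wrapped(lag):7.1f} deg")
```

The same delay is a quarter cycle at 50 Hz but a half cycle at 100 Hz, so a fixed delay error cannot be hidden by a single polarity or phase tweak across the whole crossover region.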

GaryY

Senior Member

- Joined: Nov 25, 2023

- Messages: 380

- Likes: 383

Thank you Keith, I will try more. My first question was whether time alignment is a matter of physical distance plus the reaction of the driver, while phase control is frequency dependent (I still may be wrong). Let me look at the curves again.

P.S. I also thought my ears would hear only magnitude, not phase... so maybe phase control is important only around the crossover, at the listening point?

Keith_W

Major Contributor

- Thread Starter

- #297

Thank you Keith, I will try more. My first question was whether time alignment is a matter of physical distance plus the reaction of the driver, while phase control is frequency dependent (I still may be wrong). Let me look at the curves again.

There are 3 types of delays introduced by subwoofers: (1) time of flight delay, which is the delay caused by placing your sub at a different distance to your speaker. Calculate this with t = d/c (d = distance in m, c = speed of sound, 343 m/s); (2) propagation delay, which is the delay introduced by the sub plate amp and potentially the inertia of the drivers; (3) DSP delay, which is the delay caused by the FIR filter and any additional delay you introduce. The last 2 can only be measured using an acoustic pulse; the first can be approximated with a tape measure. In fact, the MAJORITY of the delay in my system is not time of flight, it is one of the other two. I calculate my time of flight delay (distance between sub and speaker, take a look at the first picture in post #187) to be 3.8 ms. The actual measured delay is 3 times that.
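As a rough worked example of the t = d/c calculation above, the sketch below converts between distance and time of flight and splits the measured delay into the part explained by distance and the remainder. The function names are made up for illustration, and the path difference is only what the quoted 3.8 ms implies at 343 m/s, not a measured value.

```python
SPEED_OF_SOUND_M_S = 343.0  # value used in the post


def time_of_flight_ms(distance_m: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Time of flight in milliseconds for a given path difference (t = d / c)."""
    return distance_m / c * 1000.0


def distance_for_delay_m(delay_ms: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Path difference in metres that corresponds to a given delay (d = t * c)."""
    return delay_ms / 1000.0 * c


tof_ms = 3.8               # time of flight delay quoted in the post
measured_ms = 3 * tof_ms   # "the actual measured delay is 3 times that"

# Path difference implied by 3.8 ms (derived here, not stated in the post).
print(f"implied path difference: {distance_for_delay_m(tof_ms):.2f} m")
print(f"measured delay:          {measured_ms:.1f} ms")
print(f"not explained by distance (amp, driver, DSP): {measured_ms - tof_ms:.1f} ms")
```

With these numbers, roughly two thirds of the measured delay comes from sources that a tape measure cannot see.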

As an experiment, you should try using a time delay for your subs which is way out of whack. Try 30ms. That will quickly tell you what poor time alignment does. It no longer sounds cohesive, and bass has a woolly, slow, and flabby quality. I was surprised that it subjectively seemed to make the bass linger in the room, as if reverb was worse. I didn't know that 30ms would have such a dramatic effect. Once it is aligned properly, all these negative qualities disappear.

P.S. I also thought my ears would hear only magnitude, not phase... so maybe phase control is important only around the crossover, at the listening point?

Sigh, I don't know what I believe in any more

There are 3 types of delays introduced by subwoofers: (1) time of flight delay, which is the delay caused by placing your sub at a different distance to your speaker. Calculate this with t = d/c (d = distance in m, c = speed of sound of 343m/s); (2) propagation delay, which is the delay introduced by the sub plate amp and potentially inertia of the drivers, (3) DSP delay, which is the delay caused by the FIR filter and any additional delay you introduce. The last 2 can only be measured using an acoustic pulse, the first can be approximated with a tape measure. In fact, the MAJORITY of the delay in my system is not time of flight, it is one of the other two.

Hello again Keith,

Your above summary of "delays by subwoofers" is really nice and comprehensive, and I fully agree with you!

Just for your and @GaryY's possible interest and reference, let me share "my case" based on your above wonderful summary.

In my setup, the difference in physical distance between microphone-woofer vs. microphone-subwoofer at my listening position is only 6 cm.

Therefore, in addition to the differences in inertia (mass of moving parts) and magnet strength between the woofer driver and the subwoofer driver (both 30 cm cones, though), I can understand that the "Helmholtz Resonator mechanism" of the YST-SW1000 would also more or less "contribute" to its transient delay relative to the lightweight 30 cm cone woofer in a sealed cabinet.

The active subwoofer YST-SW1000 has a powerful dedicated plate amplifier (120W, 5Ohm, 0.01% distortion), whereas the woofer is driven directly by a dedicated HiFi integrated amplifier, the YAMAHA A-S3000 (100W+100W/8Ohm, 150W+150W/4Ohm, 20Hz-20kHz 0.07%THD, damping factor >250). I do not know whether these amplifier differences contribute to the delay of the transient sound, or not.

As for your point (3), as you know, I use DSP EKIO's IIR Linkwitz-Riley XO filters with a mild -12 dB/Oct slope for low-pass (high-cut) and high-pass (low-cut) at an XO Fq of 50 Hz, which may more or less "contribute" (or not?) to the timing difference between subwoofer and woofer, just like your FIR filters.
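One way to probe the "(or not?)" question is to compare the group delay of the two crossover branches. The sketch below does this numerically for the ideal analog prototype of a 2nd-order (-12 dB/Oct) Linkwitz-Riley pair at 50 Hz. It is an assumption that EKIO's digital IIR filters behave like this prototype near 50 Hz, and the function names are made up for illustration.

```python
import cmath
import math


def lr2_lowpass(f_hz: float, fc_hz: float) -> complex:
    """Ideal analog LR2 low-pass: H(s) = 1 / (1 + s)^2 with s = j*f/fc."""
    s = 1j * f_hz / fc_hz
    return 1.0 / (1.0 + s) ** 2


def lr2_highpass(f_hz: float, fc_hz: float) -> complex:
    """Ideal analog LR2 high-pass: H(s) = s^2 / (1 + s)^2 with s = j*f/fc."""
    s = 1j * f_hz / fc_hz
    return s ** 2 / (1.0 + s) ** 2


def group_delay_ms(h, f_hz: float, fc_hz: float, df: float = 1e-3) -> float:
    """Numerical group delay, -d(phase)/d(omega), by central difference."""
    dphi = cmath.phase(h(f_hz + df, fc_hz)) - cmath.phase(h(f_hz - df, fc_hz))
    # Remove a possible 2*pi jump between the two phase samples.
    dphi = (dphi + math.pi) % (2.0 * math.pi) - math.pi
    return -dphi / (2.0 * math.pi * 2.0 * df) * 1000.0


fc = 50.0  # XO Fq from the post
for f in (25.0, 50.0, 100.0):
    lp = group_delay_ms(lr2_lowpass, f, fc)
    hp = group_delay_ms(lr2_highpass, f, fc)
    print(f"{f:5.0f} Hz: low-pass {lp:.3f} ms, high-pass {hp:.3f} ms")
```

In this idealized model both branches share the same denominator, so they show the same group delay at every frequency (about 3.18 ms at 50 Hz) and differ only by a polarity inversion. Under that assumption the crossover filters alone would not account for a relative timing difference between subwoofer and woofer.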

With these considerations in mind, which are consistent with your nice summary above, I very carefully measured the relative delay of the sound from the subwoofer YST-SW1000 against the 30 cm woofer YAMAHA JA-3058 in a sealed cabinet by the following three methods:

1. Time-shifted sine tone-burst method (ref. #493)

2. Simultaneous multiple-Fq sine-tone-burst-peak matching method (ref. #494)

3. Sine tone-burst 8-wave, 3-wave, single-wave precise matching method (ref. #504, #507)

All three methods clearly told me that, in my case, a 16.0 msec delay adjustment (time alignment) of the woofer relative to the subwoofer is just right for perfect synchronization of my subwoofer and woofer.

I understand that in your audio setup the actual measured delay of subwoofers was/is 11.4 msec determined by your method(s), right?

Furthermore, as for time alignment between woofer and midrange (both driven directly by dedicated amplifiers), I found by method 3 (ref. #504) that delaying the midrange by 0.3 msec relative to the woofer gives perfect synchronization; that is, the woofer's onset lags the midrange by 0.3 msec. Again, I assume the 0.3 msec difference is attributable to the large difference in inertia (moving mass) between the 30 cm paper woofer cone and the 8.8 cm extremely light beryllium dome diaphragm of the midrange, as described in #494.
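For readers who want to experiment with tone bursts like the 8-wave, 3-wave and single-wave signals mentioned above, here is a minimal sketch that generates a sine burst and writes it as a mono 16-bit WAV using only the Python standard library. The 50 Hz burst frequency, 48 kHz sample rate, amplitude, padding and file names are assumptions for illustration; these are not the actual test tracks used in the referenced posts.

```python
import math
import struct
import wave


def sine_burst(freq_hz, cycles, sample_rate=48000, amplitude=0.5, silence_s=0.5):
    """Return samples in [-1, 1]: silence, `cycles` whole sine periods, silence."""
    n_burst = round(sample_rate * cycles / freq_hz)
    n_silence = round(sample_rate * silence_s)
    burst = [
        amplitude * math.sin(2.0 * math.pi * freq_hz * n / sample_rate)
        for n in range(n_burst)
    ]
    return [0.0] * n_silence + burst + [0.0] * n_silence


def write_wav_mono16(path, samples, sample_rate=48000):
    """Write float samples in [-1, 1] as a mono 16-bit PCM WAV file."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wf.setframerate(sample_rate)
        frames = b"".join(
            struct.pack("<h", int(round(s * 32767))) for s in samples
        )
        wf.writeframes(frames)


if __name__ == "__main__":
    # Cycle counts follow the 8-wave, 3-wave and single-wave bursts in the post.
    for cycles in (8, 3, 1):
        write_wav_mono16(f"burst_50hz_{cycles}cyc.wav", sine_burst(50.0, cycles))
```

Starting and ending the burst on whole cycles keeps the signal at zero at both edges, which avoids an extra click that could be mistaken for the driver's onset.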

GaryY

Senior Member

Thanks for introducing lots of great methods. I tried to use the same burst measurement method by creating a file in REW, but somehow I could not create a WAV file that REW would play for measurement. The error message said the created WAV file was not in the proper format for measurement.

Even with this negligible difference in physical distance, I need to carefully understand/consider the "operation mode/mechanism" of my L&R large-heavy subwoofer YAMAHA YST-SW1000 (48 kg each, 30 cm powerful cone driver in YST=YAMAHA Active Servo Technology system) around the XO Fq. I do hope your web browser would properly translate this web page on YST-SW1000 into English, especially the YST system fully utilizing Helmholtz Resonator mechanism.

Thanks for introducing lots of great methods. I tried to use the same burst measurement method by creating a file in REW, but somehow I could not create a WAV file that REW would play for measurement. The error message said the created WAV file was not in the proper format for measurement.

Thank you for your kind attention on my time alignment tuning efforts.

I can share with you the test tone burst signal tracks I prepared for my tuning.

I will soon contact you through PM communication system on this ASR Forum!
