An intelligent weapon that can be made completely autonomous, heavily armed, and able to easily out-think and out-maneuver any human or group of humans is what scares me.

Automatic weapons are old news, unfortunately. It doesn't really require AI to do it, even though that's often conflated with neural nets today.




How Dangerous is AI?

- Thread starter Ron Texas


- Status

- Not open for further replies.

The Switchblade (autonomous kamikaze drone) fits that bill on a tactical level. Not really scary on its own, in my opinion, but the fact that it can be replicated on the cheap by a radicalized Joe Schmoe is.

We have not let neural nets control force projection on the level of, say, the movie below... yet.


PHD

Active Member

- Joined

- Mar 15, 2023

- Messages

- 292

- Likes

- 441

Does anyone know if any current or upcoming room correction software uses AI? If so, I assume it would be either Trinnov's room optimizer or Dirac's upcoming ART. I think advanced room correction is a great candidate application for AI, or at least for neural networks.

They said similar things about pocket calculators, then computers, then self-driving cars, and now AI. I remember differential equations taking three pages to solve in college; now they are trivial on a computer. I remember standing in a room with a room-sized computer at Eli Lilly used to model new drugs, and that can now be done with a couple of laptops. Maybe because I'm dyslexic and it took me three minutes to type three sentences, I feel differently, or maybe I just like to think optimistically about new technology.

The risks of AI are great, not in the sense that computers get smarter than us, but in the sense that our human stupidity becomes mirrored, automated, and powerful on a previously unimaginable scale.

MRC01

Major Contributor

AI doesn't "think" or "understand" like (some) humans do. Every ML algorithm essentially boils down to pattern recognition. Yet they can be quite sophisticated, and even if they never actually think or understand, they may someday become functionally indistinguishable from humans.

The definition of ML has 3 key parts:

1. Given data, discovers patterns on its own, which the programmers did not tell it about or anticipate.

2. Uses these patterns to make predictions or decisions.

3. Given more data, makes better predictions or decisions.
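The three parts above can be illustrated with a deliberately tiny example. This is a minimal sketch, not a real ML algorithm from any library: the `ThresholdLearner` class, the hidden rule "label is 1 when x ≥ 5", and the example data are all hypothetical choices made for illustration. The learner is never told the cut-off; it infers one from labelled examples (part 1), uses it to classify new values (part 2), and, in this contrived case, gets closer to the hidden rule when given more examples (part 3).

```python
from typing import List, Optional, Tuple

Example = Tuple[float, int]  # (feature value, label 0 or 1)


class ThresholdLearner:
    """Toy learner (hypothetical): finds a 1-D cut-off between two classes."""

    def __init__(self) -> None:
        self.threshold: Optional[float] = None

    def fit(self, examples: List[Example]) -> "ThresholdLearner":
        # Part 1: the cut-off is discovered from the data, not written by the programmer.
        # Assumes the data contain both labels and are separable by a single cut-off.
        negatives = [x for x, y in examples if y == 0]
        positives = [x for x, y in examples if y == 1]
        self.threshold = (max(negatives) + min(positives)) / 2
        return self

    def predict(self, x: float) -> int:
        # Part 2: use the discovered pattern to make a decision.
        if self.threshold is None:
            raise ValueError("call fit() first")
        return 1 if x >= self.threshold else 0


def accuracy(model: ThresholdLearner, test: List[Example]) -> float:
    # Fraction of test examples the model labels correctly.
    return sum(model.predict(x) == y for x, y in test) / len(test)


def true_label(x: float) -> int:
    # Hidden rule used only to label the data; the learner never sees it.
    return 1 if x >= 5 else 0


TEST_SET = [(x, true_label(x)) for x in range(10)]

if __name__ == "__main__":
    few = [(2, 0), (10, 1)]
    more = few + [(4, 0), (6, 1)]
    # Part 3: more data moves the cut-off closer to the hidden rule.
    for data in (few, more):
        model = ThresholdLearner().fit(data)
        print(model.threshold, accuracy(model, TEST_SET))
```

Run as a script, it prints the learned cut-off and test accuracy for each training set: with only two examples the cut-off lands at 6.0 and x = 5 is misclassified; two extra examples near the boundary move it to 5.0. With real, noisy data, more data tends to help rather than being guaranteed to.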

Every practical computer is a Turing machine, which means that ultimately everything it does boils down to 4 operations: READ, WRITE, IF, GOTO. AI/ML gets a lot of hype from Musk et al. Most of the experts, like experts in any other field, have a strong curiosity about and fascination with the field (else they wouldn't have invested years of their lives studying it), so they focus on capabilities rather than limitations. Some get so entranced by the possibilities that they redefine their own humanity in terms of it, with notions like, "my mind is nothing more than a Turing machine, and any understanding or consciousness I have is just an illusion, however real it might seem." But some experts do talk about limitations, and aren't afraid to discuss the idea that meaning might be deeper than formal logic, and that truth may be deeper than formal proof. For example, Gary Marcus and Emily Bender, among others. For a more balanced perspective on this subject, Google them and read some of their stuff.
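To make the "READ, WRITE, IF, GOTO" description concrete, here is a minimal sketch of a one-tape Turing machine in Python. The binary-increment transition table, the function name `run`, and the `_` blank symbol are illustrative assumptions, not anything from this thread. A formal Turing machine step also moves the tape head, which the four-operation summary folds into READ and WRITE; the comments mark where each operation happens.

```python
from typing import Dict, Tuple

BLANK = "_"

# Transition table: (state, symbol read) -> (symbol to write, head move, next state).
# This hypothetical machine adds 1 to a binary number, starting at its last digit.
INCREMENT: Dict[Tuple[str, str], Tuple[str, int, str]] = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),  # ran off the left end: new leading 1
}


def run(table, tape_str: str, state: str = "carry", max_steps: int = 10_000) -> str:
    tape = dict(enumerate(tape_str))  # sparse tape; unwritten cells read as blank
    head = len(tape_str) - 1          # start on the rightmost symbol
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape.get(head, BLANK)               # READ
        # IF: branch on (state, symbol); the new state is the GOTO target.
        write, move, state = table[(state, symbol)]
        tape[head] = write                           # WRITE
        head += move                                 # move the head
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)


if __name__ == "__main__":
    print(run(INCREMENT, "1011"))  # prints 1100 (11 + 1 = 12)
    print(run(INCREMENT, "111"))   # prints 1000 (7 + 1 = 8)
```

Replacing the table changes what the machine computes; the interpreter loop itself stays the same.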

Ultimately, it requires human supervision. AI/ML can outperform humans on tasks that match their training, but their performance becomes unpredictable and poor as soon as they encounter situations outside that training. Only a human can understand the context and meaning of a situation and act accordingly outside their instructions, or disobey an order upon realizing that carrying it out would be unethical or contrary to the mission objective.


computer-audiophile

Major Contributor

People's distrust of each other grows when they no longer know whether a message, a photo, or a video is genuine or just a manipulation of the truth. But that is still one of the more harmless consequences. I am sure that criminals are already making creative use of these AI techniques.

MRC01

Major Contributor

This is true, yet age old. Governments lie, and newspapers lie; in a free country they are different lies. There are plenty of cases of the truth being distorted or violated long before AI ever existed. But AI does exacerbate the situation, since it makes manipulation so much easier and more lifelike.

Great article:

You Are Not a Parrot

And a chatbot is not a human. And a linguist named Emily M. Bender is very worried what will happen when we forget this.

phoenixdogfan

Major Contributor

Most likely, we will be viewed either as pets or as cockroaches. Either way, it's highly unlikely we'll be masters of it, and because of the striving for competitive advantage among our differing human factions, it's highly unlikely the rollout will happen without any guardrails whatsoever.

But maybe it results in a post-scarcity society with indefinite lifespan extension. Not bloody likely, but maybe.


Scott Lanterman

Member

- Joined

- Feb 14, 2023

- Messages

- 57

- Likes

- 135

My view has shifted, but not for scientific reasons; thanks to kemmler3D and others. It's not about science, it's about ethics.

I have been following these speculative arguments for years, and there is something to it, but it's not science per se.

The internet is a mess. It is filled with scams and worse. An AI could make the internet much worse with more scams, more fakes, more disinformation, and even more bad stuff. But this is simply bad behavior.

Imagine what it would take to actually plan and implement a six-month ban on AI implementations. It would probably have to be done by the UN, or at least by a bipartisan Senate and House. People working together for the common good? Wow, that would be pretty cool. Is there an AI race going on? Yes, and it would have to be addressed internationally. Let's work together, people!

Here is one issue that is ubiquitous: the problem of identity online. An AI with fake bot accounts trolling a person is bad and could be horrific. Does this make the general issue of trolling people a problem? Yes; I think it is a general misery of online life for many. To be an internet troll you need to conceal your identity, or else those death threats will get you into trouble. So, in our six-month hiatus on AI, could the issue of being anonymous online be addressed? Scammers thrive with anonymous accounts and easy access to fake phone numbers and Facebook accounts. I suspect that online anonymity is really driven by a fear of reprisal from other anonymous folks online.

AI makes anonymity issues much worse. Perhaps we should be non-scientific and think purely about the ethics of being online and not being responsible for what we say and do. My first salvo in the six-month hiatus: let us consider that we should reduce or eliminate the ability to hide your identity online; this would harm scammers and criminals as well as trolls. Why are we so scared online that we cannot be ourselves? This fear is no way to go. We have to be responsible for our conduct, and if we are anonymous online we can act badly without repercussion, and many are seduced into having no respect for anyone.

So, let's go for it and hold a global summit about AI and the internet, and hopefully act with greater respect and care going forward, with some laws that make sense to rational folks of goodwill who really just want the best for all.

Scott Lanterman

Member

- Joined

- Feb 14, 2023

- Messages

- 57

- Likes

- 135

Even if we know it is an AI fake, it can be very harmful. Imagine a bunch of neo-Nazi skinheads changing Madonna's "Like a Virgin" into a right-wing fight song and making the AI Madonna do things against her will. She is known to be sympathetic to the horrors of the Holocaust. We should just pause, get the lawyers together, and figure out how to stop this kind of stuff now. I think you're right.

People's distrust of each other is promoted when they no longer know whether a message, a photo or a video is not just a manipulation of the truth. But that is still one of the more harmless consequences. I am sure that criminals are already making creative use of these AI techniques.

computer-audiophile

Major Contributor

Really a very good article. I enjoyed reading it, thank you!

Great article:

You Are Not a Parrot

And a chatbot is not a human. And a linguist named Emily M. Bender is very worried what will happen when we forget this.

nymag.com

MRC01

Major Contributor

Anonymity cuts both ways. I used to live on a small island where everyone knew everyone. There was essentially no violent or property crime. When we bought the house, it took the prior owner 2 weeks to find the keys because she had never used them in the 6 years she lived there! I left the keys in my car's ignition for 6 years straight. People from the far left, the far right, and everything in between found a way to at least tolerate each other and share the island mostly in harmony. Because, as you suggest, when you aren't anonymous, you take responsibility for everything you do and say.

... let us consider that we should reduce or eliminate the ability to hide your identity online, this would harm scammers and criminals as well as trolls.... We have to be responsible for our conduct, and if we are anonymous online we can act badly without repercussion and many are seduced into having no respect for anyone.

...

However, the ability to be anonymous can protect people in many situations. It can encourage people to speak up or act for the social good when they otherwise wouldn't for fear of repercussions. It can encourage whistleblowers and journalists, and help in many other beneficial situations.

So the question is how to enjoy the benefits and avoid the drawbacks? This is why I have mixed feelings about the topic of "use your real name on the internet".

UltraNearFieldJock

Active Member

Aren't we forgetting that we are also biological machines? Our AI (human intelligence) is just a process (software) in our brain and body (hardware) = Turing machine. The only difference is that we came into being through biological evolution, whereas the AI (software) and the computer (hardware) were created by us.

Only a human can understand the context and meaning of a situation and act accordingly, outside instructions, disobey an order realizing that carrying it out would be unethical, or contrary to the mission objective.

MRC01

Major Contributor

We don't know how the brain works. To say it is a Turing machine is a leap of faith.

Certainly, we all have a biological "computer" in our heads. Certainly we can follow formal logic, so our brains are at least Turing machines. But whether they are equivalent to a Turing machine, or something more, remains to be seen.

In that sense, the name we use for "neural net" models is the height of arrogance.

AlexanderM

Active Member

- Joined

- May 1, 2021

- Messages

- 299

- Likes

- 211

If something becomes self-aware, it can't be assumed that it will like us. If we come up with rules, laws, or protocols, there's no way everyone will follow them. I expect it's just a matter of time.

www.livescience.com


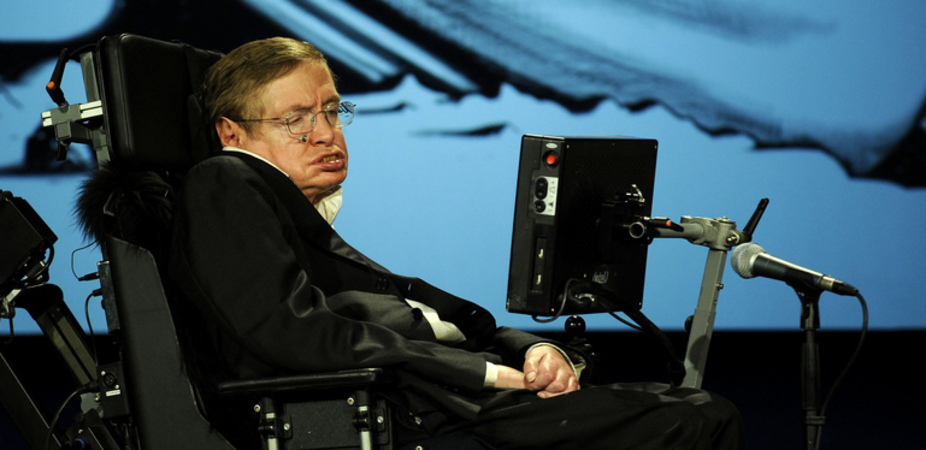

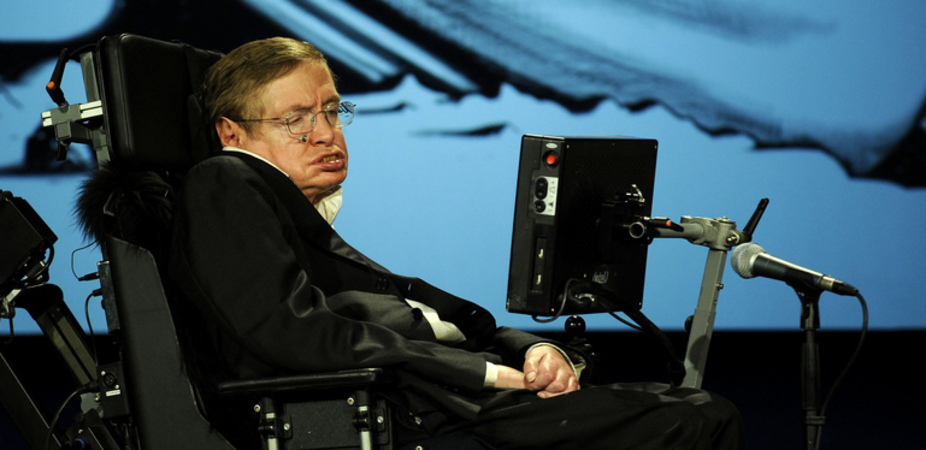

Stephen Hawking: Artificial Intelligence Could End Human Race

Famed British physicist Stephen Hawking warned that artificial intelligence could be a threat to mankind.


kemmler3D

Master Contributor

This is a bit of a sidetrack, but online anonymity is really important in non-free countries. For example, in China, Russia, and Ukraine (among others), depending on what information you might want to put online, it is actually a matter of life or death, and not just hypothetically.

Why are we so scared online that we cannot be ourselves? This fear is no way to go.

For most online activities, I would agree with you, anonymity just enables crappy behavior. But in some other contexts, it remains vital.

As for the summit / pause on AI being a practical idea: someone on the Ars Technica forum pointed out that the cost of training a model like GPT-4 is in excess of $100M. So the number of organizations that can actually do this is small, and they could quite easily come together and form some kind of mutual agreement. There is precedent: they pointed out that a similar summit (Asilomar) took place when scientists realized recombinant DNA research carried a lot of potential risks.

Personally I am in favor, anytime powerful organizations might take a step back and soberly consider the good of humanity... we should encourage every such meeting possible. I don't think we currently have an excess of those, to put it mildly.

Ignoring the doomsday stuff:

- Every video is now suspect.

- TikTok can procedurally generate more nonsense to entertain people indefinitely and adapt.

- No more links to valid sites on Google. The AI just adjusts and clicks on articles it wrote.

- You'll never see an ASR link again because other clickbait sites will refer to it with a trillion to the power of a trillion more references than exist here.

- Total curation of a curated internet. You'll be fed whatever information and ads the AI and programmers want you to see.

Google is bad enough right now, with bad links to "review" sites that don't even own the items they are reviewing, and things are moving to Reddit and Discord/chat rooms. The latter is destroying millions of hours of human communication, knowledge, and help, because you can't find it, it's less permanent, and it isn't easily searchable or recoverable.

But now everything can be like that, as the AI driving your browser negotiates with the AI of trash websites serving procedurally generated crap to keep you entertained, and you'll simply never be able to find that useful video.

Basically, imagine the internet today vs a dozen years ago when Google search results were more useful, and now multiply that by ten... and 100 the next year... and a trillion billion gazillion the next year.

At some point I want a paid internet: no AI, small niche websites promoted, and content farms and anything AI-generated blocked. I don't want to have to type in "Reddit" and still be unable to search through busted Discord chats, etc.


Agreed. AI has only written and numerical input, so its options are far more limited than those of the human brain. The new mRNA tech is much more likely to go Frankenstein, when people want taller and smarter kids and are willing to pay for it, or with racially or ethnically targeted compounds that do harm.

We don't know how the brain works. To say it is a Turing machine is a leap of faith.


UltraNearFieldJock

Active Member

Today's hype about AI stems from the realization that we are gradually losing our understanding of how AI works. The same goes for our brain: these two Turing machines are too complex for our intelligence today.

We don't know how the brain works. To say it is a Turing machine is a leap of faith.
