Good way to make products far more complex and more costly than they need to be.

You are worrying about things that don't need to be worried about. When was the last time you came across an amp that was totally incompatible with a pre due to widely disparate outputs and sensitivities?

Trust me, the engineers and designers have given thought to this.

I trust you on this

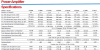

, but there is one example I can give, the ATI amps, that I thought may require closer scrutiny. Below are some specs for their two very similar products, the AT4000 seems to be a newer generation model that replaced the AT2000.

AT4000:

Rated 200 W into 8 ohms, 300 W into 4 ohms; input sensitivity: 1.6 V; gain: 28 dB, same for both unbalanced and balanced

AT2000:

Rated 200 W into 8 ohms, 300 W into 4 ohms; input sensitivity: 1.6 V, same for both unbalanced and balanced; gain: 34 dB unbalanced (RCA), 28 dB balanced (XLR).

How would you reconcile the two? The AT2000 lists the same 1.6 V input sensitivity for both the RCA and XLR inputs, yet its gain is 6 dB higher for the RCA inputs. If you do the math, 34 dB gain with a 1.6 V input will result in an output of about 800 W into 8 ohms! So shouldn't the RCA input sensitivity for the AT2000 be 0.8 V?
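For anyone who wants to check that arithmetic, here is a minimal Python sketch. It assumes the spec'd gain is plain voltage gain in dB and that "rated output" means 200 W into 8 ohms; the function names are my own, not anything from ATI.

```python
import math

def db_to_voltage_ratio(gain_db):
    """Convert a voltage gain in dB to a plain voltage ratio."""
    return 10 ** (gain_db / 20)

def output_power(v_in, gain_db, load_ohms):
    """Power into the load for a given input voltage and gain."""
    v_out = v_in * db_to_voltage_ratio(gain_db)
    return v_out ** 2 / load_ohms

def input_sensitivity(rated_watts, load_ohms, gain_db):
    """Input voltage needed to reach the rated power into the load."""
    v_out = math.sqrt(rated_watts * load_ohms)
    return v_out / db_to_voltage_ratio(gain_db)

# AT2000 figures as discussed above (200 W into 8 ohms assumed as "rated").
p_rca = output_power(1.6, 34, 8)        # 1.6 V into the 34 dB RCA input
s_rca = input_sensitivity(200, 8, 34)   # what 34 dB implies for sensitivity
s_xlr = input_sensitivity(200, 8, 28)   # what 28 dB implies for sensitivity

print(f"1.6 V at 34 dB: {p_rca:.0f} W into 8 ohms")
print(f"Sensitivity at 34 dB: {s_rca:.2f} V")
print(f"Sensitivity at 28 dB: {s_xlr:.2f} V")
```

Under those assumptions the 28 dB input lines up with the published 1.6 V, while the 34 dB input works out to roughly half that.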

Now if you look at the AT4000, the input sensitivity is again 1.6 V for both RCA and XLR, but now the gain is 28 dB for both inputs, which is at least self-consistent: 1.6 V at 28 dB gives about 40 V, or 200 W into 8 ohms. So in this case, it would seem that the AT4000 would work better with an AV processor/preamp whose XLR output is 2X its RCA output, if that AVP measured much better in SINAD at 2 to 2.4 V than at 4 V output, such as the AV7705?

ATI's explanation for the difference in input sensitivity and gain between the AT2000 and AT4000 (see below) seems fine, as they said they were able to "normalize the input voltage" for the AT4000 (the newer gen.), but I still have a little trouble with the specified input sensitivity and can't help wondering if there is a typo or two somewhere.

Since you are from a reputable audio equipment manufacturer, I hope you can take a good look and shed some light on this. I have no trouble understanding the input sensitivity specs of Marantz, Yamaha, McIntosh, Parasound, and many other power amps; for now it is just ATI's that I find confusing.

When asked, ATI apparently explained the difference in specs between the 2000 and 4000 as follows:

https://forums.audioholics.com/foru...-to-be-a-denon-avr.119013/page-6#post-1417210

The AT2000/3000 circuitry didn't allow us to normalize input voltage. The AT4000/6000 design allowed us to make input voltage the same for RCA and XLR, so that the same gain can be applied to both.

XLR output on most (not all) pre-amps is 6dB louder than the RCA output. But that's just a result of having two signal wires instead of one. That doubles the signal (and noise), so it's not like XLR has a better signal than the RCA, just twice as much of the same signal.

We haven't decided on output voltage for the ATP-16 or whether XLR and RCA will be the same.

I much prefer the way March Audio specifies the P252: no input sensitivity specs, just gain, and that's good enough for me.

https://www.marchaudio.net.au/product-page/p252-stereo-250-watt-power-amplifier

Power Output

- 2 Ohms - 180 W rms

- 4 Ohms - 250 W rms

- 8 Ohms - 150 W rms

Voltage Gain 26dB

That's because I can then calculate the input sensitivities easily, based on the rated output into 2, 4, and 8 ohms.

That would be:

- 2 Ohms - 180 W rms (*Input sensitivity: 0.95 V)

- 4 Ohms - 250 W rms (*Input sensitivity: 1.58 V)

- 8 Ohms - 150 W rms (*Input sensitivity: 1.74 V)

* The input sensitivity figures are from my calculations only, not part of March Audio's specs. I could be wrong if by chance I made some silly mistakes.
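The same calculation can be sketched in a few lines of Python. This assumes the 26 dB figure is voltage gain and that the rated powers above are continuous sine-wave ratings; the variable and function names are only illustrative.

```python
import math

GAIN_DB = 26                       # March Audio's published voltage gain
RATED = {2: 180, 4: 250, 8: 150}   # load in ohms -> rated power in watts

def input_sensitivity(rated_watts, load_ohms, gain_db):
    """Input voltage needed to reach the rated power into the load."""
    v_out = math.sqrt(rated_watts * load_ohms)   # from P = V^2 / R
    return v_out / 10 ** (gain_db / 20)          # divide by the voltage ratio

sens = {load: input_sensitivity(watts, load, GAIN_DB)
        for load, watts in RATED.items()}

for load, volts in sens.items():
    print(f"{load} ohms: {RATED[load]} W -> {volts:.2f} V")
```

With gain alone published, the sensitivity for any load follows directly from the rated power, which is why I find that style of spec easier to work with.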

With ATI's amps I can do the same kind of calculation, but given the way they are specified, I can't help but wonder if I interpreted their specs correctly.

Edit: For some reason I must have keyed in something wrong (the formulas in my Excel sheet were right), so the sensitivity results were all wrong. They are corrected now, and of course they are the same as those calculated by restorer-John and March Audio.

keysandampexforak