In my previous reply I said:

I have a very good reason to believe that this effect has something to do with spectral cues and listening environment, more so than the loudspeakers, setup, or the recording itself. To test for this I chose this recording:

If I had to pick one recording that sounds "different" on closed-back headphones versus loudspeakers in an untreated room, it would be this one. There are so many details panned left and right that seem subtle in the room but are actually not that subtle, and much more easily audible, on headphones.

I listened to it at the same quiet level in three conditions: during the day in a noisy environment, late at night with the desktop computer (with multiple fans) on, and finally with the computer off.

What I found is that the less broadband noise there was sharing bandwidth with the reverberant field, the more precise my localization became. In the quietest environment, there was no difference at all between listening with eyes open and eyes closed. Then I listened to the same track over headphones again, only to find that I had actually heard all the details clearly.

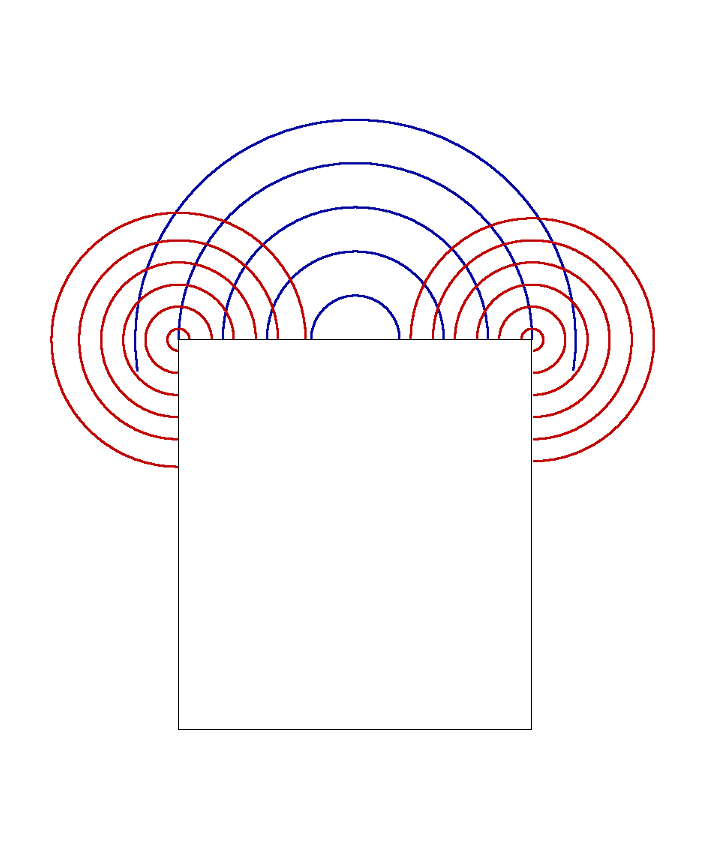

I believe this has something to do with the spectral cues we get from the shape of our pinnae, and with the filtering we do when we try to locate an apparent sound source. When broadband noise makes that information fuzzier, with eyes open there is probably an expectation bias from knowing that the sound must be coming from the loudspeakers, while the ITD and ILD information is telling us otherwise. The result is subtle image shifts and less precision when you try to point to where the image actually is. Calibrating spectral cues is a delicate thing, I suppose. Try locating your phone by its ringing when you have lost it somewhere in the room: all the reflections won't make that easy.

In conclusion, even though we get used to "filtering out the room" from the sound field and do it routinely, broadband noise may affect this. Thinking about it, even with controlled directivity some energy is still radiated into the room; you only control how much of it. Direct sound is never "all you get", and reflections, combined with noise, may very well cause some out-of-phase cancellations on some recordings.
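To put rough numbers on the cancellation idea, here is a minimal sketch of the textbook case of direct sound plus one delayed reflection (a comb filter). It is only an illustration, not a model of my room: the 343 m/s speed of sound, the extra path length, the reflection level of 0.5, and the function names are all assumptions picked for the example, and noise is not modelled at all.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def notch_frequencies(extra_path_m, f_max_hz=20000.0):
    """Frequencies (Hz) where a single reflection arrives 180 degrees
    out of phase with the direct sound, up to f_max_hz."""
    delay_s = extra_path_m / SPEED_OF_SOUND
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2 * delay_s)  # odd multiples of 1 / (2 * delay)
        if f > f_max_hz:
            break
        notches.append(f)
        k += 1
    return notches


def combined_gain_db(freq_hz, extra_path_m, reflection_gain=0.5):
    """Level (dB, relative to direct sound alone) of direct sound plus
    one reflection. reflection_gain is a hypothetical linear level."""
    delay_s = extra_path_m / SPEED_OF_SOUND
    h = 1 + reflection_gain * cmath.exp(-2j * math.pi * freq_hz * delay_s)
    return 20 * math.log10(abs(h))


if __name__ == "__main__":
    # A reflection that travels 0.343 m further arrives about 1 ms late.
    for f in notch_frequencies(0.343, f_max_hz=3000.0):
        print(f"{f:7.1f} Hz: {combined_gain_db(f, 0.343):+.2f} dB")
```

With a real room there are many reflections at different delays and levels, so the notches partly fill in, but the sketch shows why a given panned detail can lose level at some frequencies depending on the geometry.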

@jim1274, you may want to try the recording I shared above. I expect some interesting observations may arise with different setups.