dasdoing


From a YouTube video that is well known here:

Can anybody explain this further? What happens in the nearfield?


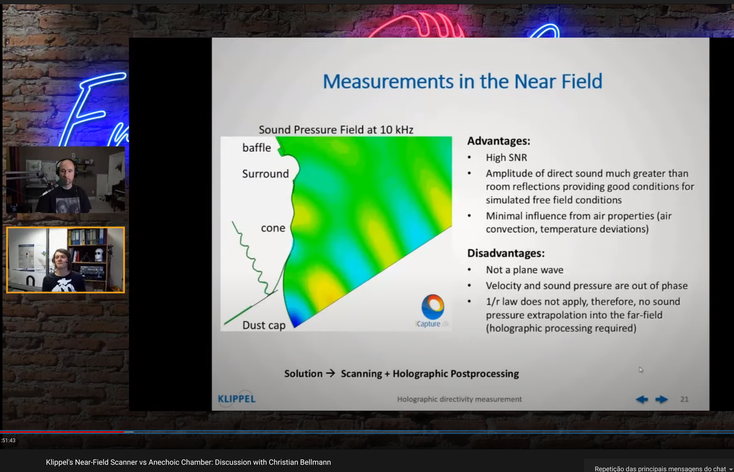


Normally, as a sound wave travels from a source (speaker) to a boundary (wall), particle velocity decreases and pressure increases. At the wall, pressure is at its maximum while velocity is zero. As the wave bounces back from the wall as a reflection, pressure decreases and velocity increases. The same thing applies to a speaker's nearfield, where pressure is initially at its maximum and then decreases as the wave propagates.
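The wall part of this description can be sketched numerically. The snippet below is a minimal illustration under assumed idealizations that are not from the original post: lossless air, normal incidence on a perfectly rigid wall, complex amplitudes with e^{jωt} time dependence, and illustrative values for air density (1.2 kg/m^3) and speed of sound (343 m/s). It adds the incident and reflected waves and shows that pressure is maximal and particle velocity zero at the wall, that the roles swap a quarter wavelength away, and that wherever both are nonzero they are 90 degrees apart in time. It says nothing about the nearfield claim in the last sentence.

```python
import cmath
import math

# Illustrative assumptions (not from the thread): lossless air, rigid wall,
# normal incidence, complex amplitudes with e^{j*omega*t} time dependence.
RHO = 1.2      # air density, kg/m^3
C = 343.0      # speed of sound, m/s


def standing_wave(d, freq, p_inc=1.0):
    """Complex pressure p (Pa) and particle velocity u (m/s) at distance d (m)
    in front of a rigid wall located at d = 0.

    u is measured in the +d direction (away from the wall).
    """
    k = 2 * math.pi * freq / C
    toward_wall = cmath.exp(1j * k * d)      # incident wave, travelling in -d
    away_from_wall = cmath.exp(-1j * k * d)  # reflected wave, travelling in +d
    p = p_inc * (toward_wall + away_from_wall)                # = 2 P cos(kd)
    u = (p_inc / (RHO * C)) * (away_from_wall - toward_wall)  # = -2j (P / rho c) sin(kd)
    return p, u


if __name__ == "__main__":
    freq = 100.0                 # Hz, arbitrary example
    lam = C / freq               # wavelength, m
    for d in (0.0, lam / 8, lam / 4):
        p, u = standing_wave(d, freq)
        print(f"d = {d:5.3f} m: |p| = {abs(p):.3f} Pa, |u| = {abs(u) * 1000:.3f} mm/s")
    p, u = standing_wave(lam / 8, freq)
    print("phase(p) - phase(u) at lambda/8:", round(math.degrees(cmath.phase(p / u)), 3), "deg")
```

The velocity term uses the fact that a wave travelling in -d carries velocity -p/(ρc) in the +d direction, which is what makes the two waves add in pressure but cancel in velocity at the wall.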

The acoustical nearfield of a speaker acts like many chaotic sound sources that haven't yet combined (per Huygens' principle, below) into a coherent wavefront with a clear direction of propagation, i.e. a plane wave. Before this summation, the phase relationship between sound pressure and particle velocity is essentially random. This is where acoustical holography comes in, and why the NFS (Klippel Near-Field Scanner) needs so many measurement points: to capture the exact propagation of the nearfield sources and then compute their farfield values (their summation).

[Attachment 117459: Huygens' principle]

I'm not an engineer and don't have a physics background, so anyone reading, please correct me.
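One case where the "many small sources that have not yet summed into a wavefront" picture can be computed exactly is the on-axis field of a rigid circular piston in an infinite baffle. Adding up the contributions of every small patch of the piston (the Rayleigh integral, a formal version of Huygens' principle) gives the textbook closed form p(z) = ρcU₀[e^{-jkz} - e^{-jk√(z²+a²)}] for piston radius a and surface velocity U₀. The sketch below is an idealized illustration only (rigid piston, infinite baffle, lossless air, illustrative constants, e^{jωt} convention; function names are hypothetical), not a model of a real loudspeaker or of how the NFS processes its data. It derives the axial particle velocity from the linearized Euler equation and prints the pressure-velocity phase next to the value arctan(1/(kz)) that a point source would give at the same distance: close to the piston the two disagree and the phase is far from zero, while a few meters out both approach zero.

```python
import cmath
import math

# Illustrative assumptions (not from the thread)
RHO = 1.2    # air density, kg/m^3
C = 343.0    # speed of sound, m/s


def piston_on_axis(z, freq, radius, u0=1.0):
    """On-axis complex pressure and axial particle velocity of a baffled rigid piston.

    z: distance from the piston face along its axis (m), z >= 0
    radius: piston radius (m); u0: piston surface velocity amplitude (m/s)
    Closed-form Rayleigh-integral result with e^{j*omega*t} time dependence.
    """
    k = 2 * math.pi * freq / C
    edge = math.hypot(z, radius)          # distance from the field point to the piston rim
    direct = cmath.exp(-1j * k * z)       # term associated with the piston centre
    rim = cmath.exp(-1j * k * edge)       # term associated with the piston rim
    p = RHO * C * u0 * (direct - rim)
    # linearized Euler equation j*omega*rho*u = -dp/dz, differentiated analytically
    u = u0 * (direct - (z / edge) * rim)
    return p, u


def phase_p_minus_u_deg(z, freq, radius):
    """Phase of pressure relative to axial particle velocity, in degrees."""
    p, u = piston_on_axis(z, freq, radius)
    return math.degrees(cmath.phase(p / u))


if __name__ == "__main__":
    freq, radius = 1000.0, 0.1            # 1 kHz, 10 cm piston radius (arbitrary example)
    k = 2 * math.pi * freq / C
    for z in (0.02, 0.1, 0.5, 2.0, 20.0):
        point_source = math.degrees(math.atan(1 / (k * z)))
        print(f"z = {z:5.2f} m  piston: {phase_p_minus_u_deg(z, freq, radius):6.2f} deg"
              f"  point source: {point_source:6.2f} deg")
```

At z = 0 the formula returns u = U₀, i.e. the velocity of the piston surface itself, which is a quick consistency check on the Euler-equation step.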

It is a "problem" with headphones.

Hard for me to understand this.

But what I understood is that the waves are still forming in the nearfield?

Why is this not a problem with headphones?

Headphones couple directly to your ear system. There is so little distance and volume between the driver and your eardrum that, from a physical perspective, the space behaves less like air (acoustics) than like a fluid (hydrodynamics), and sound moves a lot more efficiently in fluids than in air. However, this is, again, frequency dependent. At high frequencies you start seeing acoustical resonances related to the shape of the outer ear and the volume of the ear canal, and for bass there needs to be a clean seal. (@NTK beat me to it.)


I am not sure he means what it says. An established sound wave traveling through the air HAS the property that the velocity and pressure ARE 90 degrees out of phase.

The acoustic pressure perturbation and the acoustic velocity perturbation of an established traveling plane wave (and wave train) are exactly in phase (as mentioned by pozz in post #5). The situation you mention applies to established standing waves, in which the velocity is 90 degrees out of phase with the pressure. Because sound attenuates along its journey, room/wall reflections are weaker than the original, so one might expect the pressure and velocity to be largely in phase in the midfield. In the nearfield of the speaker, where the Klippel measurements are made, the waves emanating from the driver and their reflections off the surrounding surfaces have not yet interacted and coalesced into coherent wavefronts, and during this process the pressure (a scalar) and the velocity (a directed quantity) will in general not be in phase.
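The contrast between a lone traveling plane wave (pressure and velocity in phase), a fully reflected one (standing wave, 90 degrees), and a weakened reflection can be sketched with two counter-propagating plane waves. This is a minimal 1-D illustration under assumed idealizations: plane waves only, a real pressure reflection coefficient R between 0 and 1 standing in for all losses along the path, and velocity measured along the incident direction; the function names are hypothetical. For such a pair, the largest phase deviation between p and u over position works out to 2·arctan(R), which the code checks by brute force: small reflections keep p and u close to in phase, and the deviation approaches 90 degrees only as R approaches 1.

```python
import cmath
import math


def p_u_phase_deg(kd, refl):
    """Phase of pressure relative to particle velocity, in degrees, at k*d = kd
    in front of a reflector with real pressure reflection coefficient 0 <= refl < 1.

    Velocity is measured along the incident wave's direction of travel, so a lone
    travelling wave (refl = 0) has p = rho*c*u, i.e. 0 degrees.
    """
    incident = cmath.exp(1j * kd)            # wave heading toward the reflector at d = 0
    reflected = refl * cmath.exp(-1j * kd)   # weaker wave heading back out
    p = incident + reflected
    rho_c_u = incident - reflected           # rho*c*u: the reflected wave's velocity points the other way
    return math.degrees(cmath.phase(p / rho_c_u))


def max_phase_deg(refl, samples=20001):
    """Largest |phase(p) - phase(u)| over one wavelength of distance (brute force)."""
    return max(abs(p_u_phase_deg(2 * math.pi * i / (samples - 1), refl))
               for i in range(samples))


if __name__ == "__main__":
    for refl in (0.0, 0.1, 0.3, 0.7, 0.95):
        closed_form = math.degrees(2 * math.atan(refl))
        print(f"R = {refl:4.2f}: max phase difference {max_phase_deg(refl):6.2f} deg"
              f" (2*atan(R) = {closed_form:6.2f} deg)")
```

R = 1 is excluded on purpose: at the velocity nodes of a perfect standing wave u is exactly zero and the phase is undefined there.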

I said that unclearly. In an expanding sound wave, V and P are 90 degrees out of phase, or at least shifted relative to each other.

This continues until the wavefront approaches a plane wave.
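A point-source (spherical) wave makes this concrete. The minimal sketch below assumes an ideal outgoing spherical wave p = A·e^{-jkr}/r with e^{jωt} time dependence and illustrative air constants; the names are hypothetical. From the linearized Euler equation, the radial particle velocity is u = p/(ρc)·(1 + 1/(jkr)): one part in phase with p, plus a part 90 degrees behind it whose relative size is 1/(kr). The pressure therefore leads the velocity by arctan(1/(kr)), which shrinks toward zero (a locally plane wave) as r grows beyond a wavelength or so.

```python
import cmath
import math

# Illustrative assumptions (not from the thread): ideal point source, lossless air.
RHO = 1.2   # air density, kg/m^3
C = 343.0   # speed of sound, m/s


def spherical_wave(r, freq, amplitude=1.0):
    """Complex pressure and the two parts of the radial particle velocity of an
    outgoing spherical wave p = A e^{-jkr} / r (e^{j*omega*t} convention).

    r is measured from the wave's centre of curvature.
    """
    k = 2 * math.pi * freq / C
    p = amplitude * cmath.exp(-1j * k * r) / r
    u_far = p / (RHO * C)                  # falls off as 1/r, in phase with p
    u_near = p / (RHO * C * 1j * k * r)    # falls off as 1/r^2, lags p by 90 degrees
    return p, u_far, u_near


if __name__ == "__main__":
    freq = 100.0                           # Hz, arbitrary example (wavelength about 3.4 m)
    k = 2 * math.pi * freq / C
    for kr in (0.1, 0.5, 1.0, 2 * math.pi, 4 * math.pi):   # last two: one and two wavelengths
        p, u_far, u_near = spherical_wave(kr / k, freq)
        lead = math.degrees(cmath.phase(p / (u_far + u_near)))
        print(f"kr = {kr:5.2f}: |u_near|/|u_far| = {abs(u_near) / abs(u_far):5.2f}, "
              f"p leads u by {lead:5.2f} deg")
```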

Thanks for clarifying what you meant, and thanks for the detailed explanations and the linked discussion on Stack Exchange. I mistook your previous statement to be about a traveling plane wave train. Yes, you are quite right: in an expanding wave (like a spherical wave), the radial acoustic velocity (the only acoustic velocity component in a pure spherical acoustic wave) has two parts: one that attenuates as 1/r like the acoustic pressure, and a second that attenuates as 1/r^2. The first part is proportional to the acoustic pressure at the same time and location, while the time derivative of the second part is proportional to the acoustic pressure divided by r (it comes from the 1/r falloff term in the spatial derivative of the pressure). If the acoustic pressure varies sinusoidally in time and space, the first part of the acoustic velocity will also be a sine wave in phase with the acoustic pressure, and the second part will be a cosinusoidal variation, i.e. a sinusoidal variation 90 degrees out of phase with the acoustic pressure (taking the phase of each relative to a peak or null). Please note that the effective radial distance is measured from the center of curvature of the acoustic wave, which should normally lie behind the driver diaphragm.

A reference:

Pressure and particle V are in phase in a plane-wave far field (a resistive condition), but not in a spherical or expanding wavefront until the wave has expanded enough to be locally "a plane wave".

https://physics.stackexchange.com/q...een-particle-velocity-and-sound-pressure-in-s

"where if I recall correctly the minus sign means a wave traveling outwards and the plus means a wave traveling inwards. So:

$$u = \frac{f(ct \mp x)}{r^2} \pm \frac{f'(ct \mp x)}{r}$$

$$p = \rho c\,\frac{f'(ct \mp x)}{r}$$

It's because u and p have a different r dependence that you get the change in phase for distances below a wavelength or two."
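As a sanity check of the quoted formulas, the sketch below reads x as the radius r (an assumption about the quoted notation) and plugs in a hypothetical 1 kHz sine for f. It verifies numerically that both the outward and inward versions satisfy the linearized Euler equation ρ ∂u/∂t = -∂p/∂r, then measures how far the peak of u trails the peak of p at a fixed radius. The measured lag should match arctan(1/(kr)): large below a wavelength, small beyond it. The finite-difference steps and sampling density are arbitrary choices, not from the quoted answer.

```python
import math

# Illustrative assumptions (not from the quoted answer): x is read as the radius r,
# and f is a hypothetical 1 kHz sine wave; any smooth f should work.
RHO = 1.2                        # air density, kg/m^3
C = 343.0                        # speed of sound, m/s
K = 2 * math.pi * 1000.0 / C     # wavenumber of the 1 kHz example


def f(s):
    return math.sin(K * s)


def f_prime(s):
    return K * math.cos(K * s)


def fields(r, t, outward=True):
    """(u, p) from the quoted formulas: u = f/r^2 +/- f'/r, p = rho*c*f'/r."""
    sign = -1.0 if outward else 1.0      # argument is ct - r (outward) or ct + r (inward)
    s = C * t + sign * r
    u = f(s) / r**2 - sign * f_prime(s) / r
    p = RHO * C * f_prime(s) / r
    return u, p


def euler_residual(r, t, outward=True, h_t=1e-8, h_r=1e-6):
    """Relative residual of the linearized Euler equation rho*du/dt + dp/dr = 0,
    estimated with central finite differences."""
    du_dt = (fields(r, t + h_t, outward)[0] - fields(r, t - h_t, outward)[0]) / (2 * h_t)
    dp_dr = (fields(r + h_r, t, outward)[1] - fields(r - h_r, t, outward)[1]) / (2 * h_r)
    return abs(RHO * du_dt + dp_dr) / (abs(RHO * du_dt) + abs(dp_dr))


def lag_deg_from_peaks(r, n=36000):
    """How far (in degrees of one period) the peak of u trails the peak of p
    at radius r, for the outward wave, found by sampling one period."""
    period = 2 * math.pi / (K * C)
    times = [period * i / n for i in range(n)]
    t_p = max(times, key=lambda t: fields(r, t)[1])
    t_u = max(times, key=lambda t: fields(r, t)[0])
    return ((t_u - t_p) / period * 360.0) % 360.0


if __name__ == "__main__":
    for outward in (True, False):
        print("outward" if outward else "inward", "Euler residual:", euler_residual(0.5, 3e-4, outward))
    for r in (0.02, 0.05, 0.2, 1.0):
        expected = math.degrees(math.atan(1 / (K * r)))
        print(f"r = {r:4.2f} m: u trails p by {lag_deg_from_peaks(r):6.2f} deg"
              f" (atan(1/kr) = {expected:6.2f} deg)")
```

The sign pairing matters: the outward argument ct - r goes with +f'/r in u, and the inward argument ct + r goes with -f'/r, exactly as the ∓/± signs in the quoted formulas indicate.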

Perhaps it's also a semantic issue, as "out of phase" without a qualifier generally means opposite polarity rather than a 90-degree or other phase shift, but it could also mean "not 100% in phase".

I agree that the phrase "out of phase" in Christian's presentation slide is ambiguous in general, but in the context of his talk I understood it to mean "not in phase", with a variable phase difference. On the other hand, perhaps he was referring to the nearfield effect you pointed out for expanding spherical waves.

I believe it would be more correct to say that in standing waves AND interference patterns, the velocity and pressure maxima appear stationary instead of propagating away at the speed of sound, and to the degree the losses are small, they are in near-perfect quadrature (about 90 degrees of phase shift between them).

I agree that that is a good way to look at the phenomena, as the practical sensation and measurements are of stationary oscillations, and the pressure and velocity have a 90-degree phase difference with regard to the way most engineers would define phase in this situation (i.e., relative to maxima or nulls of each quantity). However, I prefer to interpret them as a superposition of counter-propagating or otherwise interfering acoustic waves, because their fundamental nature is that of acoustic perturbations.
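The "stationary versus propagating maxima" distinction can be illustrated with two counter-propagating plane waves. The minimal sketch below assumes a 1-D, lossless setup with a 100 Hz tone, c = 343 m/s, and a pressure reflection coefficient R as a stand-in for losses; the names are hypothetical. It tracks where the pressure maximum sits within one wavelength of the wall at a few instants. With R = 1 the maximum stays at the wall (it does not propagate), while with R = 0 it moves toward the wall by λ/8 every eighth of a period, i.e. at the speed of sound (the reported position wraps around within one wavelength).

```python
import math

# Illustrative assumptions (not from the thread): 1-D plane waves, lossless air.
C = 343.0      # speed of sound, m/s
FREQ = 100.0   # tone frequency, Hz


def pressure(d, t, refl):
    """Instantaneous pressure at distance d (m) from a wall at d = 0 and time t (s):
    a unit plane wave travelling toward the wall plus a reflection of strength refl."""
    k = 2 * math.pi * FREQ / C
    w = 2 * math.pi * FREQ
    return math.cos(w * t + k * d) + refl * math.cos(w * t - k * d)


def peak_position(t, refl, n=20000):
    """Grid position (within one wavelength of the wall) of the largest pressure at time t."""
    lam = C / FREQ
    grid = [lam * i / n for i in range(n)]
    return max(grid, key=lambda d: pressure(d, t, refl))


if __name__ == "__main__":
    period = 1 / FREQ
    for refl in (0.0, 0.5, 1.0):
        positions = [peak_position(period * frac, refl) for frac in (0.0, 1 / 16, 1 / 8)]
        print(f"R = {refl}: pressure maximum at " + ", ".join(f"{d:.3f} m" for d in positions))
```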