Serge Smirnoff


Hi everybody,

Over the last year the df-metric has been developed further, not least thanks to a partnership between the SoundExpert and Hypethesonics projects. We have assembled a measurement device capable of measuring the degradation of a music signal down to a Difference level of -80dB. The device is based on the TI PCM4222 evaluation board and shows accurate and stable performance. So far we have measured 23 portable audio players from different consumer segments: http://soundexpert.org/vault/dpa.html

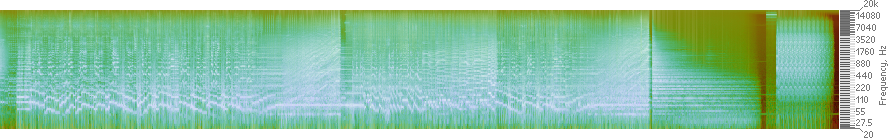

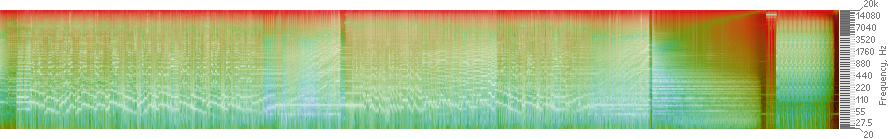

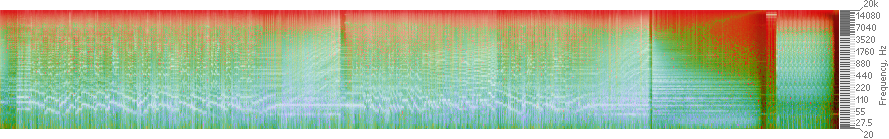

We have also made progress in visualizing df-measurements. The DF parameter can be computed equally well in the time and frequency domains. Working in the frequency domain makes it possible to compute DF levels separately for signal magnitudes and phases, and for different frequency sub-bands as well. Full-featured diffrograms computed this way show the degradation of a signal in two dimensions: over time, using a time window, and across frequency, using a set of frequency sub-bands. Below is an example of such a diffrogram, using a 400ms time window (1px wide) and 1/12-octave bands (1px each). The DUT is Chord Hugo 2; the test track is A Day In The Life by The Beatles:
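To make the pixel geometry concrete, here is a minimal Python sketch that lists the 1/12-octave bands between 20Hz and 20kHz. It assumes base-2 band centers anchored at 1kHz, a common convention but not necessarily the band layout used by the diffrogram33 function; `fractional_octave_bands` is a hypothetical helper.

```python
import math


def fractional_octave_bands(f_lo=20.0, f_hi=20000.0, fraction=12, f_ref=1000.0):
    """Return (lower edge, center, upper edge) for 1/fraction-octave bands whose
    centers fall inside [f_lo, f_hi].

    Centers sit at f_ref * 2**(k / fraction); this base-2 layout anchored at
    1kHz is an assumption, not necessarily the one diffrogram33 uses.
    """
    half_band = 2.0 ** (1.0 / (2 * fraction))  # center-to-edge frequency ratio
    k_min = math.ceil(fraction * math.log2(f_lo / f_ref))
    k_max = math.floor(fraction * math.log2(f_hi / f_ref))
    bands = []
    for k in range(k_min, k_max + 1):
        center = f_ref * 2.0 ** (k / fraction)
        bands.append((center / half_band, center, center * half_band))
    return bands
```

Under these assumptions the 20Hz-20kHz range splits into 119 bands of 1/12 octave (so a diffrogram about 119px high) and 59 bands of 1/6 octave.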

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Tm-89.3474(-76.8128)-21.6638_v3.3.png

The median DF level (dB) for this DUT/track is the value in brackets. The whole audible band is reproduced accurately and in full, with visible degradation only above 15kHz and in low-energy fragments of the signal.
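The per-window DF levels and a median over them can be illustrated with the Python sketch below. It assumes a simplified difference level (residual energy after least-squares gain matching, relative to output energy, in dB), non-overlapping 400ms windows, already time-aligned signals, and a median taken over the per-window values. This is not the official df-metric definition, and `df_level`/`windowed_df` are hypothetical names.

```python
import math
import statistics


def df_level(ref, out):
    """Simplified difference level in dB (an assumption, not the official df-metric).

    The reference is gain-matched to the output by least squares and the energy
    of what remains is expressed relative to the output energy, so identical or
    merely rescaled signals give -inf.
    """
    ref_energy = sum(x * x for x in ref)
    out_energy = sum(y * y for y in out)
    if ref_energy == 0.0 or out_energy == 0.0:
        raise ValueError("DF level is undefined for a silent window")
    gain = sum(x * y for x, y in zip(ref, out)) / ref_energy  # least-squares gain
    residual = sum((y - gain * x) ** 2 for x, y in zip(ref, out))
    if residual == 0.0:
        return -math.inf
    return 10.0 * math.log10(residual / out_energy)


def windowed_df(ref, out, fs, window_s=0.4):
    """DF level per non-overlapping window (one diffrogram column each) and their median.

    Both signals are assumed to be time-aligned already; digitally silent
    windows are skipped.
    """
    n = int(round(window_s * fs))
    levels = []
    for start in range(0, min(len(ref), len(out)) - n + 1, n):
        r, o = ref[start:start + n], out[start:start + n]
        if any(r) and any(o):
            levels.append(df_level(r, o))
    return levels, statistics.median(levels)
```

For example, a 50Hz tone with an added 130Hz component 60dB below it gives about -60dB in every window, while `df_level(ref, ref)` returns -inf, the value the diffrograms draw in grey.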

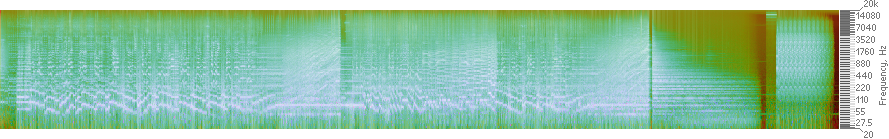

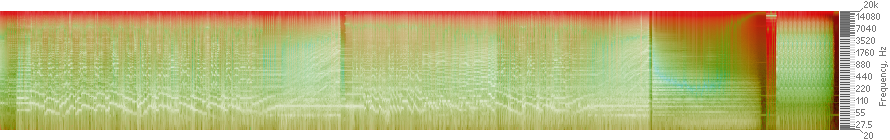

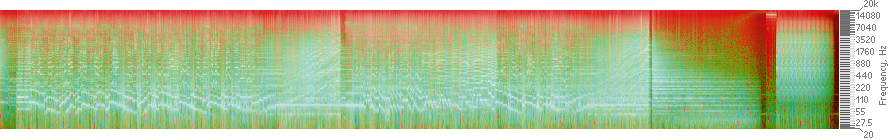

Degradation of the signal magnitudes looks slightly better:

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Mg-92.3221(-80.4647)-24.1157_v3.3.png

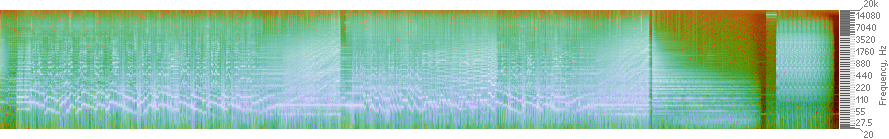

The degradation of the phases is much higher:

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Ph-56.9230(-23.4586)-4.5759_v3.3.png

The examples below should make such diffrograms easier to understand.

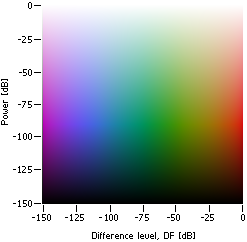

DF levels and signal energy are encoded according to this (algorithmically defined) color map:

Maximum brightness (white) corresponds to the power of a full-scale square wave; minimum brightness (black) corresponds to -150dBFS.
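The brightness scale can be sketched as below. The linear-in-dB mapping is our assumption, not the author's stated algorithm, and so is the reference level: the full-scale square wave is taken to read about +3.01dBFS under the AES17 convention, where 0dBFS is the RMS level of a full-scale sine. The actual color map may use a different curve; `brightness` is a hypothetical helper.

```python
import math

# Assumed reference: a full-scale square wave has mean square 1.0, a full-scale
# sine 0.5, so the square wave sits about +3.01dB above 0dBFS (AES17 convention).
SQUARE_FS_DB = 10.0 * math.log10(1.0 / 0.5)
BLACK_DB = -150.0  # minimum brightness, as stated in the post


def brightness(level_dbfs):
    """Map a band energy in dBFS to brightness in [0, 1] (hypothetical linear-in-dB map)."""
    t = (level_dbfs - BLACK_DB) / (SQUARE_FS_DB - BLACK_DB)
    return min(1.0, max(0.0, t))
```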

All computations are performed within the region of interest, 20Hz-20kHz, regardless of the signal's sample rate.
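One way to keep the analysis band fixed at 20Hz-20kHz whatever the sample rate is to select FFT bins by frequency rather than by index. The sketch below assumes a plain FFT over each 400ms window (the post does not say how diffrogram33 does it); `roi_bins` is a hypothetical helper.

```python
import math


def roi_bins(fs, n_fft, f_lo=20.0, f_hi=20000.0):
    """Indices of FFT bins whose frequency k * fs / n_fft lies in [f_lo, f_hi].

    The upper index is also capped at Nyquist (n_fft // 2) for low sample rates.
    """
    k_lo = math.ceil(f_lo * n_fft / fs)
    k_hi = min(math.floor(f_hi * n_fft / fs), n_fft // 2)
    return range(k_lo, k_hi + 1)
```

With a 400ms window the bin spacing is 2.5Hz at any sample rate, so a 44.1kHz file (17640-point FFT) and a 192kHz file (76800-point FFT) map onto the same 7993 bins, and everything above 20kHz in the high-rate file is ignored.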

Because energy is shown per frequency sub-band rather than per frequency, diffrograms of white noise are slightly brighter towards the top:
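The reason for this gradient can be checked with a short calculation. White noise has a flat power spectral density, so the energy in a fractional-octave band grows with the band's width in Hz (about +3dB per octave), while pink noise (PSD proportional to 1/f) puts the same energy into every fractional-octave band. The sketch assumes ideal noise spectra and base-2 band edges; `band_energy_db` is a hypothetical helper.

```python
import math


def band_energy_db(fc, fraction=12, noise="white"):
    """Relative energy (dB) in a 1/fraction-octave band centered at fc.

    White noise: flat PSD, so band energy is proportional to bandwidth in Hz.
    Pink noise: PSD ~ 1/f, so band energy is the integral of 1/f, ln(f_hi / f_lo),
    which is the same for every band of a given fraction.
    """
    half_band = 2.0 ** (1.0 / (2 * fraction))
    lo, hi = fc / half_band, fc * half_band
    if noise == "white":
        energy = hi - lo
    elif noise == "pink":
        energy = math.log(hi / lo)
    else:
        raise ValueError(f"unknown noise type: {noise}")
    return 10.0 * math.log10(energy)
```

Across the roughly 10 octaves from 20Hz to 20kHz, the top white-noise bands carry about 30dB more energy than the bottom ones, while the pink-noise bands stay level.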

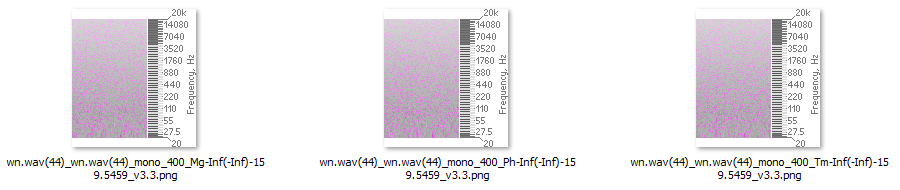

These are magnitude, phase and time diffrograms computed for a white noise signal (20Hz-20kHz, -10dBFS, 30s, 44.1kHz, 16-bit). Grey indicates DF = -Inf. Diffrograms of pink noise have equal brightness along the vertical dimension.

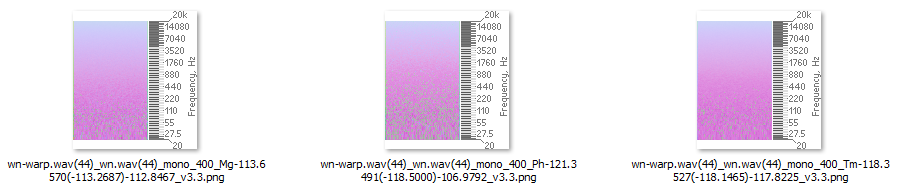

The diffrograms above were computed using the same signal as both reference and output; in other words, both signals were perfectly time-aligned and DF levels were computed as is. In practice, output signals usually have small time and pitch shifts, which are corrected by the time-warping mechanism of the diffrogram33 function. Below are the same diffrograms computed with time warping:

These diffrograms show the inaccuracy of the time-warping mechanism, which increases with frequency. As the most complex signal, white noise is the worst case for time warping; all other signals are corrected more accurately, and the accuracy can be increased further at the expense of computation time. Time warping makes it possible to compare signals at different sample rates and with arbitrary time/pitch shifts. All examples with analogue signals in this presentation were time-warped.
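For readers new to alignment, the simplest form of the idea is sketched below: estimating a constant integer-sample delay by cross-correlation and trimming both signals so they line up before DF levels are computed. This is a deliberately simpler technique than the time warping described above; it does not correct pitch shifts, drift or sample-rate differences, and `estimate_delay`/`align` are hypothetical helpers, not part of diffrogram33.

```python
def estimate_delay(ref, out, max_lag):
    """Integer-sample lag in [-max_lag, max_lag] that maximizes the
    cross-correlation sum(ref[i] * out[i + lag]); positive means `out` lags `ref`."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, x in enumerate(ref):
            j = i + lag
            if 0 <= j < len(out):
                score += x * out[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def align(ref, out, lag):
    """Trim both signals so that out[i + lag] lines up with ref[i]."""
    if lag >= 0:
        out = out[lag:]
    else:
        ref = ref[-lag:]
    n = min(len(ref), len(out))
    return ref[:n], out[:n]
```

The brute-force search costs O(N x lags), which is fine for short excerpts; real tools typically use FFT-based correlation and, as the post notes, much more elaborate warping.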

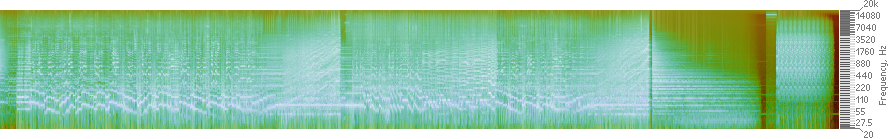

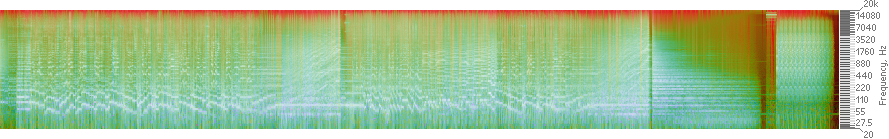

Let's return to the portable players and use diffrograms to compare Hugo 2 with three other devices: the Apple dongle (A1749), Questyle QP2R and HiBy FC3:

FC3-05.wav(192)_ref5.wav(44)_mono_400_Tm-86.3888(-81.0615)-20.6228_v3.3.png

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Tm-89.3474(-76.8128)-21.6638_v3.3.png

QP2R-05.wav(192)_ref5.wav(44)_mono_400_Tm-59.7192(-42.9632)-6.5048_v3.3.png

A1749-05.wav(192)_ref5.wav(44)_mono_400_Tm-51.1570(-38.3077)-8.7829_v3.3.png

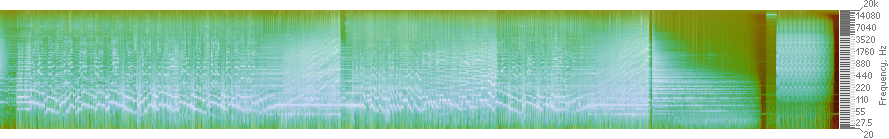

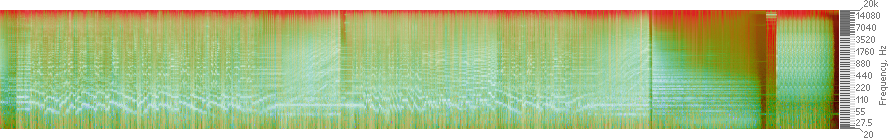

Corresponding magnitude diffrograms:

FC3-05.wav(192)_ref5.wav(44)_mono_400_Mg-89.2874(-83.5085)-23.0726_v3.3.png

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Mg-92.3221(-80.4647)-24.1157_v3.3.png

QP2R-05.wav(192)_ref5.wav(44)_mono_400_Mg-74.0361(-56.3325)-10.7843_v3.3.png

A1749-05.wav(192)_ref5.wav(44)_mono_400_Mg-70.6177(-53.3802)-11.8761_v3.3.png

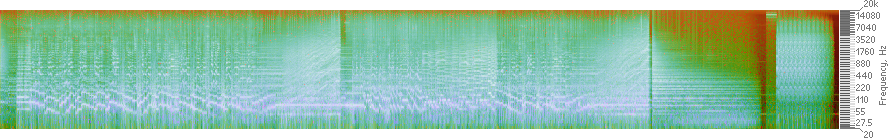

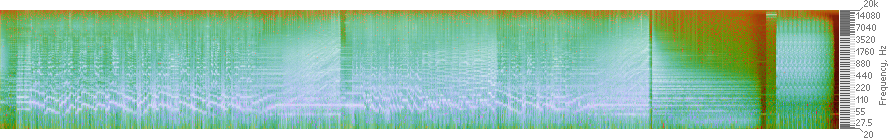

And their phase diffrograms:

FC3-05.wav(192)_ref5.wav(44)_mono_400_Ph-61.4849(-23.8133)-3.7251_v3.3.png

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Ph-56.9230(-23.4586)-4.5759_v3.3.png

QP2R-05.wav(192)_ref5.wav(44)_mono_400_Ph-15.3690(-4.2380)+0.1039_v3.3.png

A1749-05.wav(192)_ref5.wav(44)_mono_400_Ph-13.5322(-3.3230)-0.2530_v3.3.png

According to these diffrograms, Chord Hugo 2 and HiBy FC3 reproduce this signal with almost the same accuracy; QP2R and the dongle are far behind.
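Since each diffrogram's file name carries its median DF level in brackets, the comparison can also be tabulated mechanically. The Python sketch below parses the twelve file names listed above and ranks the devices by median DF level (lower means less degradation). The regular expression and helper names are our own assumptions; only the bracketed value is interpreted, because the post does not define the two numbers around it.

```python
import re
from collections import defaultdict

# Hypothetical pattern: DUT prefix, view (Tm/Mg/Ph), then x(median)y before "_v".
NAME_RE = re.compile(
    r"^(?P<dut>[^-]+)-.*_(?P<kind>Tm|Mg|Ph)[-+]\d+\.\d+"
    r"\((?P<median>[-+]?\d+\.\d+)\)[-+]\d+\.\d+_v"
)

NAMES = [
    "FC3-05.wav(192)_ref5.wav(44)_mono_400_Tm-86.3888(-81.0615)-20.6228_v3.3.png",
    "Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Tm-89.3474(-76.8128)-21.6638_v3.3.png",
    "QP2R-05.wav(192)_ref5.wav(44)_mono_400_Tm-59.7192(-42.9632)-6.5048_v3.3.png",
    "A1749-05.wav(192)_ref5.wav(44)_mono_400_Tm-51.1570(-38.3077)-8.7829_v3.3.png",
    "FC3-05.wav(192)_ref5.wav(44)_mono_400_Mg-89.2874(-83.5085)-23.0726_v3.3.png",
    "Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Mg-92.3221(-80.4647)-24.1157_v3.3.png",
    "QP2R-05.wav(192)_ref5.wav(44)_mono_400_Mg-74.0361(-56.3325)-10.7843_v3.3.png",
    "A1749-05.wav(192)_ref5.wav(44)_mono_400_Mg-70.6177(-53.3802)-11.8761_v3.3.png",
    "FC3-05.wav(192)_ref5.wav(44)_mono_400_Ph-61.4849(-23.8133)-3.7251_v3.3.png",
    "Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Ph-56.9230(-23.4586)-4.5759_v3.3.png",
    "QP2R-05.wav(192)_ref5.wav(44)_mono_400_Ph-15.3690(-4.2380)+0.1039_v3.3.png",
    "A1749-05.wav(192)_ref5.wav(44)_mono_400_Ph-13.5322(-3.3230)-0.2530_v3.3.png",
]


def medians_by_kind(names):
    """Map view (Tm/Mg/Ph) -> {DUT: median DF level in dB} parsed from file names."""
    table = defaultdict(dict)
    for name in names:
        m = NAME_RE.match(name)
        if m is None:
            raise ValueError(f"unrecognized file name: {name}")
        table[m["kind"]][m["dut"]] = float(m["median"])
    return table


def ranking(medians):
    """DUTs ordered from the lowest (least degraded) to the highest median DF level."""
    return sorted(medians, key=medians.get)
```

For the time, magnitude and phase diffrograms alike, the order comes out as FC3, Hugo2, QP2R, A1749.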

The resulting df-slides, with full-featured diffrograms for the technical signals (1/6-octave bands) and a histogram of DF levels for two hours of music:

![[df33]Hiby-FC3_LGMA.png](https://www.audiosciencereview.com/forum/index.php?attachments/df33-hiby-fc3_lgma-png.150906/)

![[df33]Chord-Hugo2.png](https://www.audiosciencereview.com/forum/index.php?attachments/df33-chord-hugo2-png.150907/)

![[df33]Questyle-QP2R.png](https://www.audiosciencereview.com/forum/index.php?attachments/df33-questyle-qp2r-png.150908/)

![[df33]Apple-A1749.png](https://www.audiosciencereview.com/forum/index.php?attachments/df33-apple-a1749-png.150909/)

- on average, DF levels of the t-signals are quite consistent with DF levels of the music signal;

- individual t-signals degrade differently; the pattern depends on the particular processing/circuitry used in the playback device.

Such a set of test signals (the music signal in particular) and their DF levels give a comprehensive picture of a device's performance and playback accuracy. Program simulation noise (IEC 60268-1) can be used for express audio testing because it correlates well (in fact, best of these test signals) with a music signal.

... to be continued below

During the last year the df-metric got its further development not the least thanks to partnership between SoundExpert and Hypethesonics projects. We successfully assembled the measurement device, capable of measuring degradation of music signal down to -80dB of Difference level. The device is based on TI PCM4222 evaluation board and shows accurate and stable performance. To this moment we measured 23 portable audio players from different consumer segments - http://soundexpert.org/vault/dpa.html

Another progress was achieved in visualization of df-measurements. The DF parameter can be equally computed in both time and freq. domains. Using freq. domain allows to compute DF levels separately for signal magnitudes and phases and for different freq. sub-bands as well. The full-featured diffrograms computed this way show degradation of a signal in two dimentions - with time using a time window and with frequency using a set of freq. sub-bands. Below is an example of such diffrogram, which uses 400ms time window (=1px) and 1/12 octave bands (1px each). The DUT is Chord Hugo 2, the test track is A Day In The Life, The Beatles:

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Tm-89.3474(-76.8128)-21.6638_v3.3.png

Median df level (dB) for this DUT/track is (in brackets). We can see that the whole audible band is accurately and in full reproduced with some visible degradation only above 15kHz and for the fragments of the signal with low energy.

Degradation of the signal magnitudes looks slightly better:

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Mg-92.3221(-80.4647)-24.1157_v3.3.png

The degradation of the phases is much higher:

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Ph-56.9230(-23.4586)-4.5759_v3.3.png

The examples below will help to understand such diffrograms better.

DF levels and energy of a signal are coded according to this color map (algorithmically defined) :

Max.brightness of colors (white) corresponds to the power of full scale square wave; min.brightness (black) corresponds to -150dBFS.

All computations are performed in the region of interest: 20Hz - 20kHz regardless of a signal sample rate.

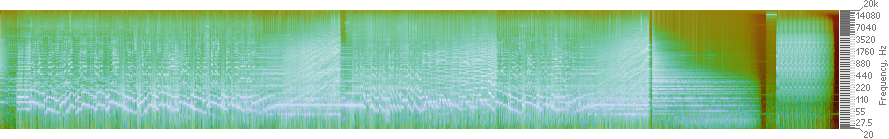

As the energy is indicated not per frequency but for freq. sub-bands, the diffrograms with white nose are slightly brighter towards the top:

These are magnitude, phase and time diffrograms computed for the signal of white noise (20Hz-20kHz, -10dBFS, 30s, 44.1k, 16bit). Grey color indicates DF = -Inf. Diffrograms with pink noise have equal brightness along vertical dimension.

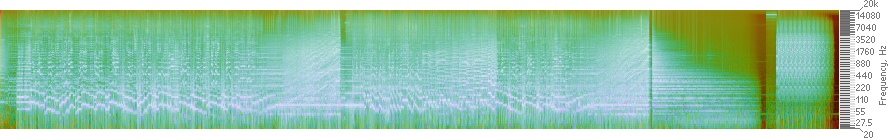

The diffrograms above were computed with the same signal as reference and output. In other words both signals were perfectly time aligned and DF levels were computed as is. In practice the output signals usually have small time and pitch shifts which are corrected with a time-warping mechanism of the diffrogram33 function. Below are the same diffrograms computed with the time-warping:

These diffrograms show inaccuracy of the time-warping mechanism, which increases with frequency. Being the most complex signal, white noise forces the worst case scenario for the time warping, all other signals are corrected with higher accuracy; the latter can be increased at the expense of computation time. Time warping allows to compare signals at different sample rates and with any arbitrary time/pitch shifts. All examples with analogue signals in this presentation were time warped.

Let's return to the portable players and compare Hugo 2 with three other devices using diffrograms - Apple dongle (A1749), Questyle QP2R, HiBy FC3:

FC3-05.wav(192)_ref5.wav(44)_mono_400_Tm-86.3888(-81.0615)-20.6228_v3.3.png

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Tm-89.3474(-76.8128)-21.6638_v3.3.png

QP2R-05.wav(192)_ref5.wav(44)_mono_400_Tm-59.7192(-42.9632)-6.5048_v3.3.png

A1749-05.wav(192)_ref5.wav(44)_mono_400_Tm-51.1570(-38.3077)-8.7829_v3.3.png

Corresponding magnitude diffrograms:

FC3-05.wav(192)_ref5.wav(44)_mono_400_Mg-89.2874(-83.5085)-23.0726_v3.3.png

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Mg-92.3221(-80.4647)-24.1157_v3.3.png

QP2R-05.wav(192)_ref5.wav(44)_mono_400_Mg-74.0361(-56.3325)-10.7843_v3.3.png

A1749-05.wav(192)_ref5.wav(44)_mono_400_Mg-70.6177(-53.3802)-11.8761_v3.3.png

And their phase diffrograms:

FC3-05.wav(192)_ref5.wav(44)_mono_400_Ph-61.4849(-23.8133)-3.7251_v3.3.png

Hugo2-05.wav(192)_ref5.wav(44)_mono_400_Ph-56.9230(-23.4586)-4.5759_v3.3.png

QP2R-05.wav(192)_ref5.wav(44)_mono_400_Ph-15.3690(-4.2380)+0.1039_v3.3.png

A1749-05.wav(192)_ref5.wav(44)_mono_400_Ph-13.5322(-3.3230)-0.2530_v3.3.png

According to the above diffrograms Chord Hugo 2 and HiBy FC3 provide almost the same accuracy of this signal reproduction; QP2R and the dongle are far behind.

Resulting df-slides with full-featured diffrograms for tech. signals (1/6 octave bands) and with histogram of DF levels for two hours of music:

- on average, DF levels of t-signals are pretty consistent to DF levels with the music signal;

- t-signals degrade not accordingly; it depends on particular processing/circuitry used in playback device.

Such set of test signals (the music one in particular) and their DF levels give comprehensive picture of a device performance and its playback accuracy. Program simulation noise IEC 60268-1 can be used for express audio testing due to its good (actually - best) correlation with a music signal.

... to be continued below