Howdy folks, I thought I'd share an interesting study I came across, which has just been published:

www.sciencedirect.com

It's behind an academic paywall, but if anybody wants a PDF copy, send me a message and I'll send it to you (academic publishing is nothing but evil capitalism!). Or search for it using sci cough hub cough.

There have been some discussions on blind testing here before, including a mammoth thread some years ago. Does blind testing make us "blind" to real differences that can be perceived with normal relaxed listening but are difficult to perceive under blind testing protocols? That's the subjectivist claim, but there is little systematic evidence to back it up. This study, though, is probably the most thorough attempt I've seen at looking at different protocols for blind testing, what their limitations may be, and whether some test protocols perform better than others. I'll try to sum it up as best I can.

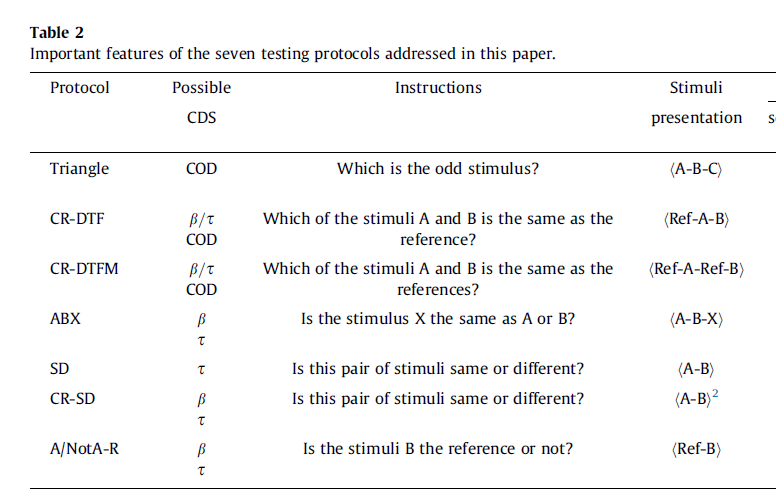

They used 134 test subjects, and compared various ways of running the test:

The common ABX method, where one is asked to say whether X is identical to A or to B - X is often played at the end (or sometimes in the middle)

Same/different approach: Just say whether A and B are identical or not

CR-DTF: Complicated name, but essentially it is quite similar to ABX, only that "X" is always played first, and then one needs to decide whether A or B is similar to X - it's an XAB test, kind of
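To make the difference between the three trial layouts concrete, here is a rough Python sketch based only on my summary above. The function name `make_trial` and the way the stimuli are labelled are my own invention, and the paper's actual procedures may well differ in the details (repeats, randomisation, timing and so on).

```python
import random

def make_trial(protocol, rng):
    """Return (playback_order, correct_answer) for one trial.

    "A" and "B" stand for the two stimuli being compared.
    This is a hypothetical illustration, not the procedure from the paper.
    """
    if protocol == "ABX":
        # Both known stimuli first, the unknown X last.
        hidden = rng.choice("AB")
        return ["A", "B", "X(=" + hidden + ")"], hidden
    if protocol == "XAB":
        # The unknown X first, then the two candidates.
        hidden = rng.choice("AB")
        return ["X(=" + hidden + ")", "A", "B"], hidden
    if protocol == "same-different":
        # Two presentations; the listener only says "same" or "different".
        first, second = rng.choice("AB"), rng.choice("AB")
        return [first, second], "same" if first == second else "different"
    raise ValueError("unknown protocol: " + protocol)

if __name__ == "__main__":
    rng = random.Random()
    for name in ("ABX", "XAB", "same-different"):
        order, answer = make_trial(name, rng)
        print(name, order, "-> correct answer:", answer)
```

In all three layouts of this sketch a listener who is purely guessing is right about half the time, so the protocols differ in what the listener has to hold in memory and in what order, not in the chance level.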

It's a bit complicated, but here are the protocols they tried out:

The test was about comparing recordings of room acoustics through headphones (Sennheiser HD 650): could the listeners perceive the acoustic conditions as different?

So... drumroll... what did they find?

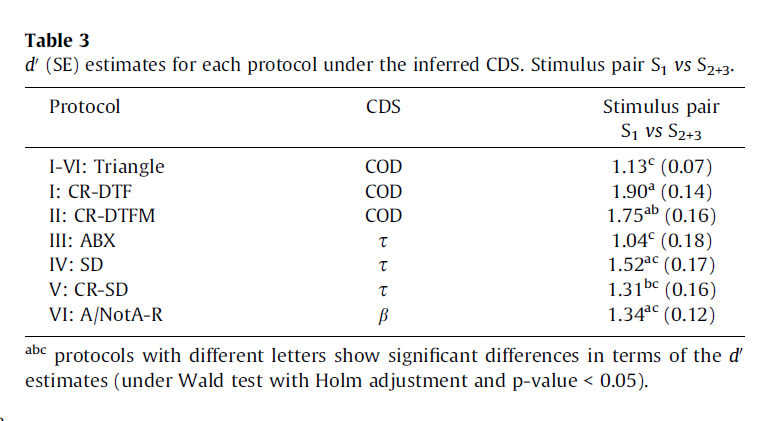

The ABX protocol, which is a common way of doing blind listening in audio, was actually the worst protocol for discerning differences. Same/different testing did better. But the best results were with the CR-DTF test, i.e. the "XAB" test.

I'm posting the table with the results as well (higher score is better):

I found this very interesting, as the ABX method has long been the most common method of doing blind testing in audio. I was surprised that the same/different test did not score the highest - that's what I would have assumed initially. The highest scoring method was the CR-DTF or XAB test!

I'm not sure if it's possible to do blind testing in foobar2000 using the CR-DTF or XAB format today?
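Whatever tool ends up running the trials, a hand-run XAB (or ABX) session can still be scored with a simple exact binomial test, since a guessing listener has a 50% chance per trial. Below is a minimal sketch of that; the function name `p_value_at_least` is made up for illustration, and this is the generic textbook calculation, not the "operational power" measure used in the paper.

```python
from math import comb

def p_value_at_least(correct, trials, chance=0.5):
    """One-sided exact binomial p-value.

    Probability of getting `correct` or more answers right out of
    `trials` if the listener is only guessing with success rate `chance`.
    """
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )

if __name__ == "__main__":
    # Example: 12 correct answers in a 16-trial session.
    print(round(p_value_at_least(12, 16), 4))  # 0.0384
```

So 12 out of 16 would be unlikely (under 4%) from guessing alone, while a lower score on a short session usually is not enough to say much either way.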

Anyways, maybe this can be of interest to some of you. If any of you smart guys on the forum who actually know something about blind testing procedures (unlike me) read the whole paper, I would be interested in hearing what you think of the study.

Listening tests in room acoustics: Comparison of overall difference protocols regarding operational power

Listening tests are key to evaluate the perception of difference between confusable auditory stimuli, among other purposes. In room acoustics, the use…
