Last Christmas I got emotionally weak and paid for Spotify Premium (limited offer: 3 months at a reduced price). Being a person who wants to actually "really use something" once I've paid for it (I don't want to say "extort"), I must admit I skipped quite a bunch of songs during that quarter (several dozen GB of traffic when I checked back).

I was also in the mood to hunt for some hi-fi/reference/test/showcase tracks (call them what you like), so I added several playlists with this orientation. Well, I found many interesting songs and artists, but it also got somewhat frustrating because many notorious songs kept reappearing in these playlists.

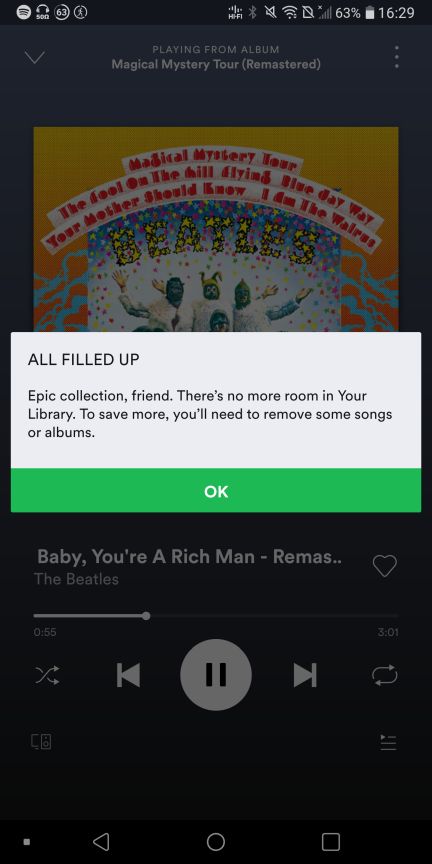

That being said, I missed one particular feature that would have eased my situation: the option to enable some kind of tagging (either automatic or manual) of songs that have already been played. I know, it could also get counterproductive. Imagine you forget to hit pause and leave Spotify playing "unattended" for several hours; it would tag quite a lot of content you haven't actually listened to. Well, this could be cured, for example, by applying the "autotagging feature" only to manually skipped songs. OK, enough of my minority wishes.

I had to play with the cards I had been given. So there was only one convenient way to achieve my aim: manually tag every "once listened" song with the heart button (which remembers your choice within your account and also adds every tagged song to your personal "Liked" playlist). So I was playing music, enjoying a huge music database and happily "liking" songs, when all of a sudden this message appeared:

I thought: wtf is this? In this age of "infinite" storage and massive datacenters, the crazy Swedes set some strange limit? I checked the numbers and the barrier seemed to be at 10,000 items (cumulative for both liked songs and albums). Well, as my subscription expired, I also needed a little rest from this frenetic like/skip, skip/skip marathon.

So I returned to some laid-back listening of my local music and didn't use Spotify for quite some time. Recently I looked there again for some new content and voilà, the limitation is gone. I don't know if they only raised the bar or made it "unlimited", like Gmail.

OK, that's all regarding my funny experience with this service.

Oh, for God of SNR's sake! I just realized I'm writing this in the review section. OK, here is a quick list of pros and cons:

good:

- consistent sound quality throughout the content (my subjective feeling; the choices are auto/low/normal/high/very high, with very high being exclusive to the Premium subscription; as for me, I'm happy with "high" as well)

- quite pleasant user interface (black style, good for the eyes and for OLEDs as well), though obviously you need some time to get used to its logic

- a reasonable number of options in settings (crossfade/gapless/automix/explicit content/volume normalization/autoplay/device connection/car mode/cellular download/etc.)

- a number of ways to discover new content (Daily Mix, Release Radar, Discover Weekly, new album releases, "similar" artists, local playlists, best of artists, "radio"...) - this gets more and more personalized to your taste; I guess it is quite similar to other streaming services

- very usable service even in non-premium mode if you can live with:

/ ads

/ limited skipping (I think 6 skips per hour or so)

/ listening to full albums/playlists in shuffle mode only (when listening to a full album it inserts "random" songs from other artists as well)

- in Premium you can download content and listen offline (limited only by your storage, I guess)

neutral (decide for yourself):

- limited "social" aspect - you can browse and search user playlists, but not contact their owners (there is an option to link your Facebook account, though I have not tried it as I don't have one - I am already on ASR, I can't be everywhere). After FB linking I can imagine it opens up further possibilities

- instead of just a heart button I'd like to see a 5-star rating like in iTunes (OK, this is only my subjective deviation from the crowd, I know...)

- reasonable price with regular limited offers/discounts/family plans

bad:

- I don't listen to the local music scene (shame on me), and in non-premium mode it keeps playing me ads for some crap local playlists packed with brand-new mainstream shite...

- during "rush hours" it can get a little laggy (less responsive)

That's all, folks! If you feel the "review" part is not detailed enough, this guy has some more useful points to add:

PS: If I can't sleep I may add some more thoughts to this post or fix grammar mistakes (that was a joke, life is too short for that)
