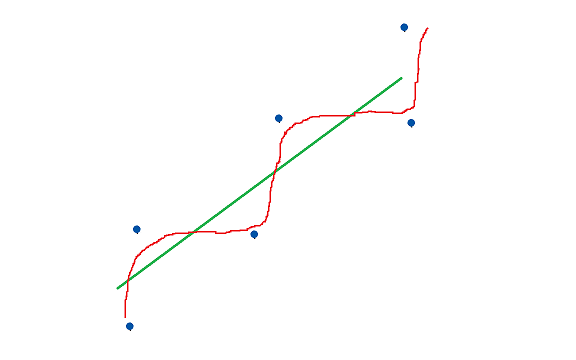

While Dr. Olive makes good points about the speakers being different and the controls not being equivalent between studies, a 0.99 correlation on a sample size of 13 with 5 parameters screams overfitting.

Overfitting Regression Models: Problems, Detection, and Avoidance

Overfitting regression models produces misleading coefficients, R-squared, and p-values. Learn how to detect and avoid overfit models. (statisticsbyjim.com)

Which is why I originally asked @preload about it. I wanted to know how much the smaller sample size affected things. He's good with stats, but he didn't really believe me about the 0.99 correlation thing (understandably so). I'm especially interested in how well the model can predict monopole speaker comparisons where bass extension is of little importance (i.e. using subs), and I know he's really good at that kinda stuff.
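For a rough sense of how much a sample of 13 can flatter a fit, here is a back-of-the-envelope sketch. It assumes "5 parameters" means 4 predictors plus an intercept, and it uses two textbook results: the adjusted R-squared formula, and the fact that with normally distributed noise and predictors unrelated to the response, the expected R-squared is p / (n - 1). The function names are mine, and none of this reproduces the actual study's analysis.

```python
def adjusted_r2(r2: float, n: int, p: int) -> float:
    """Adjusted R-squared for n observations and p predictors (intercept not counted)."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


def expected_null_r2(n: int, p: int) -> float:
    """Expected R-squared when the p predictors are pure noise (normal errors, intercept fitted)."""
    return p / (n - 1)


n = 13      # speakers in the smaller study
p = 4       # assumed: 4 predictors + intercept = 5 parameters
r = 0.99    # reported correlation

r2 = r ** 2
adj = adjusted_r2(r2, n, p)
null = expected_null_r2(n, p)

print(f"R^2            = {r2:.4f}")
print(f"adjusted R^2   = {adj:.4f}")
print(f"E[R^2] if the predictors were pure noise = {null:.3f}")
```

Under these assumptions, about a third of the variance would be "explained" on average even if the predictors meant nothing, which is why the small n deserves a second look. It does not show on its own that the 0.99 is spurious.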

13 samples for 4 variables does seem quite small, but it's important to point out that it's not 13 comparisons, but 13 speakers that are all compared against each other many times with many different combinations.
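To give a feel for how many head-to-head matchups 13 speakers can generate, this quick sketch counts the distinct pairs. It is only the number of possible pairings, not the number of trials or combinations actually run in the study, which I don't know.

```python
from math import comb

n_speakers = 13
n_pairs = comb(n_speakers, 2)  # distinct unordered pairs of speakers

print(f"{n_speakers} speakers -> {n_pairs} possible pairwise matchups")
```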

It may be that the sample size of the first study doesn't need to be as large, since it's much more controlled, both in terms of bass extension and the type of speaker (only monopole (except 1?)). The larger study does bring in a larger sample size, but it also introduces a couple of extra, really important variables, namely widely different bass extensions and speakers of completely different types (panels, open-baffle dipoles, etc.).

While the sample size of the larger study is nice, I have a hunch that it's a mistake to try to come up with a single model to predict preferences for all types of speakers. My hunch is that trying to do so is lowering the correlation, and it might be easier and/or better to try separate models for separate speaker types.
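Here is a toy illustration of that hunch, with completely made-up numbers. It assumes a single hypothetical `metric` that relates to preference in opposite directions for two speaker types, which is a deliberately extreme case. Real speaker data would be far messier, and nothing here comes from either study.

```python
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical measurement, same values for both groups
metric = [1, 2, 3, 4, 5]

# Made-up preference scores: each type follows its own straight line
monopole_pref = [2 * m + 1 for m in metric]   # rises with the metric
dipole_pref = [-1 * m + 8 for m in metric]    # falls with the metric

r_mono = pearson(metric, monopole_pref)
r_dipole = pearson(metric, dipole_pref)

# One model for everything: lump both types together
r_pooled = pearson(metric + metric, monopole_pref + dipole_pref)

print(f"monopoles only: r = {r_mono:+.2f}")
print(f"dipoles only:   r = {r_dipole:+.2f}")
print(f"pooled:         r = {r_pooled:+.2f}")
```

In this contrived setup each type is perfectly predictable on its own, while the pooled correlation drops to roughly 0.29. Whether anything like this happens with the real data is exactly the open question.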
