There's been a lot of discussion about DAC linearity measurements in various threads, so I thought I'd throw in my opinions and a few observations to support my thinking. I want to start by thanking Amir, who has generously shared project files, filters, and anything else I've asked for to try replicating what he's doing. For the measurements, I used an APx525 with every bell and whistle (with a major hat tip to AP and AudioXpress for making this wonderful equipment available to me).

The test mule here is a Behringer UMC404HD, which is a combo ADC/DAC with 4 mike preamps, selling for under $100. The focus here should be on what results are gathered and how they're interpreted, which seems to be the gist of the arguments.

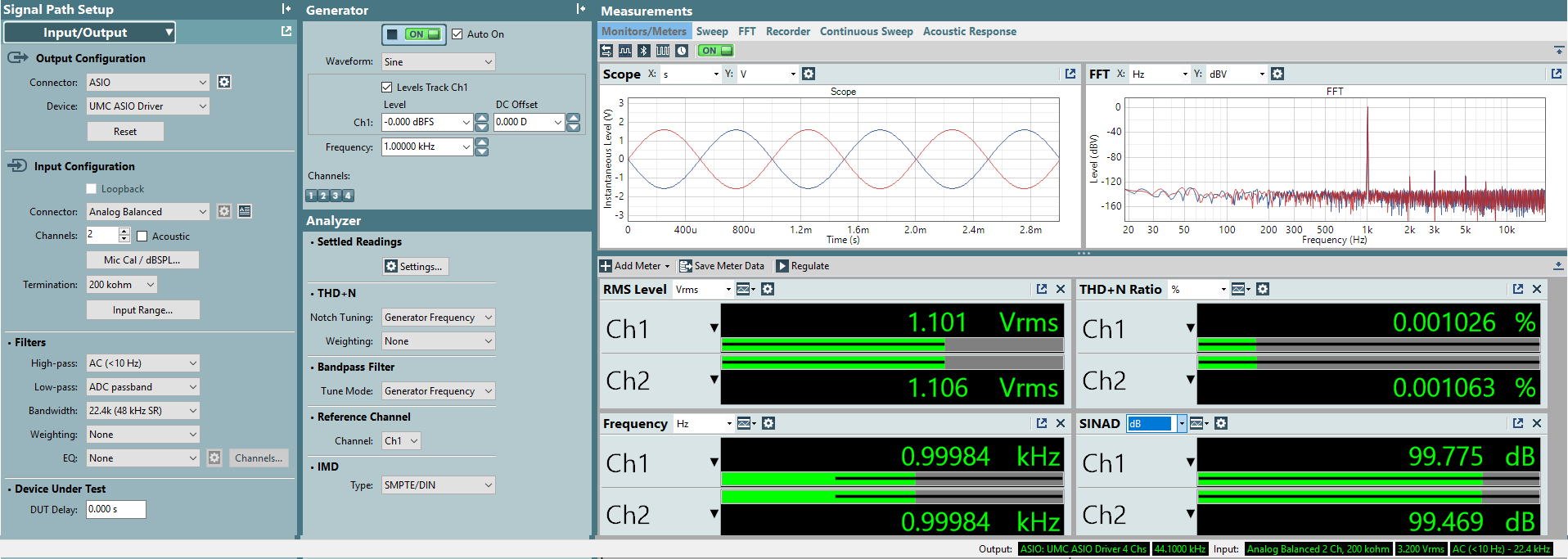

The basic measurements are as shown here:

(Don't mind the phase reversal between channels, that's because I only had two XLR-to-TRS cables and one was wired backward)

A couple things to note: this is at best a 99.5dB S/N device, good enough for CD, not exactly state of the art, though. And the output at full scale is pathetically low; having a reasonably healthy output (3-5V) would probably have squeezed out another 6-10dB of S/N.

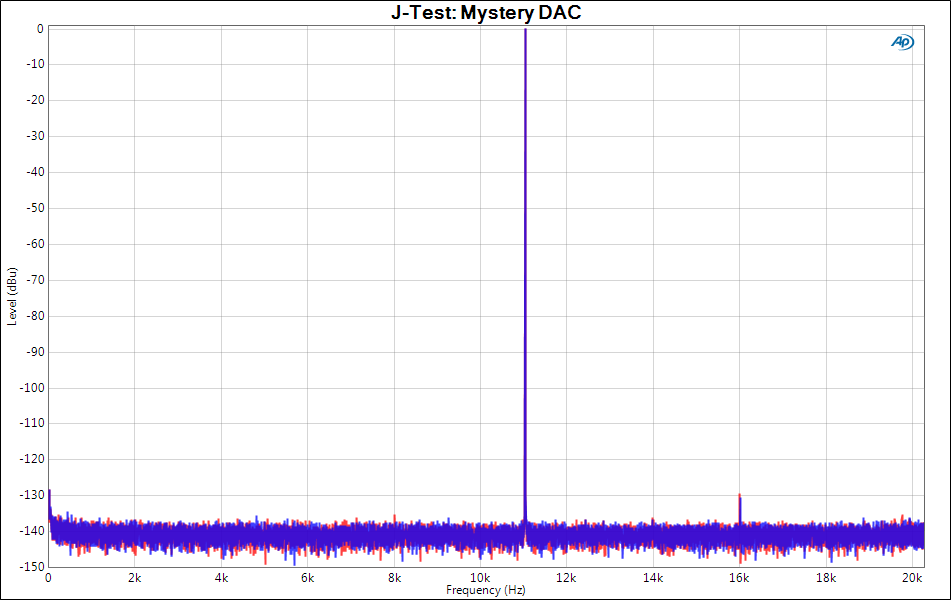

Regarding jitter, I posted the J-Test results in an earlier thread, but here they are again for reference:

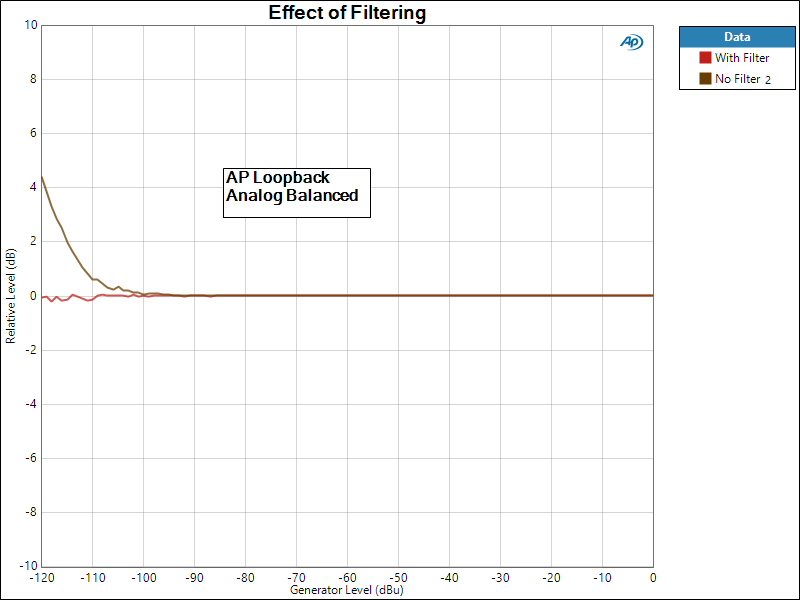

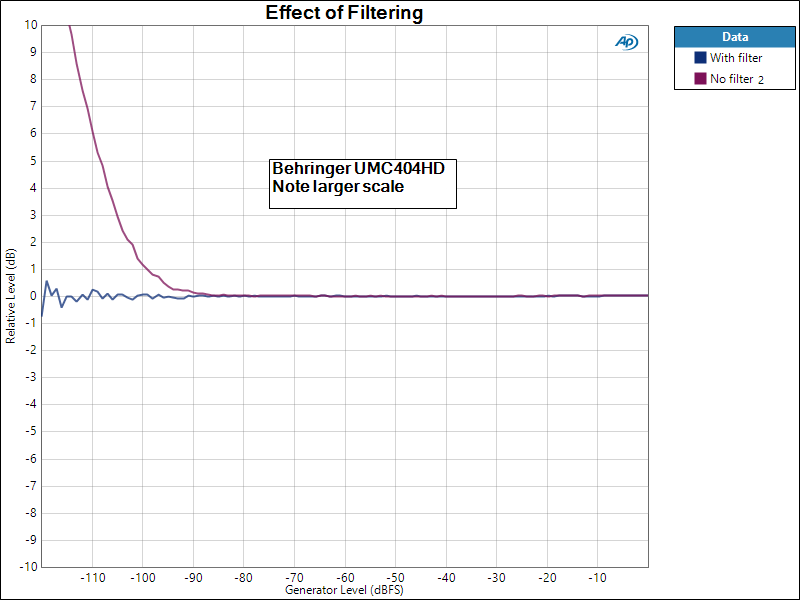

So I think that the linearity stuff won't get overshadowed by other defects. And for purposes of clarity, I thought it might be nice to see what the baseline linearity of the APx525 was. Unfortunately, you can't do exactly the linearity test that you would with a DAC, but you can do an analog loopback, which is certainly going to be a worst-case. I used 0dBu as my reference, so to translate to dBV (which is close to the dBFS for the UMC404HD), subtract about 2.2 dB. I ran this with and without the narrow bandpass filter Amir uses so you can see the effect of the noise floor on this measurement.
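For anyone who wants to check the dBu/dBV offset, here's a minimal Python sketch. It relies only on the standard definitions (0 dBu is about 0.7746 V RMS, 0 dBV is 1 V RMS); the function names are made up for illustration and have nothing to do with the APx software.

```python
import math

# 0 dBu is defined as sqrt(0.6) V RMS (about 0.7746 V); 0 dBV is 1 V RMS.
DBU_REF_VOLTS = math.sqrt(0.6)


def dbu_to_volts(dbu):
    """Convert a level in dBu to volts RMS."""
    return DBU_REF_VOLTS * 10 ** (dbu / 20)


def volts_to_dbv(volts):
    """Convert volts RMS to dBV."""
    return 20 * math.log10(volts)


def dbu_to_dbv(dbu):
    """Convert dBu directly to dBV (a constant offset of about -2.2 dB)."""
    return volts_to_dbv(dbu_to_volts(dbu))


if __name__ == "__main__":
    for level in (0.0, -60.0, -100.0):
        print(f"{level:7.1f} dBu = {dbu_to_dbv(level):8.2f} dBV")
```

The offset is the same at every level, so a point plotted at -100 dBu sits at roughly -102.2 dBV.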

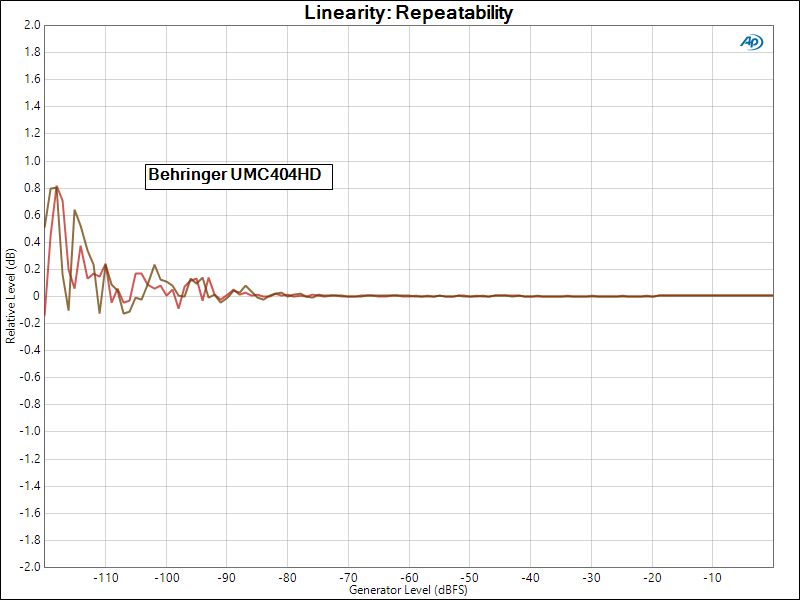

OK, let's kick things off with Amir's Basic Linearity Test, which uses the bandpass filter. I ran it twice in order to get an idea of what the repeatability is:

By Amir's criterion, this loses linearity at about -95dBFS. But is it really becoming nonlinear? Let's take off the bandpass filter:

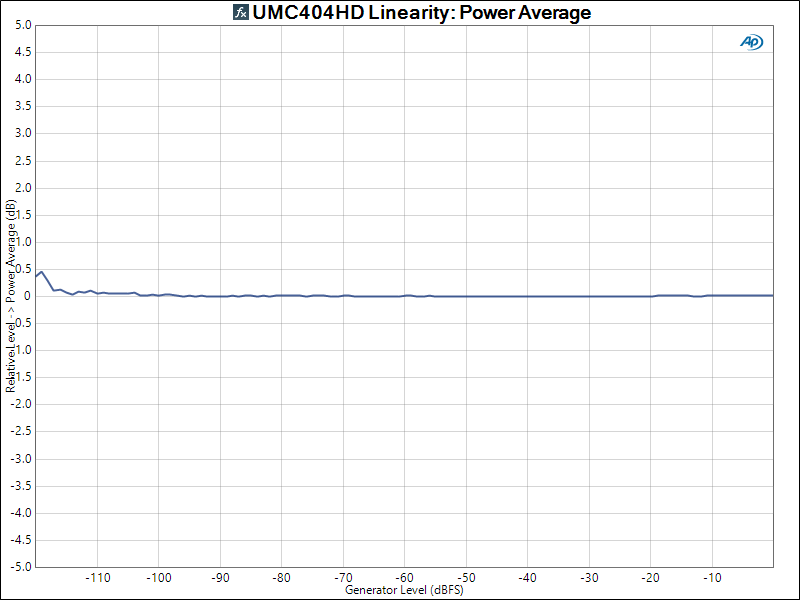

So, it's clear from the last two graphs that the noise floor is a really big deal, as expected in a broadband measurement; you expect its effect to increase at low signal levels. But what about the "linearity" with the filter on? How much is that affected by noise and how much by linearity? Does it matter? First, let's do a power average of multiple runs (16 in this case), which will tend to cancel noise (by a factor of sqrt(N), where N is the number of runs) and bring out whatever nonlinearity is inherent to the DAC.

Looks like the linearity now holds up to better than -116dBFS. And I say "better than" because more averages might reduce the deviation even further. I would have done 64 averages to see if the bump below -116dBFS halved in amplitude, but that's a slow process for old and software-stupid people who can't write macros to let it run unattended. But I think the point is adequately demonstrated: the deviation of the linearity curve in this kind of measurement is noise-dominated, even with the bandpass filter.
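The "64 averages should halve the bump" expectation can be checked with a small simulation. This is only a sketch under stated assumptions: it averages runs sample by sample in sync with the tone (the textbook case where random noise falls as sqrt(N)), uses Gaussian noise, and picks arbitrary levels. It doesn't model how the APx does its averaging.

```python
import math
import random

TONE_PEAK = 0.1   # hypothetical tone amplitude, well below the noise
CYCLES = 20       # whole number of tone cycles per run


def rms(values):
    """Root-mean-square of a sequence."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def residual_noise_rms(n_runs, n_samples=2000, noise_rms=1.0, seed=0):
    """Average n_runs noisy captures of the same tone and return the RMS
    of whatever noise is left after subtracting the known tone."""
    rng = random.Random(seed)
    tone = [TONE_PEAK * math.sin(2 * math.pi * CYCLES * i / n_samples)
            for i in range(n_samples)]
    acc = [0.0] * n_samples
    for _ in range(n_runs):
        for i in range(n_samples):
            acc[i] += tone[i] + rng.gauss(0.0, noise_rms)
    averaged = [v / n_runs for v in acc]
    return rms([a - t for a, t in zip(averaged, tone)])


if __name__ == "__main__":
    for n in (1, 16, 64):
        r = residual_noise_rms(n)
        print(f"{n:3d} runs: residual noise {20 * math.log10(r):6.1f} dB re 1 run's noise RMS")
```

Going from 16 to 64 runs is four times the averages, so the leftover noise should drop by about a factor of two (roughly 6 dB), while the tone itself is untouched.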

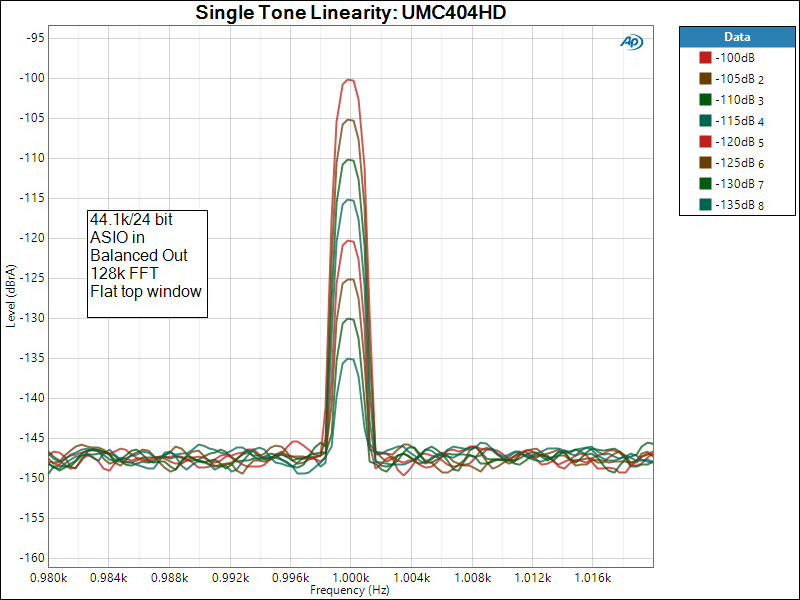

The linearity vs noise thing really came to mind when Amir showed spectra of a single tone taken at lower and lower levels from one particularly questionable DAC. At a certain point, reducing the level at the DAC didn't result in the analog output signal getting smaller, i.e., the transfer function breaks into a horizontal line. That is something I would call a nonlinearity!

So juuuust for fun, I used that trick to see how well the DAC tracks at low levels. And whaddaya know.

32 averages knocks down the noise floor and shows that the tracking of the tone's levels is really excellent.

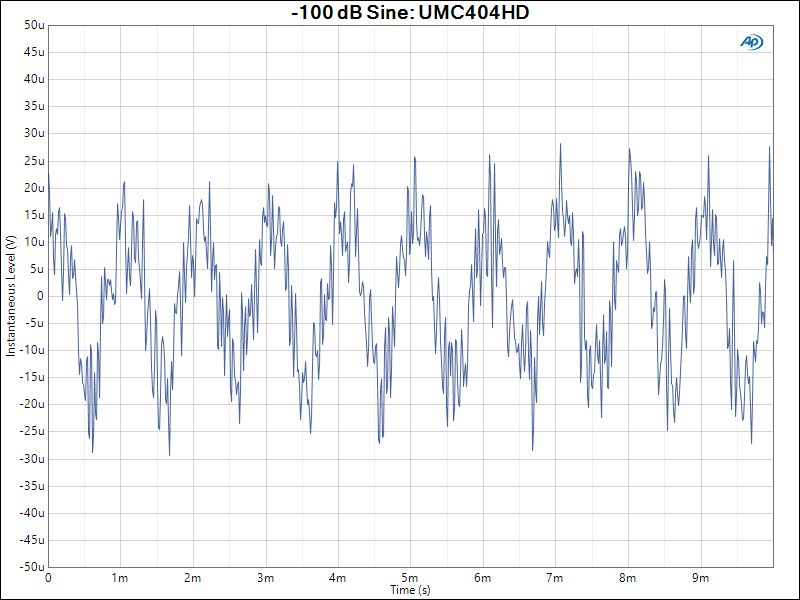

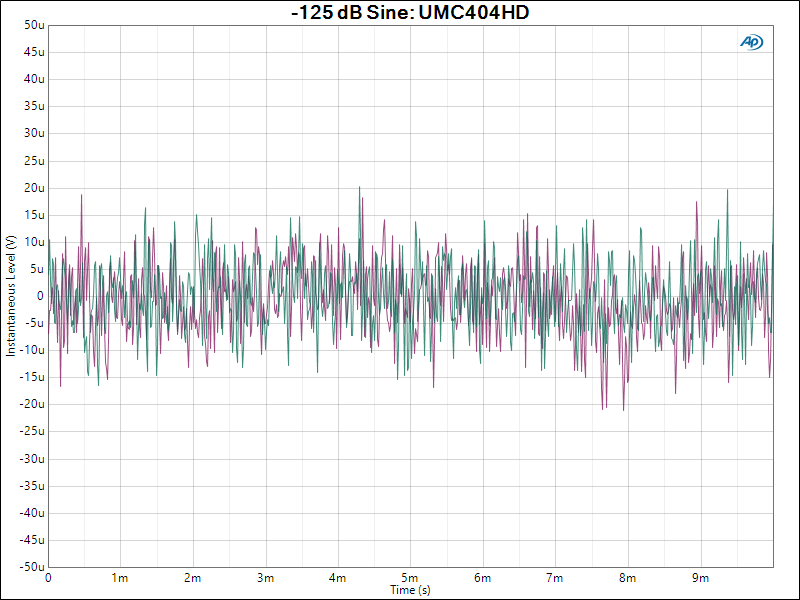

It can be argued: who cares about this? If the noise dominates below -99 dB, the inherent DAC linearity is unimportant. Perhaps so, but it's not hard to demonstrate (try it yourself!) that we can detect tones well below a random noise floor. For example, let's look at a sine wave coming out of the DAC at -100dB:

Still pretty sine-y. So it's not implausible that this would be clearly audible through the noise, assuming your gain is turned up high enough. Let's delve deeper:

It's quite a bit harder to see the sinewave in here, but surprisingly, a sine this small with respect to the noise floor can still be perceived IF the gain is turned up (I proved this to myself at a somewhat higher overall level, headphones, and some fierce concentration). Is that realistic for normal listening? I don't think so, but others may have better ears and listening environments than I do.
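A quick way to convince yourself that a tone can be pulled out from under broadband noise is to look at a single frequency bin. The sketch below is an illustration with made-up numbers (tone 10 dB below the total noise RMS, 8192 samples, Gaussian noise); it says nothing about audibility, only that narrowband analysis finds the tone.

```python
import math
import random

N = 8192                 # samples in the capture (hypothetical)
TONE_BIN = 100           # tone completes exactly 100 cycles in the capture
OFF_BIN = 137            # a bin with noise only, for comparison
NOISE_RMS = 1.0
TONE_RMS = NOISE_RMS * 10 ** (-10 / 20)   # tone 10 dB below the broadband noise
TONE_PEAK = TONE_RMS * math.sqrt(2)


def bin_amplitude(x, k):
    """Estimate the peak amplitude of the component at DFT bin k."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
    return 2 * math.hypot(re, im) / n


rng = random.Random(1)
signal = [TONE_PEAK * math.sin(2 * math.pi * TONE_BIN * i / N)
          + rng.gauss(0.0, NOISE_RMS) for i in range(N)]

tone_est = bin_amplitude(signal, TONE_BIN)
off_est = bin_amplitude(signal, OFF_BIN)

if __name__ == "__main__":
    print(f"true tone peak:       {TONE_PEAK:.3f}")
    print(f"estimate at tone bin: {tone_est:.3f}")
    print(f"noise-only bin:       {off_est:.3f}")
```

The noise power is spread across thousands of bins while the tone lands in one, so the tone bin stands well clear of its neighbors even though the waveform looks like pure hash on a scope.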

The key point I want to make here is that the traditional "linearity" measurement is completely entangled with noise, but some additional measurements can distinguish noise (which at low levels may not be objectionable) from intrinsic linearity (which might well be objectionable). The linearity test that Amir does, and similar ones from hifi magazines, is a necessary test, a good overall look, but a few extra measurements really tease out how a DAC is acting, how (and if!) it can be improved, and honestly don't take much more time.
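To put a number on the entanglement: a level meter reading a tone plus uncorrelated noise sees the power sum of the two, so a perfectly linear DAC still shows a rising "linearity error" as the tone approaches the in-band noise. Here's a minimal sketch of that arithmetic; the -110 dB in-band noise figure is a hypothetical value for illustration, not a measurement from this DAC.

```python
import math


def measured_level_db(tone_db, noise_db):
    """Level a meter reads when a tone and uncorrelated noise add in power."""
    return 10 * math.log10(10 ** (tone_db / 10) + 10 ** (noise_db / 10))


def deviation_db(tone_db, noise_db):
    """Apparent 'linearity error' caused purely by the noise."""
    return measured_level_db(tone_db, noise_db) - tone_db


if __name__ == "__main__":
    IN_BAND_NOISE_DB = -110.0   # hypothetical noise inside the bandpass filter
    for tone in (-60.0, -90.0, -100.0, -110.0, -120.0):
        err = deviation_db(tone, IN_BAND_NOISE_DB)
        print(f"tone {tone:7.1f} dB -> reads {err:+6.2f} dB high")
```

When the tone and the in-band noise are equal, the reading is about 3 dB high with no nonlinearity anywhere in the chain, which is why narrowing the bandwidth or averaging moves the apparent "linearity limit" downward.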

All things being equal, I think it's better to have an intrinsically linear DAC and then work on reducing the noise floor, but that's just my own prejudice. Oh and by the way, other than the low output, for $99 this DAC is pretty good.
