I have not had the pleasure to test them.

I would be very curious to see how the MBL omnis would have scored in the blind tests!

There you are, the man said it. Do not focus on the score. Stop treating it as gospel; stop sorting speakers according to it alone.

You shouldn't focus on preference scores. It was only intended to help naive people interpret spinorama measurements and reduce them to a single number.

Stereo is 1950s, and the research focus now is immersive audio.

There is no difference between the first two options of the poll. "It's a good metric that helps, but that is all" means it's 100% valuable.

This whole thread is an exercise in quixotism. The OP is slaying dragons that just aren't there.

Controlling variables in experiments is important for drawing valid conclusions about the measured effects and their causes. Designing tests and measurements to provide sensitive, discriminating and reproducible results is also important. Mono tests provide that. Stereo tests do not.

That alone only makes me more convinced that you took the easy way by choosing mono. You eliminated the variability of stereo ratings, but in doing that you're throwing the baby out with the bathwater.

I have given 3 reasons for that: possible incorrect positioning of the speaker relative to boundaries, possible incorrect listening axis, possible incorrect position of the listener.

I can see several other problems with listening to a single speaker located left or right.

I have seen photos and schematics of several people performing listening tests simultaneously. They can't all be sitting on axis, or in the best acoustical place in the room.

I have performed in-room measurements with both speakers and mic/listener at different locations that I can upload if you wish.

They show that woofer distance to front wall, to side wall, to floor, to ceiling and to listener will affect the response in the bass and sub-bass.

Not taking that into account is a mistake.
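As a rough illustration of the boundary effect described above, here is a minimal sketch of the textbook first-cancellation estimate for a single reflection. It is not taken from the measurements mentioned in this post. It assumes a speed of sound of 343 m/s, an ideal rigid wall directly behind the woofer, and a listener far enough away that the reflected path is longer than the direct path by about twice the woofer-to-wall distance. The function names and the example distances are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees C


def first_null_hz(path_difference_m, speed_m_s=SPEED_OF_SOUND_M_S):
    """Lowest frequency at which a reflection arrives half a wavelength late.

    The direct and reflected sound cancel most strongly when the extra
    path length equals half a wavelength, i.e. f = c / (2 * difference).
    """
    if path_difference_m <= 0:
        raise ValueError("path difference must be positive")
    return speed_m_s / (2.0 * path_difference_m)


def front_wall_null_hz(woofer_to_wall_m):
    """First null for a wall directly behind the woofer (extra path ~ 2 * d)."""
    return first_null_hz(2.0 * woofer_to_wall_m)


# Hypothetical woofer-to-front-wall distances in metres.
example_distances_m = [0.25, 0.5, 1.0]
example_nulls_hz = [front_wall_null_hz(d) for d in example_distances_m]

for d, f in zip(example_distances_m, example_nulls_hz):
    print(f"{d:.2f} m from the front wall: first null near {f:.1f} Hz")
```

Under these assumptions, moving the woofer further from the wall pushes the first null lower in frequency. A real room adds side walls, floor, ceiling and room modes on top of this, so the sketch only shows why the distances matter, not what any particular room will measure.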

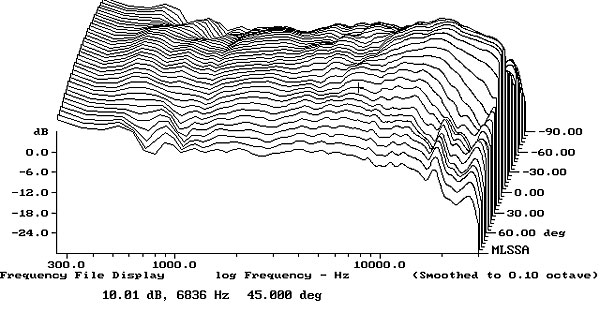

The most obvious example would be Danish manufacturer Dali, whose speakers are designed to be positioned with no toe-in. When listened to on axis they will sound "coloured" (see cursor position at 45° below):

https://www.stereophile.com/content/dali-rubicon-8-loudspeaker-measurements

Dipoles are designed to make use of the front wall, corner horns must go in corners, on-wall speakers on walls.

Again, you're taking the easy way by removing a variable. I understand that this makes the testing more feasible, but it also makes it invalid.

That deals with my earlier comment regarding people listening off axis.

You are correct. "Tilting at windmills" is the more appropriate phrase.

You are confused, no hay dragones in Don Quixote...

If this was the contention at the start of this thread, it would have been a very brief set of mutual agreements - nobody is advocating using the predicted preference score as the sole assessment element of speakers. However, what it actually started with was...

There you are, the man said it. Do not focus on the score. Stop treating it as gospel; stop sorting speakers according to it alone.

...which is incorrect. The preference rating has a meaningful correlation with speaker preference. It's not sufficiently predictive to be the sole parameter we assess. It is, however, also not meaningless.

The more I look into it, the less I can trust the Harman speaker quality score. IMHO it is a totally meaningless metric. I know the background, I read all the papers even before Harman was involved, and their patent. However, it works so badly that IMHO it is a meaningless metric.

This is simply the nirvana fallacy. The score is somewhat valuable. Its imperfection does not make it without value - which is good, because all the models, explicated (like the predicted preference rating) or internal to our heads (we're assessing these measurements ourselves with some conception of how they correlate with perception, aren't we?), are flawed.

75.8% of the (naïve) voters say that they value the score. I'll remember that next time someone calls subjectivists gullible or biased...

BTW, this is what that room looks like now. We have moved on from mono and stereo.

Good to know.

Does Harman leave the absorbers on the rear wall in the picture in place for their research? They look to be 2" thick? And the half-circular ones are perhaps BAD Arcs?

View attachment 192060

View attachment 192061

Agreed. HARMAN does not use the metric. That is all you need to know. It is a metric that is helpful but it does not tell all.

There is no difference between the first two options of the poll. "It's a good metric that helps, but that is all" means it's 100% valuable.

This whole thread is an exercise in quixotism. The OP is slaying dragons that just aren't there.

We've tested both large and smaller Martin Logan dipoles in the same room and got similar results. The listening test results are completely predictable from the anechoic spinorama measurements: poor octave-to-octave balance and resonances that are visible in many of the curves. The speaker is very directional and the balance changes as you move off-axis. It seems to sound worse as you sit more on-axis (it is too bright or harsh in the upper mids), but even off-axis it sounds colored.

Thank you for saying that. I (and others) have tried to tell people that my money is on you not using that score and I am glad you do not.

Agreed. HARMAN does not use the metric. That is all you need to know. It is a metric that is helpful but it does not tell all.

Curious -- what's with the center rear surround? I was under the impression that a speaker placed directly behind the listener could trigger front/back reversal and basically sound like it was in front.

BTW, this is what that room looks like now.

Really though if you smooth that rating out and take it lightly then a casual buyer is going to get a decent speaker. Audiofools like you & me are going to be digging much much deeper.

Of course, testing outdoors doesn't test the off-axis response because there are no reflections. It solves loudspeaker positional biases, but some people will argue the results cannot be extrapolated to results in rooms.

I would add to the claim that a speaker that tests well in mono will test well in stereo by saying that a speaker that listens well in mono will listen well in stereo.

I do a lot of testing and listening in mono, stereo, and LCR using three identical speakers.

In addition, I test and listen to mono outdoors very frequently, and stereo occasionally.

Here's a snip of the presets currently in use for this:

View attachment 192148

There is no question in my mind that critical speaker evaluation is best done in mono, and best done outdoors.

I don't see how stereo can ever be fairly evaluated, due to the vagaries of rooms, recordings, individual preferences for ambiance vs imaging, and radiation patterns of the major types (point source, dipole, planar, open baffle, omni, line, etc.).

My guess is the evaluation impossibility will always exist for stereo, and multi-channel too, until a particular standard listening room design (size, acoustics, et al.) is somehow adopted by the marketplace.

Hats off to the work you guys accomplished evaluating mono.

The principles you came up with I trust fully.

The preference algorithms/scores that have been derived from them, I can't say the same.

The problem is that it is not perceptually based and doesn't take into account masking.