STC


I recall seeing that and the Lexicon thing years ago.

This is interesting - similar to what I experienced - from:

https://forums.prosoundweb.com/index.php/topic,156030.0.html

"I have been involved in installation of several of these type systems and used to have the only portable VRAS (which is what the Constellation was called before Meyer bought it and put a new name on it) that we used to take around for demos.

For any of these type systems (there are several manufacturers who have this type of system-and each has advantages and disadvantages), the room has to be DEAD-NOT LIVE.

You CANNOT take away existing reflections (reverb).

You can only ADD IT.

Yes these type of systems can help congregational singing when the room is dead.

But putting them in a live room is a TOTAL waste of money.

All they can do is make a bad situation worse.

They REALLY need to be aware of this before making a large financial mistake. "

Further on - interesting concept:

"Constellation is used for a different purpose in the restaurants - there, it is essentially a fancy sound masking system. The idea is that if you make the restaurant totally dead acoustically it will be too quiet and a table will overhear conversation from the next table over. No acoustic treatment and the restaurant will get too loud very fast. Constellation allows you to start with a totally dead room and play around getting the exact amount of reverberation you want to make sound from the next table over unintelligible while setting a limit on how loud the space gets. "

One of the big things in recording studio control room design was LEDE (Live End, Dead End). The front of the room, with the far-field speaker doghouses, was deadened with something like Sonex, while the rear of the room was treated with RPG Diffusors.

RPG Diffusors:

Quadratic: http://www.rpgeurope.com/products/product/modffusor.html

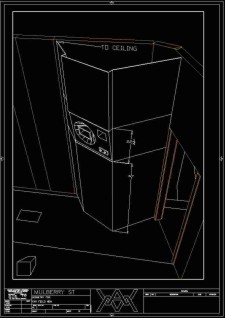
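Quadratic diffusors are built on Schroeder's quadratic residue sequence: with a prime number of wells N, well n gets a depth proportional to n² mod N, scaled by the wavelength of a chosen design frequency (d_n = s_n · λ0 / 2N). A minimal sketch of that textbook formula follows; the 7 wells and 500 Hz design frequency are arbitrary illustration values, not RPG's actual product dimensions.

```python
def qrd_well_depths(n_wells, f0_hz, c=343.0):
    """Well depths (metres) for one period of a 1-D quadratic residue diffusor.

    Sequence s_n = n^2 mod N for prime N; depth d_n = s_n * lambda0 / (2N),
    where lambda0 = c / f0 is the design wavelength. Illustrative only.
    """
    # N must be prime for the quadratic residue sequence to diffuse evenly.
    if n_wells < 2 or any(n_wells % k == 0 for k in range(2, int(n_wells ** 0.5) + 1)):
        raise ValueError("n_wells must be a prime number")
    wavelength = c / f0_hz
    return [((n * n) % n_wells) * wavelength / (2 * n_wells) for n in range(n_wells)]


if __name__ == "__main__":
    # Hypothetical design: 7 wells tuned to 500 Hz.
    for n, depth in enumerate(qrd_well_depths(7, 500.0)):
        print(f"well {n}: {depth * 100:.1f} cm")
```

With these assumed values the depths come out as 0, 4.9, 19.6, 9.8, 9.8, 19.6 and 4.9 cm; note the sequence is symmetric within each period.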


They also make various others, like the Hemiffusor; you'll see this on one of the late-night talk shows.

https://www.bhphotovideo.com/c/prod...r_Systems_HEMIP_2_Hemiffusor_W1_Diffusor.html


After spending a lot of time doing sound for the Pittsburgh Symphony Orchestra at their outdoor Point State Park gigs, I can tell you there's nothing like hearing them in a place like Heinz Hall or Carnegie. We even tried artificial reverb systems outdoors (we had telephone poles in the park for them), and it sucked real bad. Even the recordings made with the various mic trees and such... ehhh.

The acoustical power output of 102 people playing a fortississimo passage in something like Copland's Rodeo is not within reach of any system I've ever heard. And what's real interesting is that it sucks on stage too: all you hear is brass and percussion, depending on which section you're standing in. Nothing like what you hear out in the hall...
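For a sense of scale on the 102-player figure: uncorrelated sources add in power, not in pressure, so N equally loud players come out 10·log10(N) dB above a single player, about +20 dB for 102. This sketch ignores directivity, distance and the fact that real players are never equally loud; the 90 dB per-player value is made up for illustration.

```python
import math


def combined_level_db(levels_db):
    """Total level of mutually uncorrelated sources: add powers, not pressures."""
    total_power = sum(10 ** (level / 10) for level in levels_db)
    return 10 * math.log10(total_power)


if __name__ == "__main__":
    per_player_db = 90.0  # hypothetical level of one player at the listener
    tutti_db = combined_level_db([per_player_db] * 102)
    print(f"one player: {per_player_db:.1f} dB, 102 players: {tutti_db:.1f} dB "
          f"(+{tutti_db - per_player_db:.1f} dB)")
```

Doubling the number of equal players adds only about 3 dB, so going from one player to 102 is roughly a 20 dB step.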

Without going off topic: I emphasized the "domestic concert hall" to show that it is possible to recreate a venue's spatial information and give realism. The main point is still crosstalk cancellation of the front stereo pair, so that the correct interaural level and time differences (ILD and ITD) arrive at the ears.
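One well-known way to cancel crosstalk from a front stereo pair is Ambiophonics' RACE (Recursive Ambiophonic Crosstalk Elimination): each speaker also plays an inverted, delayed, attenuated copy of the other speaker's output, and because that copy is taken from the output, the cancellation signal is itself cancelled on the next pass. The sketch below is a bare-bones, full-band version under assumed parameters (a 3-sample delay, about 60 µs at 48 kHz, and a linear gain). It is not necessarily what runs in the setup discussed here; practical implementations tune delay and gain to speaker spacing and may band-limit the cancellation.

```python
def race_crosstalk_cancel(left, right, delay_samples=3, gain=0.7):
    """Recursive crosstalk cancellation for a front stereo pair (RACE-style sketch).

    Each output subtracts a delayed, attenuated copy of the *other* output, so
    every cancellation pulse is itself cancelled one delay later.
    delay_samples and gain are illustrative assumptions, not tuned values.
    """
    if len(left) != len(right):
        raise ValueError("channels must have equal length")
    if delay_samples < 1:
        raise ValueError("delay must be at least one sample")
    out_l = [0.0] * len(left)
    out_r = [0.0] * len(right)
    for n in range(len(left)):
        # Delayed opposite-channel outputs (silence before the signal starts).
        prev_r = out_r[n - delay_samples] if n >= delay_samples else 0.0
        prev_l = out_l[n - delay_samples] if n >= delay_samples else 0.0
        out_l[n] = left[n] - gain * prev_r
        out_r[n] = right[n] - gain * prev_l
    return out_l, out_r


if __name__ == "__main__":
    impulse = [1.0] + [0.0] * 9
    silence = [0.0] * 10
    left_out, right_out = race_crosstalk_cancel(impulse, silence, delay_samples=3, gain=0.5)
    print(left_out)
    print(right_out)
```

With a single impulse in the left channel and a gain of 0.5, the outputs are 1 (left, sample 0), -0.5 (right, sample 3), +0.25 (left, sample 6) and -0.125 (right, sample 9): an alternating, decaying train of cancellation pulses.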

Do you have an overview of your system and software? 30 channels is a serious investment, and if you're maxing out an i9, that's pretty mind-boggling. Or are you basically using the system described on the ambiophonics page, i.e. the La Scala impulses (only 24 channels shown) with Voxengo? What bandwidth is needed in the ambience channels? My limited experiments with ambio were mind-blowing on the right material, but my sense is that on things like the Cowboy Junkies' The Trinity Session, low-frequency ambience is actually important, meaning you can't just use little satellite speakers.
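On the impulse-response question: each ambience channel in this kind of setup is, in principle, the source signal convolved with an impulse response captured at a different position in the hall. Engines like SIR2 or Voxengo's do this with fast FFT-based methods; the direct-form sketch below only shows the underlying arithmetic. The function names and the toy impulse responses are hypothetical, not the La Scala data.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution: y[n] = sum_k h[k] * x[n - k]."""
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[i + k] += x * h
    return out


def render_ambience(signal, hall_irs):
    """Return one ambience feed per hall impulse response (one per speaker)."""
    return [convolve(signal, ir) for ir in hall_irs]


if __name__ == "__main__":
    dry = [1.0, 0.5]                        # toy source signal
    irs = [[1.0, 0.0, 0.25], [0.0, 0.5]]    # toy IRs for two ambience speakers
    for ch, feed in enumerate(render_ambience(dry, irs)):
        print(f"ambience channel {ch}: {feed}")
```

The cost grows with impulse-response length times channel count, which is why long hall responses across many channels are expensive even with FFT methods.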

As I indicated above, my mad-scientist idea is to try to do this over headphones by recording the appropriate HRIRs (head-related impulse responses). This would require physical speakers for the measurements, but not as a permanent setup. It might ultimately not work without head tracking, but I figure it's an interesting avenue of investigation.
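For the headphone idea, basic static binaural rendering (no head tracking) works like this: convolve each speaker feed with the HRIR pair measured from that speaker position to the left and right ear, then sum all contributions per ear. Since each ear gets its own signal, there is no acoustic crosstalk path to cancel. A minimal sketch, assuming one measured HRIR pair per speaker; the names and toy values are hypothetical.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution: y[n] = sum_k h[k] * x[n - k]."""
    out = [0.0] * max(len(signal) + len(impulse_response) - 1, 0)
    for i, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[i + k] += x * h
    return out


def binauralize(speaker_feeds, hrirs):
    """Render loudspeaker feeds to two ear signals with measured HRIR pairs.

    speaker_feeds: one list of samples per (virtual) loudspeaker.
    hrirs: one (left_ear_ir, right_ear_ir) pair per loudspeaker, same order.
    Static rendering only: the HRIRs do not follow head movement.
    """
    if len(speaker_feeds) != len(hrirs):
        raise ValueError("need exactly one HRIR pair per speaker feed")
    length = max(
        (len(feed) + max(len(ir_l), len(ir_r)) - 1
         for feed, (ir_l, ir_r) in zip(speaker_feeds, hrirs)),
        default=0,
    )
    left_ear = [0.0] * max(length, 0)
    right_ear = [0.0] * max(length, 0)
    for feed, (ir_l, ir_r) in zip(speaker_feeds, hrirs):
        # Every speaker reaches both ears; add its filtered signal to each.
        for ear, ir in ((left_ear, ir_l), (right_ear, ir_r)):
            for n, value in enumerate(convolve(feed, ir)):
                ear[n] += value
    return left_ear, right_ear


if __name__ == "__main__":
    feeds = [[1.0], [0.0, 1.0]]                      # toy feeds for two speakers
    pairs = [([1.0, 0.5], [0.25]), ([0.5], [1.0])]   # toy HRIR pairs
    print(binauralize(feeds, pairs))
```

Head tracking would mean swapping or interpolating HRIR pairs as the head turns, which this static sketch does not attempt.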

I missed replying to this post earlier.

Surprisingly, the cost is much lower than my audiophile setup. I used to have Mytek, Theta, Classe, Supratek and a Marantz SA11S2. After getting a Crown XLS and doing blind tests, I got rid of the Classe, and then all of them, for a MOTU interface that can give you up to 128 channels, plus a few $20 digital amps for the surrounds. For the multichannel format, the rear and side channels are driven by Marantz and Sony amps, as you need better speakers and amplifiers for those discrete channels.

All of this is fed through Reaper. The crosstalk cancellation itself takes less than 1% of the CPU; it's the SIR2 convolution engine that eats up the CPU.
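Some back-of-the-envelope arithmetic shows why the convolution engine, not the crosstalk cancellation, dominates the load. Direct-form convolution costs one multiply-add per impulse-response tap per output sample per channel, whereas a two-channel recursive crosstalk canceller needs roughly one multiply-add per channel per sample. The 48 kHz rate, 2-second impulse responses and 24 channels below are assumed illustration values, not the actual settings of this system.

```python
def convolution_macs_per_second(sample_rate_hz, ir_seconds, channels):
    """Multiply-adds per second for direct-form (time-domain) convolution.

    Every output sample of every channel needs one multiply-add per IR tap.
    """
    taps = round(sample_rate_hz * ir_seconds)
    return sample_rate_hz * taps * channels


def canceller_macs_per_second(sample_rate_hz, channels=2):
    """Recursive crosstalk canceller: one multiply-add per channel per sample."""
    return sample_rate_hz * channels


if __name__ == "__main__":
    # Assumed illustration values only.
    conv = convolution_macs_per_second(48_000, 2.0, 24)
    xtc = canceller_macs_per_second(48_000)
    print(f"convolution: {conv:.2e} MAC/s, canceller: {xtc:.2e} MAC/s, "
          f"ratio: {conv / xtc:,.0f}x")
```

Under those assumptions the direct-form figure is about 1.1 × 10^11 multiply-adds per second against 9.6 × 10^4 for the canceller. Real convolution engines use partitioned FFT convolution, which cuts the convolution cost by orders of magnitude, but it still dwarfs a couple of multiply-adds per sample.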

I was using an i7-7700 and it was at 82%. I thought the i9 would reduce the load and let me add more channels, but it turned out the load actually increased by 2 percentage points, to 84%. But the ambience sounded much nicer. I never understood this part.

P.S. I wrongly quoted 82% in my earlier post. It should have been 84%.