[Note: This article was published in the Widescreen Review Magazine. This is a revised edition.]

There is no larger transformation of personal music consumption than storing your entire music library on a server and playing it on demand. Rich metadata and search mean instant access to all of your content. With the advent of high-resolution downloads, the notion of using physical media is obsolete even for its most diehard devotees.

I suspect that if you have not yet gone this route, one of the reasons is the myriad choices involved in building a music server. Unlike buying a stand-alone transport, the choices seem endless when it comes to building a server/playback system. I hope to shed some light on one critical component of such a system, at least in the case of PC and Mac servers: how to interface them to an external DAC. Unless you are using a professional audio interface, I generally do not recommend using the DACs built into computers. You get far more flexibility by using a high-quality interface to "bridge" the connection between the PC/Mac music server and the DAC that converts the audio samples to analog.

If you have a modern computer with HDMI output, you already have a very convenient way of sending digital audio out of your computer to an outboard device – usually an Audio/Video Receiver (AVR) or Home Theater processor. HDMI can produce good performance but sadly, that is more the exception than the rule. HDMI “slaves” audio to video, meaning you cannot have audio without video. This in turn lights up a tremendous amount of circuitry in the receiver. The enemy of high-performance audio is noise and interference, and with HDMI that comes with the territory.

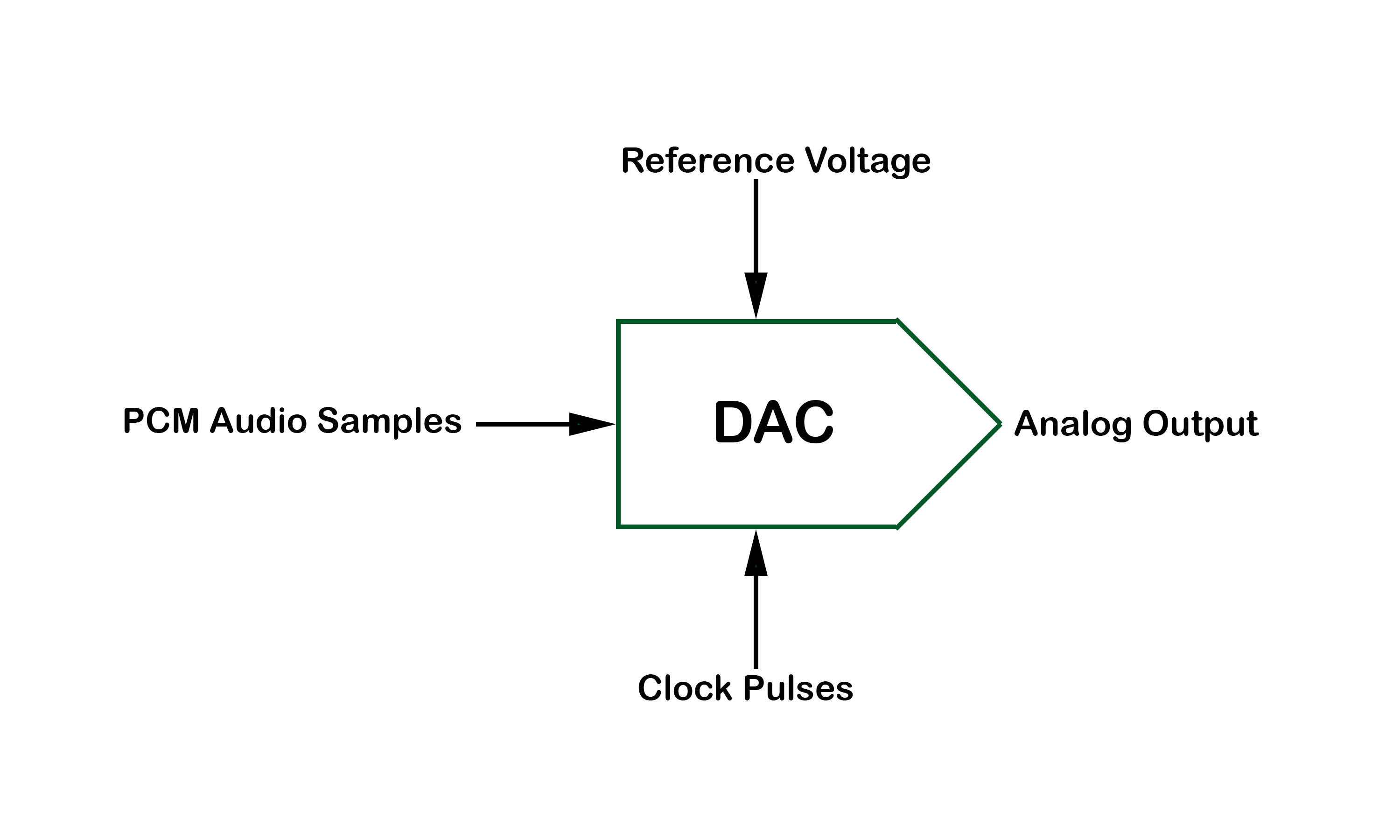

Please don’t believe the online folklore that says “digital is digital” and hence it does not matter which digital path you use to output your audio samples to the external DAC (stand-alone or internal to an AVR or processor). That is the layman's understanding of how our audio systems work, not the reality. The reality is that digital audio reproduction requires two pieces of information: audio samples and their timing. For the purposes of this article we assume that digital audio samples are always delivered error-free to the receiver (if not, you will get audible glitches). Fidelity differences, then, are not due to errors in the digital link. They are due to the other inputs to the digital-to-analog converter (DAC) as shown in Figure 1. Delivering correct PCM (or DSD) audio samples takes care of only one of the three factors that determine the output of the DAC.

Figure 1: Digital-to-analog converters (DACs) need high-quality clock pulses and a clean reference voltage to output a clean analog signal. It is not sufficient to just capture the audio samples reliably.

Let's look at the role of the clock pulses. When you play CD audio, there are ostensibly 44,100 samples/second. I say ostensibly because there is no requirement that the source actually feed the DAC exactly that many samples/second. It can just as easily run a tiny bit slower or faster, or alternate between those speeds. The system must reliably handle such small timing differences and stay in “lock” with the source. The DAC cannot simply generate its own timing from the nominal sample rate to drive its DAC silicon. If it did so, its clock, no matter how accurate, would over time drift away from the one used in the source. If it ran too fast, it would run out of data at some point and would have to introduce a pause or glitch. If it ran too slow, it would keep accumulating audio and at some point run out of room to store it. And in the case of video, audio and video would lose sync. For these reasons, an unwritten rule of consumer audio is that the DAC must slave its timing, i.e. its clock, to the source. It must stay in lock-step with it no matter what.
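To get a feel for how quickly a free-running DAC clock would get into trouble, here is a minimal sketch of the drift arithmetic. The 100 ppm mismatch and the 4,410-sample (0.1 second) margin are illustrative assumptions, not figures from any particular device, and the function name is made up for this example.

```python
def seconds_until_buffer_trouble(sample_rate_hz, mismatch_ppm, margin_samples):
    """Time until a free-running DAC clock has gained or lost `margin_samples`
    relative to the source, given a constant clock mismatch in parts per million."""
    drift_samples_per_second = sample_rate_hz * mismatch_ppm * 1e-6
    return margin_samples / drift_samples_per_second


# Assumed example: CD-rate audio, clocks 100 ppm apart, 0.1 s of spare buffer.
t = seconds_until_buffer_trouble(44_100, 100, 4_410)
print(f"Buffer margin exhausted after about {t / 60:.1f} minutes")
```

Whether the margin is consumed by running dry or by overflowing depends on the sign of the mismatch, but either way it is only a matter of time, which is why the DAC traditionally locks to the source instead.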

The timing extraction subjects the DAC to noise and variations in the clock produced by the source. We call such variations "jitter." Yes, the DAC can and routinely does filter such noise using a circuit called a "PLL," or Phase-Locked Loop. No, such a circuit does not eliminate audible jitter. The primary role of the PLL in the DAC is to extract the digital values coming to it. That is the mission-critical function. Eliminating high-frequency jitter, i.e. well above the audio band, accomplishes that and gives us glitch-free audio that is in sync with the source. This, however, does not ensure the best fidelity: if we allow audio-band jitter frequencies to enter the DAC, they show up in the output of the DAC per Figure 1. They may not always be audible depending on their level, but they will be there, and no amount of hand-waving about a PLL and a "locally generated clock" explains them away.

Because of the high precision of our audio samples, i.e. 16 bits or more per PCM sample, it takes very little variation in clock timing to produce a distortion product in the output of the DAC. We are talking mere billionths of a second here. Do not make the mistake of thinking that because the DAC runs at only thousands of samples per second, this issue goes away. What I just said can be shown mathematically to be the case. Reproducing even 16-bit audio samples correctly requires jitter to be down in the nanosecond range.
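One common back-of-the-envelope way to see this, offered here as a sketch and not necessarily the calculation behind the figures above, is to ask how large a timing error can be before a full-scale sine wave moves by half of one least-significant bit at its steepest point. For a sine of frequency f and N-bit samples that works out to 1 / (2π · f · 2^N). The function name and the choice of a 20 kHz worst-case tone are assumptions made for this example.

```python
import math


def max_timing_error_seconds(signal_hz, bits):
    """Largest timing error that keeps a full-scale sine within half an LSB.

    A sine A*sin(2*pi*f*t) has a maximum slope of 2*pi*f*A, and half an LSB
    is A / 2**bits, so the allowed time error is 1 / (2*pi*f*2**bits).
    """
    return 1.0 / (2.0 * math.pi * signal_hz * 2 ** bits)


for bits in (16, 24):
    limit = max_timing_error_seconds(20_000, bits)
    print(f"{bits}-bit, 20 kHz tone: about {limit * 1e12:.1f} picoseconds")
```

Under this particular criterion the limit for 16-bit audio lands at a fraction of a nanosecond, and each extra bit of resolution halves it.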

Jitter in audio systems is a manifestation of real systems versus what I call paper ones. On paper, a DAC performs as well as its silicon manufacturer's published specs say. We see numbers these days that are superlative even in the case of DACs that cost pennies. What any DAC designer knows, though, is that as soon as you put said DAC silicon in a real device, there is potential for a significant drop in performance. DAC silicon manufacturers test their components using clean and simple circuits. Nothing remotely as complex and noisy as the aforementioned HDMI is used. It is left as an exercise for the designer to figure out how to deliver a clean clock (and reference voltage) to the DAC. Unfortunately, that is something missed in all the AVRs I have tested. So on that front, my recommendation is to stay away from HDMI for 2-channel, critical music listening. For movies, it is a fine and mandatory choice to get multi-channel sound and such. For music, there are far better choices.

Toslink and S/PDIF

Many desktop PCs come with a digital audio interface in the form of an optical Toslink or coax S/PDIF digital audio output. These are the same familiar connections we have had in our traditional audio systems, such as the output of a CD or DVD/Blu-ray player. Each has its own advantages. A Toslink optical connection electrically isolates the server from the DAC and hence can keep the noise in the PC from polluting the sensitive DAC downstream. The downside is that the optical connection tends to suffer from higher jitter due to the low bandwidth of the cheap optical cable used for audio. In that case, the coax connection may be superior.

Without measurements it is hard to know whether you are better off with Toslink optical or coax S/PDIF. You could get lower jitter over coax only to have the electrical coupling of the server and DAC undo that and then some. My suggestion is to connect both links and perform a blind test. Close your eyes and randomize which one you are using. Listen for any differences. Use a soundtrack that has high-frequency notes that decay into nothing and pay attention to how clean that decay is and whether it terminates abruptly. Also note whether the sound is brighter with one interface than the other. I have had better luck with coax, but on at least one occasion I found optical sounding better.

USB Audio

The (relatively) new kid on the block is USB. No doubt you are familiar with this ubiquitous computer interface for everything from keyboards to printers. Countless DACs are available that take USB as their input and operate in what is called isochronous mode. Isochronous mode sends packets of data that are ~1024 bytes long. The receiver uses this block transfer cadence to generate the DAC clock. The problem with this approach is that it is "glitchy." At the end of each block transfer there is by definition a pause before the next block is transmitted. So if care is not taken, we get a timing glitch/jitter at a 1 kHz rate, which is smack in the middle of the audio band and close to where the ear is most sensitive.
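To illustrate why a clock derived from the packet cadence is uneven, the sketch below assumes a 1 ms packet period (matching the 1 kHz figure above) and simply spreads the samples across packets with integer arithmetic. This scheduling rule and the function name are assumptions for illustration, not a description of how any specific driver or DAC behaves.

```python
def samples_per_packet(sample_rate_hz, packets, packet_rate_hz=1000):
    """Number of samples carried by each of the first `packets` packets when
    whole samples are spread as evenly as possible over fixed-rate packets."""
    counts = []
    sent = 0
    for n in range(1, packets + 1):
        due = (n * sample_rate_hz) // packet_rate_hz  # samples owed so far
        counts.append(due - sent)
        sent = due
    return counts


print(samples_per_packet(44_100, 10))  # uneven: 44.1 samples do not fit in 1 ms
print(samples_per_packet(48_000, 10))  # even: exactly 48 samples per packet
```

At 44.1 kHz the packets cannot all be the same size, so any clock recovered from their arrival has to smooth over a pattern that repeats at audio-band rates.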

Despite this issue, USB paves the road to finally fixing the ills of our current consumer digital interfaces, namely the fact that the DAC must stay "slave" to the source in deriving its timing. This, as I explained, immediately sets us up for source-induced jitter. A much better scheme is to put the DAC in charge of timing and make the source the slave. In doing so, we can have a clean clock source for the DAC chip and have the server feed us data as we need and request it. USB is completely suited to this purpose, as its primary function is to convey digital samples to the peripheral device. And that is all we want to use it for: to give us data but let us determine its timing. This mode of operation is called "asynchronous USB," or async USB for short.

Another benefit of async USB is that it can deal with the server falling behind the DAC. Audio is a "real-time" operation. If you are playing 48 kHz PCM audio, there had better be an audio sample every 1/48,000 of a second or else you will hear a glitch, as the DAC would have nothing to play. A Mac or PC, on the other hand, is a non-real-time device. Such computers are orders of magnitude faster than what we need for audio, but they are not always there when needed. Activities on the computer may make it slow to feed data to the DAC at a critical moment, resulting in a glitch. It is a case of being way too fast and too slow at the same time. A technique called buffering allows us to take advantage of our "faster than real-time" performance to make up for slower than real-time response. Instead of reading data just when we need it, we can set aside a large pool of memory called a buffer and put the audio samples there. Then we "drain" the data as we need it using our precise DAC clock. As long as the pre-fetch mechanism, the logic that fetches audio samples, is faster than our consumption of the data, we can survive momentary periods where the server gets too busy to service the DAC. This mechanism is ideally suited to async USB because by definition we can read data at a different rate than we are consuming it in the DAC.
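The effect of buffering can be shown with a toy simulation. This is a minimal sketch under stated assumptions: time advances in abstract ticks, the DAC consumes one sample per tick, the server delivers two samples per tick when it is responsive and none while it is stalled, and the host never sends more than the buffer can hold. The function name and all numbers are hypothetical.

```python
def count_underruns(deliveries, buffer_capacity):
    """Simulate a DAC draining one sample per tick from a buffer.

    `deliveries` lists how many samples the server hands over on each tick
    (0 means the server was busy). Returns how many ticks the DAC found the
    buffer empty, i.e. how many audible glitches would occur.
    """
    level = 0
    underruns = 0
    for delivered in deliveries:
        # The host only sends what fits, so the level is capped at capacity.
        level = min(buffer_capacity, level + delivered)
        if level >= 1:
            level -= 1  # the DAC clock consumes one sample
        else:
            underruns += 1  # nothing to play on this tick
    return underruns


# Server runs at twice real time, stalls for 8 ticks, then recovers.
pattern = [2] * 10 + [0] * 8 + [2] * 10
print("no real buffer:", count_underruns(pattern, buffer_capacity=1), "glitches")
print("32-sample buffer:", count_underruns(pattern, buffer_capacity=32), "glitches")
```

With essentially no buffer, every tick of the stall is a glitch. With a buffer, the surplus built up while the server was fast carries the DAC through the stall.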

Note that asynchronous audio output has existed for a long time in professional audio cards that you plug into a computer or use over Firewire or USB. What is new is being able to do the same thing with the standard in-box drivers in Windows or Mac. Both operating systems come with built-in drivers for USB class 2 that allow driver-less attachment of such devices. The Windows implementation stops at 24-bit/96 kHz, so you usually get a driver from the manufacturer to support higher data rates. In some cases the implementation is proprietary, requiring a driver for the device to operate at all.

Asynchronous mode nicely solves our upstream jitter problem. It matters not how much jitter exists in the USB clock or the source PC. We have our own clock in the DAC or S/PDIF interface that is used for conversion of digital audio to analog. This method, on paper at least, is superior to HDMI or S/PDIF out of the server because these continue to keep the source/server as the master. I say on paper because the local clock still has to have good performance and external interference must be kept away. It is possible to build an asynchronous USB interface that does no better than classic interconnects because of failings in this regard. Let's dig into this further.

Electrical Isolation

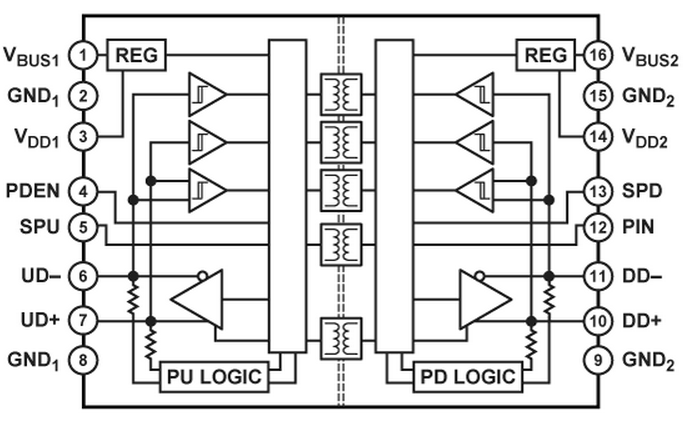

The asynchronous transfer mode of USB solves our clocking problem, but we are still stuck with electrical coupling of the PC and our AV gear courtesy of the wired USB connection. You can have the world's best DAC clock, but if you let noise bleed into its power supply or even couple into it through the air, you still wind up with noise and distortion on the output of your DAC. The first way to guard against this is to isolate the power, including the ground, of the server from the DAC. This is usually done with an integrated isolator IC such as the Analog Devices ADuM4160, whose block diagram is shown in Figure 2. Alas, that IC cannot support speeds above 24-bit/96 kHz. Higher-speed solutions require a more discrete design, such as one built around a generic digital isolator.

Figure 2: Electrical/Galvanic isolation using off-the-shelf USB isolator IC.

As I noted, it is important that the ground connection is also isolated, as otherwise that line will not only allow interference to reach our sensitive audio circuits but can also create ground loops. Ground loops are caused by differences in the voltage potential of the ground on the sending and receiving equipment. Such differences almost always exist in these scenarios, and the question only becomes how well the equipment deals with them and how bad the differential is. It is better not to create a ground loop than to try to diagnose and fix one. Galvanic isolation does that and should be on your list of “must haves” when buying USB audio solutions.

Flavors of Async USB

You have two choices here. You can get a new DAC or AVR with a built-in asynchronous USB interface or buy a “bridge” adapter that converts USB asynchronously to S/PDIF (and its balanced professional counterpart, AES/EBU, if your DAC has that input). My preference is for the bridge, as that gives you many more choices of AV products, including the DAC/AVRs you already have. There are many such interfaces at all price points. In this article I will be talking about two of my favorites:

1. Berkeley Audio Design Alpha USB adapter. This is a very high performance bridge that takes USB input and produces either S/PDIF or AES/EBU. It has a built-in power supply that is a must for clean power as opposed to trying to use the USB power.

2. Audiophilleo USB to S/PDIF adapter. This is another well designed product which is small enough to couple directly to the S/PDIF connector.

Measurements

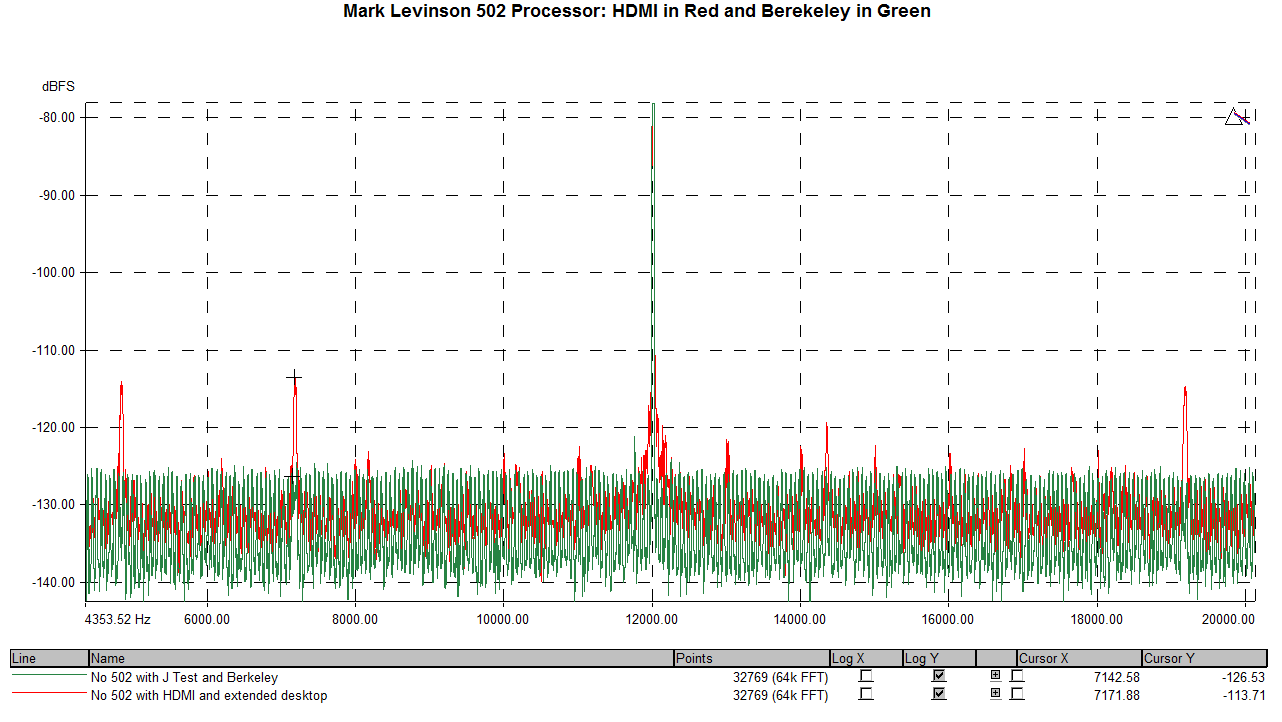

Let’s put the theory into practice and see if we are able to improve the performance of our systems using this new technology. Figure 3 is the measurement of the Mark Levinson No 502 processor when being fed the “J-Test” signal (essentially a 12 kHz tone). Ideal performance would be a single tone/sharp spike at 12 kHz and nothing else. The server is my Sony laptop driving the processor either using its built-in HDMI output, or the Berkeley async USB adapter feeding the 502 over S/PDIF.

I have given substantial benefit of the doubt to HDMI, as the Mark Levinson 502 processor produces superb performance with the spikes hovering at a very low level of -113 dBFS. No other HDMI device I tested could remotely approach this level of performance, as you will see later. As good as this HDMI performance is, though, the Berkeley S/PDIF connection bests it by taking distortions down by 13 dB (-127 dBFS vs -114 dBFS). Yes, we are talking about how many angels may be dancing on the head of a pin, but I wanted to show that even with heroic implementations of HDMI, a better architecture in the form of async USB, and a great implementation of it, can provide superior performance.

Figure 3: Mark Levinson 502 Processor performance using HDMI as input versus S/PDIF driven by the Berkeley USB adapter.

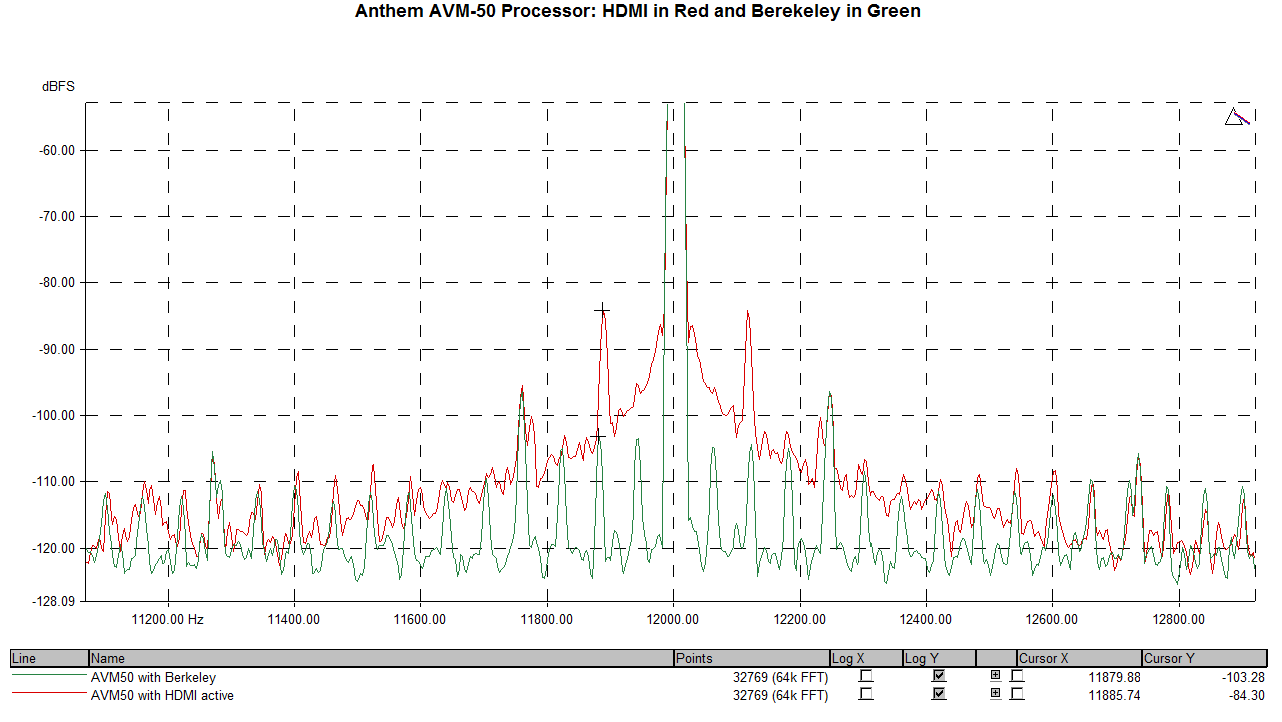

Now let’s come down to earth and see what happens when we make the same comparison using a sampling of higher-end AVRs. First up is the Anthem AVM 50 processor as shown in Figure 4.

Figure 4: Anthem AVM 50 HDMI in red compared to Berkeley S/PDIF in green. Note the much improved level of distortion in the latter case.

An entirely different picture emerges, with HDMI distortion spikes reaching up to -84 dBFS. Let's put that in context by mentioning that the signal-to-noise ratio of 16-bit audio is 96 dB. Inverted, the noise floor would be at -96 dBFS, which means that by using HDMI on this AVR, we have introduced distortion products that rise well above our theoretical noise floor for 16-bit audio. Forget about 24-bit audio. We are having trouble, and serious trouble at that, reproducing 16-bit audio here.
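The 96 dB figure follows from the usual rule of thumb of roughly 6 dB per bit, i.e. 20·log10(2^N). A small sketch of that arithmetic, with the -84 dBFS spike level taken from the measurement above (the function name is made up for this example):

```python
import math


def dynamic_range_db(bits):
    """Ratio between full scale and one quantization step, in decibels."""
    return 20.0 * math.log10(2 ** bits)


floor_16 = -dynamic_range_db(16)
floor_24 = -dynamic_range_db(24)
hdmi_spike_dbfs = -84.0  # worst HDMI spike level quoted in the text

print(f"16-bit floor: {floor_16:.1f} dBFS, 24-bit floor: {floor_24:.1f} dBFS")
print(f"HDMI spikes sit {hdmi_spike_dbfs - floor_16:.1f} dB above the 16-bit floor")
```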

Thankfully, using the Berkeley to drive the S/PDIF input on the exact same AVR with the exact same DAC results in a reduction of distortions by nearly 20 dB. Let me repeat: nothing was changed in this experiment other than replacing one digital interface, HDMI, with another one, a USB to S/PDIF bridge. Yet we managed to reduce the analog distortion coming out of the DAC by a huge amount. Distortion products are down to a much more reasonable -103 dBFS, making the promise of producing clean 16-bit audio a realistic proposition (we can hear distortions through noise, so we need better than -96 dBFS distortion performance). Next time someone says "bits are bits" and you can't change anything by modifying the digital feed to a DAC, show them this graph.
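For a rough sense of scale, a sideband level can be translated into an equivalent amount of jitter. The sketch below leans on two simplifying assumptions that the measurements themselves do not establish: that each spike is caused purely by sinusoidal jitter, and that the 12 kHz tone is close enough to full scale for the dBFS readings to be treated as levels relative to the tone. Under those assumptions, small sinusoidal jitter of peak amplitude J produces sidebands at a relative amplitude of about π·f·J. The function name is hypothetical.

```python
import math


def equivalent_peak_jitter_seconds(sideband_db, tone_hz):
    """Peak sinusoidal jitter that would produce one sideband at `sideband_db`
    relative to a tone at `tone_hz` (small-angle phase modulation:
    sideband ratio ~= pi * f * J_peak)."""
    ratio = 10.0 ** (sideband_db / 20.0)
    return ratio / (math.pi * tone_hz)


for label, level in (("HDMI", -84.0), ("USB to S/PDIF bridge", -103.0)):
    jitter = equivalent_peak_jitter_seconds(level, 12_000)
    print(f"{label}: roughly {jitter * 1e12:.0f} ps peak")
```

Read this way, the improvement corresponds to going from jitter on the order of a nanosecond to a small fraction of that.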

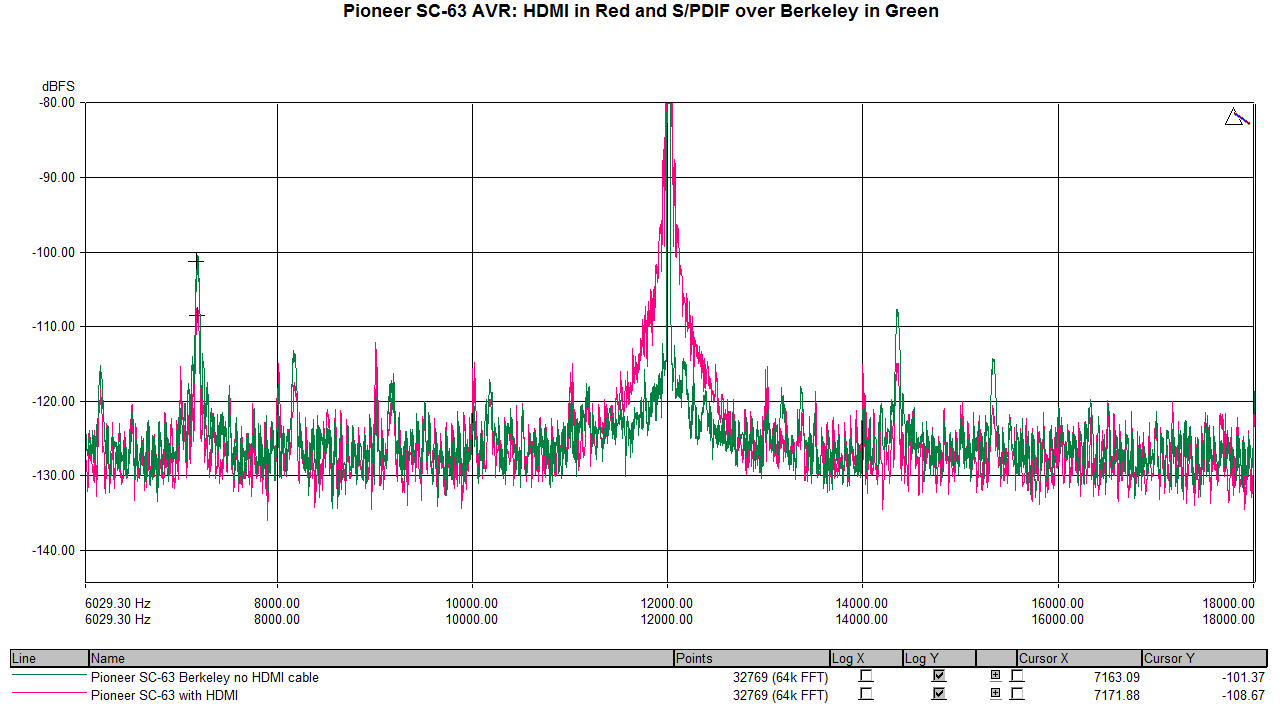

To show this is not a unique example, let’s repeat the exercise with the Pioneer Elite SC-63 AVR, shown in Figure 5. As with the Anthem, the HDMI interface is suffering from low-frequency jitter, which causes our main 12 kHz test tone to broaden its "skirt" on each side. We also have our usual set of "correlated" distortion spikes on both sides of our main test signal.

Switching to the Berkeley USB to S/PDIF bridge yet again shows a marked improvement by attenuating the low-frequency jitter and reducing the amplitude of the higher-frequency components. The reduction in correlated distortion spikes is about 7 dB, which is not an insignificant amount.

Figure 5: Comparison of HDMI to S/PDIF on a Pioneer SC-63 AVR showing better performance yet again using USB to S/PDIF bridge.

I won’t bore you with more measurements as the theme continues with all the other AVRs I have tested. Suffice it to say, unless someone backs their statement with objective measurements, you can safely assume that you can do better than HDMI with a well-implemented USB Async interface such as the Berkeley and while not shown, the Audiophilleo.

Quality Implementation

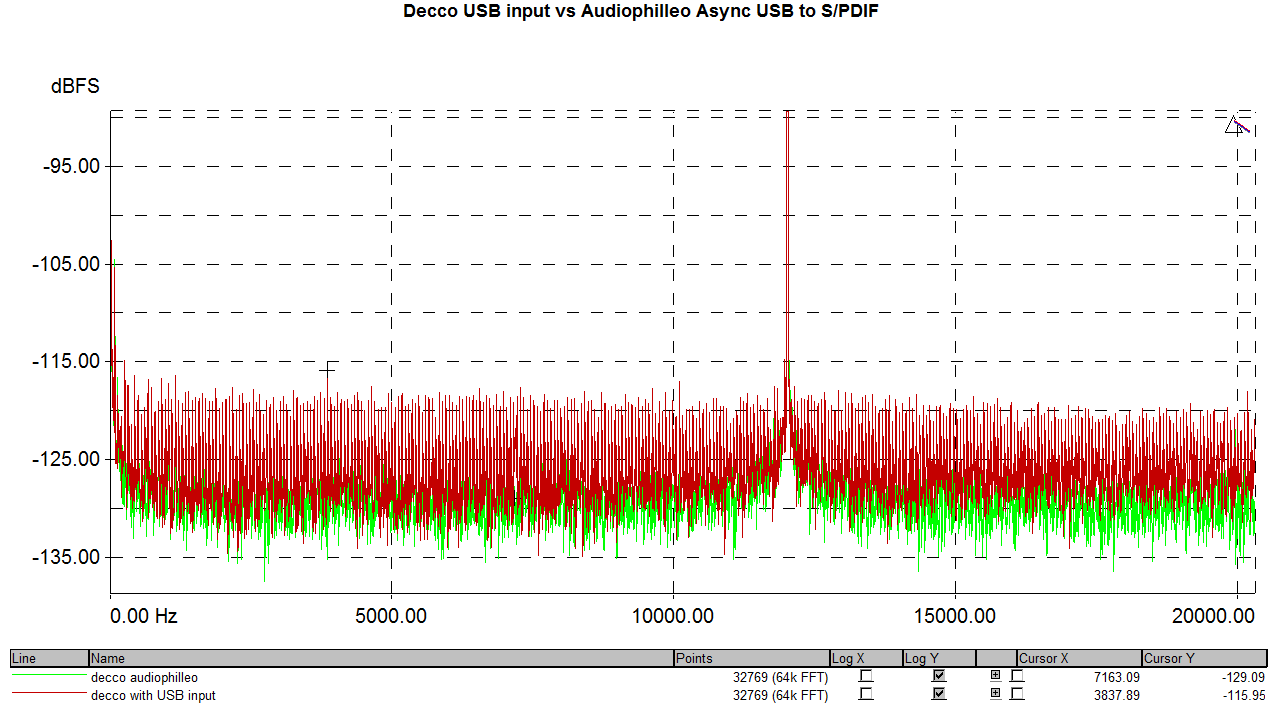

It is important not to assume automatically that just because a device advertises asynchronous USB operation, it produces the same results I have shown above. The implementation can create its own “local” noise and timing distortions. To show an example of differing performance, let’s look at Figure 6, which compares the performance of the Peachtree Decco DAC's internal asynchronous USB input against an external bridge. The internal input, shown in red, produces excellent performance at a -116 dBFS distortion level. As good as that is, we are able to improve upon it by using the Audiophilleo USB to S/PDIF bridge, to the tune of a 13 dB reduction in noise+distortion products. Clearly the two async USB implementations are not the same.

Figure 6: Comparison of built-in asynchronous USB in the Peachtree Decco (in red) versus outboard Audiophilleo (in green).

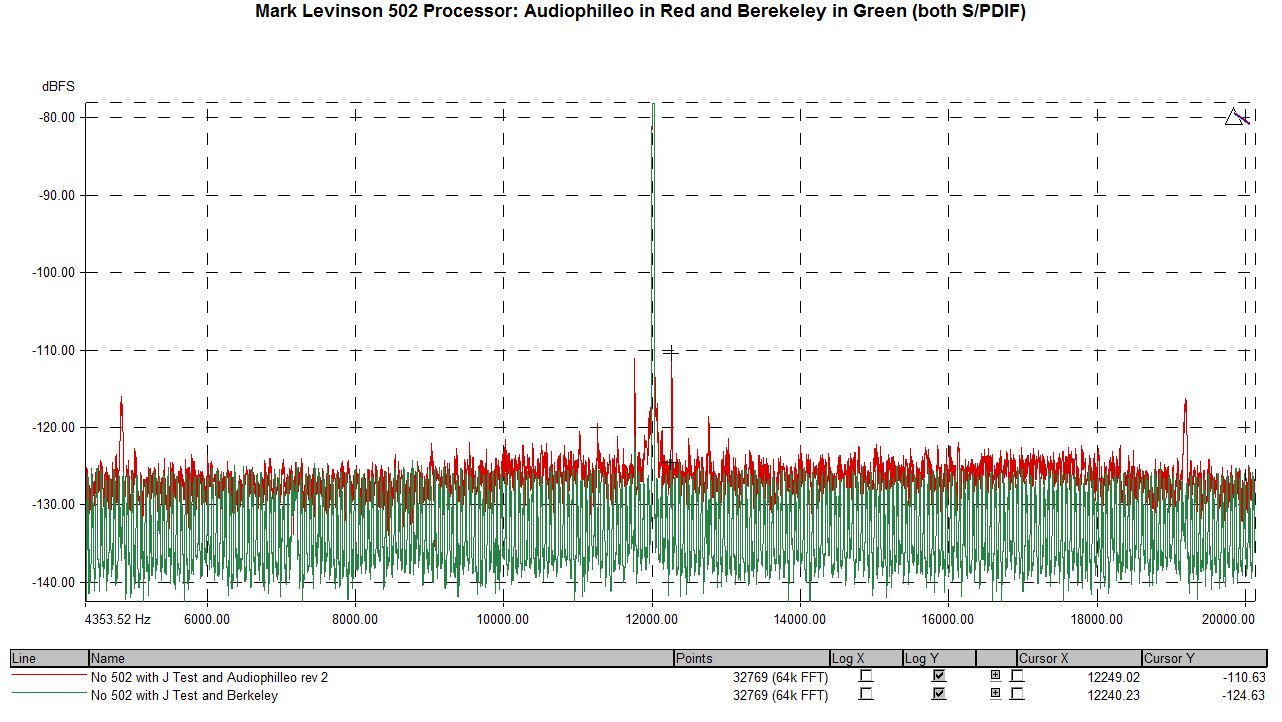

For another comparison, let's look at how the Berkeley compares to the Audiophilleo when driving the Mark Levinson 502 in Figure 7.

Figure 7: Comparison of Berkeley and Audiophilleo USB to S/PDIF showing the former to have improved performance (in green).

The correlated distortion spikes in red produced by the Audiophilleo essentially vanish when the Mark Levinson is driven by the Berkeley.

There is no free lunch here, as the Audiophilleo is about one third the cost and physically about 1/10th the size of the Berkeley. The small size makes it very difficult to provide physical separation of the components. And the lower cost excludes using more exotic parts. So it is not surprising that there is some measured difference here.

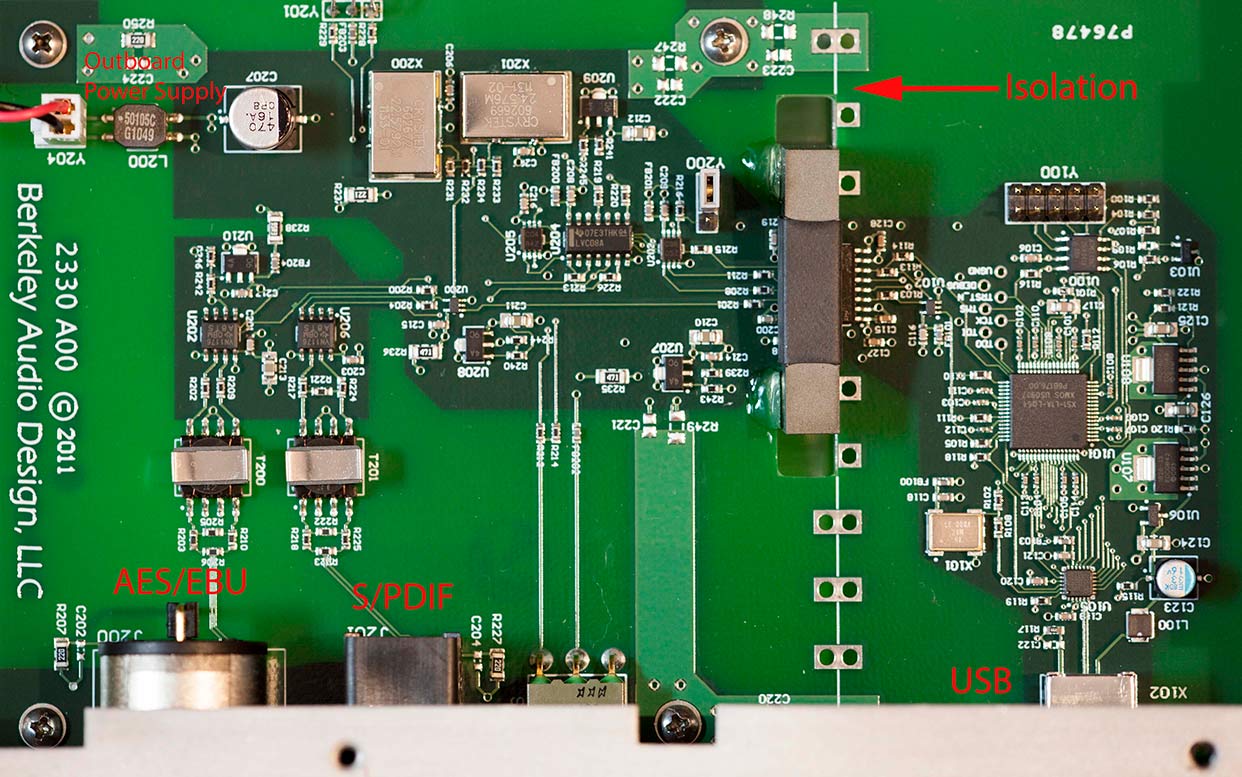

To see what it takes to produce the superlative performance that the Berkeley manages, let’s look at what is inside it as shown in Picture 1. On the bottom right is the USB port. It goes into the ubiquitous XMOS USB interface IC (large square chip). This is the “dirty” side of the unit meaning it has high-speed digital signals that must be kept away from our audio signals as much as possible. Such noise can bleed not only through connected circuits but literally through air. Good design hygiene therefore calls for physical separation which we see here as I have indicated with the marker "Isolation." USB computer interface stays on the right whereas S/PDIF and AES/EBU clock and signal generation are to the left. This reduces the chances of the clock oscillators (the shiny metal boxes) becoming contaminated with the digital activity on the dirty (USB) side.

Picture 1: Main interface board in the Berkeley Alpha USB to S/PDIF and AES converter. Note the excellent isolation of USB digital interface on the right and S/PDIF and AES/EBU on the left to reduce crosstalk (contamination).

There is more to the performance of the Berkeley than just this physical separation that goes beyond the scope of this article. Suffice it to say, excellence in engineering pays off in better measured performance.

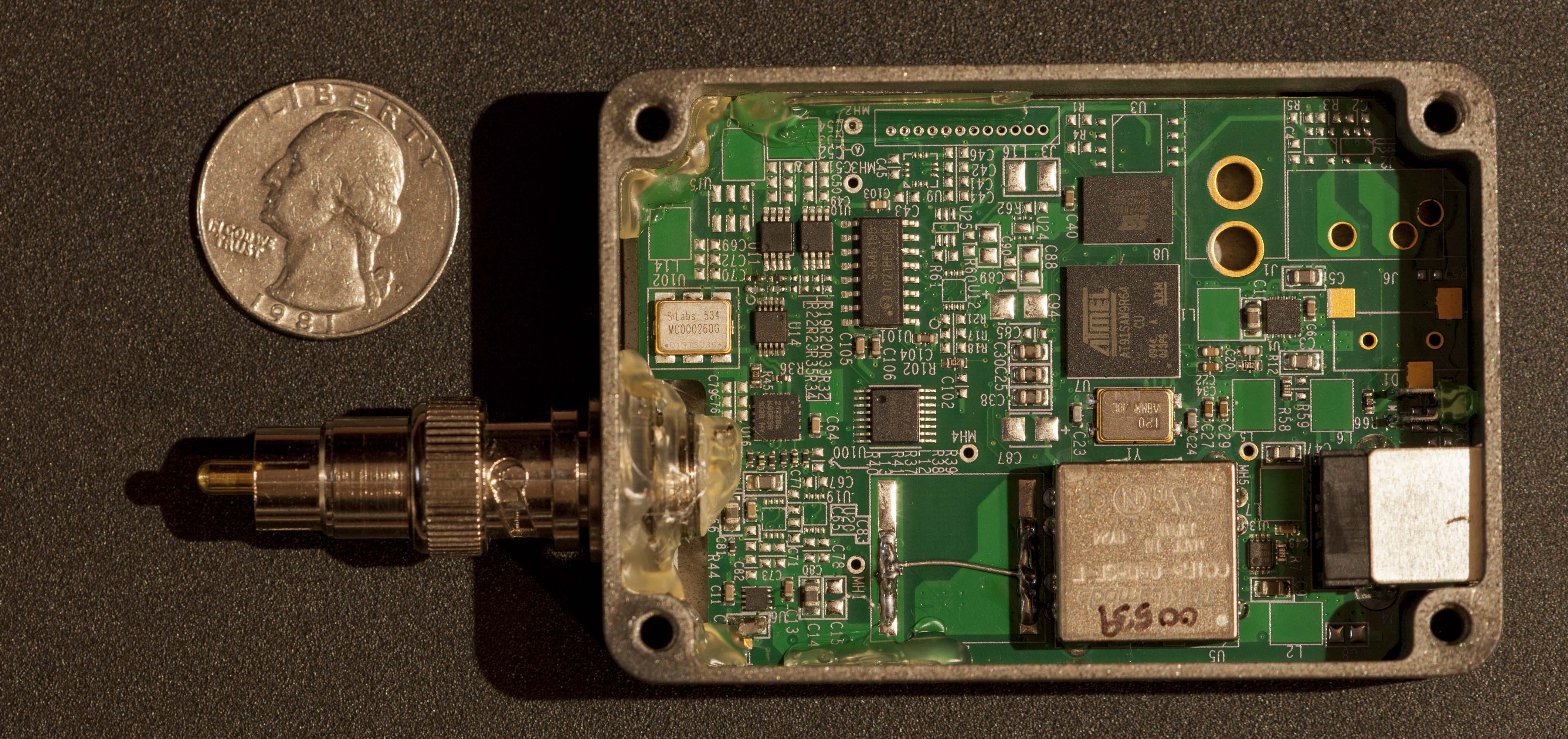

The Audiophilleo is also an excellent device but as you see in Picture 2, it is very small, not a whole lot bigger than an American quarter. There is no separation to speak of as we had with the Berkeley. So it is no wonder that we had some bleeding of noise from the USB side into the S/PDIF clock.

Picture 2: Audiophilleo USB to S/PDIF Converter. Note the diminutive size.

There are asynchronous USB adapters at all price points. Unfortunately, without measurements you cannot know how well they perform. Given that, look for “galvanic isolation” and a separate power supply in the device spec sheet as general clues to good performance, though they are no guarantee.

In summary, we now have a superb way to get audio out of a computer server into a DAC. By using a well-implemented async USB bridge we get all the benefits and conveniences of modern music systems, with superlative performance. It is not always the case that we can have our cake and eat it too, but such is the case here.

References

Digital Audio: The Possible and Impossible, Amir Majidimehr, Widescreen Review Magazine

There is no larger transformation of personal music consumption than to store your entire library of music on a server and play them on demand. Rich metadata and searching means instant access to all of your content. With the advent of high resolution downloads, the notion of using a physical media is obsolete even for the most diehard devotees of physical media.

I suspect if you have not yet gone this route, one of the reasons is the myriad of choices in building a music server. Unlike buying a stand-alone transport, the choices are infinite when it comes to building a server/playback system. I hope to shed some light on one critical component of such a system, at least in the case of PC and Mac Servers. Namely, how to interface them to an external DAC. Unless you are using a professional audio interface, in general I do not recommend that you use internal DACs to the computers. You get far more flexibility in using a high-quality interface to "bridge" the connection between the PC/MAC music server and the DAC that converts the audio samples to analog.

If you have a modern computer with HDMI output, you already have a very convenient way of sending digital audio out of your computer to an outboard device – usually an Audio/Video Receiver (AVR) or Home Theater processor. HDMI can produce good performance but sadly, that is more of an exception than rule. HDMI “slaves” audio to video meaning you cannot have audio without video. This in turn lights up tremendous amount of circuitry in the receiver. The enemy of high-performance audio is noise and interference and with HDMI that comes with the territory.

Please don’t believe in online folklore that says “digital is digital” and hence it does not matter which digital path you use to output your audio samples to the external DAC (stand-alone or internal to an AVR or Processor). That is the layman understanding of how our audio systems work, not the reality. That reality says that digital audio reproduction requires two pieces of information: audio samples and their timing. For the purposes of this article we assume that digital audio samples are always delivered error free to the receiver (if not, you will get audible glitches). Fidelity differences are not as such due to errors in the digital link. They are due to the other inputs to the digital to audio converter (DAC) as shown in Figure 1. Delivering correct PCM (or DSD) audio samples takes care of only one out of three factors that determine the output of the DAC.

Figure 1: Digital to Analog Converters (DACs) need high quality clock pulses and reference voltage to output clean analog signal. It is not sufficient to just capture the audio samples reliably.

Let's look at the role of the clock pulses. When you play CD audio, there are ostensibly 44,100 such samples/second. I say ostensibly because there is no requirement that the source actually feed the DAC that many samples/second. It can just as easily choose to run a tiny bit slower or faster or alternate between those speeds. The system must reliably handle such small timing differences and stay in “lock” with the source. The DAC cannot generate its own timing using the sample rate to drive its DAC silicon. If it did so, its clock no matter how accurate, would over time drift away from the one used in the source. If it runs too fast, it would run out of data at some point and would have to introduce a pause or glitch. If it ran too slow, then it would keep accumulating audio and at some point, run out of a place to store them. And in the case of video, lose audio and video sync. For these reasons, an unwritten rule of consumer audio is that the DAC must slave its timing, i.e. its clock to the source. It must stay in lock-step with it no matter what.

The timing extraction subjects the DAC to noise and variations in the clock produced by the source. We call such variations "jitter." Yes, the DAC can and routinely filters such noise using a circuit called "PLL" or Phased Locked Loop. No, such a circuit does not eliminate audible jitter. The primary role of the PLL in the DAC is to extract digital values coming to it. That is the mission critical component. Eliminating high-frequency jitter, i.e. well above audio band, accomplishes that and gives us glitch-free audio that is in sync with the source. This however does not ensure best fidelity as if we allow audio-band jitter frequencies to enter the DAC, then they show up in the output of the DAC per Figure 1. They may not always be audible depending on their level but they will be there and no amount of hand-waiving saying there is a PLL and "locally generated clock" explains them away.

Because of high precision of our audio samples, i.e. 16 bits or higher per PCM sample, it takes very little variation in the clock timing to produce a distortion product in the output of the DAC. We are talking mere billionth of a second here. Do not make the mistake of thinking the DAC is running at thousands of samples per second makes this issue go away. What I just said can be shown mathematically to be the case. To produce even 16-bit audio samples correctly requires jitter to be down in nanosecond range.

Jitter in audio systems is a manifestation of real systems versus what I call paper ones. On paper, a DAC performs as well as its silicon manufacturer specs in their publications. We see numbers these days that are superlative even in the case of DACs that cost pennies. What any DAC designer knows though is that as soon as you put said DAC silicon in a real device, there is potential for significant drop in performance. DAC silicon manufacturers test their components using clean and simple circuits. Nothing remotely as complex and noisy as aforementioned HDMI is used. It is left as an exercise for the designer to figure out how to deliver a clean clock (and reference voltage) to the DAC. Something that is missed unfortunately in all the AVRs I have tested. So on that front, my recommendation is to stay away from HDMI for 2-channel, critical music listening. For movies, it is a fine and mandatory choice to get multi-channel sound and such. For music, there are far better choices.

Toslink and S/PDIF

Many desktop PCs have with a digital audio interface in the form optical Toslink or coax S/PDIF digital audio output. These are the same familiar connections we have had in our traditional audio systems such as the output of a CD or DVD/Blu-ray player. Each has its own advantages. Toslink optical connection electrically isolates the server and the DAC and hence can keep the noise in the PC from polluting the sensitive DAC downstream. The down side is that optical connection tends to suffer from higher jitter due to low bandwidth of the cheap optical cable used for audio. In that case, the coax connection may be superior.

Without measurements it is hard to know whether you are better off with Toslink optical or S/PDIF. You could get lower jitter over S/PDIF only to have the electrical coupling of the server and DAC undo that and then some. My suggestion is to connect both links and perform a blind test. Close your eyes and randomize which one you are using. Listen for any differences. Use a soundtrack that has high frequency notes that decay into nothing and pay attention to how clean that decay is and whether it terminates abruptly. Also note whether the sound is brighter in one interface than the other. I have had better luck with coax but in at least one occasion, I found optical sounding better.

USB Audio

The (relatively) new kid on the block is USB. No doubt you are familiar with this ubiquitous computer interface for everything from keyboards to printers. Countless DACs are available that take USB as their input and operate in what is called Isochronous mode. Isochronous mode sends packets of data that are ~1024 bytes long. The receiver uses this block transfer cadence to generate the DAC clock. The problem with this approach is that it is "glitchy." At the end of each block transfer by definition there is a pause before the next block is transmitted. So if care is not taken, we get a timing glitch/jitter every 1 KHz which is smack in the middle of audio band and where the ear is nearly most sensitive.

Despite this issue, USB paves the road to finally fix the ills of our current consumer digital interfaces. Namely the fact that the DAC must stay "slave" to the source in deriving its timing. This as I explained, immediately sets us up for source induced jitter. A much better scheme is put to the DAC in charge of timing and make the source slave. In doing so, we can have a clean clock source for the DAC chip and have the server feed us data as we need and request it. USB if completely suited to this purpose as its primary function is to convey digital samples to the peripheral device. And that is all we want to use it for: to give us data but let us determine the timing of them. This mode of operation is called "asynchronous USB" or async USB for short.

Another benefit of async USB is that it can deal with the server falling behind the DAC. Audio is a "real time" operation. If you are playing 48 Khz PCM audio, there better be an audio sample every 1/48,000 of a second or else you will hear a glitch as the DAC would have nothing to play. A MAC or PC on the other hand is a non-real-time device. They are orders of magnitude faster than what we need them for audio, but they are not always there when needed. Activities on the computer may make it slow at a critical moment to feed data to a DAC resulting in the glitch. It is the case of being way too fast, and too slow at the same time. A technique called buffering allows us to take advantage of our "faster than real-time" performance to make up for slower than real-time response. Instead of reading data just when we need it, we can set aside a large pool of memory called a buffer, and put the audio samples there. Then we "drain" the data as we need it using our precise DAC clock. As long as the pre-fetch mechanism, the logic that fetches audio samples, is faster than us consuming the data, we can survive momentary periods where the server gets too busy to service the DAC. This mechanism is ideally suited to async USB because by definition we can read data at a different rate than we are consuming it in the DAC.

Note that asynchronous audio output has existed for a long time in professional audio cards that you plugged into a computer or used over Firewire or USB. What is new is being able to do the same thing but with standard in-box drivers in Windows or Mac. Both operating systems come with built-in drivers for USB class 2 that allow driver-less attachment of such devices. The Windows implementation stops at 24 bit/96 KHz so you usually get a driver from the manufacturer to support higher data rates. In some cases the implementation is proprietary, requiring a driver for the device to operate at all.

Asynchronous mode nicely solves our upstream jitter problem. It matters not how much jitter exists in the USB clock coming from the source PC. We have our own clock in the DAC or S/PDIF interface that is used for conversion of digital audio to analog. This method, on paper at least, is superior to HDMI or S/PDIF out of the server because these continue to keep the source/server as the master. I say on paper because the local clock still has to have good performance and external interference has to be kept away. It is possible to build an asynchronous USB interface that does no better than classic interconnects because of failings in this regard. Let's dig into this further.

Electrical Isolation

Asynchronous transfer mode of USB solves our clocking problem but we are still stuck with electrical coupling of the PC and our AV gear courtesy of the wired USB connection. You can have the world's best DAC clock but if you let noise bleed into its power supply or even couple into it over the air, you still wind up with noise and distortion on the output of your DAC. The first way to guard against this is to isolate the power, including the ground, of the server from the DAC. This is usually done with an IC such as the Analog Devices ADuM4160 whose block diagram is shown in Figure 2. Alas, that IC cannot support speeds above 24-bit/96 KHz. Higher speeds require a more discrete solution such as a generic digital isolator.

Figure 2: Electrical/Galvanic isolation using off-the-shelf USB isolator IC.
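One plausible way to see where the 24-bit/96 KHz ceiling comes from is to look at the data rates. The sketch below assumes the isolator operates at USB "full speed," where a single isochronous packet can carry at most 1023 bytes in each 1 ms frame. It also assumes stereo audio with 24-bit samples packed into 3 bytes. The helper names are hypothetical.

```python
FULL_SPEED_ISO_MAX_BYTES = 1023   # max isochronous payload per 1 ms frame


def bytes_per_ms(rate_hz, bits, channels=2):
    """Audio payload needed for each millisecond of playback."""
    return rate_hz * (bits // 8) * channels / 1000


def fits_full_speed(rate_hz, bits, channels=2):
    """True if one full-speed isochronous packet per frame can carry it."""
    return bytes_per_ms(rate_hz, bits, channels) <= FULL_SPEED_ISO_MAX_BYTES


for rate in (44100, 96000, 192000):
    need = bytes_per_ms(rate, 24)
    verdict = "fits" if fits_full_speed(rate, 24) else "does not fit"
    print(f"24-bit/{rate} Hz stereo needs {need:.1f} bytes per ms: {verdict}")
```

Under these assumptions 24-bit/96 KHz stereo needs 576 bytes per millisecond and fits comfortably, while 24-bit/192 KHz needs 1152 bytes and does not, so higher rates call for high-speed USB and an isolation scheme that can keep up with it.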

As I noted, it is important that the ground connection is also isolated as otherwise that line will not only allow interference to transmit to our sensitive audio circuits, but can also create ground loops. Ground loops are caused by differences in the voltage potential of the ground on the sending and receiving equipment. They almost always exist in such scenarios and the question only becomes how well the equipment deals with them and how bad the differential is. It is better not to create a ground loop in the first place than to try to diagnose and fix one. Galvanic isolation does that and should be on your list of “must haves” when buying USB audio solutions.

Flavors of Async USB

You have two choices here. You can get a new DAC or AVR with a built-in asynchronous USB interface or buy a “bridge” adapter that converts USB asynchronously to S/PDIF (and its balanced professional AES/EBU counterpart if you have that in your DAC). My preference is for the bridge as that gives you many more choices of AV products including the DAC/AVRs you already have. There are many such interfaces at all price points. In this article I will be talking about two of my favorites:

1. Berkeley Audio Design Alpha USB adapter. This is a very high performance bridge that takes USB input and produces either S/PDIF or AES/EBU. It has a built-in power supply that is a must for clean power as opposed to trying to use the USB power.

2. Audiophilleo USB to S/PDIF adapter. This is another well-designed product which is small enough to couple directly to the S/PDIF connector.

Measurements

Let’s put the theory to practice and see if we are able to improve the performance of our systems using this new technology. Figure 3 is the measurement of the Mark Levinson No 502 processor when being fed the “J-Test” signal (essentially a 12 KHz tone). Ideal performance would be a single tone/sharp spike at 12 KHz and nothing else. The server is my Sony laptop driving the processor either using its built-in HDMI output, or the Berkeley async USB adapter feeding the 502 over S/PDIF.

I have given substantial benefit of the doubt to HDMI as the Mark Levinson 502 processor produces superb performance with the spikes hovering at a very low level of -113 dBFs. No other HDMI device I tested could remotely approach this level of performance as you will see later. As good as this HDMI performance is though, the Berkeley S/PDIF connection bests it by taking distortions down by 13 dB (-127 dBFs vs -114 dBFs). Yes, we are talking about how many angels may be dancing on the head of a pin but I wanted to show that even in heroic implementations of HDMI, a better architecture in the form of async USB, and a great implementation of it, can provide superior performance.

Figure 3: Mark Levinson 502 Processor performance using HDMI as input versus S/PDIF driven by the Berkeley USB adapter.

Now let’s come down to earth and see what happens when we make the same comparison using a sampling of higher-end AVRs. First up is the Anthem AVM 50 processor as shown in Figure 4.

Figure 4: Anthem AVM 50 HDMI in red compared to Berkeley S/PDIF in green. Note the much improved level of distortion in the latter case.

An entirely different picture emerges with HDMI distortion spikes reaching up to -84 dBFs. Let's put that in context by mentioning that the signal to noise ratio of 16 bit audio is 96 dB. Inverted, the noise floor would be at -96 dBFs which means using HDMI on this AVR, we have introduced distortion products that rise well above our theoretical noise floor for 16 bit audio. Forget about 24 bit audio. We are having trouble, and serious trouble at that, reproducing 16 bit audio here.

Thankfully, using the Berkeley to drive the S/PDIF input on the exact same AVR with the exact same DAC results in a reduction of distortions by nearly 20 dB. Let me repeat: nothing was changed in this experiment other than replacing one digital interface, HDMI, with another, a USB to S/PDIF bridge. Yet we managed to reduce the analog distortion coming out of the DAC by a huge amount. Distortion products are down to a much more reasonable -103 dBFs, making the promise of producing clean 16 bit audio a realistic proposition (we can hear distortions through noise so we need better than -96 dBFs distortion performance). Next time someone says "bits are bits" and you can't change anything by modifying the digital feed to a DAC, show them this graph.
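For readers who want to check the arithmetic behind these numbers, the short sketch below uses the usual rule that each bit contributes about 6 dB, computed here as 20·log10(2^N), which is the approximation that gives the 96 dB figure quoted above. The function names are mine. The -84 and -103 dBFs inputs are the Anthem measurements discussed above.

```python
import math


def dynamic_range_db(bits):
    """Ratio of full scale to one quantization step, expressed in dB."""
    return 20 * math.log10(2 ** bits)


def db_to_amplitude_ratio(db):
    """Convert a dB difference into a linear amplitude ratio."""
    return 10 ** (db / 20)


hdmi_db, spdif_db = -84, -103          # worst-case spikes from the text
improvement_db = hdmi_db - spdif_db    # 19 dB, the "nearly 20 dB" above

print(f"16 bit range: {dynamic_range_db(16):.1f} dB")
print(f"24 bit range: {dynamic_range_db(24):.1f} dB")
print(f"HDMI spikes sit {hdmi_db + dynamic_range_db(16):.1f} dB above the 16 bit floor")
print(f"{improvement_db} dB is a {db_to_amplitude_ratio(improvement_db):.1f}x drop in amplitude")
```

Put another way, the bridge shrinks the distortion spikes to roughly one ninth of their HDMI amplitude, moving them from about 12 dB above the 16 bit floor to about 7 dB below it.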

To show this is not a unique example, let’s repeat the exercise with the Pioneer Elite SC-63 AVR, now in Figure 5. As with the Anthem, the HDMI interface is suffering from low frequency jitter which causes our main 12 KHz test tone to broaden its "skirt" on each side. We also have our usual set of "correlated" distortion spikes on both sides of our main test signal.

Switching to the Berkeley USB to S/PDIF bridge yet again shows a marked improvement by attenuating the low frequency jitter and reducing the amplitude of the higher frequency components. The reduction in correlated distortion spikes is about 7 dB which is not an insignificant amount.

Figure 5: Comparison of HDMI to S/PDIF on a Pioneer SC-63 AVR showing better performance yet again using USB to S/PDIF bridge.
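To see how a timing error turns into spikes on both sides of a test tone, here is a small simulation. It is a sketch under idealized assumptions: a 12 KHz tone whose sample instants wobble sinusoidally, with the 1 ns peak jitter and 1 KHz jitter frequency chosen purely for illustration and not derived from any of the measurements above. The prediction uses the standard small-angle approximation for phase modulation, in which each sideband sits at π·f·J relative to the tone.

```python
import cmath
import math


def tone_level_db(samples, freq_hz, fs_hz):
    """Level at freq_hz in dB relative to a full-scale sine (single-bin DFT)."""
    n = len(samples)
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * i / fs_hz)
              for i, x in enumerate(samples))
    return 20 * math.log10(2 * abs(acc) / n)


def jittered_tone(f0_hz, fs_hz, n, jitter_peak_s, jitter_hz):
    """Sine at f0 whose sample instants wobble by +/- jitter_peak_s."""
    out = []
    for i in range(n):
        t = i / fs_hz
        t += jitter_peak_s * math.sin(2 * math.pi * jitter_hz * t)
        out.append(math.sin(2 * math.pi * f0_hz * t))
    return out


FS, F0, FJ, J = 48000, 12000, 1000, 1e-9        # illustrative values only
signal = jittered_tone(F0, FS, 4800, J, FJ)     # 100 ms of audio

measured = tone_level_db(signal, F0 + FJ, FS)   # upper sideband at 13 KHz
predicted = 20 * math.log10(math.pi * F0 * J)   # small-angle estimate

print(f"sideband measured : {measured:.2f} dB relative to the tone")
print(f"sideband predicted: {predicted:.2f} dB relative to the tone")
```

The two numbers agree closely, and the sideband level scales directly with the jitter amplitude: halve the timing error and the spike drops by 6 dB. Real-world spikes can have other causes as well, so this only illustrates the mechanism and is not a way to back out jitter figures from the graphs.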

I won’t bore you with more measurements as the theme continues with all the other AVRs I have tested. Suffice it to say, unless someone backs their statement with objective measurements, you can safely assume that you can do better than HDMI with a well-implemented async USB interface such as the Berkeley and, while not shown, the Audiophilleo.

Quality Implementation

It is important not to assume that just because a device advertises asynchronous USB operation it produces the same results I have shown above. The implementation can create its own “local” noise and timing distortions. To show an example of differing performance, let’s look at Figure 6 which compares the performance of the Peachtree Decco DAC's internal asynchronous USB versus an external bridge. The internal input, shown in red, produces excellent performance at -116 dBFs distortion level. As good as that is, we are able to improve upon it by using the Audiophilleo USB to S/PDIF bridge, to the tune of a 13 dB reduction in noise+distortion products. Clearly the two async USB implementations are not the same.

Figure 6: Comparison of built-in asynchronous USB in the Peachtree Decco (in red) versus outboard Audiophilleo (in green).

For another comparison let's look at how the Berkeley compares to the Audiophilleo when driving the Mark Levinson 502 in Figure 7.

Figure 7: Comparison of Berkeley and Audiophilleo USB to S/PDIF showing the former to have improved performance (in green).

The correlated distortion spikes in red produced by the Audiophilleo essentially vanish when the Mark Levinson is driven by the Berkeley.

There is no free lunch here as the Audiophilleo is about one third the cost and physically about 1/10th the size of the Berkeley. The small size makes it very difficult to provide physical separation of the components. And the lower cost excludes using more exotic parts. So it is not surprising that there is some measured difference here.

To see what it takes to produce the superlative performance that the Berkeley manages, let’s look at what is inside it as shown in Picture 1. On the bottom right is the USB port. It goes into the ubiquitous XMOS USB interface IC (large square chip). This is the “dirty” side of the unit meaning it has high-speed digital signals that must be kept away from our audio signals as much as possible. Such noise can bleed not only through connected circuits but literally through air. Good design hygiene therefore calls for physical separation which we see here as I have indicated with the marker "Isolation." USB computer interface stays on the right whereas S/PDIF and AES/EBU clock and signal generation are to the left. This reduces the chances of the clock oscillators (the shiny metal boxes) becoming contaminated with the digital activity on the dirty (USB) side.

Picture 1: Main interface board in the Berkeley Alpha USB to S/PDIF and AES converter. Note the excellent isolation of USB digital interface on the right and S/PDIF and AES/EBU on the left to reduce crosstalk (contamination).

There is more to the performance of the Berkeley than just this physical separation that goes beyond the scope of this article. Suffice it to say, excellence in engineering pays off in better measured performance.

The Audiophilleo is also an excellent device but as you see in Picture 2, it is a very small device, not a whole lot bigger than an American quarter. There is no separation to speak of as we had with the Berkeley. So it is no wonder that we had some bleeding of noise from the USB side into the S/PDIF clock.

Picture 2: Audiophilleo USB to S/PDIF Converter. Note the diminutive size.

There are asynchronous USB adapters at all price points. Unfortunately, without measurements you cannot know how well they perform. Given that, look for “galvanic isolation” and a separate power supply in the device spec sheet as general clues to good performance, though they are no guarantee.

In summary, we now have a superb way to get audio out of a computer server into a DAC. By using a well-implemented async USB bridge we get all the benefits and conveniences of modern music systems, with superlative performance. It is not always the case that we can have our cake and eat it too, but such is the case here.

References

Digital Audio: The Possible and Impossible, Amir Majidimehr, Widescreen Review Magazine